Why Searching with AI Chat Interfaces Doesn’t Work: 7 Reasons

As someone who wears multiple hats—blogger, developer, and doctor—I spend a lot of time interacting with technology. And let me tell you, I’ve been fascinated by how AI chat interfaces have evolved over the years. From asking Siri about the weather to having deep conversations with ChatGPT or Bard, it feels like we’re living in a sci-fi movie sometimes.

But here’s the thing: while these tools are impressive, they aren’t perfect. In fact, relying on them for searches can often lead to frustration rather than clarity.

Recently, our AI club had an interesting discussion about this very topic. We all agreed that AI chatbots are powerful but come with limitations that make them unreliable as standalone search engines. So today, I want to share my thoughts (and some personal experiences) on why searching using AI chat interfaces doesn’t always work—and what you can do about it.

1. It Requires Prompt Refining – Not Everyone Is Good at This

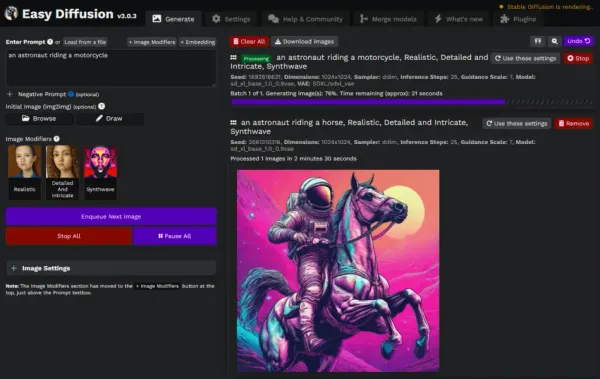

Let’s start with one of the biggest hurdles: getting the right answer depends heavily on how you ask your question. Unlike Google or Bing, where you type keywords and get results instantly, AI chatbots require more finesse. They need context, specificity, and sometimes even follow-up questions to deliver accurate answers.

For example, last week, I asked an AI chatbot, “What causes headaches?” The response was generic—things like dehydration, stress, and lack of sleep. Fair enough. But when I refined my prompt to, “What causes tension-type headaches specifically?” the answer became much more detailed and relevant. However, not everyone knows how to refine their prompts effectively. If you don’t, you might end up with vague or incomplete information.

This is where prompt engineering comes into play. People skilled at crafting precise questions tend to get better results from AI. Unfortunately, most users aren’t trained in this art, which leads to subpar outcomes.
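One way to make prompt refinement less of an art is to template it. The Python sketch below is a hypothetical helper (`PromptSpec` and `build_prompt` are names I made up for illustration, not part of any chatbot’s API). It assembles context, the question, and explicit requirements into a single prompt, using the headache example from this section.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a refined prompt."""
    question: str
    context: str = ""
    requirements: list[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Join context, question, and requirements into one prompt string."""
    lines = []
    if spec.context:
        lines.append(f"Context: {spec.context}")
    lines.append(f"Question: {spec.question}")
    if spec.requirements:
        lines.append("Requirements:")
        lines.extend(f"- {item}" for item in spec.requirements)
    return "\n".join(lines)


# The vague prompt: just a bare question.
vague = build_prompt(PromptSpec(question="What causes headaches?"))

# The refined prompt: a narrower question plus context and explicit requirements.
refined = build_prompt(PromptSpec(
    question="What causes tension-type headaches specifically?",
    context="I'm a doctor looking for common triggers, not emergency warning signs.",
    requirements=["List the most common triggers", "Keep it under 200 words"],
))

print(vague)
print("---")
print(refined)
```

The resulting text can be pasted into any chat interface. The point is simply that the details you would otherwise add through several follow-up messages are written down up front.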

2. AI Can Be Biased – Garbage In, Garbage Out

Here’s another hard truth: AI systems are only as good as the data they’re trained on. If the training data contains biases, inaccuracies, or outdated information, the AI will reflect those flaws. For instance, during one of our AI club sessions, we tested several bots with historical queries. One member asked, “Who were the greatest inventors of all time?” Every bot listed mostly male figures, completely ignoring women like Ada Lovelace or Hedy Lamarr.

It wasn’t because these women weren’t important—it’s just that the datasets used to train the models didn’t emphasize their contributions equally. This bias isn’t intentional, but it’s real, and it affects the quality of the answers you receive.

Think of it as an opinionated search engine: it hands you the one result it thinks is right for you!

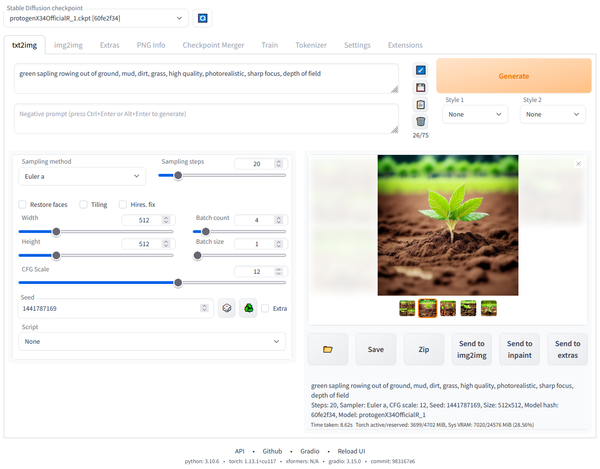
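To make that analogy concrete, here is a toy Python model. The relevance scores are invented for illustration, and neither function reflects how any real search engine or chatbot actually ranks content. A search engine shows you a ranked list you can compare, while a chat answer (in this simplified picture) collapses that list into a single reply, so whatever skew sits in the scores passes straight through to you.

```python
def ranked_results(scores: dict[str, float], k: int = 3) -> list[str]:
    """Search-engine style: return the top-k candidates, best first."""
    return sorted(scores, key=lambda name: scores[name], reverse=True)[:k]


def single_answer(scores: dict[str, float]) -> str:
    """Chatbot style (simplified): return only the top candidate."""
    return max(scores, key=lambda name: scores[name])


# Made-up relevance scores for four hypothetical sources.
scores = {"source A": 0.91, "source B": 0.88, "source C": 0.87, "source D": 0.40}

print(ranked_results(scores))  # ['source A', 'source B', 'source C']
print(single_answer(scores))   # source A
```

Notice how close sources B and C are to A. The ranked list lets you see that; the single answer hides it.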

3. It’s Only as Smart as Its Training Data

Speaking of training data, AI chatbots operate within the boundaries of what they’ve learned. Every model has a knowledge cutoff date, and those dates vary from model to model. For example, some GPT-4 versions have a cutoff in late 2023, so anything that happened after that point won’t show up in their answers unless the chatbot can also search the web. This limitation becomes glaringly obvious when you ask about recent events or cutting-edge research.
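A practical rule of thumb follows from this: if what you’re asking about happened after the model’s cutoff, go to a search engine. The Python sketch below encodes that rule. The cutoff date is a placeholder standing in for the “late 2023” example; check your model’s documentation for its real value, and keep in mind that chatbots with web access can partly work around the limit.

```python
from datetime import date

# Placeholder cutoff for illustration only; real cutoffs differ by model and version.
MODEL_CUTOFF = date(2023, 12, 31)


def pick_source(event_date: date, cutoff: date = MODEL_CUTOFF) -> str:
    """Suggest where to look, based on whether the event postdates the training data."""
    if event_date > cutoff:
        return "search engine"  # the model cannot have seen this during training
    return "chatbot (then verify)"  # covered by training data, but still worth checking


print(pick_source(date(2023, 6, 1)))   # chatbot (then verify)
print(pick_source(date(2024, 3, 15)))  # search engine
```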

4. It Often Gives Vague or Overgeneralized Answers

Another issue I’ve encountered repeatedly is that AI tends to generalize. Take this scenario: A patient once asked me if they should worry about chest pain after eating spicy food. Curious, I decided to see what an AI would say. The response? “Chest pain could indicate heartburn, acid reflux, or something serious like a heart attack. Consult a doctor immediately.” While technically correct, this advice was so broad that it wasn’t helpful.

In contrast, a quick Google or Bing search led me to articles discussing common triggers for heartburn versus symptoms of cardiac issues. Combining both approaches gave me the nuanced understanding I needed. AI alone couldn’t provide that depth.

6. Emotional Nuance Gets Lost

As a doctor, empathy is crucial in my interactions with patients. Yet, AI struggles to replicate human emotional intelligence. During a mock consultation exercise in our AI club, we tested whether a chatbot could comfort someone worried about a potential diagnosis.

The response was clinical and impersonal: “Based on your symptoms, it’s unlikely to be serious. However, consult a healthcare professional for confirmation.”

While logically sound, the tone lacked warmth and reassurance. Humans crave connection, especially during stressful situations. AI simply can’t replicate that yet.

And the same goes for diagnosis, a topic I’ve covered in several other articles.

7. You Still Need Traditional Search Engines

Finally, perhaps the most important takeaway: AI chatbots shouldn’t replace traditional search engines—they should complement them. Think of AI as a starting point, not the final destination. After gathering initial insights from a chatbot, I almost always turn to Google, Bing, or specialized databases to dig deeper.

For instance, when writing blog posts, I use AI to brainstorm ideas or outline sections. But for factual accuracy and comprehensive research, I rely on trusted websites and academic papers. This hybrid approach saves time without compromising quality.

I still rely on Google for that deeper digging, even though it has messed up its search homepage.

My Personal Experience with AI vs. Traditional Search

To illustrate this further, let me share a recent experience. I was working on a blog post about renewable energy trends. First, I asked an AI chatbot for key points to include. It gave me a decent overview—topics like solar power advancements, wind turbine innovations, and government policies. Encouraged, I dove deeper into each area using Google and found specific case studies, statistics, and expert opinions that added richness to my article.

Without combining both methods, my post wouldn’t have been nearly as informative. AI provided structure; traditional search filled in the details.
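For the developers reading, that workflow can be written down as a tiny pipeline. In this Python sketch, `outline_fn` and `search_fn` are hypothetical placeholders: you would plug in whatever chatbot and search tool you actually use. The stand-in functions just return fixed values so the example runs on its own, and the outline mirrors the three topics from my renewable energy post.

```python
from typing import Callable


def hybrid_research(
    topic: str,
    outline_fn: Callable[[str], list[str]],
    search_fn: Callable[[str], list[str]],
) -> dict[str, list[str]]:
    """Use a chatbot for structure, then a search tool for the details of each section."""
    outline = outline_fn(topic)
    return {section: search_fn(f"{topic} {section}") for section in outline}


# Hypothetical stand-ins for a real chatbot and a real search API.
def fake_chatbot_outline(topic: str) -> list[str]:
    # A real chatbot would generate this outline from the topic.
    return ["solar power advancements", "wind turbine innovations", "government policies"]


def fake_web_search(query: str) -> list[str]:
    # A real search tool would return links, case studies, or statistics.
    return [f"result for '{query}'"]


notes = hybrid_research("renewable energy trends", fake_chatbot_outline, fake_web_search)
for section, sources in notes.items():
    print(section, "->", sources)
```

Keeping the two steps as separate functions makes it easy to swap either tool without touching the workflow itself.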

Final Thoughts

AI chat interfaces are incredible tools, but they’re not magic wands. They excel at certain tasks—like generating creative content, offering quick summaries, or helping refine complex ideas—but fall short in others. To truly harness their potential, you need to understand their strengths and weaknesses.

So next time you find yourself frustrated with an AI-generated answer, remember: it’s not the tool’s fault—it’s how we use it. Combine AI with traditional search engines, refine your prompts, and always verify critical information. By doing so, you’ll unlock the best of both worlds.

And hey, if you ever feel stuck, join us at the AI club! We love geeking out over these topics and sharing tips to make tech work for us—not the other way around. Until then, happy searching!