AI in Healthcare: A Doctor’s Perspective from Personal Experience


AI is making its way into every industry, and healthcare is no exception. As a doctor, I’ve seen the promise of AI—faster diagnoses, streamlined processes, and fewer human errors. But I’ve also seen the pitfalls.

AI doesn’t always get it right, and blind faith in its conclusions can be dangerous.

I’ve encountered more than one situation where AI-driven misdiagnoses collided with traditional practices, leading to potential harm.

Here are two real-world cases that made me question just how much we should rely on AI in healthcare.

Case 1: Jaundice, AI, and a Misguided Diagnosis

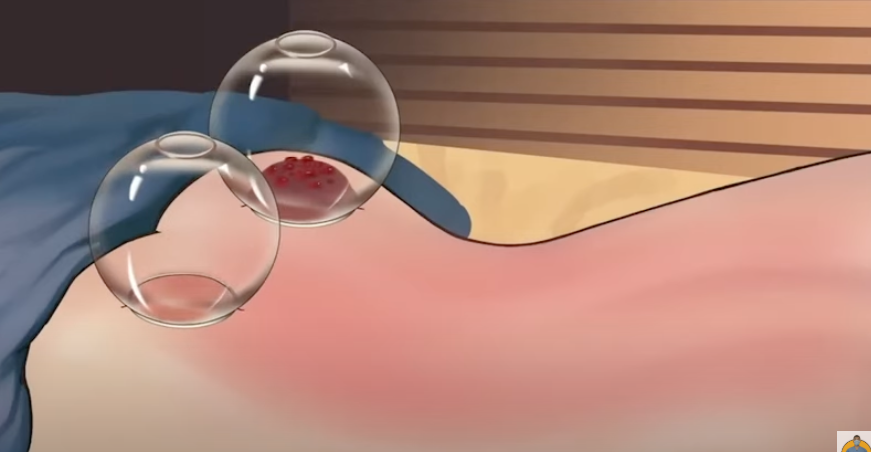

A few days ago, a friend who had recently moved back to his home country in Asia from London reached out with a troubling question. His teenage son had jaundice, and he wanted to know if wet cupping (hijama) could help. Then he mentioned liver cirrhosis.

Red flags went up immediately.

Through a series of questions, I pieced together the story. He’d gotten the diagnosis from an AI tool, and even his doctor had backed it up. My friend is a prompt engineer, so using AI tools is second nature to him.

But diagnosing liver cirrhosis in a teenager based on jaundice alone didn’t add up.

Instead of accepting the AI’s conclusion, I urged him to get his son properly tested. I suspected something more common, like hepatitis A. Sure enough, the test results confirmed it: his son had hepatitis A, not liver cirrhosis.

This incident reinforced a crucial truth: while AI can be helpful, it lacks the nuance and clinical context that human judgment provides.

Case 2: Anemia, AI, and the Migraine Misdiagnosis

Another case involved a patient who was suffering from fatigue and headaches. She also had a noticeable forward head posture, a common problem linked to slouching or prolonged screen time.

The likely diagnosis? Anemia, which fit her fatigue and headaches well.

But she had a different idea. She’d fed her symptoms into an AI health app, which confidently told her she had migraines.

To make matters worse, her cultural tradition recommended wet cupping (hijama) for migraines, and she was ready to go ahead with the procedure.

I stepped in. “Before jumping to conclusions, let’s confirm this with a blood test.” The results revealed severe iron-deficiency anemia.

Wet cupping would not only have failed to help but could have worsened her condition, since the blood it draws would further lower her already depleted hemoglobin and iron levels.

The Dangers of Over-Reliance on AI

Both cases illustrate a growing problem: putting too much faith in AI-powered diagnostics. AI is a tool—a powerful one—but it’s not infallible. It lacks context, experience, and the human touch.

Even people who understand AI’s inner workings can fall into the trap of treating its output as gospel.

Healthcare is complex. Symptoms overlap. One missed detail can lead to a completely different diagnosis. AI doesn’t know the difference between a teenager with jaundice and a middle-aged alcoholic with liver cirrhosis—it just sees patterns.

Traditional Practices and AI: A Dangerous Combo

In many cultures, traditional medical practices like wet cupping are deeply ingrained. When AI misdiagnosis intersects with these traditions, the results can be dangerous.

Wet cupping might help in specific situations, but it’s not a cure-all—and it certainly isn’t a substitute for proper medical treatment.

AI doesn’t understand these nuances. It doesn’t know when wet cupping is appropriate and when it could do more harm than good. That’s where human judgment comes in.

The Bottom Line: AI as an Assistant, Not a Replacement

AI can assist, but it shouldn’t replace human oversight—especially in healthcare. If you get an AI-generated diagnosis, don’t stop there. Consult a qualified doctor.

Get a second opinion. And if traditional practices are part of your healthcare routine, make sure they’re backed by sound medical advice.

AI is not your doctor. It shouldn’t be the final word on your health.

Stay informed, stay cautious, and trust the professionals.