Aim: A Free, Open-source Experiment Tracker for Data Scientists


Aim, developed by AimStack, is an open-source, supercharged experiment tracker for recording and exploring AI experiments. It helps machine learning engineers and data scientists track their experiments' performance and compare metrics across different runs.

With Aim, users can visualize training progress, compare experiments, and collaborate more effectively.

It supports integrations with popular frameworks like PyTorch, TensorFlow, and Keras, making it a versatile tool for AI development.

Aim offers a flexible solution for AI experiment management that serves individual researchers as well as teams and organizations involved in AI research and development.
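The core tracking workflow is short: open a run, attach its hyperparameters, and record a metric value at every training step. The following is a minimal sketch of that pattern in plain Python, so it runs without Aim installed. `InMemoryRun` and `train` are hypothetical names. The `run["hparams"] = ...` assignment and the `track(value, name=..., step=..., context=...)` call shape are modeled on Aim's `Run` object, but the class is not Aim's implementation, and the loss curve is synthetic.

```python
from collections import defaultdict
from typing import Any, Dict, List, Optional, Tuple


class InMemoryRun:
    """Hypothetical in-memory stand-in for Aim's Run object.

    With Aim installed, the real object would be created with
    `from aim import Run` and `run = Run(experiment="demo")`.
    """

    def __init__(self, experiment: str) -> None:
        self.experiment = experiment
        self.params: Dict[str, Any] = {}
        # (metric name, sorted context items) -> list of (step, value) pairs
        self.series: Dict[tuple, List[Tuple[int, float]]] = defaultdict(list)

    def __setitem__(self, key: str, value: Any) -> None:
        # Same shape as `run["hparams"] = {...}` in Aim.
        self.params[key] = value

    def track(self, value: float, name: str, step: int,
              context: Optional[Dict[str, str]] = None) -> None:
        # Same call shape as `run.track(value, name=..., step=..., context=...)` in Aim.
        key = (name, tuple(sorted((context or {}).items())))
        self.series[key].append((step, float(value)))


def train(run: InMemoryRun, lr: float, steps: int) -> None:
    """Hypothetical training loop that logs hyperparameters and a per-step loss."""
    run["hparams"] = {"learning_rate": lr, "steps": steps}
    loss = 1.0
    for step in range(steps):
        loss *= 1.0 - lr  # synthetic decay standing in for a real optimizer step
        run.track(loss, name="loss", step=step, context={"subset": "train"})


run = InMemoryRun(experiment="demo")
train(run, lr=0.1, steps=5)
print(run.params["hparams"])
print(run.series[("loss", (("subset", "train"),))])
```

With Aim installed, you can swap `InMemoryRun(experiment="demo")` for Aim's `Run(experiment="demo")`, and the training loop should stay unchanged. Check the Aim SDK reference for the full `Run` signature.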

Features

- Compare runs easily to build models faster

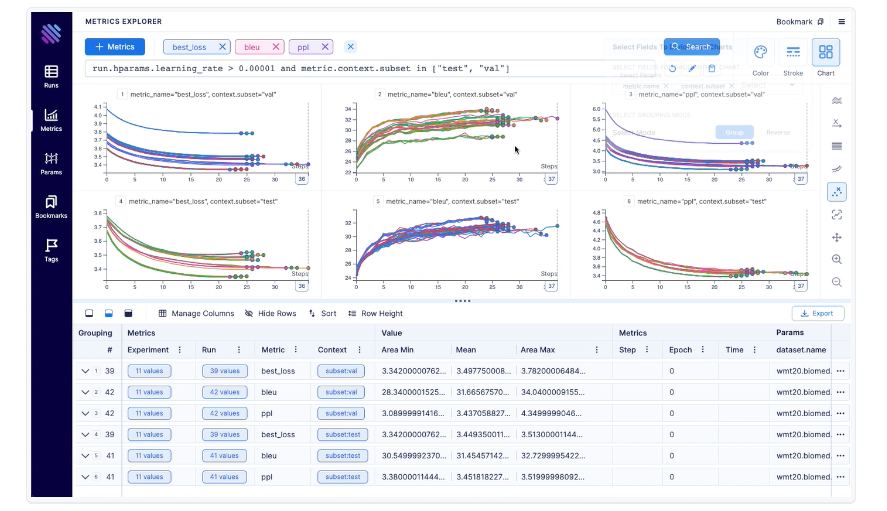

- Experiment Tracking: Track and visualize metrics from your AI experiments, such as loss, accuracy, and other custom metrics.

- Comparison Across Runs: Easily compare multiple experiments side by side, allowing for better insights and decision-making.

- Framework Integration: Seamlessly integrates with popular machine learning frameworks, including PyTorch, TensorFlow, and Keras.

- Scalability: Efficiently handles large-scale experiments with thousands of runs, ensuring smooth performance.

- Deep dive into details of each run for easy debugging

- Collaborative Features: Share and collaborate on experiments with your team, enhancing productivity and knowledge sharing.

- Open Source: Aim is fully open-source, allowing for customization and community-driven improvements.

- Interactive UI: A user-friendly interface that provides intuitive visualizations and easy navigation through your experiments.

- Logging and Analysis: Detailed logging of experiment data, enabling in-depth analysis and debugging.

- Custom Metrics: Track custom metrics tailored to the needs of a specific project.

- Integration with CI/CD: Can be incorporated into continuous integration and deployment pipelines to automate experiment tracking.
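Comparing runs and wiring tracking into CI/CD both come down to reducing each run's metric history to a few numbers and checking those numbers against each other. The sketch below assumes loss histories have already been exported from the tracker as plain lists; the run names and values are made up. It renders a side-by-side table and returns a CI-style exit code that fails when a candidate run's final loss exceeds a baseline's by more than a relative tolerance. `summarize`, `comparison_table`, and `regression_gate` are hypothetical helpers, not Aim APIs, and the gate assumes lower is better.

```python
from typing import Dict, List


def summarize(history: List[float]) -> Dict[str, float]:
    """Reduce one run's loss history to the values usually compared side by side."""
    return {"last": history[-1], "best": min(history)}


def comparison_table(runs: Dict[str, List[float]]) -> str:
    """Render a plain-text, side-by-side comparison of several runs."""
    rows = [f"{'run':<12}{'last':>10}{'best':>10}{'steps':>8}"]
    for name, history in sorted(runs.items()):
        s = summarize(history)
        rows.append(f"{name:<12}{s['last']:>10.4f}{s['best']:>10.4f}{len(history):>8d}")
    return "\n".join(rows)


def regression_gate(baseline: List[float], candidate: List[float],
                    tolerance: float = 0.01) -> int:
    """Return a CI exit code: 0 if the candidate's final loss is at most
    baseline * (1 + tolerance), otherwise 1. Assumes lower is better."""
    allowed = summarize(baseline)["last"] * (1.0 + tolerance)
    return 0 if summarize(candidate)["last"] <= allowed else 1


# Synthetic loss histories standing in for metrics exported from a tracker.
runs = {
    "baseline": [0.90, 0.62, 0.48, 0.41],
    "candidate": [0.88, 0.60, 0.45, 0.39],
}
print(comparison_table(runs))
exit_code = regression_gate(runs["baseline"], runs["candidate"])
print("exit code:", exit_code)
```

In a pipeline step, the script would end with `sys.exit(exit_code)` so that a regression fails the job. The 1% default tolerance is an arbitrary illustration and should be tuned to the run-to-run noise of the metric.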

Applications

- AI Experiment Tracking: AimStack is designed for tracking AI experiments, enabling data scientists and machine learning engineers to monitor metrics such as loss, accuracy, and custom metrics in real time. This is essential for iterative model development and hyperparameter tuning.

- Model Comparison: AimStack allows users to compare different models and their performance metrics side by side. This is crucial for selecting the best-performing model for deployment, especially in research and production environments.

- Collaboration and Sharing: Teams working on AI projects can use AimStack to share their experiments and findings with each other, enhancing collaboration. This application is particularly valuable for large teams spread across different locations.

- Reproducibility: By logging each experiment's configuration, AimStack makes experiments easier to reproduce, which is critical in research and development for verifying and validating results.

- Integration with CI/CD Pipelines: AimStack can be integrated into continuous integration and continuous deployment (CI/CD) pipelines to automate the tracking of experiments, ensuring that every change in the model or data is properly logged and evaluated.

- Hyperparameter Optimization: AimStack supports the tracking of hyperparameter optimization processes, helping users to efficiently explore the hyperparameter space and identify the best configurations.
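Hyperparameter optimization, model comparison, and reproducibility fit together in one loop. Each configuration becomes a run whose full config, including the random seed, is logged next to its result. The best run is then picked by its metric, and rerunning that logged config should give the same number. The sketch below uses an exhaustive grid search and a synthetic `fake_train` objective in place of real training. `RunRecord`, `fake_train`, and `grid_search` are hypothetical names. In an Aim-based setup, each configuration would typically get its own `Run`, with the config stored under a key such as `run["hparams"]`.

```python
import itertools
import random
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class RunRecord:
    """Hypothetical record of one run: its full config plus tracked results."""
    config: Dict[str, Any]
    metrics: Dict[str, float] = field(default_factory=dict)


def fake_train(config: Dict[str, Any]) -> float:
    """Synthetic stand-in for training. The returned validation loss depends only
    on the config, and its noise term is fixed by config["seed"]."""
    rng = random.Random(config["seed"])
    noise = rng.uniform(0.0, 0.01)
    # Made-up objective: lr = 0.01 is optimal and larger batches help slightly.
    return abs(config["lr"] - 0.01) * 10 + 1.0 / config["batch_size"] + noise


def grid_search(grid: Dict[str, List[Any]], seed: int = 0) -> List[RunRecord]:
    """Evaluate every combination in `grid`, logging the seed with each config."""
    keys = sorted(grid)
    records = []
    for values in itertools.product(*(grid[k] for k in keys)):
        config = dict(zip(keys, values), seed=seed)
        record = RunRecord(config=config)
        record.metrics["val_loss"] = fake_train(config)
        records.append(record)
    return records


records = grid_search({"lr": [0.1, 0.01, 0.001], "batch_size": [32, 64]})
best = min(records, key=lambda r: r.metrics["val_loss"])
print("best config:", best.config)
# Re-running the logged config reproduces the result because the seed was logged too.
print("reproducible:", fake_train(best.config) == best.metrics["val_loss"])
```

Grid search is used here only because it is the simplest search to read. Random search or a dedicated optimizer would record runs the same way. Reproducing a metric exactly also depends on training being deterministic for a given seed, which real GPU training does not always guarantee.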

Use Cases

- Academic Research: Universities and research institutions can use AimStack to manage and track experiments across multiple research projects, ensuring transparency and reproducibility in AI research.

- Enterprise AI Development: Companies developing AI solutions can integrate AimStack into their workflow to monitor model performance, optimize training processes, and ensure that the best models are selected for production.

- Data Science Teams: AimStack is ideal for data science teams who need to track and compare the performance of various machine learning models, facilitating collaborative work and decision-making.

- Model Evaluation in Healthcare: In healthcare, where model accuracy is critical, AimStack can be used to meticulously track the performance of predictive models, ensuring they meet the necessary standards before deployment in clinical settings.

- Financial Sector: Financial institutions developing AI models for risk assessment, fraud detection, or algorithmic trading can use AimStack to track model performance and make informed decisions based on detailed experiment comparisons.

- Autonomous Systems Development: Companies working on autonomous vehicles or robotics can utilize AimStack to track the performance of their AI models over time, ensuring that improvements are consistent and verifiable.

Integrations

- TensorFlow

- Plotly

- Dash

- MLflow

- Jupyter
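Framework integrations of this kind usually hook into the training loop through callbacks, so the user does not need to add manual `track` calls. The sketch below shows that adapter pattern with hypothetical names (`RecordingTracker`, `EpochEndForwarder`). It exposes an `on_epoch_end(epoch, logs)` hook, the same hook name Keras callbacks use, and forwards every numeric entry of `logs` to a tracker, tagging `val_`-prefixed keys with a validation context. This illustrates the pattern only and is not Aim's integration code. Aim ships its own ready-made callbacks, and its documentation lists the exact module for each framework.

```python
from typing import Any, Dict, List, Optional, Tuple


class RecordingTracker:
    """Hypothetical tracker exposing a track(value, name, step, context) method."""

    def __init__(self) -> None:
        self.calls: List[Tuple[str, float, int, Dict[str, str]]] = []

    def track(self, value: float, name: str, step: int,
              context: Optional[Dict[str, str]] = None) -> None:
        self.calls.append((name, float(value), step, context or {}))


class EpochEndForwarder:
    """Callback-style adapter: the training framework calls on_epoch_end(epoch, logs)
    and every numeric entry in `logs` is forwarded to the tracker."""

    def __init__(self, tracker: RecordingTracker) -> None:
        self.tracker = tracker

    def on_epoch_end(self, epoch: int, logs: Optional[Dict[str, Any]] = None) -> None:
        for key, value in (logs or {}).items():
            if not isinstance(value, (int, float)):
                continue  # skip non-scalar entries
            if key.startswith("val_"):
                name, subset = key[len("val_"):], "val"
            else:
                name, subset = key, "train"
            self.tracker.track(value, name=name, step=epoch, context={"subset": subset})


tracker = RecordingTracker()
callback = EpochEndForwarder(tracker)
# Simulate what a framework's fit loop would pass in after each epoch.
for epoch, logs in enumerate([{"loss": 0.7, "val_loss": 0.8}, {"loss": 0.5, "val_loss": 0.6}]):
    callback.on_epoch_end(epoch, logs)
print(len(tracker.calls), "values tracked")
```

`val_loss` is mapped to the metric name `loss` with a `{"subset": "val"}` context, rather than given a separate metric name. This lets a UI overlay the train and validation curves of the same metric, which is the kind of grouping Aim's `context` argument is meant for.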

License

Apache-2.0 License