Introducing AnythingLLM: Turn any Static Docs into a Dynamic AI, Start Talking with your Docs

The all-in-one Desktop & Docker AI application with full RAG and AI Agent capabilities.

Have you ever wished to converse with your documents? Or perhaps you've dreamt of wielding the power of AI at your fingertips, with no frustrating setups? Well, dream no more because AnythingLLM is here to make your tech fantasies come true!

What is LLM?

LLM stands for Large Language Model. LLMs are the heart and soul of applications like AnythingLLM: they are the engines that give machines the ability to understand and generate human-like text, and they power the AI behind many modern digital assistants and chatbots.

ChatGPT is a well-known chat application built on an LLM and designed to mimic human conversation. The same kind of model is what enables AnythingLLM to let you chat intelligently with any document you provide it.

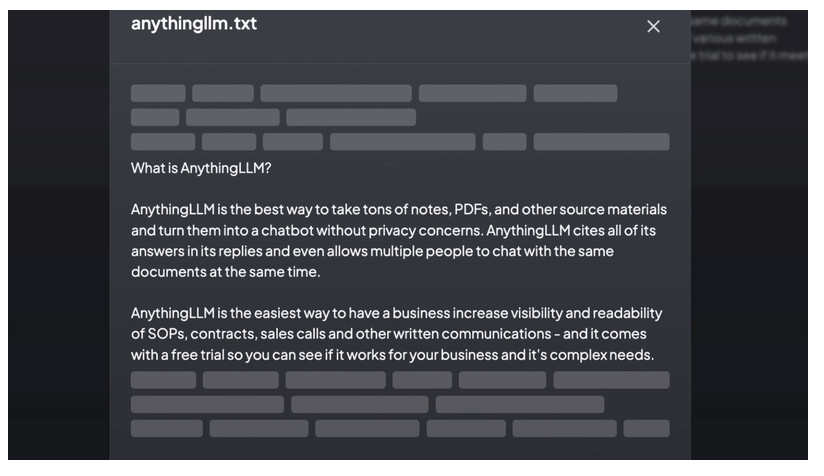

What is AnythingLLM?

AnythingLLM is an innovative, hyper-configurable, multi-user application that turns any document, resource, or piece of content into contextual fodder for AI agents. With this incredible tool, you get to pick and choose which LLM or Vector Database you want to use, giving you unparalleled control over your AI experience.

But what sets AnythingLLM apart is its unique workspace feature. Think of a workspace as a thread, but with the added bonus of document containerization. Workspaces can share documents, but they do not talk to each other, which keeps the context of each workspace clean and uncluttered.
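To make the workspace idea concrete, here is a minimal sketch in Python. It is not AnythingLLM's actual data model: the `Workspace` class, its fields, and the `store` dictionary are all hypothetical names chosen for illustration. The point it shows is that two workspaces can reference the same document while keeping separate chat histories.

```python
from dataclasses import dataclass, field


@dataclass
class Workspace:
    """Hypothetical model of a workspace: a chat thread plus its own document set."""
    name: str
    document_ids: set[str] = field(default_factory=set)
    history: list[str] = field(default_factory=list)

    def attach(self, doc_id: str) -> None:
        # Attaching stores a reference; the document itself lives in a shared store.
        self.document_ids.add(doc_id)

    def context(self, store: dict[str, str]) -> list[str]:
        # Only documents attached to this workspace are visible to its chats.
        return [store[d] for d in sorted(self.document_ids)]


# Shared document store (assumed): one copy of each document, referenced by id.
store = {"handbook": "Employee handbook text", "api": "API reference text"}

support = Workspace("support")
engineering = Workspace("engineering")

support.attach("handbook")
engineering.attach("handbook")  # the same document, shared by two workspaces
engineering.attach("api")

support.history.append("How many vacation days do I get?")

print(support.context(store))      # only the handbook
print(engineering.context(store))  # API reference and handbook
print(engineering.history)         # empty: history does not leak across workspaces
```

Keeping references rather than copies is one plausible way to let workspaces share a document without sharing context; the real application may organize this differently.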

In a nutshell, AnythingLLM is a game-changer. It's not just an AI application – it's your ticket to a seamless, intelligent, and interactive AI experience. Explore the world of AI like never before with AnythingLLM!

Open-source & Self-hosted

This application is designed as an open-source program that you can self-host either on your local machine or on a server. The front-end, built with ViteJS and React, allows you to easily create and manage all content that the LLM can utilize. The server, powered by NodeJS Express, handles all interactions, including vector database management and LLM calls.

For building from source, Docker instructions and build processes are provided. There is also a collector, a NodeJS Express server, that processes and parses documents from the user interface.

Multiple Deployment Options

You can easily deploy the app on AWS, DigitalOcean, Render.com, GCP, or even any machine using Docker.

Features

- Open-source and self-hosted

- Totally private

- Multi-user instance support and permissioning

- Agents inside your workspace (browse the web, run code, etc)

- Custom Embeddable Chat widget for your website

- Multiple document type support (PDF, TXT, DOCX, etc)

- Manage documents in your vector database from a simple UI

- Two chat modes: conversation and query. Conversation retains previous questions and amendments. Query is simple QA against your documents.

- In-chat citations

- 100% Cloud deployment ready.

- Highly customizable

- "Bring your own LLM" model.

- Custom LLM Models

- Extremely efficient cost-saving measures for managing very large documents. You'll never pay to embed a massive document or transcript more than once. 90% more cost effective than other document chatbot solutions.

- Full Developer API for custom integrations!
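Two of the features above are easier to picture with a little code: the "never pay to embed a document more than once" promise and the two chat modes. The Python sketch below is an illustration under stated assumptions, not AnythingLLM's implementation. `EmbeddingCache`, `build_messages`, and `fake_embed` are hypothetical names; the cache is keyed by a SHA-256 hash of the document text, and the message format follows the common role/content convention.

```python
import hashlib
from typing import Callable


class EmbeddingCache:
    """Embed each distinct document text once; reuse the stored vector afterwards."""

    def __init__(self, embed: Callable[[str], list[float]]) -> None:
        self._embed = embed  # the (possibly paid) embedding call
        self._vectors: dict[str, list[float]] = {}
        self.calls = 0       # how many times the embedder actually ran

    def get(self, text: str) -> list[float]:
        key = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if key not in self._vectors:
            self.calls += 1
            self._vectors[key] = self._embed(text)
        return self._vectors[key]


def build_messages(mode: str, history: list[dict], question: str,
                   snippets: list[str]) -> list[dict]:
    """Assemble the chat messages for one turn in 'conversation' or 'query' mode."""
    if mode not in ("conversation", "query"):
        raise ValueError(f"unknown chat mode: {mode}")
    context = "\n".join(snippets)
    messages = [{"role": "system", "content": f"Answer using this context:\n{context}"}]
    if mode == "conversation":
        messages.extend(history)  # earlier questions and answers are kept
    messages.append({"role": "user", "content": question})
    return messages


def fake_embed(text: str) -> list[float]:
    # Stand-in for a real embedding model, used only to make the sketch runnable.
    return [float(len(text)), float(sum(map(ord, text)) % 97)]


cache = EmbeddingCache(fake_embed)
transcript = "a very long transcript " * 1000
cache.get(transcript)
cache.get(transcript)  # second request is served from the cache
print(cache.calls)     # 1

history = [
    {"role": "user", "content": "What is the refund window?"},
    {"role": "assistant", "content": "30 days."},
]
snippets = ["Refunds are accepted within 30 days."]
question = "Does that include sale items?"
print(len(build_messages("conversation", history, question, snippets)))  # 4
print(len(build_messages("query", history, question, snippets)))         # 2
```

In conversation mode the earlier turns travel with every request, so follow-up questions make sense; in query mode each question stands alone against the retrieved snippets.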

Supported LLMs

- Any open-source llama.cpp compatible model

- OpenAI

- Azure OpenAI

- Anthropic

- Google Gemini Pro

- Hugging Face (chat models)

- Ollama (chat models)

- LM Studio (all models)

- LocalAI (all models)

- Together AI (chat models)

- Perplexity (chat models)

- OpenRouter (chat models)

- Mistral

- Groq

Supported Embedding Models

Supported Transcription models:

- AnythingLLM Built-in (default)

- OpenAI

Supported Vector Databases:

Set up the development environment

First, clone the GitHub repo and make sure Node.js is installed on your machine. Then set up your NPM packages, configure your .env files, and run the app.

- Run yarn setup to fill in the required .env files you'll need in each of the application sections (from root of repo).

- Go fill those out before proceeding. Ensure server/.env.development is filled or else things won't work right.

- Run yarn dev:server to boot the server locally (from root of repo).

- Run yarn dev:frontend to boot the frontend locally (from root of repo).

- Run yarn dev:collector to then run the document collector (from root of repo).
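Since the setup notes stress that server/.env.development must be filled in, a quick sanity check before booting the server can save some head-scratching. The Python helper below is a small sketch, not part of AnythingLLM: `find_empty_env_keys` is a hypothetical function, and the keys in `sample` are made up for the example. It assumes simple KEY=value lines.

```python
def find_empty_env_keys(env_text: str) -> list[str]:
    """Return the names of keys in dotenv-style text that have no value."""
    empty = []
    for raw_line in env_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and lines without an assignment
        key, _, value = line.partition("=")
        if not value.strip().strip("'\""):
            empty.append(key.strip())
    return empty


# Hypothetical file contents; the real keys are whatever your .env file lists.
sample = """
# example only
EXAMPLE_PORT=3001
EXAMPLE_API_KEY=
EXAMPLE_SECRET=""
"""

print(find_empty_env_keys(sample))  # ['EXAMPLE_API_KEY', 'EXAMPLE_SECRET']
```

To check a real file, read it first, for example with pathlib's Path("server/.env.development").read_text(), and pass the result to the function.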

License

The app is released under the MIT license.