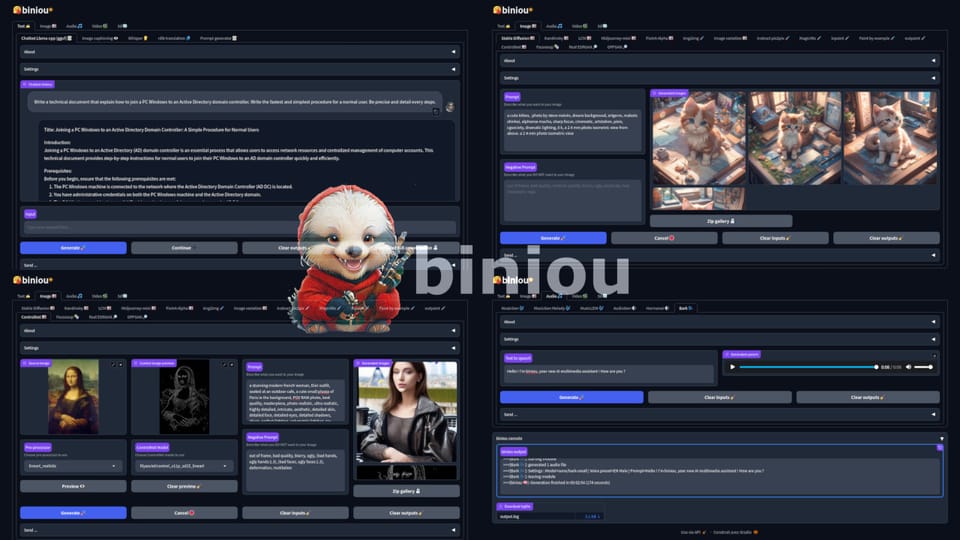

Create Text, Images, Videos, and Audio with AI Using Biniou - The Free Self-hosted GenAI App

Generative AI (GenAI) is a type of artificial intelligence that creates new content such as text, images, audio, video, or 3D models. It uses machine learning models, often based on deep learning architectures, to generate outputs from user inputs like text prompts or images. Examples include text generation, image creation, audio synthesis, and video editing.

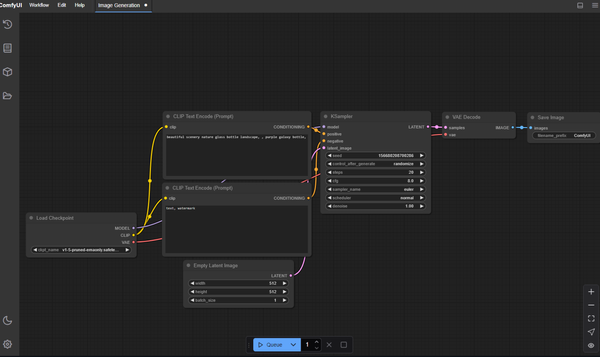

Most well-known GenAI services are hosted online, but there are dozens of open-source solutions that let you run your own GenAI stack offline or in the cloud.

What is Biniou?

Biniou is an open-source, self-hosted web app that lets you run 30+ generative AI modules easily and for free.

You can generate multimedia content with AI and use a chatbot on your own computer, even without a dedicated GPU, starting from 8GB of RAM. It can work offline once it is deployed and the required models are downloaded.

Text, images, audio, video, and even 3D objects are all handled from a single interface. Because no high-end GPU is needed, Biniou stays accessible and cost-effective on ordinary hardware.

Experience the freedom of self-hosted AI with Biniou and unlock limitless creativity without the dependency on cloud-based services.

Key Features

1- Text Generation

- 🖋️ Llama-CPP Chatbot: Supports .gguf models.

- 👁️ Llava Multimodal Chatbot: Uses .gguf models.

- 👁️ Microsoft GIT: Image captioning.

- 👂 Whisper: Speech-to-text.

- 👥 NLLB Translator: 200+ languages.

- 📝 Prompt Generator: ChatGPT-style output (requires 16GB+ RAM).

2- Image Generation & Modification

- 🖼️ Stable Diffusion: Text2Image, Img2Img, Inpaint, Outpaint, Variations.

- 🖼️ Advanced Modules: Kandinsky, Latent Consistency Models, PixArt-Alpha.

- 🖌️ Specialized Tools: ControlNet, IP-Adapter, Instruct Pix2Pix, MagicMix.

- 🖼️ Photobooth & Restoration: Insight Face, GFPGAN, Real-ESRGAN upscaling.

- 🖌️ Fantasy Studio: Paint by Example (16GB+ RAM).

3- Audio Generation

- 🎶 MusicGen: Melody and text-based modules (16GB+ RAM for some).

- 🔊 Harmonai, Audiogen, Bark: Versatile sound and speech tools.

4- Video Generation & Editing

- 📼 Text2Video Tools: Modelscope, AnimateDiff, Text2Video-Zero (16GB+ RAM for some).

- 🖌️ Video Instruct-Pix2Pix: Advanced video editing (16GB+ RAM).

5- 3D Object Creation

- 🧊 Shap-E Modules: Text2Shape and Img2Shape (requires 16GB+ RAM).

Compact and packed with cutting-edge generative AI modules!

Other Features

- Zeroconf installation through one-click installers or Windows exe.

- User-friendly: everything required to run Biniou is installed automatically, either at install time or at first use.

- WebUI in English, French, Chinese (traditional).

- Easy management through a control panel directly inside the WebUI: update, restart, shut down, activate authentication, control network access, or share your instance online with a single click.

- Easy management of models through a simple interface.

- Communication between modules: send an output as an input to another module.

- Powered by 🤗 Hugging Face and Gradio.

- Cross-platform: GNU/Linux, Windows 10/11, and macOS (experimental, via Homebrew).

- Convenient Dockerfile for cloud instances

- Generation settings saved as metadata in each generated content.

- Support for CUDA

- Experimental support for ROCm

- Support for Stable Diffusion SD-1.5, SD-2.1, SD-Turbo, SDXL, SDXL-Turbo, SDXL-Lightning, Hyper-SD, Stable Diffusion 3, LCM, VegaRT, Segmind, Playground-v2, Koala, Pixart-Alpha, Pixart-Sigma, Kandinsky and compatible models, through built-in model list or standalone .safetensors files

- Support for LoRA models (SD 1.5, SDXL and SD3)

- Support for textual inversion

- Support for llama-cpp-python optimizations (CUDA, OpenBLAS, OpenCL BLAS, ROCm, and Vulkan) through a simple setting.

- Support for Llama/2/3, Mistral, Mixtral and compatible GGUF quantized models, through built-in model list or standalone .gguf files.

- Easy copy/paste integration for TheBloke GGUF quantized models.
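Since the list above mentions standalone .gguf files, it can be useful to sanity-check a downloaded file before pointing a chatbot module at it. The sketch below is not part of Biniou. It relies only on the fact that GGUF files begin with the four bytes `GGUF` followed by a 32-bit version number, and it assumes the common little-endian layout. The function name `read_gguf_version` is made up for this example.

```python
import struct
import sys
from pathlib import Path

GGUF_MAGIC = b"GGUF"  # first four bytes of a GGUF file


def read_gguf_version(path):
    """Return the GGUF format version, or None if the file is not GGUF.

    Assumption: the file uses the little-endian layout.
    """
    with Path(path).open("rb") as handle:
        header = handle.read(8)  # 4-byte magic + 4-byte version
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    (version,) = struct.unpack("<I", header[4:8])
    return version


if __name__ == "__main__":
    # Usage: python check_gguf.py model1.gguf model2.gguf
    for name in sys.argv[1:]:
        version = read_gguf_version(name)
        if version is None:
            print(f"{name}: not a GGUF file (or truncated download)")
        else:
            print(f"{name}: GGUF version {version}")
```

A check like this only catches obviously wrong or truncated files. It does not tell you whether a model is compatible with a given module or fits in your RAM.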

Prerequisites

- Minimal hardware:

  - 64-bit CPU (AMD64 architecture ONLY)

  - 8GB RAM

  - Storage requirements:

    - for GNU/Linux: at least 20GB for installation without models.

    - for Windows: at least 30GB for installation without models.

    - for macOS: at least ??GB for installation without models.

  - Storage type: HDD

  - Internet access (required only for installation and models download): unlimited bandwidth optical fiber internet access

- Recommended hardware:

  - Massively multicore 64-bit CPU (AMD64 architecture ONLY) and a GPU compatible with CUDA or ROCm

  - 16GB+ RAM

  - Storage requirements:

    - for GNU/Linux: around 200GB for installation including all default models.

    - for Windows: around 200GB for installation including all default models.

    - for macOS: around ??GB for installation including all default models.

  - Storage type: NVMe SSD

  - Internet access (required only for installation and models download): unlimited bandwidth optical fiber internet access
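The minimal requirements above can be turned into a quick pre-install check. The following is a rough sketch using only the Python standard library, not a tool shipped with Biniou. The names `check_prerequisites` and `total_ram_gb` are hypothetical, the thresholds are copied from the minimal hardware list, and macOS disk space is not checked because the source gives no figure for it.

```python
import os
import platform
import shutil

MIN_RAM_GB = 8                               # minimal RAM from the list above
MIN_DISK_GB = {"Linux": 20, "Windows": 30}   # install size without models
SUPPORTED_ARCH = {"x86_64", "amd64"}         # AMD64 only


def total_ram_gb():
    """Best-effort total RAM in GiB; None where it cannot be read (e.g. Windows)."""
    try:
        pages = os.sysconf("SC_PHYS_PAGES")
        page_size = os.sysconf("SC_PAGE_SIZE")
    except (AttributeError, ValueError, OSError):
        return None
    return pages * page_size / 1024**3


def check_prerequisites(arch, system, ram_gb, free_disk_gb):
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    if arch.lower() not in SUPPORTED_ARCH:
        problems.append(f"unsupported CPU architecture: {arch}")
    if ram_gb is not None and ram_gb < MIN_RAM_GB:
        problems.append(f"only {ram_gb:.1f}GB RAM, {MIN_RAM_GB}GB needed")
    needed = MIN_DISK_GB.get(system)  # None for systems without a known figure
    if needed is not None and free_disk_gb < needed:
        problems.append(f"only {free_disk_gb:.0f}GB free disk, {needed}GB needed")
    return problems


if __name__ == "__main__":
    free_gb = shutil.disk_usage(".").free / 1024**3
    issues = check_prerequisites(
        platform.machine(), platform.system(), total_ram_gb(), free_gb
    )
    print("\n".join(issues) if issues else "Minimal prerequisites look satisfied.")
```

Note that a machine sold with 8GB of RAM usually reports slightly less than 8 GiB to the operating system, so you may want to loosen `MIN_RAM_GB` a little in practice.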

Supported Platforms

- A 64-bit OS:

  - Debian 12

  - Ubuntu 22.04.3 / 24.04

  - Linux Mint 21.2+ / 22

  - Rocky 9.3

  - Alma 9.3

  - CentOS Stream 9

  - Fedora 39

  - OpenSUSE Leap 15.5

  - OpenSUSE Tumbleweed

  - Windows 10 22H2

  - Windows 11 22H2

  - macOS ???

License

GNU General Public License v3.0