Container Orchestration with Kubernetes: Orchestrating Application Deployment in DevOps

Introduction

DevOps automation has emerged as a key component of modern software development. By integrating infrastructure, CI/CD, and application deployment into one platform, DevOps automation allows for seamless management of applications across their lifecycle. As containers become more popular in enterprise settings, container orchestration tools are becoming essential components of DevOps toolchains. In this article, we will look at how to deploy applications with Kubernetes and how it can be used as part of the DevOps pipeline.

What is Container Orchestration?

To understand container orchestration, you need to know what containers are. A container is a package that includes all the dependencies and configurations required for an application to run. It's like a virtual machine (VM), but it doesn't have its own operating system kernel; instead, it shares the kernel of an existing host operating system (OS).

Containers reduce the need for a separate VM per application because they allow you to run multiple isolated applications on one host OS without booting a guest OS for each one. This means less overhead and faster startup times than traditional VMs provide. Container orchestration, in turn, is the automation of deploying, scaling, and managing those containers across a machine or a cluster of machines.

Kubernetes: The De Facto Container Orchestration Tool

Kubernetes is an open-source container orchestration tool that automates the deployment, scaling, and management of containerized applications.

Kubernetes was created at Google, building on the company's experience running its own workloads on internal infrastructure, and was released as an open-source project in 2014 under the Apache License 2.0. Version 1.0 followed in 2015, when the project was donated to the newly formed Cloud Native Computing Foundation (CNCF).

Kubernetes has become the de facto standard for orchestrating containers because it lets developers declare what should run and leaves scheduling, restarts, and placement across the cluster's machines to the platform.

Kubernetes is not the only option. Other container orchestration tools exist, and for some requirements and workloads one of them may be a better fit, so it is worth evaluating the alternatives against your specific needs.

The Role of Container Orchestration in DevOps

DevOps is an approach to software development that aims to bridge the gap between developers and operations teams. DevOps service providers such as IToutposts aim to ensure that all aspects of a system's lifecycle, from inception through maintenance and retirement, are handled effectively and efficiently by both teams.

Container orchestration plays an important role in this process because it automates many tasks associated with deploying applications within containers across machines or clusters of machines. This automation helps keep every running instance of your application on the intended version, so you avoid unexpected surprises when you need it most, such as when thousands of customers are using your service at once.

Deploying Applications with Kubernetes

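Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications. It uses a declarative model for deploying applications: you describe the desired state (for example, which container image to run and how many replicas), and Kubernetes works to make the cluster match it.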
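
As a minimal sketch of the declarative model, the Python snippet below builds a Deployment manifest as a plain dictionary and prints it as JSON, a format that `kubectl apply -f` accepts alongside YAML. The application name `web`, the image `example.com/web:1.0`, and the replica count are hypothetical placeholders, not values from a real system.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Return an apps/v1 Deployment describing the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # How many identical pods should exist at any time.
            "replicas": replicas,
            # The selector must match the labels in the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


# Hypothetical application: three replicas of a web image.
manifest = deployment_manifest("web", "example.com/web:1.0", replicas=3)
print(json.dumps(manifest, indent=2))
```

The manifest states only what should exist. Changing `replicas` or `image` and re-applying it is how an update or a manual scale-out would typically be requested.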

Kubernetes provides a built-in scheduler that places pods on nodes according to the resource requests, priorities, and label-based constraints the user specifies (e.g., a node label that marks "high CPU" machines). Kubernetes also provides a built-in service discovery mechanism that allows clients to reach services by a stable name without prior knowledge of which nodes or IP addresses the backing pods currently have.
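
The filtering side of scheduling can be illustrated with a toy model. The sketch below is not the Kubernetes scheduler's actual algorithm, which also scores nodes and handles many more constraints. It only shows the idea of keeping the nodes that have enough free CPU and memory and that carry the labels a pod asks for. The node names, the `cpu-class` label, and all numbers are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int
    labels: dict = field(default_factory=dict)


@dataclass
class PodRequest:
    cpu_millicores: int
    memory_mib: int
    node_selector: dict = field(default_factory=dict)


def feasible_nodes(pod: PodRequest, nodes: list) -> list:
    """Return the names of nodes that satisfy the pod's requests and labels."""
    result = []
    for node in nodes:
        has_resources = (
            node.free_cpu_millicores >= pod.cpu_millicores
            and node.free_memory_mib >= pod.memory_mib
        )
        # Every key/value pair in the selector must be present on the node.
        labels_match = all(
            node.labels.get(key) == value
            for key, value in pod.node_selector.items()
        )
        if has_resources and labels_match:
            result.append(node.name)
    return result


nodes = [
    Node("node-a", 500, 1024, {"cpu-class": "standard"}),
    Node("node-b", 4000, 8192, {"cpu-class": "high"}),
    Node("node-c", 250, 8192, {"cpu-class": "high"}),
]
pod = PodRequest(cpu_millicores=1000, memory_mib=2048,
                 node_selector={"cpu-class": "high"})
print(feasible_nodes(pod, nodes))  # ['node-b']
```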

Scaling and Load Balancing in Kubernetes

You can use Kubernetes to deploy and scale your application, as well as manage the containers themselves.

Kubernetes provides an API for defining stateless applications and stateful services that can be deployed on any infrastructure without you having to provision a server for each instance or manage load balancing between instances by hand. Scaling is a matter of changing the desired number of replicas, and a Service spreads incoming traffic across the pods that result.
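
For automatic scaling, the Kubernetes documentation describes the core rule of the Horizontal Pod Autoscaler as: desired replicas = ceil(current replicas × current metric / target metric). The sketch below works through that arithmetic. The clamping bounds and the example numbers are assumptions, and the real controller adds details omitted here, such as a tolerance band and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to metric / target, then clamp."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))  # 6
# 3 pods averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(3, 30, 60))  # 2
```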

Service Discovery and Networking

You may be familiar with service discovery from other environments, such as a microservices application or an AWS infrastructure. In Kubernetes, service discovery is used to find services within the cluster so that they can be accessed by other services or applications. Inside the cluster, discovery works either through environment variables injected into pods or through cluster DNS (which is recommended); cloud provider load balancers (such as AWS ELBs) make services reachable for clients outside the cluster.

To expose services to traffic from outside the cluster, a common approach is an Ingress controller, which manages incoming HTTP(S) traffic and routes requests to Services based on host and path rules. Each Service, in turn, selects its pods by the labels applied to them during deployment.
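
With cluster DNS, a Service is conventionally reachable at a name of the form `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain defaults to `cluster.local`. The helper below is a small sketch that assembles such a name; the service name `orders` and the namespace `shop` are hypothetical.

```python
def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Build the fully qualified in-cluster DNS name for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


print(service_dns_name("orders", "shop"))
# orders.shop.svc.cluster.local
```

A client pod in the same namespace can usually use just the short name `orders`, because the pod's DNS search path fills in the rest.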
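
In a typical setup, an Ingress rule points at a Service, and the Service selects pods by label. The sketch below builds both manifests as Python dictionaries to show how the pieces reference each other. The host `shop.example.com`, the `orders` name, and the port numbers are placeholders, and an Ingress only takes effect if an Ingress controller is installed in the cluster.

```python
import json


def service_manifest(name: str, port: int, target_port: int) -> dict:
    """A Service that selects pods labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def ingress_manifest(name: str, host: str, path: str,
                     service: str, port: int) -> dict:
    """An Ingress rule that routes host + path prefix to a Service."""
    backend = {"service": {"name": service, "port": {"number": port}}}
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": path,
                    "pathType": "Prefix",
                    "backend": backend,
                }]},
            }],
        },
    }


svc = service_manifest("orders", port=80, target_port=8080)
ing = ingress_manifest("orders-ingress", "shop.example.com", "/orders",
                       service="orders", port=80)
print(json.dumps(svc, indent=2))
print(json.dumps(ing, indent=2))
```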

Monitoring and Logging in a Kubernetes Environment

Monitoring and logging are crucial to understanding the health of your system. Monitoring should be integrated with your CI/CD pipeline so that it can monitor each stage of deployment, including:

- Pre-Built Images

- Deployment Scripts (e.g., Ansible)

- Docker Containers

Logs can be collected with a tool such as Logstash or Fluentd, which then sends them to Elasticsearch for indexing and later searching. It's also important to have a metrics and alerting system such as Prometheus, because it allows you to identify patterns in metrics data: a sustained spike in CPU usage or memory consumption on one machine could indicate an issue with your application that needs further investigation before the change is deployed into production.
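
The kind of check an alerting rule performs can be illustrated in a few lines of Python. This is a simplified stand-in, not Prometheus's rule language or API: it flags a metric only when every one of the most recent samples exceeds a threshold, similar in spirit to an alert that must hold for some duration before it fires. The threshold, window size, and sample values are made up.

```python
def sustained_breach(samples: list, threshold: float, window: int) -> bool:
    """Return True if the last `window` samples all exceed `threshold`."""
    if window <= 0:
        raise ValueError("window must be positive")
    if len(samples) < window:
        return False  # not enough data to decide
    return all(value > threshold for value in samples[-window:])


cpu_percent = [35, 40, 38, 91, 93, 95]
print(sustained_breach(cpu_percent, threshold=90, window=3))  # True
print(sustained_breach(cpu_percent, threshold=90, window=4))  # False
```

Requiring several consecutive samples avoids alerting on a single noisy reading.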

Continuous Integration and Continuous Deployment (CI/CD) with Kubernetes

Continuous integration (CI) and continuous deployment (CD) are practices that enable teams to build software more quickly and reliably. CI automatically builds and tests each change as it is merged, and CD automates releasing the changes that pass into production environments.

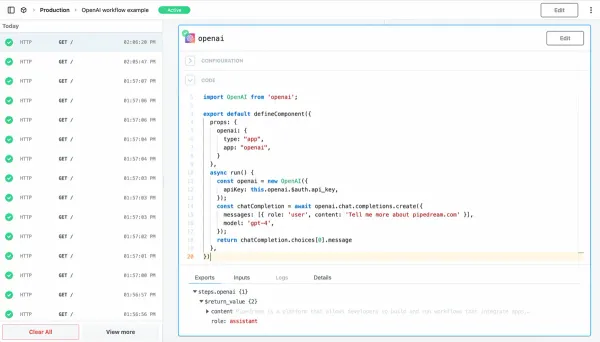

In a nutshell, CI/CD is about automating your development workflow so that you can ship new features or bug fixes on demand with less risk of breaking something along the way. By using tools like Jenkins Pipeline or Google Cloud Build with Kubernetes pods, you can set up automated pipelines that run tests against each commit and, if they pass, deploy it into production without human intervention.
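
The gating logic of such a pipeline can be sketched independently of any particular CI tool. The snippet below is not Jenkins or Cloud Build syntax. It is a hypothetical Python model in which stages run in order and the first failure stops the pipeline, so a commit that fails its tests never reaches the deploy stage. The stage names and their stubbed results are assumptions.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order, stop at the first failure, return stages that ran."""
    executed = []
    for name, action in stages:
        executed.append(name)
        if not action():
            print(f"stage '{name}' failed; stopping pipeline")
            break
    return executed


# Stubbed stages: a real pipeline would build an image, run the test
# suite, and apply manifests to the cluster.
passing = [("build", lambda: True), ("test", lambda: True),
           ("deploy", lambda: True)]
failing = [("build", lambda: True), ("test", lambda: False),
           ("deploy", lambda: True)]

print(run_pipeline(passing))  # ['build', 'test', 'deploy']
print(run_pipeline(failing))  # ['build', 'test']
```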

Containers are a key component in DevOps, and container orchestration platforms are essential tools for DevOps teams.

Kubernetes is currently the de facto container orchestration tool, a position it has held since around 2016. It's an open-source framework that allows developers to deploy their applications consistently across multiple environments using containers.

Conclusion

In this article, we took a look at container orchestration with Kubernetes. We discussed why it's important to have an efficient and reliable tool for deploying applications in a DevOps environment, as well as how Kubernetes can help you do that. You should now be familiar with the different components of Kubernetes and how they work together to provide a seamless experience for developers who want to deploy their code quickly without worrying about things like scaling or load-balancing issues.