CoquiTTS: An Open-Source Text-To-Speech Library

A deep learning toolkit for Text-to-Speech, battle-tested in research and production.

CoquiTTS is a library for advanced Text-to-Speech generation. It is built on the latest research and designed to achieve the best trade-off among ease of training, speed, and quality.

It comes with pretrained models and tools for measuring dataset quality, and it is already used in 20+ languages for products and research projects.

CoquiTTS is written in Python, and it can be a handy tool for video game development, post-production, dubbing, and creating educational videos.

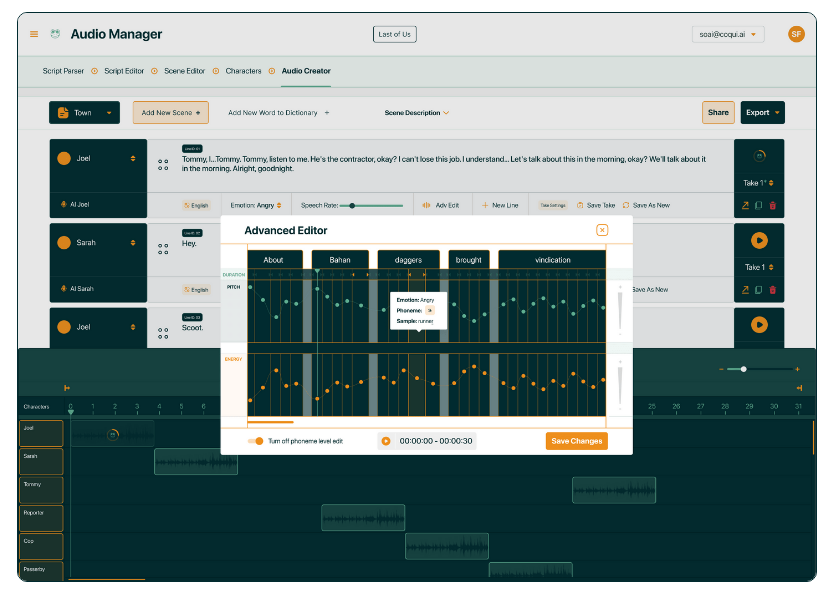

The CoquiTTS developers are now working on Coqui Studio, which will offer a simple, user-friendly interface to clone voices and create text-to-speech audio in MP3 format.

Features

- High-performance Deep Learning models for Text2Speech tasks.

- Text2Spec models (Tacotron, Tacotron2, Glow-TTS, SpeedySpeech).

- Speaker Encoder to compute speaker embeddings efficiently.

- Vocoder models (MelGAN, Multiband-MelGAN, GAN-TTS, ParallelWaveGAN, WaveGrad, WaveRNN).

- Fast and efficient model training.

- Detailed training logs on the terminal and Tensorboard.

- Support for Multi-speaker TTS.

- Efficient, flexible, lightweight but feature complete Trainer API.

- Released and ready-to-use models.

- Tools to curate Text2Speech datasets under dataset_analysis.

- Utilities to use and test your models.

- Modular (but not too much) code base enabling easy implementation of new ideas.
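To give a feel for the released, ready-to-use models in the list above, here is a minimal sketch of synthesizing speech from Python. It assumes the `TTS` package is installed (for example with `pip install TTS`) and that the installed version ships the `TTS.api.TTS` class. The helper names `build_model_name` and `synthesize_to_file` are made up for this illustration and are not part of the library. The model identifier follows the `<type>/<language>/<dataset>/<model>` pattern that the library uses when listing its models.

```python
from pathlib import Path


def build_model_name(language: str, dataset: str, model: str,
                     kind: str = "tts_models") -> str:
    """Compose an identifier of the form <type>/<language>/<dataset>/<model>.

    Hypothetical helper for this example; it only joins and validates strings.
    """
    parts = [kind, language, dataset, model]
    if any(not part or "/" in part for part in parts):
        raise ValueError("each component must be a non-empty string without '/'")
    return "/".join(parts)


def synthesize_to_file(text: str, out_path: str, model_name: str) -> Path:
    """Synthesize `text` with a pretrained model and write the audio to a file.

    Hypothetical wrapper; assumes the `TTS` package and its `TTS.api.TTS` class.
    """
    # Imported lazily so this module loads even if the package is not installed.
    from TTS.api import TTS

    tts = TTS(model_name=model_name)  # fetches the pretrained model if needed
    tts.tts_to_file(text=text, file_path=out_path)
    return Path(out_path)
```

A call such as `synthesize_to_file("Hello from CoquiTTS.", "hello.wav", build_model_name("en", "ljspeech", "tacotron2-DDC"))` would then write the result to `hello.wav`, provided that model is available in the installed release.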

Implemented Models

Spectrogram models

- Tacotron: paper

- Tacotron2: paper

- Glow-TTS: paper

- Speedy-Speech: paper

- Align-TTS: paper

- FastPitch: paper

- FastSpeech: paper

- SC-GlowTTS: paper

- Capacitron: paper

End-to-End Models

Attention Methods

- Guided Attention: paper

- Forward Backward Decoding: paper

- Graves Attention: paper

- Double Decoder Consistency: blog

- Dynamic Convolutional Attention: paper

- Alignment Network: paper

Speaker Encoder

Vocoders

- MelGAN: paper

- MultiBandMelGAN: paper

- ParallelWaveGAN: paper

- GAN-TTS discriminators: paper

- WaveRNN: origin

- WaveGrad: paper

- HiFiGAN: paper

- UnivNet: paper

License

The project is released under the MPL-2.0 License.