Deep Learning Framework to Delineate Organs at Risk in CT Images of Patients Undergoing Radiation Therapy

Radiation therapy is a widely used treatment modality for many types of cancer, but it can damage the surrounding healthy organs. Accurately identifying all Organs at Risk (OARs) is therefore a crucial step in radiation therapy planning. However, doing so manually is time-consuming and error-prone.

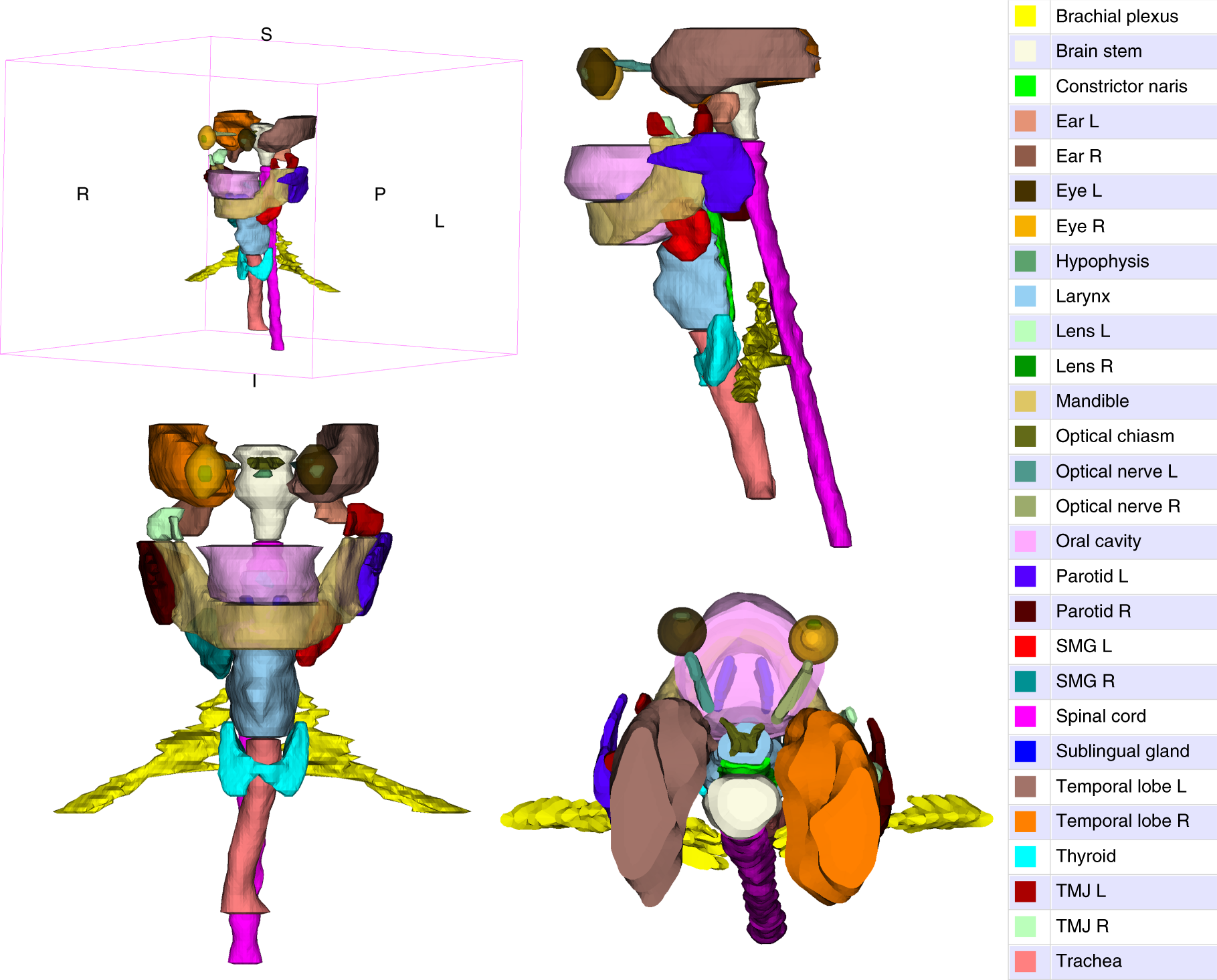

A group of researchers presented a deep learning model that automatically delineates OARs in the head and neck. It was trained on a dataset of 215 CT scans in which 28 OARs had been manually delineated by experienced radiation oncologists.

They chose the head and neck because of the region's complex anatomy and dense distribution of important organs. Damage to normal organs in this region can cause a range of complications, such as xerostomia, oral mucosal damage, laryngeal oedema, dysphagia, difficulty opening the mouth, visual deterioration, hearing loss and cognitive impairment.

The model, named Ua-Net (attention-modulated U-Net), consists of two submodules: one for OAR detection and one for OAR segmentation. The detection module identifies the location and size of each OAR in the CT images, and the segmentation module then delineates the OAR within each detected region.
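The detect-then-segment split can be illustrated with a minimal NumPy sketch. It assumes the detection module returns one axis-aligned bounding box per OAR in a hypothetical `(z0, y0, x0, z1, y1, x1)` format, and it replaces the segmentation network with a placeholder `segment_fn` (here a simple intensity threshold). This is not Ua-Net's implementation; it only shows how segmenting inside detected regions and pasting the masks back yields a full-volume label map.

```python
import numpy as np


def crop(volume, box):
    """Extract the sub-volume inside a (z0, y0, x0, z1, y1, x1) box (end-exclusive)."""
    z0, y0, x0, z1, y1, x1 = box
    return volume[z0:z1, y0:y1, x0:x1]


def delineate(volume, boxes, segment_fn):
    """Two-stage sketch: segment only inside each detected box, then paste back.

    boxes: dict mapping an integer OAR label to its detected box (hypothetical format).
    segment_fn: placeholder for a segmentation module; must return a boolean
    mask with the same shape as the crop it receives.
    """
    labels = np.zeros(volume.shape, dtype=np.int16)
    for label, box in boxes.items():
        z0, y0, x0, z1, y1, x1 = box
        mask = segment_fn(crop(volume, box))
        # Basic slicing returns a view, so writing into `region` updates `labels`.
        region = labels[z0:z1, y0:y1, x0:x1]
        # Only voxels predicted as foreground receive this OAR's label.
        region[mask] = label
    return labels


# Toy example: one bright 2x2x2 "organ" inside a detected 4x4x4 box.
vol = np.zeros((8, 8, 8), dtype=np.float32)
vol[2:4, 2:4, 2:4] = 100.0
boxes = {1: (1, 1, 1, 5, 5, 5)}
out = delineate(vol, boxes, lambda patch: patch > 50)
print(int((out == 1).sum()))  # 8
```

Because the segmentation step only ever sees the cropped patch, its cost scales with the size of the detected regions rather than the whole scan. Where boxes overlap, later labels overwrite earlier ones in this sketch; a real system would need an explicit rule for resolving such conflicts.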

Because Ua-Net upsamples only the feature maps that contain OARs, it drastically reduces GPU memory consumption. This makes it feasible to delineate all 28 OARs from whole-volume CT images using only commodity GPUs (for example, ones with 11 GB of memory), which is important for deploying the method in real clinics.
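A back-of-the-envelope calculation shows why restricting upsampling to OAR regions saves memory. The channel count, volume size and crop sizes below are hypothetical values chosen for illustration, not figures from the paper, and the sketch counts a single float32 feature map rather than every layer of the network.

```python
def feature_map_bytes(channels, shape, bytes_per_value=4):
    """Memory for one feature map of size channels x D x H x W (float32 by default)."""
    d, h, w = shape
    return channels * d * h * w * bytes_per_value


MiB = 1024 ** 2
channels = 32                      # hypothetical channel count
full_shape = (128, 256, 256)       # hypothetical full-resolution CT volume
crop_shapes = [(32, 64, 64)] * 28  # hypothetical: one crop per OAR, all the same size

full = feature_map_bytes(channels, full_shape)
cropped = sum(feature_map_bytes(channels, s) for s in crop_shapes)
print(full // MiB, cropped // MiB)  # 1024 448
```

With these made-up sizes, the cropped feature maps need less than half the memory of a single full-resolution map; the actual saving depends on how large the detected OAR regions are relative to the scan and on the depth of the network.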

Ua-Net completes the entire delineation of a case within a couple of seconds, whereas human experts took, on average, 34 minutes per case.

On a hold-out dataset of 100 computed tomography scans, Ua-Net achieves an average Dice similarity coefficient of 78.34% across the 28 OARs, significantly outperforming human experts and the previous state-of-the-art method by 10.05% and 5.18%, respectively.
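The Dice similarity coefficient between a predicted mask A and a reference mask B is 2|A ∩ B| / (|A| + |B|), ranging from 0 (no overlap) to 1 (identical masks). The sketch below computes it per OAR and averages over labels, assuming both segmentations are integer label maps with one label per OAR. Treating a pair of empty masks as a perfect score is a convention chosen here, not necessarily the one used in the study.

```python
import numpy as np


def dice(pred, truth):
    """Dice similarity coefficient between two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0  # assumed convention: both masks empty counts as perfect agreement
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)


def mean_dice(pred_labels, true_labels, labels):
    """Average of per-label Dice scores over the given OAR labels."""
    return float(np.mean([dice(pred_labels == k, true_labels == k) for k in labels]))


# Toy 1D "label maps" with two OARs (labels 1 and 2) and background 0.
pred = np.array([1, 1, 2, 2, 0])
truth = np.array([1, 2, 2, 2, 0])
print(dice([1, 1, 1, 0], [1, 1, 0, 0]))  # 0.8
print(round(mean_dice(pred, truth, [1, 2]), 4))  # 0.7333
```

Averaging per-OAR scores, as here, weights every organ equally regardless of its size, so small structures affect the mean as much as large ones.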

The code is freely available for non-commercial research purposes.