DeepFly3D: a deep learning software for 3D limb and appendage tracking

A group of scientists from EPFL (École polytechnique fédérale de Lausanne) in Switzerland has developed deep-learning-based motion-capture software that uses multiple camera views to model the movements of a fly (Drosophila melanogaster) in three dimensions. The ultimate aim is to use this knowledge to design fly-like robots.

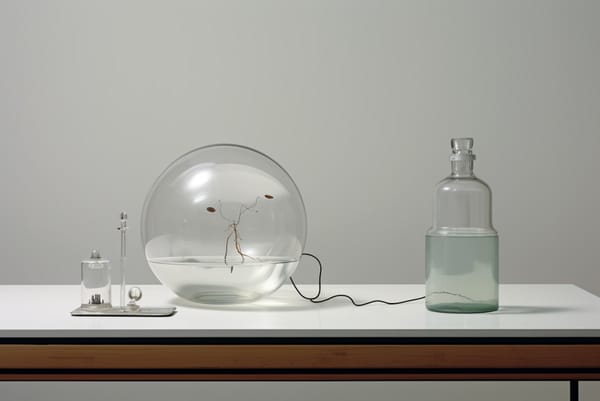

In the experimental setup, a fly walks on top of a tiny floating ball – like a miniature treadmill – while seven cameras record its every movement. The fly’s top side is glued onto an unmovable stage so that it always stays in place while walking on the ball. The collected camera images are then processed by DeepFly3D.

What’s special about DeepFly3D is that it can infer the 3D pose of the fly – or even other animals – meaning that it can automatically predict and make behavioral measurements at unprecedented resolution for a variety of biological applications. The software doesn’t need to be calibrated manually, and it uses the camera images to automatically detect and correct any errors it makes in its calculations of the fly’s pose. Finally, it also uses active learning to improve its own performance.
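To give a feel for how several camera views can yield a 3D position, here is a minimal sketch of linear multi-view triangulation for a single joint. It is not DeepFly3D's code and does not reproduce its calibration or error-correction steps. The function names (`project`, `solve3`, `triangulate`) and the three toy cameras are assumptions made purely for illustration, and each camera is assumed to be described by a known 3x4 projection matrix.

```python
# Illustrative sketch only: generic linear triangulation, not DeepFly3D's method.
# All names and the toy camera setup below are hypothetical.

def project(P, X):
    """Project 3D point X with a 3x4 camera matrix P; returns pixel (u, v)."""
    Xh = list(X) + [1.0]
    x, y, w = (sum(p * c for p, c in zip(row, Xh)) for row in P)
    return x / w, y / w


def solve3(M, b):
    """Solve the 3x3 system M x = b by Gaussian elimination with partial pivoting."""
    A = [list(row) + [rhs] for row, rhs in zip(M, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, 3):
            f = A[r][col] / A[col][col]
            for c in range(col, 4):
                A[r][c] -= f * A[col][c]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):  # back substitution
        s = sum(A[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = (A[i][3] - s) / A[i][i]
    return x


def triangulate(cameras, pixels):
    """Least-squares 3D point from 2D detections of one joint in several views."""
    rows, rhs = [], []
    for P, (u, v) in zip(cameras, pixels):
        for coord, prow in ((u, P[0]), (v, P[1])):
            # coord * (P[2] . Xh) = prow . Xh, rearranged into a . X = b
            rows.append([coord * P[2][k] - prow[k] for k in range(3)])
            rhs.append(prow[3] - coord * P[2][3])
    # Normal equations: (A^T A) X = A^T b
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    Atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    return solve3(AtA, Atb)


# Three toy cameras with identity intrinsics, shifted along x and y (assumed setup).
cameras = [
    [[1, 0, 0, 0.0], [0, 1, 0, 0.0], [0, 0, 1, 0.0]],
    [[1, 0, 0, -1.0], [0, 1, 0, 0.0], [0, 0, 1, 0.0]],
    [[1, 0, 0, 0.0], [0, 1, 0, -1.0], [0, 0, 1, 0.0]],
]
true_joint = [0.2, -0.1, 5.0]
pixels = [project(P, true_joint) for P in cameras]  # stand-in for 2D detections
estimate = triangulate(cameras, pixels)
```

With noise-free detections, `estimate` matches `true_joint` up to floating-point error. In a real pipeline the 2D detections would come from a pose-estimation network and would be noisy, which is one reason more than two views help.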

Professor Pavan Ramdya (EPFL's Brain Mind Institute) says: “The fly, as a model organism, balances tractability and complexity very well. If we learn how it does what it does, we can have important impact on robotics and medicine and, perhaps most importantly, we can gain these insights in a relatively short period of time.”