Why We're Betting Big on DeepSeek-V3: A Personal Dive into the Open-Source AI That’s Changing the Game and Redefining AI Excellence

In a bold challenge to AI giants like OpenAI, DeepSeek has unleashed DeepSeek-R1, a revolutionary open-source model that marries brute-force intelligence with surgical precision. Boasting 671 billion parameters (only 37B active per token), this MIT-licensed marvel slashes computational costs while posting leading results on benchmarks in coding, mathematics, and complex reasoning.

With lightweight variants tailored for startups and a pricing model 27x cheaper than competitors, DeepSeek-R1 isn’t just democratizing AI—it’s rewriting the rules. Dive into how this Chinese-engineered disruptor is empowering developers and enterprises alike to harness cutting-edge AI without the corporate price tag. 🚀

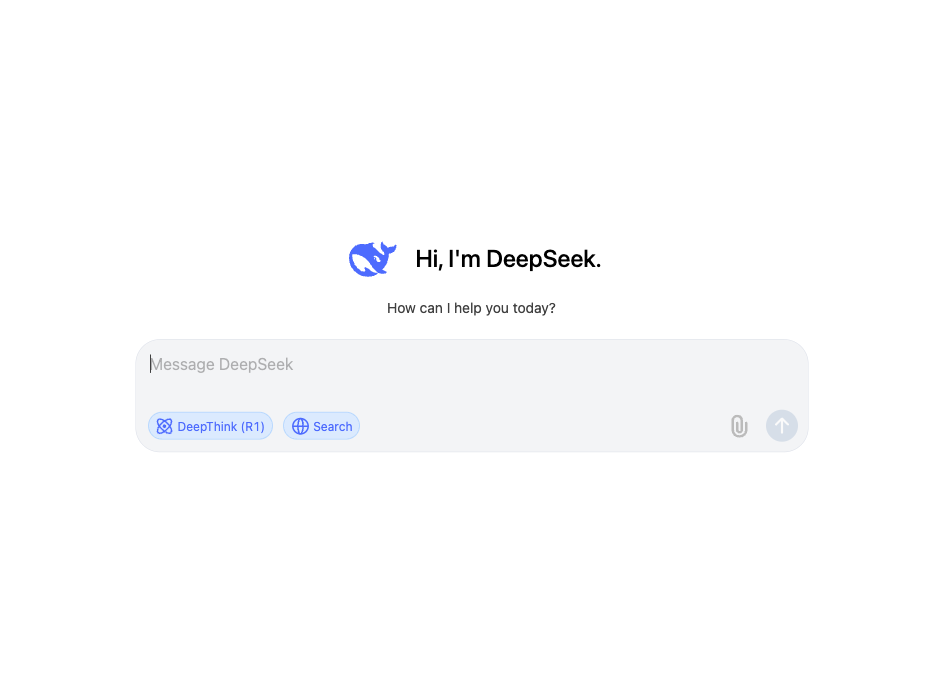

The DeepSeek-R1 model's standout feature is its MIT open-source license, which permits free commercial use, modification, and collaboration among developers and businesses. Alongside the base model, DeepSeek released six lightweight variants (1.5B to 70B parameters), with the 32B and 70B versions outperforming OpenAI’s o1-mini. Accessible via chat.deepseek.com’s "DeepThink" mode or a competitively priced API ($0.14 per million input tokens, $2.19 per million output tokens), the model dominates global benchmarks like AIME, MATH-500, and SWE-bench Verified in logical reasoning and answer validation.
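To make the API pricing concrete, here is a minimal sketch that estimates a bill from token counts. It uses only the two per-million-token prices quoted in this article and reads them as US dollars; the function name and the example workload are hypothetical, and real invoices may differ (for example, through caching discounts or price changes).

```python
# Prices quoted in this article, read as US dollars per one million tokens.
INPUT_USD_PER_MILLION = 0.14
OUTPUT_USD_PER_MILLION = 2.19


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the API cost of a workload at the prices quoted above."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens / 1_000_000 * INPUT_USD_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_USD_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical monthly workload: 5M input tokens, 1M output tokens.
    print(f"${estimate_cost_usd(5_000_000, 1_000_000):.2f}")
```

For that hypothetical workload, the estimate is 5 x $0.14 + 1 x $2.19, or about $2.89.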

DeepSeek-R1 isn’t just a developer tool—it’s a game-changer for businesses seeking powerful, flexible AI solutions.

Redefining Cost Efficiency: High-Quality AI Access for All

The journey of DeepSeek-V3 began with an audacious goal: to deliver top-tier AI capabilities at a fraction of the cost of its competitors. With a modest budget of just $5.58 million—compared to the astronomical sums poured into other models—DeepSeek-V3 not only meets this goal but surpasses it in ways that are hard to ignore.

As a medical doctor turned software developer, cost-efficiency resonates deeply with me, especially when recommending technological solutions to clients or integrating them into our projects.

Here I quote my friend Zeno, from a recent conversation:

"The DeepSeek V3 LLM was developed in two months with a budget of just US$5,580,000, the cost of a single nice apartment in Monaco or Hong Kong.

In contrast, Meta's minimum AI budget for the financial year is a staggering US$38,000,000,000."

The ability to access such powerful tools without the prohibitive cost barrier is a significant win for developers and small businesses alike.

Technical Innovations That Impress

What truly sets DeepSeek-V3 apart are its cutting-edge features:

- Chain-of-Thought Reasoning (DeepThink): This feature is a standout, allowing the model to unravel complex problems step by step—a boon for tasks that require nuanced thought, such as medical diagnostics or software debugging.

- Efficient Architecture: Built as a 671B-parameter Mixture-of-Experts (MoE) model, only 37B parameters activate per token, optimizing computational resources.

- Innovative Training: Using 2,048 NVIDIA H800 GPUs for two months, DeepSeek used roughly 11x fewer GPU hours than Meta’s Llama 3.1, thanks to techniques like the DualPipe algorithm (overlapping computation and communication) and FP8 mixed precision (reducing memory usage without sacrificing accuracy).

- Cost Breakdown: At an assumed rental rate of $2 per GPU hour, the total training cost came to about $5.58 million, a fraction of competitors’ budgets, proving that strategic engineering trumps brute-force spending.
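As a sanity check, the figures in the list above can be tied together with a little arithmetic. The sketch below uses only numbers quoted in this article; the helper names are hypothetical, and it makes the simplifying assumption that all 2,048 GPUs were busy for the entire run.

```python
# Figures quoted in this article.
TOTAL_COST_USD = 5_580_000
USD_PER_GPU_HOUR = 2.0
NUM_GPUS = 2_048
TOTAL_PARAMS_B = 671   # total parameters, in billions
ACTIVE_PARAMS_B = 37   # parameters active per token, in billions


def implied_gpu_hours(total_cost_usd: float, usd_per_gpu_hour: float) -> float:
    """GPU hours implied by a total budget and an hourly rental rate."""
    return total_cost_usd / usd_per_gpu_hour


def implied_wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Wall-clock days, assuming every GPU runs for the whole period."""
    return gpu_hours / num_gpus / 24


gpu_hours = implied_gpu_hours(TOTAL_COST_USD, USD_PER_GPU_HOUR)
days = implied_wall_clock_days(gpu_hours, NUM_GPUS)
active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B

if __name__ == "__main__":
    print(f"Implied GPU hours: {gpu_hours:,.0f}")
    print(f"Implied wall-clock time: {days:.1f} days")
    print(f"Share of parameters active per token: {active_fraction:.1%}")
```

The budget implies about 2.79 million GPU hours, or a little under 57 days on 2,048 GPUs, which lines up with the "two months" figure. The same numbers show that only about 5.5% of the model's parameters are active for any given token.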

Specialized Modules

- DeepSeek Coder V2: Outperforms ChatGPT in code generation, supporting multi-language debugging and API integration with 97% accuracy in functional code output.

- DeepSeek Math: Tackles complex quantitative problems (e.g., statistical modeling, engineering equations) that stump general-purpose models.

- DeepSeek Search: A context-aware search function that aggregates real-time data and cross-references long-form documents, surpassing ChatGPT in accuracy for technical queries.

An Open-Source Model for Genuine Flexibility

DeepSeek-V3 is not just a tool; it's a canvas. Being open-source, it allows for unparalleled customization. This aspect is particularly crucial in my work, where adapting AI to suit specific medical or development needs can make a substantial difference in outcomes.

Community and Support That Inspires

The vibrant community around DeepSeek-V3 is a treasure trove of insights and support. From GitHub to specialized forums, the collective wisdom and rapid innovation pace are genuinely inspiring. As someone who values community-driven progress, this model feels like a partnership rather than just another product.

Benchmark Dominance: Crushing the Competition

- Coding: Surpassed GPT-4o and Llama 3.1 in Codeforces challenges, generating error-free scripts for web apps and API integrations.

- Mathematical Reasoning: Achieved top-tier scores on MATH-500 and GPQA benchmarks, rivaling specialized tools like Wolfram Alpha.

- Multilingual Mastery: Excelled in Chinese, English, and 10+ languages, with a Quality Index of 79 (higher than average).

Why DeepSeek-V3 Wins

- Cost: 10x cheaper than GPT-4o, yet equally powerful.

- Customization: Open-source flexibility vs. ChatGPT’s rigid API.

- Specialization: Modules like Coder V2 and Math outperform generalist models.

- Speed: Processes 60 tokens/second, 3x faster than previous iterations.

Why DeepSeek-V3 is a Mainstay in Our Toolkit

For months, we’ve integrated DeepSeek-V3 into our workflows at our AI Club and in client projects. Its impact has been palpable, driving both innovation and efficiency. The model’s robust capabilities, coupled with its customization features, have allowed us to tailor AI-driven solutions like never before.

Our experience has been so positive that recommending DeepSeek-V3 to our clients has become commonplace. We’re not just users; we’re advocates for an AI future that is open, accessible, and highly effective.

Conclusion: A Personal Endorsement of DeepSeek-V3

In an era where AI is often shrouded in exclusivity and high costs, DeepSeek-V3 stands as a beacon of accessibility and innovation. For anyone from startup founders to fellow developers and beyond, this model isn’t just an alternative—it’s a necessity.

DeepSeek-V3 has not only impressed—it has inspired. And in this journey toward an open-source AI future, I am all in. Dive into the world of DeepSeek with us, and see why this isn’t just about technology—it’s about a vision for a more inclusive and innovative future.

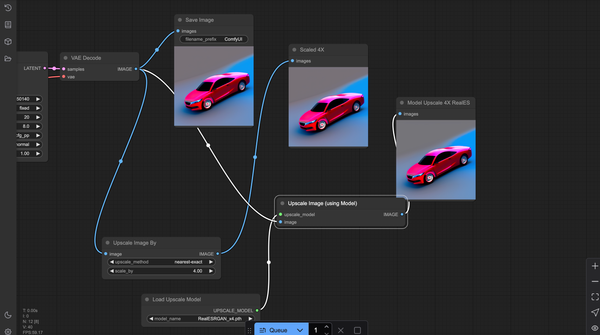

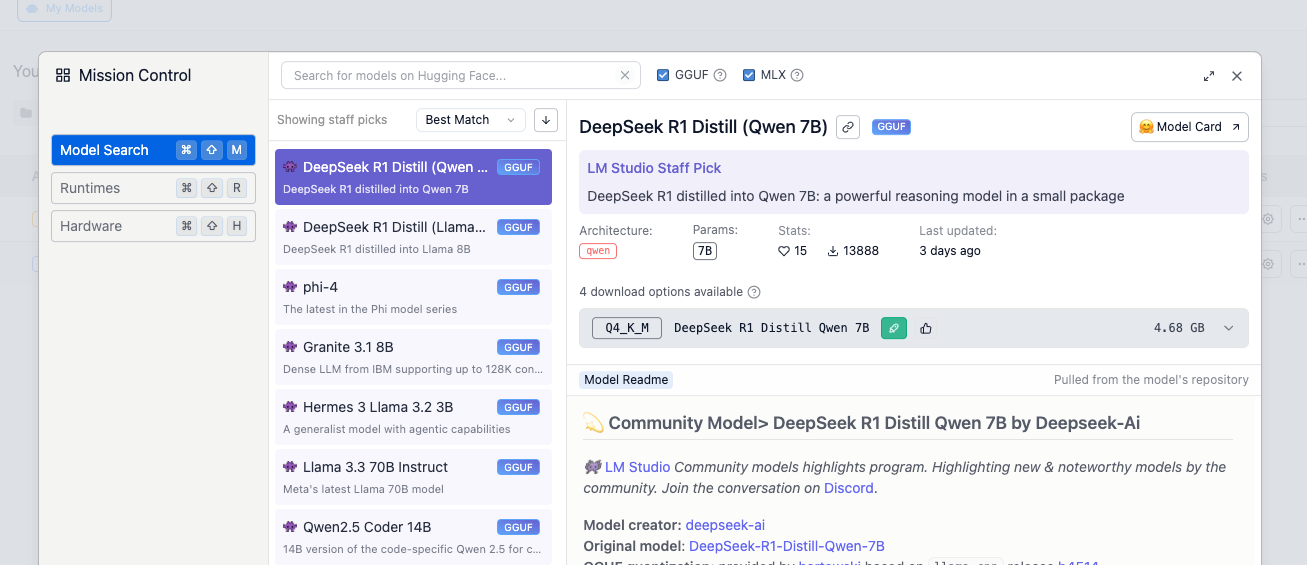

How to Run the DeepSeek-R1 Model Locally

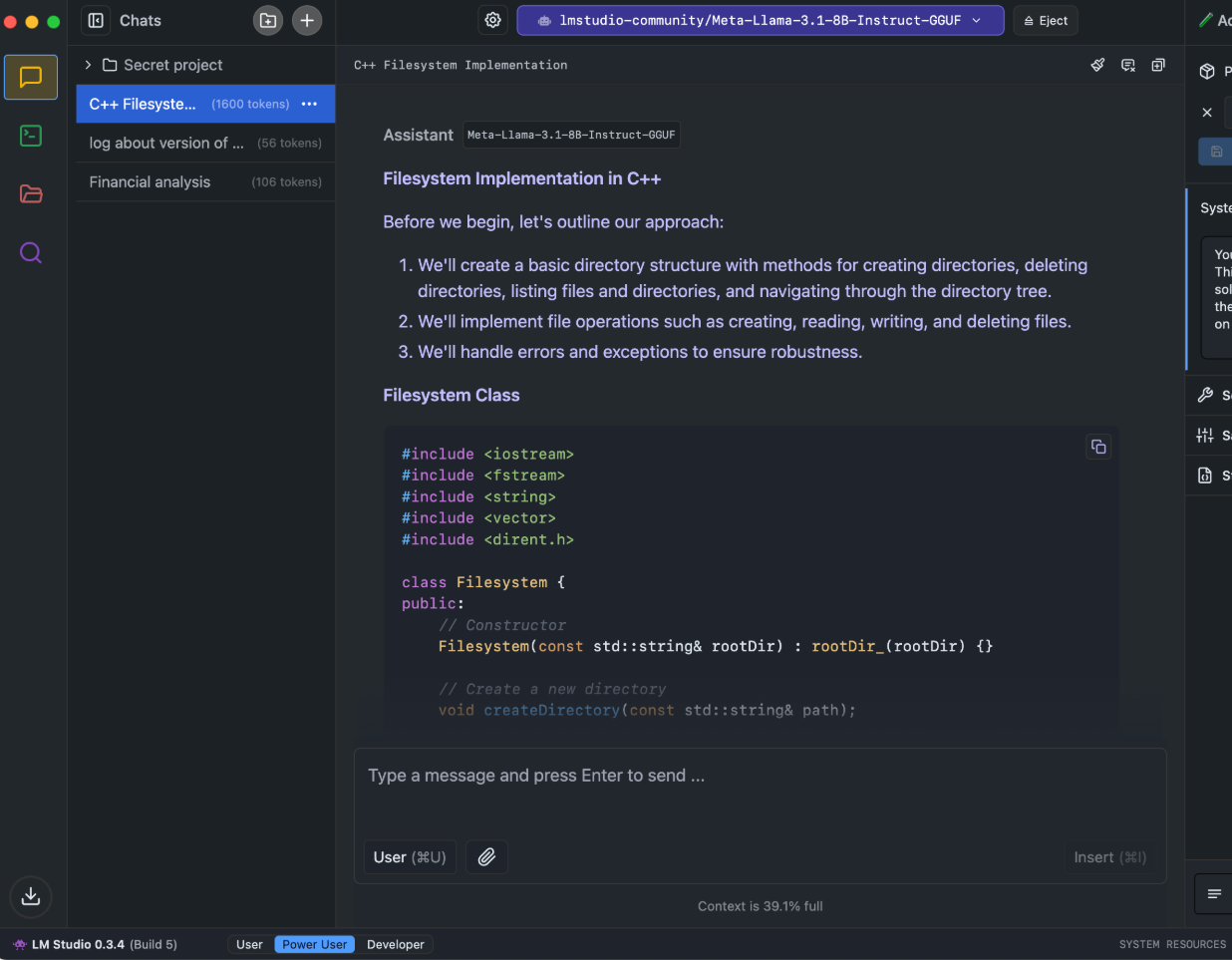

The best option is to use LM Studio, which we have already reviewed. You can easily download the DeepSeek-R1 model and run it locally, and you can also download and use dozens of other LLMs.
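Once a model is loaded, LM Studio can also serve it through a local OpenAI-compatible HTTP endpoint, which makes it scriptable. The sketch below is a rough illustration under several assumptions: the server is running at what I understand to be LM Studio's default address (`http://localhost:1234/v1`), the model identifier is a hypothetical placeholder you would replace with the name shown in your LM Studio install, and the reply wraps its reasoning in a `<think>...</think>` block, as R1-style models typically do. The helper names are my own.

```python
import json
import re
import urllib.request

# Assumed default address of LM Studio's local server; confirm it in the app.
BASE_URL = "http://localhost:1234/v1"
# Hypothetical identifier; use the model name LM Studio shows for your download.
MODEL = "deepseek-r1-distill-qwen-7b"

THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def build_payload(question: str) -> dict:
    """Build an OpenAI-style chat completion request body."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": question}],
    }


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a reply that may contain a <think> block."""
    match = THINK_BLOCK.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


def ask(question: str) -> tuple[str, str]:
    """Send one question to the local server; requires the server to be running."""
    request = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(build_payload(question)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return split_reasoning(body["choices"][0]["message"]["content"])
```

With the server running, `reasoning, answer = ask("What is 17 * 23?")` would return the model's step-by-step reasoning and its final answer as separate strings, so you can log the former and show only the latter.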