Drag Your GAN (DragGAN): The Source Code Is Finally Released

"Drag Your GAN", also referred to as DragGAN, is a technique that lets users precisely and interactively manipulate the shape, layout, and pose of objects in generated images.

DragGAN is written in Python and supports CUDA.

The source code also comes with a simple, user-friendly GUI.

This tool is especially beneficial for professionals like urban planners, as it allows them to reshape and interactively manipulate the layout, structure, and aesthetics of urban landscapes in generated images with precision and ease.

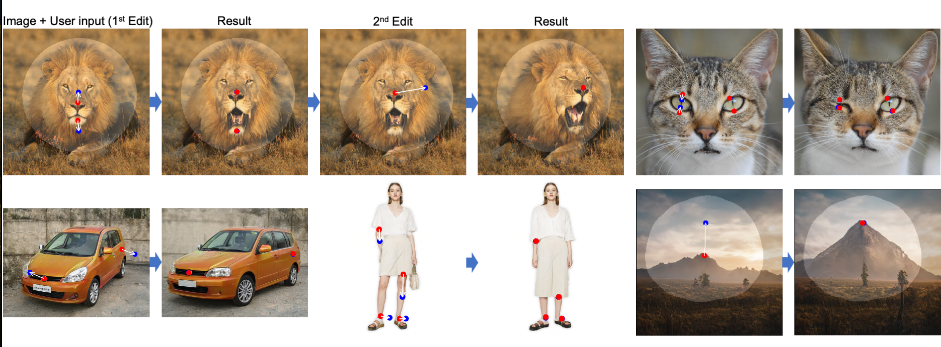

DragGAN offers flexibility in cityscape designs, allowing for transformations between architectural styles and reshaping of neighborhoods. The interactive technique lets users drag specific points in the image to desired positions, giving them significant control over the urban design process.

Author's Implementation: The authors perform motion supervision using a shifted patch loss on the generator's feature maps, which drives the handle points (red dots) toward the target points (blue dots). A point tracking step then updates the handle points so that they keep tracking the object in the image. The process repeats until the handle points reach their corresponding target points.
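To make the point tracking step more concrete, here is a minimal pure-Python sketch. It is not the released code: it assumes tracking is a nearest-neighbour search under L1 distance in a small square window around the current handle point, and the names `track_point`, `l1_distance`, `reference`, and `radius` are hypothetical, chosen for illustration only.

```python
from typing import List, Tuple

# Assumption: a feature map stored as nested lists, indexed [row][col][channel].
FeatureMap = List[List[List[float]]]
Point = Tuple[int, int]  # (row, col)


def l1_distance(a: List[float], b: List[float]) -> float:
    """Sum of absolute differences between two feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def track_point(features: FeatureMap, handle: Point,
                reference: List[float], radius: int) -> Point:
    """Return the pixel near `handle` whose feature best matches `reference`.

    `reference` is the feature vector sampled at the handle point before
    editing started; `radius` bounds the square search window.
    """
    height, width = len(features), len(features[0])
    row0, col0 = handle
    # Start from the current position so ties keep the handle in place.
    best = handle
    best_dist = l1_distance(features[row0][col0], reference)
    for row in range(max(0, row0 - radius), min(height, row0 + radius + 1)):
        for col in range(max(0, col0 - radius), min(width, col0 + radius + 1)):
            dist = l1_distance(features[row][col], reference)
            if dist < best_dist:
                best, best_dist = (row, col), dist
    return best
```

In an editing loop, a function like this would be called after every optimization step, with the returned position becoming the new handle point. The loop would stop once each handle point coincides with its target point.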

The limitations highlighted in the paper include potential artifacts when edits deviate from the training distribution and tracking drift in texture-less regions. On the social side, use of the tool must respect personality rights and privacy regulations to prevent misuse in creating manipulated images.

Acknowledgement

This code is developed based on StyleGAN3. Part of the code is borrowed from StyleGAN-Human.

License

The code related to the DragGAN algorithm is licensed under CC-BY-NC. However, most of this project is available under separate license terms: all code used or modified from StyleGAN3 is under the Nvidia Source Code License.

Any form of use and derivative of this code must preserve the watermarking functionality showing "AI Generated".

Resources & Downloads