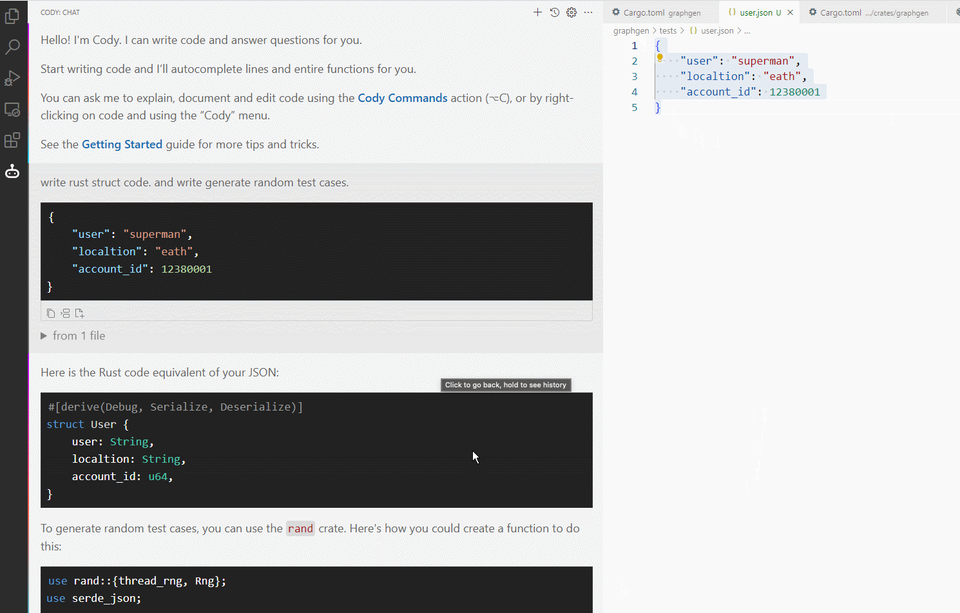

Empower your Coding Experience in VSCode with this Amazing AI Copilot


What is Collama?

Collama is a free and open-source AI coding assistant for VSCode, powered by a self-hosted llama.cpp endpoint.

Under the hood

It uses llama.cpp, which runs inference for Meta's LLaMA models (and many others) in pure C/C++.

llama.cpp is designed to enable LLM inference with minimal setup and strong performance on a wide range of hardware, both locally and in the cloud. It is a plain C/C++ implementation with no dependencies, optimized for Apple silicon and x86 architectures, and it supports integer quantization for faster inference and lower memory use.
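To make the memory benefit of quantization concrete, here is a rough, weights-only estimate for a hypothetical 7B-parameter model at different precisions. It assumes nominal bits per weight. Real llama.cpp quantization formats also store per-block scale data, and the KV cache and runtime buffers add more on top, so treat the numbers as lower bounds rather than measurements.

```python
# Rough, weights-only memory estimate at different precisions.
# Assumption: nominal bits per weight. Real quantization formats store extra
# per-block scale factors, and the KV cache adds more, so these are lower bounds.

def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = n_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_total / 1e9


if __name__ == "__main__":
    n_params = 7e9  # a hypothetical 7B-parameter model
    for label, bits in [("FP16", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label:>5}: ~{weight_memory_gb(n_params, bits):.1f} GB")
```

Running it prints about 14.0 GB for FP16, 7.0 GB for 8-bit, and 3.5 GB for 4-bit weights. This shows why quantized models are much easier to run on consumer hardware.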

llama.cpp also includes custom CUDA kernels for NVIDIA GPUs, supports Vulkan, SYCL, and OpenCL backends, and enables hybrid CPU+GPU inference for models larger than the available VRAM.
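Hybrid inference keeps some of the model's layers on the GPU and runs the rest on the CPU. llama.cpp exposes this through its `--n-gpu-layers` option. The helper below offers a hypothetical back-of-the-envelope estimate of a starting value for that option. It assumes equally sized layers and a fixed VRAM reserve for the KV cache and buffers. It is not how llama.cpp itself decides anything.

```python
# Back-of-the-envelope estimate of how many layers could be offloaded to the
# GPU when the whole model does not fit in VRAM.
# Assumptions (hypothetical, for illustration only): all layers are the same
# size, and a fixed amount of VRAM is reserved for the KV cache and buffers.

def layers_to_offload(model_gb: float, n_layers: int,
                      vram_gb: float, reserve_gb: float = 1.0) -> int:
    """Return how many layers fit in VRAM; the remaining layers stay on the CPU."""
    if n_layers <= 0:
        raise ValueError("n_layers must be positive")
    per_layer_gb = model_gb / n_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    # Never offload more layers than the model actually has.
    return min(n_layers, int(usable_gb // per_layer_gb))


if __name__ == "__main__":
    # e.g. a ~26 GB model with 40 layers on a 12 GB GPU
    n = layers_to_offload(model_gb=26.0, n_layers=40, vram_gb=12.0)
    print(f"offload {n} of 40 layers to the GPU")
```

For a ~26 GB model with 40 layers on a 12 GB GPU, it suggests 16 layers. In practice you would start near that value and lower it if the server runs out of GPU memory.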

Features

It comes as a VSCode extension that makes developers' lives easier. It can:

- Chat

- Generate code

- Explain code
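The following sketch illustrates the "Explain code" idea. It sends a snippet to a llama.cpp server through the server's OpenAI-compatible `/v1/chat/completions` endpoint, using only the Python standard library. The helper names, prompt wording, `max_tokens`, and `temperature` values are assumptions made for illustration. This is not necessarily how Collama builds its requests.

```python
import json
import urllib.request

# Hypothetical sketch: ask a llama.cpp server to explain a code snippet via its
# OpenAI-compatible chat endpoint. Prompt text and sampling parameters are
# illustrative choices, not Collama's actual implementation.

def build_explain_request(endpoint: str, code: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking the model to explain `code`."""
    payload = {
        "messages": [
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user", "content": f"Explain what this code does:\n\n{code}"},
        ],
        "max_tokens": 256,
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def explain(endpoint: str, code: str) -> str:
    """Send the request and return the assistant's reply text."""
    req = build_explain_request(endpoint, code)
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Assuming a server is running at that address, `explain("http://192.168.0.101:8080", "def add(a, b): return a + b")` would return the model's explanation as a string.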

Getting started

- Install the Collama extension from the VSCode Marketplace.

- Set your llama.cpp server's address, such as http://192.168.0.101:8080, in the Cody>llama Server Endpoint setting.

- Start coding with your locally deployed models.
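Before you enter the server address in the extension, it can help to confirm that the server is actually up. This sketch assumes a `llama-server` build that exposes the `GET /health` endpoint. That endpoint replies with `{"status": "ok"}` once the model has loaded. The helpers `health_url` and `server_is_ready` are hypothetical names.

```python
import json
import urllib.request

# Sketch: check that a llama.cpp server is reachable and ready before
# pointing the extension at it. Assumes the server exposes GET /health.

def health_url(endpoint: str) -> str:
    """Turn e.g. 'http://192.168.0.101:8080/' into '.../health'."""
    return endpoint.rstrip("/") + "/health"


def server_is_ready(endpoint: str, timeout: float = 5.0) -> bool:
    """Return True only if /health answers 200 with {"status": "ok"}."""
    try:
        with urllib.request.urlopen(health_url(endpoint), timeout=timeout) as resp:
            status = json.load(resp).get("status")
            return resp.status == 200 and status == "ok"
    except (OSError, ValueError):
        # OSError covers URLError/HTTPError (unreachable host, or e.g. 503
        # while the model is still loading); ValueError covers invalid JSON.
        return False
```

`server_is_ready("http://192.168.0.101:8080")` returns `True` when the server is ready and `False` otherwise.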

Platforms

- Linux

- macOS

- Windows

License

Apache-2.0 License