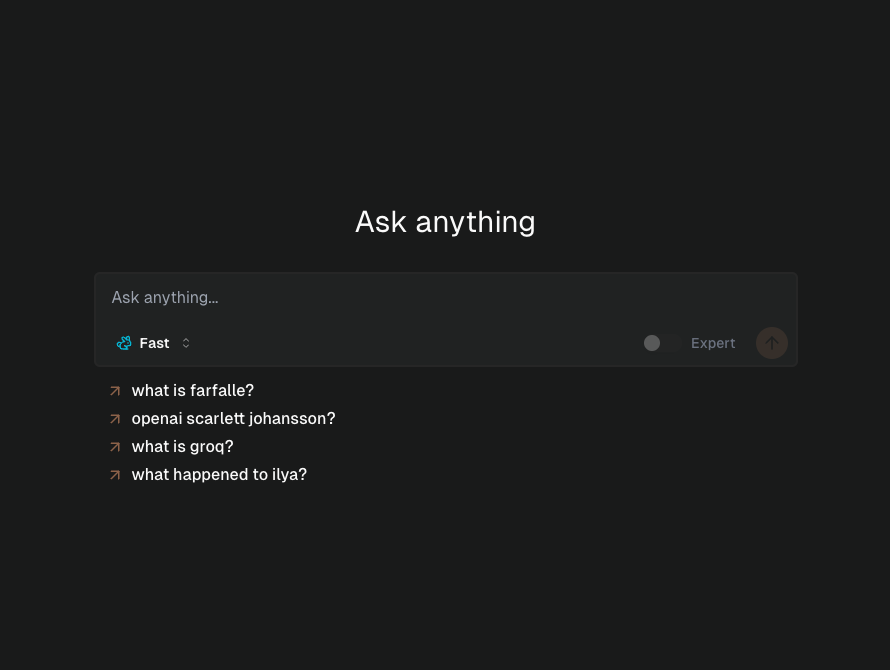

Farfalle - Open-source AI-powered search engine and Perplexity clone


Farfalle is a self-hosted, open-source, AI-powered search engine that offers an experience similar to Perplexity, with both local and cloud-based options for running language models. Users can run local LLMs such as Llama 3, Gemma, Mistral, and Phi-3, or connect custom LLMs through LiteLLM.
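As a rough illustration of the LiteLLM route, the sketch below builds the arguments for a `litellm.completion` call against a local model served by Ollama. It assumes Ollama is listening on its default address `http://localhost:11434` and that the model has already been pulled. The helper `local_completion_kwargs` and the `LOCAL_MODELS` set are hypothetical and not part of Farfalle's code; the network call is left commented out so the snippet runs without a model server.

```python
from typing import Any

# Hypothetical set of local model names, mirroring the models listed above.
LOCAL_MODELS = {"llama3", "gemma", "mistral", "phi3"}

# Assumption: Ollama's default local endpoint.
OLLAMA_BASE_URL = "http://localhost:11434"


def local_completion_kwargs(model: str, question: str) -> dict[str, Any]:
    """Build keyword arguments for litellm.completion() targeting an Ollama model."""
    if model not in LOCAL_MODELS:
        raise ValueError(f"unsupported local model: {model!r}")
    return {
        # LiteLLM routes "ollama/<name>" model strings to an Ollama server.
        "model": f"ollama/{model}",
        "messages": [{"role": "user", "content": question}],
        "api_base": OLLAMA_BASE_URL,
    }


if __name__ == "__main__":
    kwargs = local_completion_kwargs("llama3", "What is Farfalle?")
    print(kwargs["model"])  # prints "ollama/llama3"
    # With `pip install litellm` and a running Ollama server:
    # import litellm
    # response = litellm.completion(**kwargs)
    # print(response.choices[0].message.content)
```

Swapping `"ollama/llama3"` for another LiteLLM model string is how the same call shape reaches a different backend.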

It also supports cloud-hosted models, including Llama 3 served by Groq and OpenAI's GPT-4o and GPT-3.5 Turbo.

The engine lets users switch between local and cloud models depending on their needs: local models keep queries on the user's own hardware and avoid per-request API charges, while cloud models offer more computational power for complex queries. This makes Farfalle adaptable to research, content generation, and personalized knowledge discovery.
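The local-versus-cloud trade-off described above can be expressed as a small routing rule. This is a hypothetical policy written for illustration: Farfalle itself lets the user pick a model, and the `Requirements` class, `choose_model` function, and the preference order are assumptions. The cloud identifiers are LiteLLM-style example strings (`groq/llama3-70b-8192`, `gpt-4o`, `gpt-3.5-turbo`) that may change as providers update their catalogs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """Hypothetical knobs for picking a model backend."""

    private_data: bool        # the query must not leave the user's machine
    low_cost: bool            # prefer options without paid API usage
    needs_strong_model: bool  # complex query that benefits from a large model


def choose_model(req: Requirements) -> str:
    """Return a LiteLLM-style model string for the given requirements."""
    # Privacy first: keep everything on a local Ollama model.
    if req.private_data:
        return "ollama/llama3"
    # Complex queries without a privacy constraint go to a hosted model.
    # Assumed preference: Groq-hosted Llama 3 when cost matters, GPT-4o otherwise.
    if req.needs_strong_model:
        return "groq/llama3-70b-8192" if req.low_cost else "gpt-4o"
    # Simple, cost-sensitive queries can stay local.
    if req.low_cost:
        return "ollama/llama3"
    return "gpt-3.5-turbo"
```

Checking privacy before anything else means no combination of the other flags can route sensitive queries to a cloud provider.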

Features

- Search with multiple search providers (Tavily, SearXNG, Serper, Bing)

- Answer questions with cloud models (OpenAI/gpt-4o, OpenAI/gpt-3.5-turbo, Groq/Llama3)

- Answer questions with local models (llama3, mistral, gemma, phi3)

- Answer questions with any custom LLMs through LiteLLM

- Search with an agent that plans and executes the search for better results

- Local LLM support through Ollama

- Easy to deploy using Docker

- Pre-built Docker image

- Chat history support
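The agent-based search feature is only named here, so the following is a hypothetical sketch of the general plan-and-execute pattern, not Farfalle's implementation. A planner breaks the question into sub-queries, each sub-query goes to a pluggable search provider (any of the backends listed above could sit behind the `SearchProvider` interface), and results are merged with de-duplication by URL. `FakeProvider`, `naive_plan`, and the `example.com` URLs are placeholders so the snippet runs offline; in a real agent the planner would be an LLM call.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass(frozen=True)
class SearchResult:
    title: str
    url: str


class SearchProvider(Protocol):
    """Anything that can turn a query into results (e.g. a Tavily or SearXNG client)."""

    def search(self, query: str) -> list[SearchResult]: ...


class FakeProvider:
    """Offline stand-in for a real search API, used only for this sketch."""

    def __init__(self, index: dict[str, list[SearchResult]]) -> None:
        self.index = index

    def search(self, query: str) -> list[SearchResult]:
        return self.index.get(query, [])


def naive_plan(question: str) -> list[str]:
    """Hypothetical planner: split on ' and '. A real agent would ask an LLM."""
    return [part.strip() for part in question.split(" and ") if part.strip()]


def plan_and_search(
    question: str,
    plan: Callable[[str], list[str]],
    provider: SearchProvider,
) -> list[SearchResult]:
    """Run every planned sub-query and merge the results, keeping first occurrences."""
    seen: set[str] = set()
    merged: list[SearchResult] = []
    for sub_query in plan(question):
        for result in provider.search(sub_query):
            if result.url not in seen:  # de-duplicate across sub-queries by URL
                seen.add(result.url)
                merged.append(result)
    return merged


provider = FakeProvider({
    "Farfalle license": [SearchResult("License", "https://example.com/license")],
    "Farfalle models": [
        SearchResult("Models", "https://example.com/models"),
        SearchResult("License", "https://example.com/license"),
    ],
})
results = plan_and_search("Farfalle license and Farfalle models", naive_plan, provider)
print([r.title for r in results])  # ['License', 'Models']
```

Because the planner is passed in as a plain function, the same loop works whether sub-queries come from a simple rule like this one or from a language model.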

This flexibility makes Farfalle a compelling tool for developers and researchers who want AI-powered search in an open-source environment.

License

Apache-2.0 License

Resources