Run Ollama AI Model on Your Desktop with this Amazing Free App: Follamac

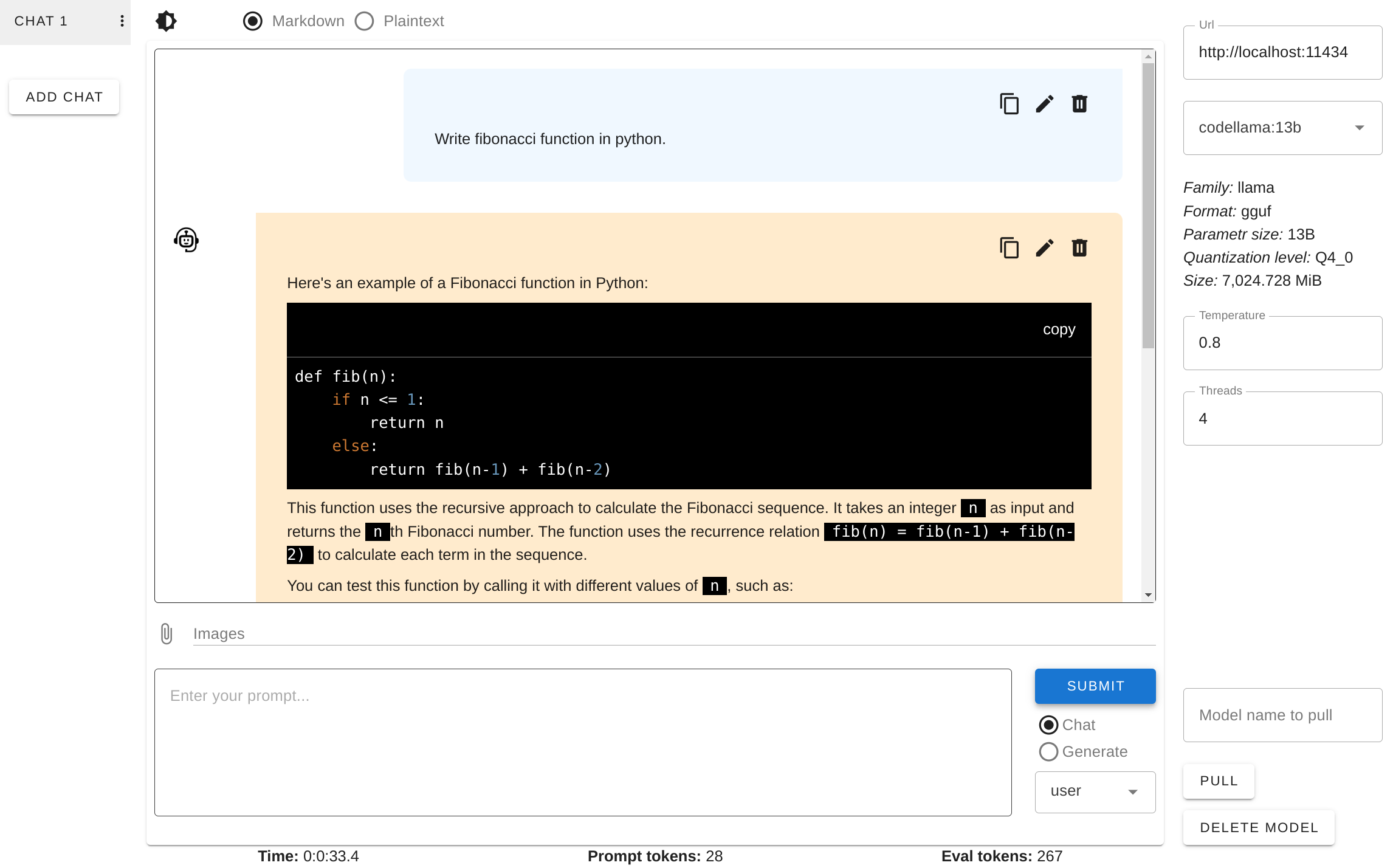

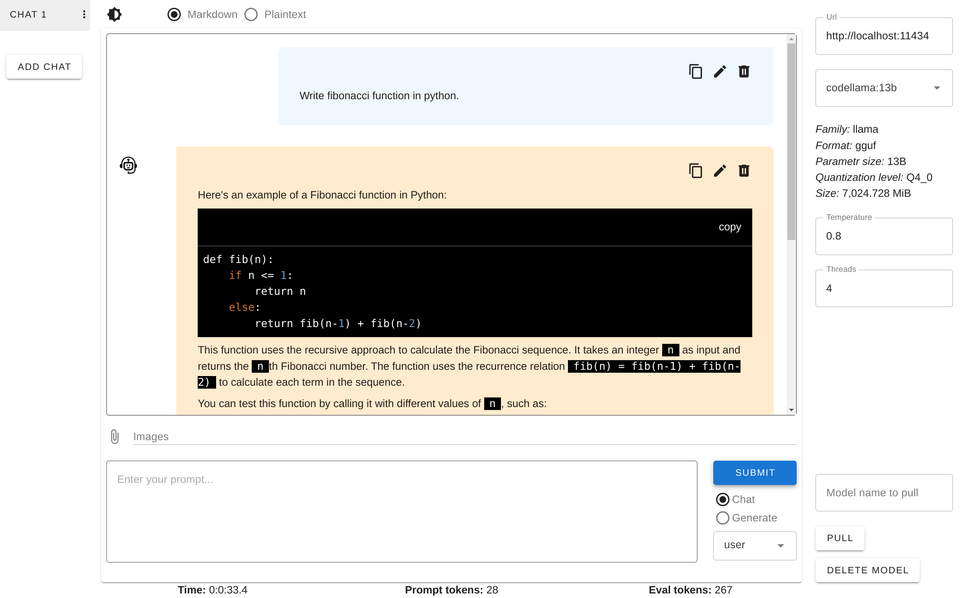

You need Ollama running on localhost with at least one model available. Once Ollama is running, you can pull a model either from Follamac or from the command line. From the command line, run something like `ollama pull llama3`. To pull from Follamac, type llama3 into the "Model name to pull" input box and click the PULL button.
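
The same pull can also be requested over Ollama's local HTTP API, which is what desktop clients typically talk to. The sketch below is a minimal illustration, not Follamac's actual code: it assumes Ollama listens on its default address `http://localhost:11434` and that the `/api/pull` endpoint accepts a `model` field (older Ollama releases used `name`). The helper names `build_pull_request` and `pull_model` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_pull_request(model: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request for Ollama's /api/pull endpoint."""
    # "stream": False asks for a single final JSON object instead of progress lines.
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_model(model: str, base_url: str = OLLAMA_URL) -> dict:
    """Send the pull request and return the decoded JSON status object."""
    with urllib.request.urlopen(build_pull_request(model, base_url)) as resp:
        return json.load(resp)
```

Calling `pull_model("llama3")` blocks until the download finishes, so a real client would more likely stream the progress updates instead.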

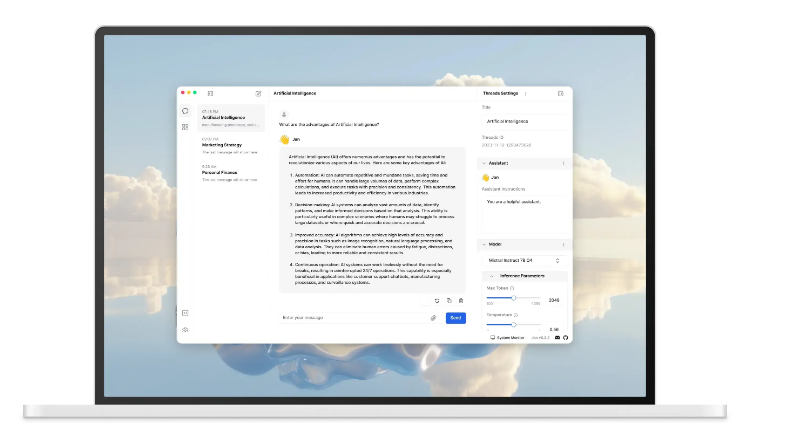

Follamac is a free and open-source desktop application that provides a convenient way to work with Ollama and large language models (LLMs).

The Ollama server must be running. On Linux, you can start it with either `sudo systemctl start ollama.service` or `ollama serve`.
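
To confirm the server is actually up before opening Follamac, you can probe its HTTP endpoint. This is a small sketch that assumes the default address `http://localhost:11434`; the function name `ollama_is_running` is hypothetical. It only checks that the root URL answers with HTTP 200.

```python
import urllib.request


def ollama_is_running(base_url: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """Return True if something answers with HTTP 200 at base_url, else False."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Covers connection refused, timeouts, and HTTP error responses
        # (urllib's URLError and HTTPError both derive from OSError).
        return False
```

If this returns `False`, start the server with one of the commands above and try again.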

You also need at least one model pulled locally. You can pull the latest Mistral model with `ollama pull mistral`, or you can run Follamac and pull the model from there.
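
To see which models are already available locally, you can query Ollama's `/api/tags` endpoint. The sketch below assumes the default address `http://localhost:11434` and a response of the form `{"models": [{"name": ...}, ...]}`; the helper names `parse_model_names` and `list_local_models` are illustrative only.

```python
import json
import urllib.request


def parse_model_names(tags_response: dict) -> list:
    """Extract model names from a decoded /api/tags response."""
    return [entry["name"] for entry in tags_response.get("models", [])]


def list_local_models(base_url: str = "http://localhost:11434") -> list:
    """Fetch /api/tags from a running Ollama server and return the model names."""
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        return parse_model_names(json.load(resp))
```

An empty list from `list_local_models()` means no model has been pulled yet.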

You can also download Ollama clients and prebuilt models for Linux, Windows, and macOS from Ollama.com.

Features

- pulling/deleting models

- sending prompts to Ollama (chat or generate)

- selecting a role for a message in chat mode, and the option to send a system message in generate mode

- basic options (temperature, threads)

- basic info about selected model

- code highlighting

- multiple chats

- editing/deleting chats

- editing/deleting messages

- copying code or a whole message to the clipboard

- light and dark theme (defaults to the system setting)
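
To make the difference between the chat and generate modes concrete, here is a rough sketch of the request bodies a client could send to Ollama's `/api/generate` and `/api/chat` endpoints. It is an illustration under assumptions, not Follamac's implementation: the helper names are made up, the default address `http://localhost:11434` is assumed, the default temperature of 0.8 is an assumed value, and the "threads" setting is assumed to map to Ollama's `num_thread` option.

```python
import json
import urllib.request
from typing import Optional

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_options(temperature: float, threads: Optional[int]) -> dict:
    """Map the two basic settings onto Ollama's "options" object."""
    options = {"temperature": temperature}
    if threads is not None:
        options["num_thread"] = threads
    return options


def build_generate_payload(model, prompt, system=None, temperature=0.8, threads=None) -> dict:
    """Generate mode: one prompt, plus an optional system message."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": build_options(temperature, threads),
    }
    if system is not None:
        payload["system"] = system
    return payload


def build_chat_payload(model, messages, temperature=0.8, threads=None) -> dict:
    """Chat mode: a list of (role, content) pairs, e.g. ("user", "Hi")."""
    return {
        "model": model,
        "messages": [{"role": role, "content": content} for role, content in messages],
        "stream": False,
        "options": build_options(temperature, threads),
    }


def post_json(path: str, payload: dict, base_url: str = OLLAMA_URL) -> dict:
    """POST a JSON payload to a running Ollama server and decode the reply."""
    request = urllib.request.Request(
        f"{base_url}{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)
```

With a server running, `post_json("/api/generate", build_generate_payload("llama3", "Hello"))["response"]` should hold the generated text, while a chat reply is found under `["message"]["content"]` in the `/api/chat` response.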

Platforms

Follamac works on Windows, Linux, and macOS.

License

- MIT License

Resources & Downloads