How to Read Large Text Files in Python

Reading large text files efficiently is a common task in data processing and analysis. Python provides several methods to handle this task effectively. In this blog post, we will explore different approaches to read large text files in Python and discuss their advantages and use cases.

Benefits of Using Python for Reading Large Text Files

Python is a popular programming language for handling large text files due to several advantages:

- Ease of Use: Python provides simple and intuitive syntax, making it easy to read and manipulate text files.

- Rich Ecosystem: Python has a vast ecosystem of libraries and tools for data processing, such as NumPy, pandas, and NLTK, which can be used alongside the file-reading techniques shown here.

- Memory Efficiency: Python offers techniques, such as line-by-line iteration and fixed-size chunked reads (both discussed in this blog post), that read large text files without loading the whole file into memory.

- Platform Independence: Python code is platform-independent, allowing you to read large text files on different operating systems.

Python can read large text files efficiently in several ways. Understanding the different approaches and their trade-offs can help you choose the most suitable method for your specific needs.

Let's go through a step-by-step tutorial with code snippets.

Step 1: Open the File

    # Specify the path to your large text file
    file_path = 'path/to/your/large_text_file.txt'

    # Open the file in read mode; the with block closes it automatically
    with open(file_path, 'r') as file:
        # Your processing logic will go here
        pass
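
Text mode decodes the file's bytes into strings, and when no `encoding` is given Python falls back to a default that can differ between platforms and Python versions. The sketch below passes the encoding explicitly. It assumes the file is UTF-8, which may not hold for your data, and `first_line` is a hypothetical helper introduced only for illustration.

```python
import tempfile
from pathlib import Path


def first_line(file_path, encoding="utf-8"):
    """Return the first line of a text file, without its trailing newline.

    Hypothetical helper. The encoding is an assumption about the file.
    """
    # errors="replace" substitutes U+FFFD for undecodable bytes instead of
    # raising UnicodeDecodeError partway through a long read.
    with open(file_path, "r", encoding=encoding, errors="replace") as file:
        return file.readline().rstrip("\n")


# Demo on a small temporary file standing in for a large one.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "sample.txt"
    sample.write_bytes(b"first line\nsecond line\n")
    print(first_line(sample))
```

Whether to replace undecodable bytes or fail loudly is a design choice: `errors="strict"` (the default) is safer when silent data loss would be a problem.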

Step 2: Process the File Line by Line

    with open(file_path, 'r') as file:
        for line in file:
            # Process each line as needed
            # Example: print each line (strip() removes surrounding
            # whitespace, including the trailing newline)
            print(line.strip())
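
Line-by-line iteration also works when the result is itself too large for memory: each line can be written out as soon as it is read. The following is a minimal sketch of that pattern, assuming UTF-8 input; `copy_matching_lines` and the sample log lines are hypothetical and exist only for this example.

```python
import tempfile
from pathlib import Path


def copy_matching_lines(src_path, dst_path, keep, encoding="utf-8"):
    """Stream src_path into dst_path, keeping lines for which keep(line) is true.

    Hypothetical helper: only one line is held in memory at a time.
    Returns the number of lines written.
    """
    kept = 0
    with open(src_path, "r", encoding=encoding) as src, \
         open(dst_path, "w", encoding=encoding) as dst:
        for line in src:
            if keep(line):
                dst.write(line)  # the line still carries its newline
                kept += 1
    return kept


# Demo on a small temporary file standing in for a large log.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "app.log"
    dst = Path(tmp) / "errors.log"
    src.write_text(
        "INFO start\nERROR disk full\nINFO retry\nERROR timeout\n",
        encoding="utf-8",
    )
    count = copy_matching_lines(src, dst, lambda line: line.startswith("ERROR"))
    print(count)
```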

Step 3: Handle Large Files with Memory Constraints

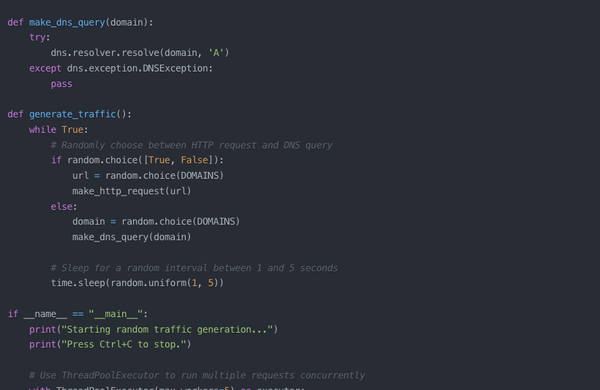

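Iterating line by line already keeps only one line in memory at a time. If the file has very long lines, or no line breaks at all, a single line can still be too large. In that case, you can use a generator to read the file in fixed-size chunks. In text mode, chunk_size counts characters rather than bytes, and a chunk can end in the middle of a line: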

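    def read_large_file(file_path, chunk_size=1024):
        """Yield successive chunks of up to chunk_size characters."""
        with open(file_path, 'r') as file:
            while True:
                chunk = file.read(chunk_size)
                if not chunk:
                    # read() returns an empty string at end of file
                    break
                yield chunk

    # Example usage
    for chunk in read_large_file(file_path):
        # Process each chunk as needed
        # end='' avoids inserting an extra newline after every chunk
        print(chunk, end='')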
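
Because a fixed-size chunk can end in the middle of a record, code that needs whole records has to carry the unfinished tail over to the next chunk. The sketch below shows one way to do that for files whose records are separated by an arbitrary delimiter. `iter_records`, its default chunk size, and the UTF-8 encoding are assumptions made for illustration.

```python
import tempfile
from pathlib import Path


def iter_records(file_path, delimiter="\n", chunk_size=64 * 1024, encoding="utf-8"):
    """Yield delimiter-separated records while reading fixed-size chunks.

    Hypothetical helper: memory use is bounded by chunk_size plus the
    length of the longest single record.
    """
    buffer = ""
    with open(file_path, "r", encoding=encoding) as file:
        while True:
            chunk = file.read(chunk_size)
            if not chunk:
                break
            buffer += chunk
            # Everything before the last delimiter is complete; the final
            # piece may be a partial record, so keep it for the next chunk.
            *complete, buffer = buffer.split(delimiter)
            yield from complete
    if buffer:
        # Trailing record with no delimiter after it.
        yield buffer


# Demo: a single "line" of semicolon-separated records, read 4 characters at a time.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "records.txt"
    sample.write_text("alpha;beta;gamma", encoding="utf-8")
    for record in iter_records(sample, delimiter=";", chunk_size=4):
        print(record)
```

For ordinary newline-terminated files, plain `for line in file` does the same job with less code; this approach is mainly useful when the delimiter is not a newline.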

Step 4: Perform Specific Processing

Now, let's say you want to perform some specific processing, such as counting the number of lines or searching for a specific pattern. Here's an example that counts lines:

    def count_lines(file_path):
        """Count lines without loading the whole file into memory."""
        line_count = 0
        with open(file_path, 'r') as file:
            for line in file:
                line_count += 1
        return line_count

    # Example usage
    lines = count_lines(file_path)
    print(f'Total lines in the file: {lines}')
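
The other task mentioned above, searching for a specific string, follows the same streaming pattern. Here is a minimal sketch that reports the line number of each hit. `find_lines` is a hypothetical helper, and the UTF-8 encoding is an assumption about the input.

```python
import tempfile
from pathlib import Path


def find_lines(file_path, needle, encoding="utf-8"):
    """Yield (line_number, line_text) for every line containing needle.

    Hypothetical helper: a generator, so hits are produced as the file
    is read rather than collected in a list first.
    """
    with open(file_path, "r", encoding=encoding) as file:
        for line_no, line in enumerate(file, start=1):
            if needle in line:
                yield line_no, line.rstrip("\n")


# Demo on a small temporary file standing in for a large one.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "words.txt"
    sample.write_text("alpha\nbeta\nalphabet\n", encoding="utf-8")
    for line_no, text in find_lines(sample, "alpha"):
        print(line_no, text)
```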

Step 5: Advanced Processing - Regular Expressions

If you need to extract specific information using regular expressions, you can use the re module:

    import re

    def extract_patterns(file_path, pattern):
        """Print the matches of pattern found on each line."""
        with open(file_path, 'r') as file:
            for line in file:
                matches = re.findall(pattern, line)
                if matches:
                    # Process the matches as needed
                    print(matches)

    # Example usage
    # Example pattern for the Social Security Number format (NNN-NN-NNNN)
    pattern_to_extract = r'\b\d{3}-\d{2}-\d{4}\b'
    extract_patterns(file_path, pattern_to_extract)
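
If the matches need to be processed further rather than printed, a generator variant can hand them back to the caller one at a time. The following sketch compiles the pattern once and yields each match together with its line number. `iter_matches` and the sample data are hypothetical, and because the file is scanned line by line, a pattern cannot match across a line break.

```python
import re
import tempfile
from pathlib import Path


def iter_matches(file_path, pattern, encoding="utf-8"):
    """Yield (line_number, matched_text) for each regex match in the file.

    Hypothetical helper: matching is done per line, so matches that
    would span two lines are not found.
    """
    regex = re.compile(pattern)  # compile once, reuse for every line
    with open(file_path, "r", encoding=encoding) as file:
        for line_no, line in enumerate(file, start=1):
            for match in regex.finditer(line):
                yield line_no, match.group(0)


# Demo with made-up values in the NNN-NN-NNNN format.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "records.txt"
    sample.write_text(
        "ids: 000-00-0001 and 000-00-0002\nnothing here\nid: 000-00-0003\n",
        encoding="utf-8",
    )
    for line_no, text in iter_matches(sample, r"\b\d{3}-\d{2}-\d{4}\b"):
        print(line_no, text)
```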

Conclusion

Processing large text files efficiently in Python involves reading the file line by line or in chunks to avoid loading the entire file into memory.

Depending on your specific requirements, you can implement different processing logic, such as counting lines, searching for patterns, or extracting information using regular expressions. Adjust the code snippets according to your needs and the nature of your large text file.