How to Run DeepSeek-R1 Locally, and Why It Matters

Introducing DeepSeek-R1: Why It Matters and How to Harness Its Power Locally


DeepSeek-R1 has been making waves in the tech community as an open-source reasoning model released under the MIT license. Because anyone can download and run its weights, it puts advanced language-model capabilities within reach of a much wider audience.

DeepSeek-R1 combines strong performance on reasoning tasks such as math and coding with flexible deployment options, which makes it appealing to developers and hobbyists alike.

The Unique Selling Points of DeepSeek-R1

Unlike many of its proprietary contemporaries, DeepSeek-R1 distinguishes itself through flexible deployment and open-source licensing. Because the weights are freely available, it can run on a range of platforms, including machines with limited or no internet connectivity, which makes it well suited to local use.

Running DeepSeek-R1 locally keeps your data on your own machine, which helps with privacy and security, and it reduces reliance on cloud services, which can be costly and subject to variable latency.

The Impact on the Stock Market and Broader Implications

The release of DeepSeek-R1 sent ripples through the stock market. Shortly after it launched, shares of several AI-related companies, most notably chipmaker Nvidia, fell sharply as investors questioned whether competitive models could be built and run far more cheaply than expected. The episode underscored how central AI technology has become to the broader economy.

For industries ranging from finance to healthcare, the implications are significant: a capable model that runs on-premises can analyze sensitive documents and data without sending them to a third party, support decision-making, and speed up experimentation.

Running DeepSeek-R1 Locally: A Dual-Approach Guide

For those looking to leverage DeepSeek-R1 without the need for constant internet access, here are two methods to run it locally:

Method 1: For Developers - Using Python and Hugging Face Libraries

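- Prerequisites: Python 3.8+, an NVIDIA GPU with CUDA support (strongly recommended; the smallest distilled models can run on a CPU, but slowly), and enough RAM, VRAM, and disk space for the checkpoint you choose. The full DeepSeek-R1 has 671 billion parameters and is impractical on most personal hardware, so local setups typically use one of the distilled versions, which range from 1.5B to 70B parameters.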

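- Installation: Install the necessary Python libraries, such as `torch`, `transformers`, and `sentencepiece`. The model weights can be cloned from the DeepSeek-R1 repository on Hugging Face, or downloaded automatically by `transformers` the first time the model is loaded.

- Execution: Use a short Python script to load the model and generate responses to prompts. Once the weights are downloaded, the model effectively runs offline.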
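What counts as "sufficient" RAM and storage depends mostly on which checkpoint you run and at what precision. A common rule of thumb is weight memory ≈ parameter count × bytes per parameter. The sketch below applies that rule to the distilled DeepSeek-R1 checkpoints and the full 671B model. It is an estimate under stated assumptions, not a measurement: it ignores the KV cache, activations, and runtime overhead, and treats 4-bit quantization as exactly 0.5 bytes per parameter.

```python
"""Back-of-the-envelope weight-memory estimate for DeepSeek-R1 checkpoints.

Assumption: model weights dominate memory use. Real usage is higher because of
the KV cache, activations, and runtime overhead, and common 4-bit GGUF formats
store slightly more than 0.5 bytes per parameter.
"""

# Approximate storage cost of one parameter at each precision.
BYTES_PER_PARAM = {
    "16-bit": 2.0,  # fp16 / bf16, as typically loaded by transformers
    "8-bit": 1.0,
    "4-bit": 0.5,   # idealized 4-bit quantization
}

# Parameter counts in billions: the distilled checkpoints plus the full MoE model.
MODELS = {
    "R1-Distill-Qwen-1.5B": 1.5,
    "R1-Distill-Qwen-7B": 7,
    "R1-Distill-Llama-8B": 8,
    "R1-Distill-Qwen-14B": 14,
    "R1-Distill-Qwen-32B": 32,
    "R1-Distill-Llama-70B": 70,
    "DeepSeek-R1 (full)": 671,
}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Weight footprint in GB (1 GB = 1e9 bytes): params x bytes per param."""
    return params_billion * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    # Print one row per model with an estimate for each precision.
    for name, size in MODELS.items():
        estimates = "  ".join(
            f"{p}: {weight_memory_gb(size, p):7.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name:<22} {estimates}")
```

For example, the 7B distill needs roughly 14 GB for its weights at 16-bit precision but about 3.5 GB at 4-bit, before overhead, which is why quantized builds are the usual choice on consumer hardware.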

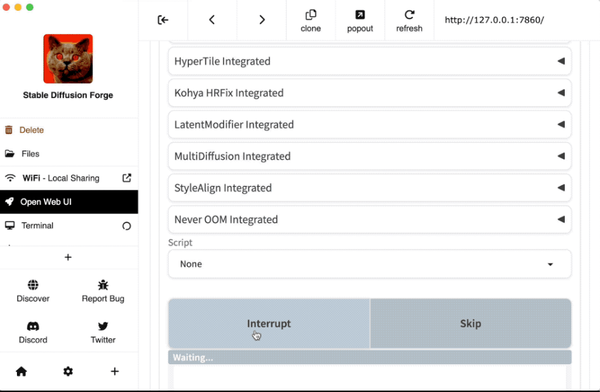
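The execution step can be sketched as follows. This is a minimal example, not an official DeepSeek script: it assumes `torch`, `transformers`, and `accelerate` are installed, and it uses the smallest distilled checkpoint, `deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B`, so it fits on modest hardware. The sampling settings (temperature 0.6, top-p 0.95) follow the values suggested on DeepSeek's model card at the time of writing, and `split_reasoning` is a hypothetical helper that separates the model's `<think>` reasoning from its final answer.

```python
"""Minimal local inference sketch for a distilled DeepSeek-R1 checkpoint.

Assumptions: torch, transformers, and accelerate are installed, and the machine
has enough memory for the chosen checkpoint (the 1.5B one, the smallest).
"""
import sys
from typing import Tuple

MODEL_ID = "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"


def split_reasoning(text: str) -> Tuple[str, str]:
    """Hypothetical helper: split R1-style output into (reasoning, answer).

    Only the closing </think> tag is required, because some chat templates put
    the opening <think> tag into the prompt. If no tag is found, the whole text
    is treated as the answer.
    """
    if "</think>" not in text:
        return "", text.strip()
    reasoning, answer = text.split("</think>", 1)
    return reasoning.replace("<think>", "", 1).strip(), answer.strip()


def generate(prompt: str, max_new_tokens: int = 1024) -> str:
    # Imported lazily so split_reasoning can be used without these packages.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    # device_map="auto" (requires accelerate) places weights on a GPU if available.
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    # The chat template formats the prompt the way the model expects.
    messages = [{"role": "user", "content": prompt}]
    input_ids = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, return_tensors="pt"
    ).to(model.device)
    output = model.generate(
        input_ids,
        max_new_tokens=max_new_tokens,
        do_sample=True,
        temperature=0.6,
        top_p=0.95,
    )
    # Decode only the newly generated tokens, not the echoed prompt.
    return tokenizer.decode(output[0][input_ids.shape[-1]:], skip_special_tokens=True)


if __name__ == "__main__" and len(sys.argv) > 1:
    reasoning, answer = split_reasoning(generate(" ".join(sys.argv[1:])))
    print("Reasoning:\n" + reasoning)
    print("\nAnswer:\n" + answer)
```

Saved as, say, `run_r1.py` (a hypothetical file name), it can be run with `python run_r1.py "What is 17 * 24?"`. The first run downloads the weights; later runs load them from the local Hugging Face cache.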

Method 2: For Non-Coders - Using LM Studio

- Setup: Install LM Studio, a desktop application with a user-friendly interface for downloading and managing AI models.

- Model Loading: Search for DeepSeek-R1 in LM Studio, download a quantized build (typically one of the distilled models, in GGUF format), and load it through the interface.

- Operation: With just a few clicks, start a chat and have the model respond to prompts directly on your desktop.
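Although this route needs no code, LM Studio can also serve the loaded model through a local, OpenAI-compatible HTTP server, which is handy once you want to call the model from your own scripts. The sketch below uses only the Python standard library and assumes the server has been started in LM Studio on its default address, `http://localhost:1234`. `MODEL_NAME` is a hypothetical placeholder; replace it with the identifier LM Studio shows for the model you downloaded.

```python
"""Query a model served by LM Studio's local server (standard library only).

Assumptions: the LM Studio server is running on its default address
(http://localhost:1234), and MODEL_NAME is a hypothetical placeholder.
"""
import json
import sys
import urllib.request

BASE_URL = "http://localhost:1234/v1"
MODEL_NAME = "deepseek-r1-distill-qwen-7b"  # hypothetical identifier; check LM Studio


def build_payload(prompt: str, temperature: float = 0.6) -> dict:
    """Build an OpenAI-style chat completion request body."""
    return {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion response."""
    return response["choices"][0]["message"]["content"]


def ask(prompt: str) -> str:
    request = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(build_payload(prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Long timeout: reasoning models can emit many tokens before answering.
    with urllib.request.urlopen(request, timeout=600) as resp:
        return extract_reply(json.load(resp))


if __name__ == "__main__" and len(sys.argv) > 1:
    print(ask(" ".join(sys.argv[1:])))
```

With the server running, passing a prompt on the command line prints the reply. Depending on your LM Studio settings, the reply may include the model's `<think>` reasoning before the final answer.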

Why Consider Running Locally?

Running DeepSeek-R1 locally offers numerous benefits:

- Privacy and Security: Keeps sensitive data within your local environment, away from external servers.

- Cost Efficiency: Avoids ongoing per-token or per-hour cloud fees; the main costs are your own hardware and electricity.

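- Performance: Running locally removes the network round trip and works without internet bandwidth. Actual generation speed depends on your hardware and the model size: a small distilled model on a good GPU can feel fast, while larger models may respond more slowly than a cloud service.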

DeepSeek-R1: The Future is Local

DeepSeek-R1 exemplifies the shift towards more sustainable and accessible AI, by enabling powerful computing right at your fingertips. Whether you're a developer looking to integrate AI into your projects or a hobbyist eager to explore the capabilities of language models, running DeepSeek-R1 locally offers a promising avenue to tap into the future of technology, maintaining control over your digital environment while pushing the boundaries of what's possible in AI.