LLaMA-Factory - Open-source Fine-Tuning for LLaMA Models


LLaMA-Factory is a powerful open-source framework designed to streamline the training and fine-tuning of LLaMA and other large language models. Built on PyTorch and Hugging Face Transformers, it uses memory optimization and parallelization techniques to handle long-sequence training efficiently on GPUs such as NVIDIA’s A100.

Key features include FlashAttention-2 and LoRA integration, which keep training memory-efficient, especially for large-scale models.
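To give a sense of why LoRA is memory-efficient, here is a minimal arithmetic sketch. It assumes the standard LoRA formulation, in which a frozen weight matrix of shape `d_out x d_in` is paired with two trainable low-rank factors of rank `r`. The function names and the example dimensions (a 4096 x 4096 projection, rank 8) are illustrative assumptions, not values taken from LLaMA-Factory.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole d_out x d_in matrix is updated."""
    return d_in * d_out


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters in a LoRA adapter for one d_out x d_in matrix.

    LoRA learns two factors: A with shape (rank, d_in) and
    B with shape (d_out, rank). The original matrix stays frozen.
    """
    return rank * d_in + d_out * rank


# Illustrative (assumed) sizes: one square projection matrix, rank 8.
d = 4096
rank = 8
full = full_params(d, d)
lora = lora_trainable_params(d, d, rank)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

For these assumed sizes the adapter trains 65,536 parameters instead of 16,777,216 for that matrix, which is where most of the savings in gradients and optimizer state come from.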

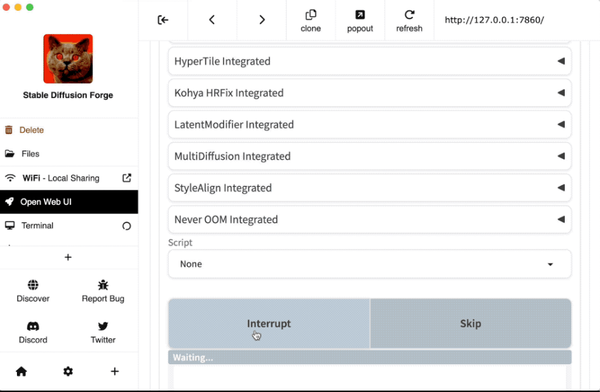

Moreover, LLaMA-Factory includes a web-based UI for easier model management and evaluation, making it suitable for both research and practical applications in large language models.

Features

- Various models: LLaMA, LLaVA, Mistral, Mixtral-MoE, Qwen, Qwen2-VL, Yi, Gemma, Baichuan, ChatGLM, Phi, etc.

- Integrated methods: (Continuous) pre-training, (multimodal) supervised fine-tuning, reward modeling, PPO, DPO, KTO, ORPO, etc.

- Scalable resources: 16-bit full-tuning, freeze-tuning, LoRA and 2/3/4/5/6/8-bit QLoRA via AQLM/AWQ/GPTQ/LLM.int8/HQQ/EETQ.

- Advanced algorithms: GaLore, BAdam, Adam-mini, DoRA, LongLoRA, LLaMA Pro, Mixture-of-Depths, LoRA+, LoftQ, PiSSA and Agent tuning.

- Practical tricks: FlashAttention-2, Unsloth, Liger Kernel, RoPE scaling, NEFTune and rsLoRA.

- Experiment monitors: LlamaBoard, TensorBoard, Wandb, MLflow, etc.

- Faster inference: OpenAI-style API, Gradio UI and CLI with vLLM worker.
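The bit widths listed for QLoRA above translate directly into the memory needed to hold the base model's weights. The sketch below is a rough back-of-the-envelope estimate only: it assumes every parameter is stored at the given bit width and ignores quantization metadata (scales, zero points), activations, gradients, and optimizer state. The function name and the 7B parameter count are illustrative assumptions.

```python
def weight_memory_gib(num_params: float, bits: int) -> float:
    """Approximate memory for model weights alone, in GiB.

    Assumes each parameter occupies exactly `bits` bits; real quantized
    formats add some overhead that this estimate does not include.
    """
    total_bytes = num_params * bits / 8
    return total_bytes / 1024**3


# Illustrative (assumed) model size: 7 billion parameters.
num_params = 7e9
for bits in (16, 8, 4, 2):
    print(f"{bits:>2}-bit: {weight_memory_gib(num_params, bits):.2f} GiB")
```

Halving the bit width halves the estimate, which is why 4-bit QLoRA can fit models on a single GPU that 16-bit full-tuning cannot.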

Supported Models

| Model | Model size | Template |
|---|---|---|
| Baichuan 2 | 7B/13B | baichuan2 |
| BLOOM/BLOOMZ | 560M/1.1B/1.7B/3B/7.1B/176B | - |
| ChatGLM3 | 6B | chatglm3 |
| Command R | 35B/104B | cohere |
| DeepSeek (Code/MoE) | 7B/16B/67B/236B | deepseek |
| Falcon | 7B/11B/40B/180B | falcon |
| Gemma/Gemma 2/CodeGemma | 2B/7B/9B/27B | gemma |
| GLM-4 | 9B | glm4 |
| Index | 1.9B | index |
| InternLM2/InternLM2.5 | 7B/20B | intern2 |
| Llama | 7B/13B/33B/65B | - |
| Llama 2 | 7B/13B/70B | llama2 |
| Llama 3-3.2 | 1B/3B/8B/70B | llama3 |
| LLaVA-1.5 | 7B/13B | llava |
| LLaVA-NeXT | 7B/8B/13B/34B/72B/110B | llava_next |
| LLaVA-NeXT-Video | 7B/34B | llava_next_video |
| MiniCPM | 1B/2B/4B | cpm/cpm3 |
| Mistral/Mixtral | 7B/8x7B/8x22B | mistral |
| OLMo | 1B/7B | - |
| PaliGemma | 3B | paligemma |
| Phi-1.5/Phi-2 | 1.3B/2.7B | - |
| Phi-3 | 4B/14B | phi |
| Phi-3-small | 7B | phi_small |
| Pixtral | 12B | pixtral |
| Qwen (1-2.5) (Code/Math/MoE) | 0.5B/1.5B/3B/7B/14B/32B/72B/110B | qwen |
| Qwen2-VL | 2B/7B/72B | qwen2_vl |
| StarCoder 2 | 3B/7B/15B | - |
| XVERSE | 7B/13B/65B | xverse |
| Yi/Yi-1.5 (Code) | 1.5B/6B/9B/34B | yi |
| Yi-VL | 6B/34B | yi_vl |
| Yuan 2 | 2B/51B/102B | yuan |
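The Template column is easiest to use as a lookup from model family to template name. The sketch below copies a handful of rows from the table above into a dictionary; the helper name `template_for` is hypothetical, and treating `-` as "no dedicated template listed" is an assumption about how to read the table.

```python
from typing import Optional

# A few rows copied from the table; None stands for "-" in the Template column.
TEMPLATES: dict[str, Optional[str]] = {
    "Llama": None,
    "Llama 2": "llama2",
    "Llama 3-3.2": "llama3",
    "Mistral/Mixtral": "mistral",
    "GLM-4": "glm4",
    "Qwen2-VL": "qwen2_vl",
    "OLMo": None,
}


def template_for(model_family: str) -> Optional[str]:
    """Return the template name listed for a model family, or None for "-".

    Raises KeyError for families that were not copied into TEMPLATES,
    so a typo is not silently mistaken for "no template".
    """
    if model_family not in TEMPLATES:
        raise KeyError(f"unknown model family: {model_family!r}")
    return TEMPLATES[model_family]


print(template_for("Llama 3-3.2"))
print(template_for("OLMo"))
```

Keeping unknown families as an error rather than returning `None` makes it harder to pair a chat model with the wrong prompt format by accident.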

License

Apache 2.0 License

Resources

For more details, check out its GitHub page.