Running LLMs as Backend Services: 12 Open-source Free Options - a Personal Journey on Utilizing LLMs for Healthcare Apps


As a medical doctor, developer, and open-source enthusiast, I've witnessed firsthand how Large Language Models (LLMs) are revolutionizing not just healthcare but the entire landscape of software development.

My journey into running LLMs locally began with a simple desire: maintaining patient privacy while leveraging AI's incredible capabilities in my medical practice and side projects.

My Experience with LLMs

When I first discovered LLMs, I was amazed by their potential to assist with everything from medical documentation to research analysis. However, like many healthcare professionals, I was concerned about data privacy and the implications of sending sensitive information to cloud-based services.

This led me down the fascinating path of running LLMs locally as backend services. As someone who codes in their spare time, I found that setting up local LLM instances wasn't just about privacy – it opened up a world of possibilities for creating customized healthcare applications without depending on external APIs.

Why I Choose to Run LLMs Offline

Privacy isn't the only reason I advocate for running LLMs locally. Here's what I've learned from my experience:

- Complete Control: Running models like Llama or GPT-J locally gives me full control over how the AI processes medical information. I can fine-tune the models for specific medical terminology and ensure they adhere to healthcare compliance standards.

- Cost-Effective: While the initial setup requires some investment in hardware, running LLMs locally has saved me significantly compared to pay-per-token cloud services, especially when processing large volumes of medical texts.

- Always Available: In healthcare, we can't afford downtime. Local LLMs ensure I always have access to AI capabilities, even when internet connectivity is unreliable.
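To make the cost argument concrete, here is a rough break-even sketch. Every figure in it is hypothetical: the hardware price, the cloud price per million tokens, and the local running cost are placeholders, so plug in your own numbers. The function name `break_even_tokens` is mine, not part of any library.

```python
def break_even_tokens(hardware_cost: float,
                      cloud_price_per_million: float,
                      local_cost_per_million: float = 0.0) -> float:
    """Return how many tokens must be processed before local hardware pays for itself."""
    # Money saved for every million tokens handled locally instead of in the cloud.
    saving_per_million = cloud_price_per_million - local_cost_per_million
    if saving_per_million <= 0:
        raise ValueError("local running cost must be below the cloud price")
    return hardware_cost / saving_per_million * 1_000_000


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    tokens = break_even_tokens(hardware_cost=2000.0,
                               cloud_price_per_million=10.0,
                               local_cost_per_million=2.0)
    print(f"Break-even after about {tokens:,.0f} tokens")
```

With these placeholder values the hardware pays for itself after roughly 250 million tokens. The calculation ignores setup time and maintenance, so treat it as a first estimate only.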

Setting Up Your Own LLM Backend

Through trial and error, I've found several user-friendly ways to run LLMs across different platforms:

For Windows Users

- I've had great success with Llama.cpp, which runs smoothly even on modest hardware

- The setup process is surprisingly straightforward, perfect for healthcare professionals who might not be tech experts

For Linux Enthusiasts

- Docker containers have been my go-to solution

- Kubernetes orchestration helps when scaling up for larger medical practices

For macOS Users

- Local installations work beautifully on M1/M2 chips

- The performance rivals cloud-based solutions

Real-World Applications in Healthcare

In my practice, I use locally-hosted LLMs for:

- Analyzing patient records while maintaining HIPAA compliance

- Generating preliminary medical reports

- Summarizing research papers

- Creating medical education materials

Tips from My Experience

- Start Small: Begin with lighter models like Llama-2-7B before moving to larger ones

- Monitor Resource Usage: Keep an eye on CPU/GPU utilization, especially when running multiple instances

- Regular Updates: Stay current with the latest open-source LLM developments

- Community Engagement: Join open-source AI communities to share experiences and learn from others
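A quick way to decide how small "small" needs to be is to estimate how much memory the model weights alone will take. The sketch below is simple arithmetic (parameter count times bits per weight). The helper name is my own, and it deliberately ignores the context cache and runtime overhead, so real usage will be somewhat higher.

```python
def estimate_weight_memory_gb(n_params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory needed for the weights alone, in gigabytes (10^9 bytes)."""
    total_bytes = n_params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # A 7-billion-parameter model at common precisions.
    for bits in (16, 8, 4):
        size = estimate_weight_memory_gb(7, bits)
        print(f"7B model at {bits}-bit: ~{size:.1f} GB")
```

For a 7B model this gives roughly 14 GB at 16-bit, 7 GB at 8-bit, and 3.5 GB at 4-bit. Actual quantized files are usually a little larger than the nominal bit width suggests, so leave some headroom.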

The Future of Local LLMs in Healthcare

I believe we're just scratching the surface of what's possible with locally-run LLMs in healthcare. As models become more efficient and hardware more powerful, we'll see more healthcare providers adopting this approach to balance AI capabilities with privacy requirements.


1- Llama.cpp

Llama.cpp: An open-source software library written in C++ that performs inference on various large language models such as Llama. It includes command-line tools and a server with a simple web interface.
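As a minimal sketch of using the bundled server as a backend, the snippet below builds a chat request with only the Python standard library. It assumes a llama.cpp server is already running locally on port 8080 and exposing its OpenAI-style `/v1/chat/completions` route. The base URL, system prompt, and helper names are my own choices for illustration.

```python
import json
import urllib.request


def build_chat_request(prompt: str,
                       base_url: str = "http://localhost:8080") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "messages": [
            {"role": "system", "content": "You are a concise assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, base_url: str = "http://localhost:8080") -> str:
    """Send the request to a running server and return the reply text."""
    request = build_chat_request(prompt, base_url)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("Summarize this note in one sentence: ...")` only works while the server is up. Keeping request building separate from sending makes the payload easy to inspect without a model loaded.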

It supports many models, including (but not limited to):

- LLaMA 🦙

- LLaMA 2 🦙🦙

- LLaMA 3 🦙🦙🦙

- Mistral 7B

- Mixtral MoE

- DBRX

- Falcon

- Chinese LLaMA / Alpaca and Chinese LLaMA-2 / Alpaca-2

- Vigogne (French)

- BERT

- Koala

- Baichuan 1 & 2 + derivations

- Aquila 1 & 2

- Starcoder models

- Refact

- MPT

- Bloom

- Yi models

- StableLM models

2- Compage LLM Backend

Compage LLM Backend is an open-source app that uses FastAPI to generate a fully functional LLM backend for real-time responses, code generation, and more.

It can also generate documents easily with a simple API endpoint.

3- SillyTavern

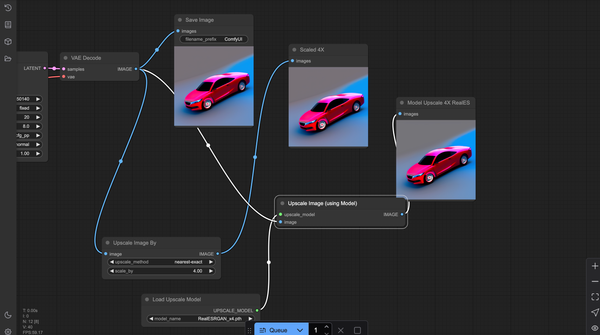

SillyTavern is a locally installed user interface designed to unify interactions with various Large Language Model (LLM) APIs, image generation engines, and TTS voice models. It supports popular LLM APIs, including KoboldAI, NovelAI, OpenAI, and Claude, with a mobile-friendly layout, Visual Novel Mode, lorebook integration, extensive prompt customization, and more.

SillyTavern integrates image generation via Automatic1111 & ComfyUI APIs and offers rich, flexible UI options.

Originally forked from TavernAI in 2023, it has grown through contributions from over 100 developers, making it a popular choice among AI hobbyists.

4- LlamaEdge

The LlamaEdge project makes it easy for you to run LLM inference apps and create OpenAI-compatible API services for the Llama2 series of LLMs locally.

Unlike many projects here, LlamaEdge uses Rust rather than Python. Rust is known for its speed, portability, and security.

5- Backend GPT

The backend-GPT project provides a customizable backend server for GPT-based AI applications, supporting flexible API interactions. It’s designed for easy deployment and scalability across various environments.

6- TensorRT-LLM Backend

The TensorRT-LLM Backend for Triton Inference Server is a high-performance backend specifically designed to accelerate large language model (LLM) deployments.

It optimizes LLM inference using TensorRT, making it ideal for developers and researchers seeking faster, efficient AI model serving on NVIDIA hardware. Perfect for enterprise-grade applications requiring real-time AI responses.

7- Auto-Backend - An ✨automagical✨ Backend-DB solution powered by LLM

Auto-Backend is a self-hosted Express.js/MongoDB Backend-Database library that generates the entire backend service at runtime, powered by an LLM.

It infers business logic based on the name of the API call and connects to a hosted MongoDB database.

8- Agent-LLM (Large Language Model)

Agent-LLM is an AI automation platform for managing adaptable, multi-provider language models with plugins for diverse commands like web browsing. Designed for Docker or VMs, it offers full terminal access without restrictions.

9- 🦾 OpenLLM: Self-Hosting LLMs Made Easy

OpenLLM lets developers run open-source and custom LLMs as OpenAI-compatible APIs with one command. It includes a chat UI, advanced inference backends, and tools for seamless cloud deployment using Docker, Kubernetes, and BentoCloud.

10- ChatGPT/LLM Toolkit Backend

The ChatGPT/LLM Toolkit Backend repository enables hosting a custom backend for the ChatGPT/LLM Toolkit Bubble plugin.

The app setup requires an HTTPS-configured web server and Docker Compose to manage streaming and data-processing services.

11- LLamaSharp

LLamaSharp is a cross-platform library for running 🦙LLaMA/LLaVA models (and others) on your local device.

Because it is based on llama.cpp, inference with LLamaSharp is efficient on both CPU and GPU.

12- RayLLM

RayLLM (formerly known as Aviary) is an LLM serving solution that makes it easy to deploy and manage a variety of open source LLMs, built on Ray Serve.

Features

- Providing an extensive suite of pre-configured open source LLMs, with defaults that work out of the box.

- Supporting Transformer models hosted on Hugging Face Hub or present on local disk.

- Simplifying the deployment of multiple LLMs

- Simplifying the addition of new LLMs

- Offering unique autoscaling support, including scale-to-zero.

- Fully supporting multi-GPU & multi-node model deployments.

- Offering high performance features like continuous batching, quantization and streaming.

- Providing a REST API that is similar to OpenAI's, making it easy to migrate and cross-test between them.

- Supporting multiple LLM backends out of the box, including vLLM and TensorRT-LLM.
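Streaming from an OpenAI-style REST API usually arrives as server-sent events. Here is a small sketch of how a client could stitch those chunks back into text. It assumes the backend follows OpenAI's streaming format (`data: {...}` lines ending with `data: [DONE]`), which I have not verified for RayLLM specifically. The function name and sample lines are made up for illustration.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style server-sent event lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and anything that is not a data event
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # the server signals the end of the stream
        chunk = json.loads(data)
        choices = chunk.get("choices") or []
        if not choices:
            continue
        text = choices[0].get("delta", {}).get("content")
        if text:
            yield text


# Hand-written sample lines standing in for a real HTTP response body.
sample = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    '',
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    'data: [DONE]',
]

if __name__ == "__main__":
    print("".join(iter_stream_text(sample)))  # prints "Hello"
```

In a real client the `lines` argument would come from iterating over the decoded HTTP response, so fragments can be shown to the user as they arrive.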

Closing Thoughts

Running LLMs as backend services isn't just about technical achievement – it's about empowering professionals to use AI responsibly while maintaining control over sensitive data. Whether you're a healthcare provider, developer, or just privacy-conscious, local LLM deployment offers a powerful solution for leveraging AI capabilities while keeping data secure.

Remember, the journey to setting up your own LLM backend might seem daunting at first, but the benefits far outweigh the initial learning curve. Start small, experiment often, and don't hesitate to reach out to the open-source community for guidance.

Stay curious, and happy coding! Let's continue pushing the boundaries of what's possible with ethical, private, and powerful AI solutions.