Harness the Power of AI: Revolutionize Your Internal Search with LLM Knowledge Base

LLM Knowledge Base - Index Your Local Files and Docs for AI-powered Internal Search


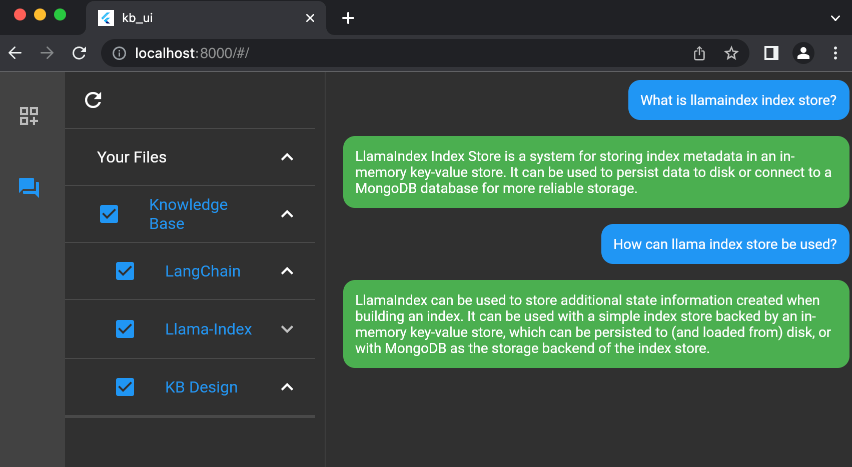

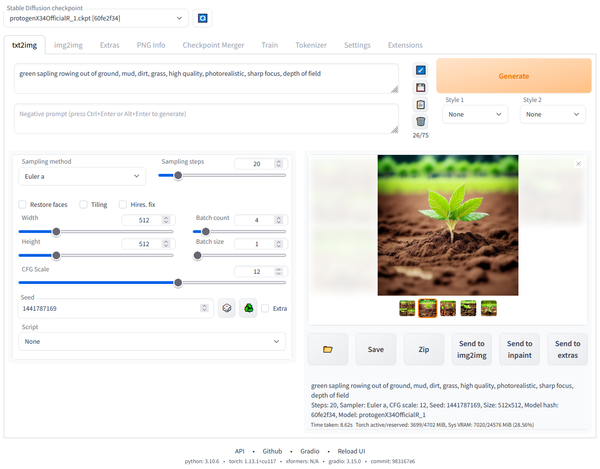

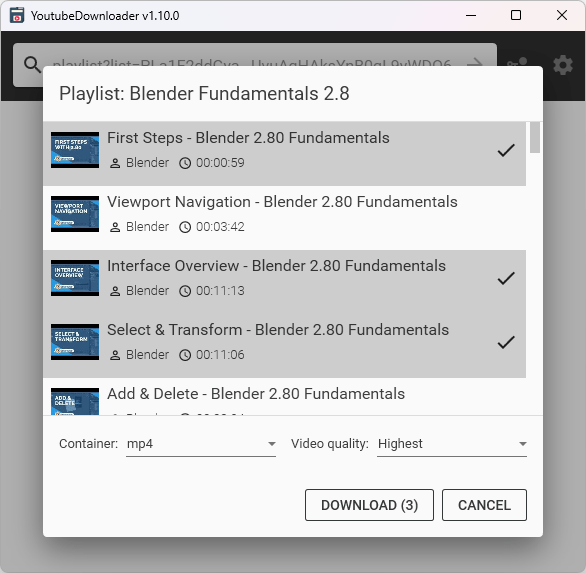

The LLM Knowledge Base is a self-hosted solution for internal search. It provides an interface for indexing your data and querying it in natural language, which makes finding specific information in your datasets straightforward.

Full-stack and Self-hosted

This project is a full-stack application, with a client, a server, and databases, that can be set up entirely on your local machine. It currently uses OpenAI's GPT-3.5 as its language model.

Benefits

The LLM Knowledge Base offers several benefits. Firstly, it allows for the efficient organization and indexing of data, which can significantly improve the speed and accuracy of internal searches. This feature can be particularly beneficial for businesses with large amounts of data, where finding specific information can be like finding a needle in a haystack.

Secondly, the project's use of natural language queries simplifies the process of searching for data. Rather than having to use complex query languages, users can ask questions in everyday language, making the tool more accessible to a wider range of users, regardless of their technical skills.

Another key benefit is its use of Docker and Docker Compose for setup and deployment. This means that the project can be easily packaged and distributed across various environments, aiding in consistency and reducing potential issues related to discrepancies between development and production environments.

Finally, with the capability to ingest your own data, the LLM Knowledge Base can be tailored to your specific needs. This feature, coupled with the generation of a title and summary for each piece of data, ensures a customized and streamlined user experience.

In conclusion, the LLM Knowledge Base is a versatile tool that can significantly enhance data management and search processes. Its benefits in terms of organization, accessibility, distribution, and customization make it an invaluable asset for businesses and individuals seeking to optimize their data search and retrieval processes.

Design

The project is a full-stack application that uses Docker Compose to manage containers for a Flutter client, a Flask server, a MongoDB database, and a Weaviate vector store.
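To give a feel for what the vector store contributes to natural-language search, here is a minimal, self-contained sketch. It is not the project's code: `ToyVectorStore`, `embed`, and the sample documents are hypothetical, and a simple word-count vector stands in for the learned embeddings a real setup with Weaviate and an LLM would use. The idea is the same, though: index text as vectors, then rank documents by similarity to the question.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store; it only illustrates the indexing/query flow."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, doc_id: str, text: str) -> None:
        # Indexing: convert the text to a vector and keep it with its id.
        self._items.append((doc_id, embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        # Querying: embed the question and return the k most similar documents.
        q = embed(query)
        scored = [(doc_id, cosine(q, vec)) for doc_id, vec in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


store = ToyVectorStore()
store.add("vacation-policy", "Employees accrue vacation days every month and request leave through the HR portal.")
store.add("vpn-setup", "Install the VPN client and sign in with your company account to reach internal services.")
store.add("expenses", "Submit travel expenses with receipts within thirty days of the trip.")

results = store.search("How do I request vacation leave?", k=2)
print(results[0][0])
```

In this toy version the question matches the vacation document because they share words. A real embedding model can also match paraphrases that share no words at all, which is why a dedicated vector store is worth running.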

Prerequisites

Ensure you have the following before you start:

- OpenAI API key: The project currently uses OpenAI GPT-3.5, so you need an OpenAI API key. You can create one from your OpenAI account.

- Docker: Install from the official Docker page

- Docker Compose: Install from the official Docker page
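If you want to confirm the prerequisites before starting, a small check like the following can help. This is only a sketch: the `OPENAI_API_KEY` environment variable name is an assumption (it is the conventional name, but the project may read the key from somewhere else), and `missing_prerequisites` is a hypothetical helper, not part of the project.

```python
import os
import shutil
import subprocess


def has_compose(which=shutil.which, run=subprocess.run) -> bool:
    """Return True if either the standalone binary or the `docker compose` plugin is available."""
    if which("docker-compose") is not None:
        return True
    if which("docker") is None:
        return False
    try:
        result = run(["docker", "compose", "version"], capture_output=True, check=False)
        return result.returncode == 0
    except OSError:
        return False


def missing_prerequisites(env=None, which=shutil.which, run=subprocess.run) -> list:
    """List the prerequisites that appear to be missing on this machine."""
    env = os.environ if env is None else env
    missing = []
    # Assumption: the API key is supplied through OPENAI_API_KEY.
    if not env.get("OPENAI_API_KEY"):
        missing.append("OpenAI API key")
    if which("docker") is None:
        missing.append("Docker")
    if not has_compose(which, run):
        missing.append("Docker Compose")
    return missing


if __name__ == "__main__":
    problems = missing_prerequisites()
    if problems:
        print("Missing: " + ", ".join(problems))
    else:
        print("All prerequisites found.")
```

The `env`, `which`, and `run` parameters exist only so the check can be exercised without touching the real machine state. Called with no arguments, the function inspects the current environment.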

License

Apache-2.0 License