14 Best Open-Source Tools to Run LLMs Offline on macOS: Unlock AI on M1, M2, M3, and Intel Macs

Running Large Language Models (LLMs) offline on your macOS device is a powerful way to leverage AI technology while maintaining privacy and control over your data. With Apple's M1, M2, and M3 chips, as well as Intel Macs, users can now run sophisticated LLMs locally without relying on cloud services.

Whether you're developing an AI assistant, building custom applications, or conducting research, offline access to LLMs offers speed, flexibility, and enhanced privacy.

Benefits of Running LLMs Offline on macOS

- Data Privacy: The primary advantage of offline LLMs is data privacy. All processing occurs locally, keeping sensitive information on your device—crucial for developers working with personal or confidential data.

- Responsive Performance: Apple's M1, M2, and M3 chips can run many small and mid-sized models at interactive speeds, and local inference does not depend on internet speed or server availability.

- Independence from Cloud Services: Offline LLMs eliminate reliance on external cloud services or APIs, reducing ongoing costs and mitigating risks associated with service outages.

- Customization: Running LLMs offline gives you full control over the model and its configuration, allowing for tailored solutions that fit your project's specific needs.

- Reduced Latency: Local processing eliminates the need to communicate with remote servers, reducing latency. This is particularly beneficial for real-time applications like AI assistants or interactive chatbots.

Running LLMs offline on macOS gives developers, researchers, and enthusiasts powerful capabilities for AI-driven applications while maintaining privacy, speed, and control. Below is a closer look at 14 free, mostly open-source solutions that can help you build your own AI assistant on your Mac.

Open-source Free Solutions to run LLMs on macOS

1- LLMFarm

LLMFarm is an iOS and macOS app for working with large language models (LLMs).

Key features include:

- Support for various LLM inferences and sampling methods

- Compatibility with macOS 13+ and iOS 16+

- Metal support (except on Intel Macs)

- LoRA adapters, fine-tuning, and export capabilities

- Apple Shortcuts integration

The app supports numerous LLM architectures and multimodal models. It offers multiple sampling methods for text generation.
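LLMFarm's own sampler implementations are not reproduced here. As a generic illustration of what a "sampling method" does at each generation step, here is a minimal Python sketch of two common techniques, temperature scaling and top-k filtering, applied to a list of raw token scores (logits). The function name, defaults, and toy logits are hypothetical.

```python
import math
import random

def sample_top_k(logits, k=40, temperature=0.8, rng=random):
    """Sample a token id from raw logits with temperature scaling and top-k filtering.

    Assumes k >= 1. Hypothetical helper for illustration only.
    """
    if temperature <= 0:
        # Treat zero temperature as greedy decoding: always take the highest logit.
        return max(range(len(logits)), key=lambda i: logits[i])
    # Keep only the k token ids with the highest logits.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Temperature-scaled softmax over the survivors; subtracting the max avoids overflow.
    scaled = [logits[i] / temperature for i in top]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    # random.choices normalizes the weights, so they do not need to sum to 1.
    return rng.choices(top, weights=weights, k=1)[0]

if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed for reproducible draws
    logits = [2.0, 1.5, 0.3, -1.0]  # toy scores for a 4-token vocabulary
    print(sample_top_k(logits, k=2, temperature=0.7, rng=rng))  # prints 0 or 1
```

Lower temperatures sharpen the distribution toward the most likely token, while a smaller k discards unlikely tokens entirely; apps typically expose both as adjustable settings.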

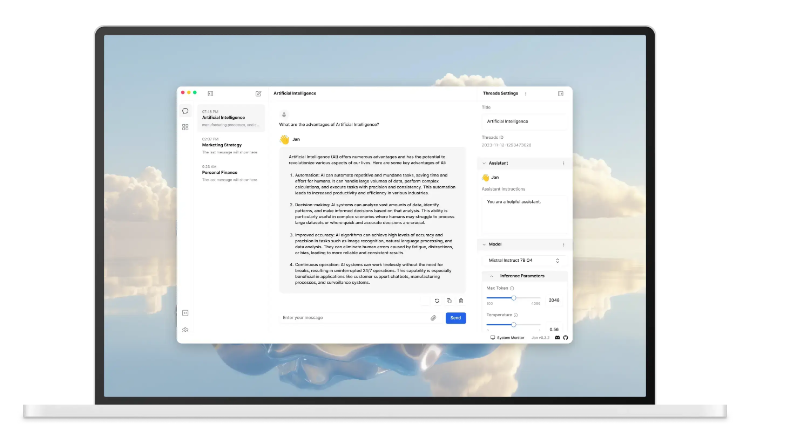

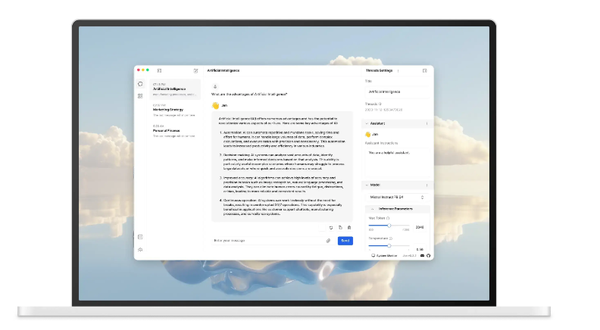

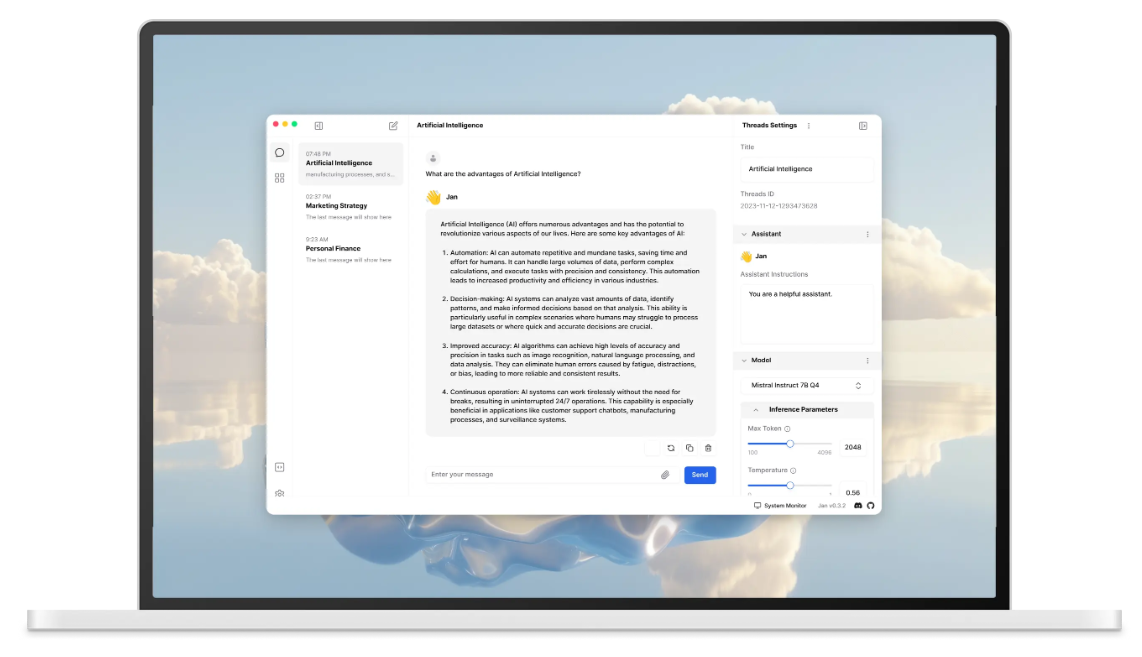

2- Jan

Jan is an innovative open-source application that serves as an alternative to ChatGPT.

Features:

- It runs entirely offline on your personal computer, ensuring privacy and local processing.

- Jan supports multiple engines, including llama.cpp and TensorRT-LLM.

- It's designed to be versatile, capable of running on various hardware configurations from personal computers to multi-GPU clusters.

Jan is compatible with a wide range of systems and architectures:

- NVIDIA GPUs (fast)

- Apple M-series chips (fast)

- Intel-based Macs

- Linux (Debian-based)

- Windows x64

This broad compatibility makes Jan accessible to users across different platforms and hardware setups, offering a flexible and powerful offline alternative to cloud-based AI chatbots.

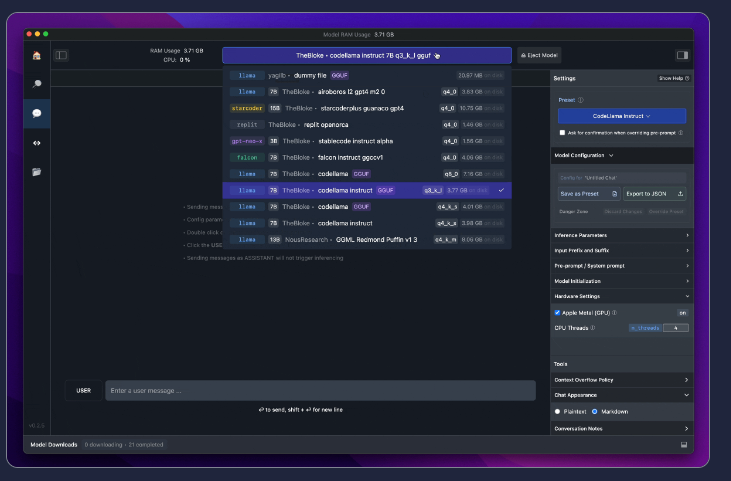

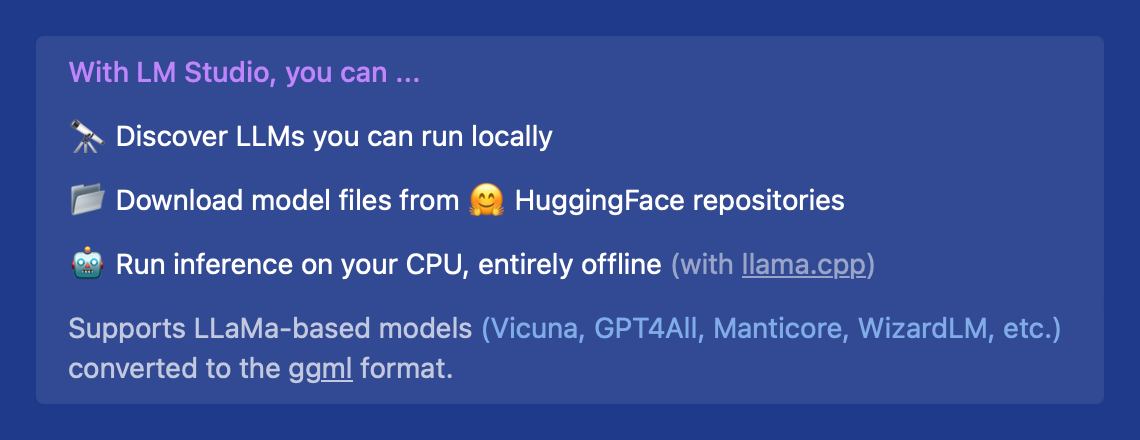

3- LM Studio

LM Studio is a free desktop application that enables users to run Large Language Models (LLMs) entirely offline on their local machines. It provides privacy, control, and efficiency, allowing individuals and businesses to harness LLMs without relying on cloud services or internet connections. Unlike most tools on this list, the LM Studio application itself is closed-source.

The platform supports Linux, macOS, and Windows, making it accessible to a wide range of users who want to leverage LLMs without compromising data security or performance.

Key Features of LM Studio:

- Local LLM Execution: Run LLMs on your own machine, ensuring data privacy and faster performance without the need for internet connectivity or cloud services.

- Cross-Platform Compatibility: Works on macOS, Windows, and Linux, making it versatile and easy to integrate into various environments.

- Customization Options: Adjust inference settings such as context length, temperature, and GPU offload for each model, which is useful for developers, researchers, and AI enthusiasts tuning their local setups.

- Diverse Model Support: Compatible with various popular models, allowing users to select the right one for tasks such as language generation, text summarization, and other AI-driven applications.

- Cloud Independence: Gain full control over AI tools without relying on external servers, reducing costs and minimizing security risks.

- Open-Source Tooling: The `lms` command-line tool and the LM Studio SDKs are open source, so developers can inspect them and script the app, even though the desktop application's code is not public.

4- GPT4All

GPT4All by Nomic AI is an open-source project that enables users to run Large Language Models (LLMs) locally on their devices. This initiative offers an accessible, privacy-focused solution for those wanting to harness the power of LLMs without relying on cloud-based services. GPT4All proves especially valuable for developers, researchers, and AI enthusiasts seeking a cost-effective, private, and customizable AI solution.

GPT4All offers a robust, flexible, and secure way to use LLMs on local hardware. This gives users full control over their data and AI processes. Whether you're developing AI applications or conducting research, GPT4All provides an open-source solution that prioritizes privacy and performance.

Features

- Local Model Execution: GPT4All enables users to run advanced language models on their own hardware, ensuring data privacy and eliminating the need for external APIs or cloud services.

- Cross-Platform Compatibility: The platform works on macOS, Windows, and Linux, making it accessible to users across different operating systems.

- Open-Source and Customizable: As an open-source project, GPT4All lets developers access, modify, and enhance the source code to suit specific applications.

- Wide Model Support: GPT4All offers various model architectures and pre-trained models, allowing users to select the best fit for their natural language processing tasks.

- Offline Functionality: By running entirely offline, GPT4All provides a secure and cost-effective alternative to cloud-based LLMs, ideal for privacy-conscious users or those with limited internet access.

- Active Community: As an open-source project, GPT4All benefits from a growing community of contributors who continually expand its capabilities and offer support.

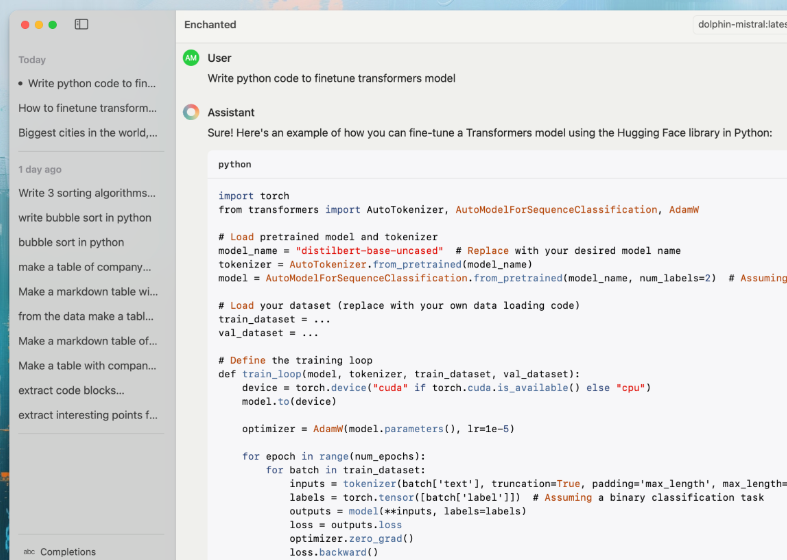

5- Enchanted

Enchanted is an open-source, Ollama-compatible app for macOS, iOS, and visionOS. It offers an elegant interface for working with privately hosted models like Llama 2, Mistral, Vicuna, Starling, and more.

Think of it as a ChatGPT-style UI that connects to your private models. Enchanted aims to provide an unfiltered, secure, private, and multimodal experience across all your devices in the Apple ecosystem (macOS, iOS, Watch, Vision Pro).

Key Features:

- Enhanced Offline Functionality: All features operate seamlessly without an internet connection

- Intuitive User Interface: Toggle between Dark and Light modes for optimal viewing comfort

- Advanced Conversation Management: Edit message content, switch models mid-conversation, and delete individual or all conversations

- Robust Privacy Features: Conversation history is stored securely on your device and included in each API call to your Ollama server, so the model keeps the context of earlier messages

- Multimodal Input Support: Utilize voice prompts and attach images to enhance your queries

- Customizable System Prompts: Tailor the AI's behavior by specifying system prompts for each conversation

- Rich Text Rendering: Markdown support for clear display of tables, lists, and code blocks

- Accessibility Features: Text-to-Speech functionality for auditory content consumption

- Streamlined Navigation: Quick access via macOS Spotlight panel (Ctrl ⌘ K)
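Enchanted talks to an Ollama server, and sending the stored history with each request is what lets the model "remember" earlier turns. The sketch below is not Enchanted's code; it is a minimal Python illustration of such a request, assuming Ollama's `/api/chat` endpoint on its default local port 11434. The helper names and the model name `llama2` are illustrative assumptions.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"

def build_chat_payload(model, system_prompt, history, user_message):
    """Assemble an /api/chat request body that carries the whole conversation.

    history is a list of earlier turns, e.g. {"role": "user", "content": "..."}.
    """
    messages = []
    if system_prompt:
        # Per-conversation system prompt, similar in spirit to Enchanted's setting.
        messages.append({"role": "system", "content": system_prompt})
    messages.extend(history)  # earlier user/assistant turns, oldest first
    messages.append({"role": "user", "content": user_message})
    return {"model": model, "messages": messages, "stream": False}

def send_chat(payload, url=OLLAMA_CHAT_URL):
    """POST the payload to a running Ollama server and return the reply text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    # With "stream": False, Ollama returns a single JSON object with the reply.
    return body["message"]["content"]
```

For example, `send_chat(build_chat_payload("llama2", "Be brief.", history, "And in French?"))` would let the model answer a follow-up that only makes sense in light of the earlier turns in `history`.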

6- LocalPilot

LocalPilot is an open-source AI tool that functions as a local AI copilot, offering intelligent assistance without relying on cloud-based services. This project allows users to run an AI-powered copilot on their own machines, ensuring privacy, data control, and freedom from internet dependency.

LocalPilot caters to developers, researchers, and AI enthusiasts who need a privacy-focused, high-performance AI copilot for tasks like coding, content generation, and more.

7- Ava PLS

Ava PLS is an open-source desktop application that runs language models directly on your computer. It empowers users to perform various natural language processing tasks without relying on cloud-based services, ensuring complete control over their data. With Ava PLS, users can execute a wide range of language-based tasks—such as text generation, grammar correction, rephrasing, and summarization—while maintaining privacy and flexibility.

Ava PLS offers a powerful solution for those who want to use language models locally on their machines, providing both privacy and a wide range of language processing capabilities. Whether you're generating text, correcting grammar, or summarizing content, Ava PLS is an accessible and secure tool for language tasks.

Key Features

- Local Language Model Execution: Ava PLS runs language models entirely offline, offering a privacy-focused solution without the need for internet or external cloud services.

- Multi-task Language Processing: The application supports several language tasks, including text generation, grammar correction, rephrasing, summarization, and data extraction, making it versatile for various use cases.

- Open-Source and Customizable: As an open-source tool, Ava PLS allows developers to access the code, modify it, and customize the application to suit specific needs.

- Cross-Platform Compatibility: Ava PLS works across major operating systems—including macOS, Windows, and Linux—providing flexibility for a wide range of users.

- Privacy and Data Control: Since the language models run locally, users have full control over their data, making Ava PLS ideal for those who prioritize data security.

- User-Friendly Interface: The application features a straightforward, intuitive interface that makes it easy for users to access and run different language tasks without extensive technical knowledge.

8- MLX-LLM: Lightweight Large Language Model Inference Framework

MLX-LLM is a lightweight, open-source framework for efficient local execution of large language models (LLMs). It simplifies LLM deployment and inference, offering a user-friendly approach to tasks like text generation and summarization—all without cloud dependence.

This makes it perfect for developers and researchers who need offline LLM capabilities and complete data control.

MLX-LLM offers a streamlined solution for local LLM execution, combining efficiency, versatility, and privacy. Whether you're tackling text generation, summarization, or data extraction, MLX-LLM provides a robust toolkit for offline AI operations.

Key Features of MLX-LLM

- Lightweight and Efficient: MLX-LLM enables local LLM execution, even on modest hardware, without sacrificing power or efficiency.

- Support for Multiple LLMs: The framework accommodates various pre-trained models, including:

- GPT-2

- BERT

- T5

- RoBERTa

- Easy Model Integration: Users can seamlessly incorporate new models, enhancing flexibility for diverse use cases and custom configurations.

- Apple Silicon Focus: MLX-LLM is built on Apple's MLX array framework, which targets Apple silicon, so it runs on M1, M2, and M3 Macs but not on Intel Macs.

- Privacy-Focused: By processing data locally, MLX-LLM ensures complete privacy and control over sensitive information.

- Task Versatility: The framework handles a wide array of language tasks, from text generation and summarization to data extraction and translation.

- Optimized for Inference: MLX-LLM delivers fast, efficient LLM inference while minimizing resource consumption.

- Open-Source and Customizable: As an open-source project, MLX-LLM invites developers to tailor the framework to their needs and contribute improvements to the community.

9- FreeChat

FreeChat is a native LLM (Large Language Model) application for macOS that allows users to chat with AI models locally on their Mac without requiring an internet connection. Here are the key features and characteristics of FreeChat:

- Runs completely locally, ensuring privacy and offline functionality

- Compatible with llama.cpp models, allowing users to try different AI models

- Customizable personas and expertise through system prompt modifications

- Open-source project, promoting transparency and community contributions

- Focuses on simplicity and ease of use, requiring no configuration for basic usage

- Available through TestFlight beta, Mac App Store, or can be built from source

The app aims to make open, local, and private AI models accessible to a wider audience, emphasizing performance, simplicity, and user privacy.

10- 🦾 OpenLLM: Self-Hosting LLMs Made Easy

OpenLLM by BentoML is an open-source platform that simplifies the deployment and management of Large Language Models (LLMs). It offers a streamlined solution for developers to serve, fine-tune, and deploy LLMs efficiently across various environments.

OpenLLM aims to make LLMs more accessible by providing user-friendly APIs and integration tools that support diverse AI applications, including text generation, summarization, and question answering.

OpenLLM provides a powerful, flexible, and accessible framework for developers to deploy and manage large language models, enhancing AI-driven solutions with minimal effort.

Key Features

- Easy LLM Deployment: OpenLLM simplifies the process of deploying and serving LLMs, allowing developers to set up models in various environments with minimal effort.

- Integration with BentoML: Built on BentoML, OpenLLM harnesses its deployment orchestration capabilities, ensuring a seamless experience for model serving, scaling, and management.

- Support for Popular LLMs: OpenLLM supports a wide range of popular LLMs, offering flexibility for diverse AI and machine learning projects.

- Fine-Tuning and Customization: Developers can fine-tune pre-trained LLMs using OpenLLM to tailor them for specific applications, enhancing model customization options.

- Scalable and Flexible: With support for both cloud and on-premise environments, OpenLLM is highly scalable, making it suitable for enterprise applications and individual projects alike.

- API-Driven: The platform features robust APIs that enable easy integration of LLMs into applications, powering AI-driven features such as chatbots, text analysis, and more.

- Open-Source: As an open-source platform, OpenLLM empowers the community to contribute, customize, and extend its capabilities to meet diverse requirements.

11- llamafile

llamafile is an open-source project from Mozilla-Ocho that lets you distribute and run a large language model as a single executable file. A llamafile bundles the model weights together with a llama.cpp-based inference engine, and because it is built with Cosmopolitan Libc, the same file runs on several operating systems and CPU architectures without installation.

On a Mac, getting started typically comes down to downloading a `.llamafile`, marking it executable with `chmod +x`, and launching it from Terminal to start chatting with the model locally.

Key Features of llamafile

- Single-File Distribution: Model weights and the inference engine ship as one executable, so there are no dependencies to install or configure.

- Built on llama.cpp: Inference is powered by llama.cpp, and llamafile can also load separate GGUF weight files instead of embedded ones.

- Local Chat and API Server: llamafile can serve a browser-based chat interface and an OpenAI-compatible API from your own machine.

- Cross-Platform Compatibility: A single llamafile runs on macOS, Windows, Linux, and BSD systems, on both x86-64 and ARM64 processors, which covers both Intel and Apple silicon Macs.
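In server mode, llamafile accepts OpenAI-style chat requests over HTTP, and LM Studio's local server does the same. The following is a minimal sketch using only the Python standard library. It assumes a llamafile server on its default port 8080 (base URL `http://localhost:8080/v1`); the helper names and the placeholder model name `local` are assumptions to adjust for your setup.

```python
import json
import urllib.request

# Assumption: a llamafile is running in server mode on its default port (8080).
DEFAULT_BASE_URL = "http://localhost:8080/v1"

def build_request(prompt, base_url=DEFAULT_BASE_URL, model="local", temperature=0.7):
    """Build an OpenAI-style chat completion request for a local server.

    "local" is a placeholder model name; set it to whatever your server expects.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Local servers typically ignore the key, but OpenAI-style clients send one.
            "Authorization": "Bearer no-key",
        },
        method="POST",
    )

def ask(prompt, **kwargs):
    """Send the request to the running server and return the first choice's text."""
    with urllib.request.urlopen(build_request(prompt, **kwargs)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("Summarize what a llamafile is in one sentence."))` prints the model's reply. For LM Studio, start its local server, pass `base_url="http://localhost:1234/v1"`, and set `model` to the identifier of the loaded model.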

12- AnythingLLM

AnythingLLM by Mintplex Labs is an open-source platform that empowers developers and organizations to run their own self-hosted language model (LLM) infrastructure. It enables users to create, manage, and integrate LLM-based applications, such as chatting with their own documents, while maintaining control over their data. It is available both as a desktop app (including for macOS) and as a Docker-based server. By offering an all-in-one solution for deploying, managing, and scaling LLMs, AnythingLLM provides a flexible and secure alternative to cloud-based LLM services.

AnythingLLM offers a robust and flexible framework for running language models on self-hosted infrastructure, making it an excellent solution for businesses and developers seeking privacy, scalability, and customization.

Key Features of AnythingLLM:

- Self-Hosted LLM Infrastructure: Enables users to run LLMs on their own servers, ensuring data privacy and control—ideal for companies or developers with sensitive information.

- Multi-Model Support: Accommodates various large language models, allowing users to choose and switch between different LLMs based on their specific needs.

- Customizable Pipelines: Offers configurable workflows and pipelines, enabling developers to create tailored language model operations for their applications.

- Easy Integration: Provides seamless integration with existing software through APIs, allowing developers to embed LLM capabilities into applications such as chatbots and content generation tools.

- Scalability: Designed to handle growing demands, AnythingLLM can manage and scale LLMs as data and processing requirements increase, offering flexibility for both small teams and large enterprises.

- Open-Source: As an open-source project, AnythingLLM invites developers to modify the platform and contribute improvements, fostering community-driven innovation and adaptability.

- Privacy-Focused: With self-hosting, all LLM data processing occurs on the user's own servers, ensuring complete control and confidentiality of sensitive information.

13- SuperAdapters

SuperAdapters is an open-source framework that streamlines adapter-based fine-tuning for large language models (LLMs). This modular system enables developers to fine-tune pre-trained models using smaller, specialized adapters, reducing computational costs while enhancing flexibility and scalability.

By simplifying the integration, management, and swapping of adapters for various NLP tasks, SuperAdapters boosts the efficiency of custom LLM development and deployment.

SuperAdapters offers an efficient, flexible, and cost-effective approach to fine-tuning large language models. It empowers developers to enhance model performance across various tasks without requiring extensive computational resources.

Key Features of SuperAdapters:

- Adapter-Based Fine-Tuning: Supports fine-tuning large pre-trained models with lightweight adapters, minimizing the need for full model retraining.

- Modular Design: Built with a modular architecture, allowing developers to easily swap adapters for different language processing tasks.

- Reduced Computational Costs: Fine-tunes smaller adapter components instead of entire models, lowering computational and memory requirements for resource-constrained environments.

- Multi-Task Support: Enables model fine-tuning across multiple tasks by switching adapters, offering flexibility in NLP applications such as text classification, sentiment analysis, and language translation.

- Compatibility with Major LLMs: Integrates with popular large language models like GPT, BERT, and T5, ensuring versatility across a wide range of NLP use cases.

- Easy Integration: Designed for seamless integration with existing machine learning and deep learning pipelines, facilitating swift implementation with minimal overhead.

- Open-Source (Apache-2.0 License) and Customizable: As an open-source tool, SuperAdapters allows developers to tailor the framework, contribute to its development, and adapt it to specific project needs.
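The savings behind "Reduced Computational Costs" come down to simple arithmetic. Assuming a LoRA-style adapter (one common adapter type; others, such as prefix tuning, are counted differently), a frozen d_out × d_in weight W is adapted as W + B·A, where B is d_out × r and A is r × d_in, so only r(d_out + d_in) parameters are trained. This Python sketch compares that with a full update of a 4096 × 4096 projection, the size of an attention projection in Llama 2 7B; the function names are illustrative.

```python
def full_update_params(d_out, d_in):
    """Trainable parameters when fine-tuning a full d_out x d_in weight matrix."""
    return d_out * d_in

def lora_update_params(d_out, d_in, rank):
    """Trainable parameters for a LoRA adapter on the same matrix.

    The frozen weight W is left untouched; training learns B (d_out x rank)
    and A (rank x d_in), and the effective weight is W + B @ A.
    """
    return rank * (d_out + d_in)

if __name__ == "__main__":
    full = full_update_params(4096, 4096)
    lora = lora_update_params(4096, 4096, rank=8)
    print(full, lora, f"{lora / full:.2%}")  # prints: 16777216 65536 0.39%
```

At rank 8, the adapter trains under half a percent of the parameters of that matrix, which is why adapters can be stored, swapped, and trained with far less memory than full fine-tuning.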

14- Pathway's LLM (Large Language Model) Apps

LLM-App is an open-source template for developers to build and deploy applications that harness large language models (LLMs). Created by Pathway, this project jumpstarts the integration of LLMs into various applications, enabling users to craft solutions powered by natural language processing.

By offering a structured framework that developers can tailor and expand, LLM-App streamlines the development process for diverse use cases.

LLM-App offers a solid foundation for developers creating LLM-powered applications. With its ready-made template, cross-platform compatibility, and adaptability, it serves as an excellent tool for rapidly deploying solutions based on large language models.

Features:

- Pre-Built Template: LLM-App offers a ready-to-use template for LLM-powered applications, saving developers time and effort in establishing the core structure.

- LLM Integration: The framework seamlessly incorporates various large language models, allowing developers to easily add natural language processing capabilities to their applications.

- Customizable: Developers can easily adapt the template for different use cases—such as chatbots, text analysis, and content generation—providing flexibility in tailoring solutions.

- Cross-Platform Compatibility: LLM-App works across various platforms, simplifying application deployment in desktop, web, or mobile environments.

- Support for Multiple LLMs: The framework integrates with multiple large language models, allowing developers to select the best model for their specific needs.

- Open-Source: As an open-source project, LLM-App encourages developers to contribute, modify, and extend its functionality, fostering community-driven improvements and collaboration.

- Scalability: LLM-App supports scalable applications, enabling users to manage growing data and processing demands as their projects expand.

FAQs

1. Can I run LLMs on any macOS device?

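Yes, LLMs can run on both Apple Silicon Macs (M1, M2, M3) and Intel-based Macs. However, performance varies with the hardware: Apple Silicon is usually much faster because its GPU shares unified memory with the CPU and can be used through Metal. Not every tool supports Intel Macs either; for example, MLX-based tools require Apple Silicon, and LLMFarm's Metal support is unavailable on Intel Macs.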

2. How much storage and memory do I need to run LLMs offline?

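Requirements depend mainly on the model's size and quantization. Small quantized models (around 7B parameters at 4-bit precision) can run on Macs with 8GB of RAM, 16GB comfortably handles models up to roughly 13B parameters, and larger models typically need 32GB of RAM or more. Each model file also takes several gigabytes of storage.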
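These figures follow from simple arithmetic. The weights take roughly (parameters × bits per weight ÷ 8) bytes, and the context window adds a key/value cache on top. Below is a rough Python estimator. The example assumes a Llama 2 7B-style architecture (32 layers, 4096-wide keys and values, 4096-token context, fp16 cache) and about 4.5 bits per weight for a 4-bit quantized file. Real usage also includes runtime overhead and whatever macOS and your other apps need.

```python
def weights_gb(params_billion, bits_per_weight):
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    # params * bits / 8 gives bytes; the 1e9 factors for "billion" and "GB" cancel.
    return params_billion * bits_per_weight / 8

def kv_cache_gb(n_layers, context_len, kv_dim, bytes_per_value=2):
    """Approximate KV-cache memory: one key and one value vector per layer per token."""
    return 2 * n_layers * context_len * kv_dim * bytes_per_value / 1e9

if __name__ == "__main__":
    # Assumed Llama 2 7B shape: 32 layers, 4096-wide keys/values, 4096-token context.
    w = weights_gb(7, 4.5)  # ~4.5 bits per weight, e.g. a llama.cpp Q4_0 file
    kv = kv_cache_gb(32, 4096, 4096)  # fp16 cache (2 bytes per value)
    print(f"weights ~{w:.1f} GB, KV cache ~{kv:.1f} GB, total ~{w + kv:.1f} GB")
    # prints: weights ~3.9 GB, KV cache ~2.1 GB, total ~6.1 GB
```

Summing such estimates for each model also gives a first check on whether several models can stay loaded at the same time.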

3. Why should I choose open-source solutions?

Open-source LLM solutions provide full transparency and control. You can modify, optimize, and fine-tune models for your specific needs without vendor lock-in or expensive licensing fees.

4. Is running LLMs offline secure?

Yes, offline LLMs ensure that all data processing remains on your local device, minimizing exposure to external threats and privacy breaches often associated with cloud-based solutions.

5. Can I run different LLMs on my macOS device simultaneously?

Yes, depending on your device's capabilities, you can run multiple LLMs simultaneously. However, each loaded model occupies its own share of memory (unified memory on Apple Silicon), so total RAM is usually the limiting factor, along with GPU and CPU capacity.