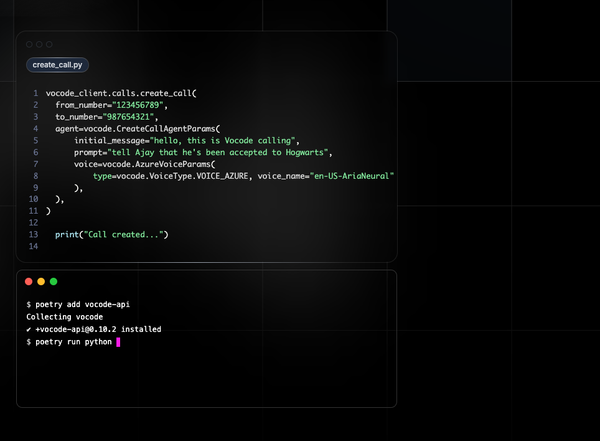

LocalAI: Self-hosted, community-driven, local OpenAI-compatible API

LocalAI is a drop-in replacement REST API that is compatible with the OpenAI API specifications for local inferencing. It lets you run LLMs, and other kinds of models, locally or on-prem on consumer-grade hardware, and it supports multiple model families that are compatible with the ggml format. It does not require a GPU.
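Because the API follows the OpenAI specification, a client can talk to it with a plain HTTP request. The sketch below builds an OpenAI-style chat completion request using only the Python standard library. The base URL `http://localhost:8080` and the model name `example-model` are assumptions for illustration; substitute the address your instance listens on and the name of a model you have actually installed.

```python
import json
import urllib.request

# Assumed values: adjust to match your own LocalAI instance.
BASE_URL = "http://localhost:8080"   # assumed listen address
MODEL_NAME = "example-model"         # hypothetical model name


def build_chat_request(prompt, model=MODEL_NAME, base_url=BASE_URL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


request = build_chat_request("Say hello in one sentence.")
# With a server running, the reply could be fetched with:
# print(send_chat_request(request))
```

Building and sending are kept separate so the request can be inspected without a running server. An existing OpenAI client library should also work if it lets you override the base URL.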

Features

- Local, OpenAI drop-in alternative REST API. You own your data.
- NO GPU required. NO Internet access is required either
  - Optional GPU acceleration is available in `llama.cpp`-compatible LLMs. See also the build section.
- Supports multiple models:
  - 📖 Text generation with GPTs (`llama.cpp`, `gpt4all.cpp`, ... and more)
  - 🗣 Text to Audio
  - 🔈 Audio to Text (Audio transcription with `whisper.cpp`)
  - 🎨 Image generation with stable diffusion
  - 🔥 OpenAI functions 🆕
- 🏃 Once loaded the first time, it keeps models loaded in memory for faster inference
- ⚡ Doesn't shell-out, but uses C++ bindings for a faster inference and better performance.
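
The list above notes that a model stays in memory after its first load. The following is a minimal sketch of that general load-once pattern, not LocalAI's actual implementation: `ModelCache` and `fake_loader` are hypothetical names, and the loader stands in for whatever expensive step reads model weights from disk.

```python
import threading
from typing import Callable, Dict


class ModelCache:
    """Load each model at most once and reuse it for later requests."""

    def __init__(self, loader: Callable[[str], object]) -> None:
        self._loader = loader                 # hypothetical expensive load function
        self._models: Dict[str, object] = {}  # models already held in memory
        self._lock = threading.Lock()         # guards concurrent first loads
        self.load_count = 0                   # number of real loads performed

    def get(self, name: str) -> object:
        with self._lock:
            if name not in self._models:
                # First request for this model: pay the load cost once.
                self._models[name] = self._loader(name)
                self.load_count += 1
            return self._models[name]


def fake_loader(name: str) -> dict:
    # Stand-in for reading model weights from disk.
    return {"name": name}


cache = ModelCache(fake_loader)
first = cache.get("example-model")   # triggers the load
second = cache.get("example-model")  # served from memory
```

Only the first request for a given model pays the load cost; later requests reuse the same in-memory object, which is why inference is faster after the first call.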

License

- LocalAI is a community-driven project created by Ettore Di Giacinto.

- Released under the MIT license.