Mom Discovers Son’s Rare Diagnosis with ChatGPT, Experts Warn Against Self-Diagnosis with AI

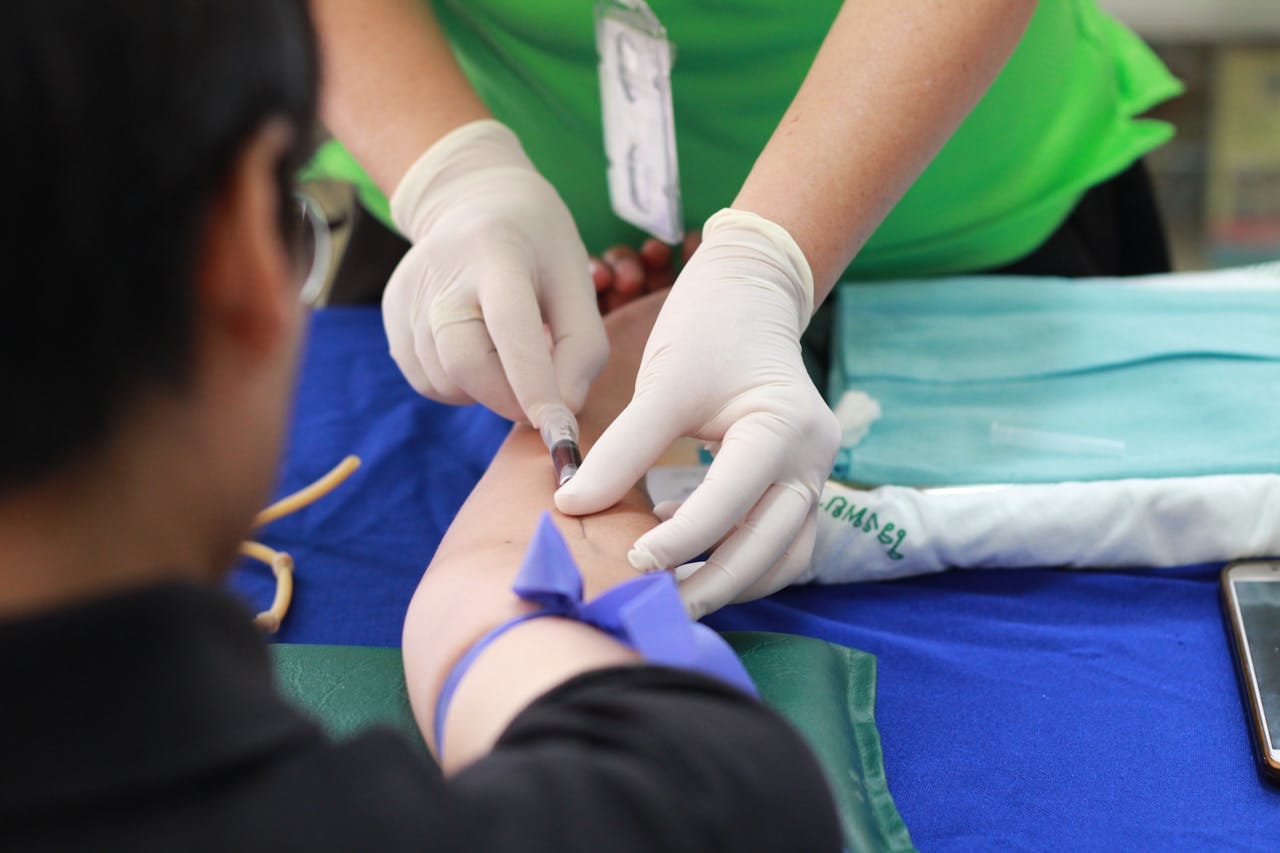

A recent news story highlights how a determined mother used ChatGPT, an AI chatbot, to identify a rare condition in her son after doctors failed to pinpoint the cause of his chronic pain. While this success story has drawn attention to the potential of AI in healthcare, experts caution against relying on ChatGPT or similar tools for medical self-diagnosis.

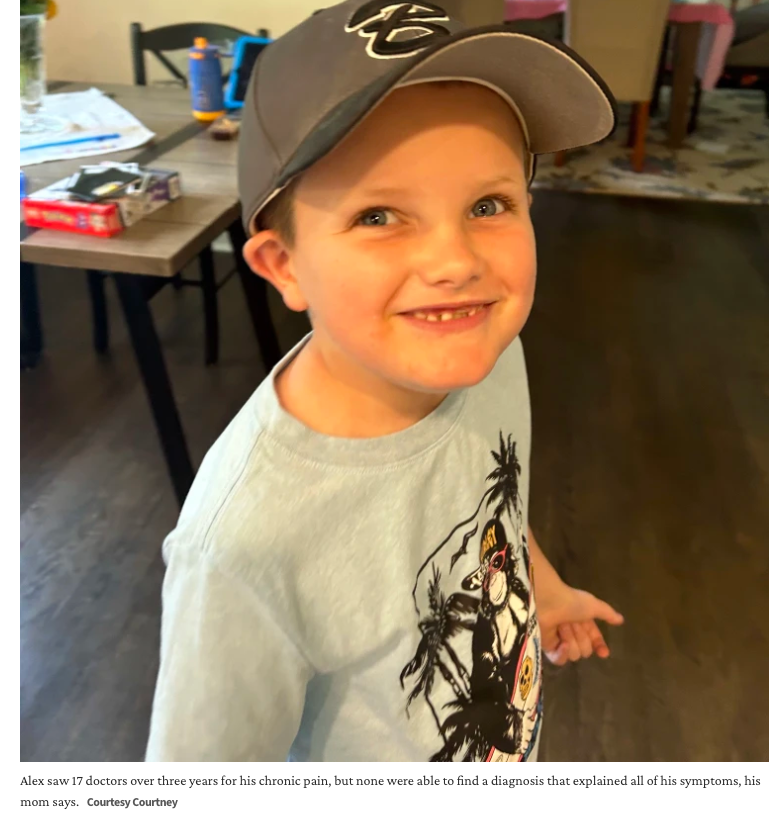

The case involved a young boy who had been suffering from unexplained pain for years. Despite multiple medical consultations, his condition remained undiagnosed.

A Mother’s Determination: From 17 Doctors to an AI Diagnosis

For three long years, young Alex endured chronic pain without answers. His mother, Courtney, watched helplessly as they visited 17 different doctors, each trying to uncover the root cause of his mysterious symptoms. Despite their efforts, no diagnosis could explain everything he was experiencing.

Frustrated but unwilling to give up, Courtney took matters into her own hands. Turning to the internet and eventually ChatGPT, she found herself exploring an unconventional path. The AI chatbot suggested a rare condition that had eluded even seasoned medical professionals. To her surprise, it turned out to be correct.

While this extraordinary journey shines a light on a mother’s perseverance, it also raises questions about the role of AI in healthcare, and whether tools like ChatGPT should ever be trusted for something as critical as medical diagnosis.

Although the outcome in this case was positive, healthcare professionals emphasize that such tools should not replace medical expertise. ChatGPT, like other AI models, is not designed to provide accurate medical diagnoses. It cannot perform physical examinations, analyze lab results, or weigh the complexities of an individual patient’s case.

Risks of Using AI for Self-Diagnosis

- Accuracy Limitations: AI models can generate plausible but incorrect suggestions based on incomplete or inaccurate symptom descriptions.

- Contextual Misunderstanding: AI tools lack the nuanced understanding of a patient's full medical history and other contributing factors.

- Potential for Harm: Relying on AI without consulting a qualified doctor can delay proper treatment, leading to worsened conditions.

Expert Recommendations

Medical professionals strongly advise against using AI chatbots like ChatGPT for self-diagnosis. Instead, such tools can be seen as supplementary, aiding communication with healthcare providers rather than replacing them.

Dr. Jane Smith, a family physician, commented, “While this case is remarkable, it’s crucial for the public to understand that AI is not a substitute for professional medical care. If you’re experiencing symptoms, consult a doctor who can provide a thorough evaluation and proper diagnosis.”

Conclusion

Though the mother’s innovative use of ChatGPT in this instance underscores the potential of AI in medicine, relying on it for medical diagnoses is fraught with risks. Always seek professional medical advice for health concerns, as self-diagnosis, whether through AI or other means, can lead to serious consequences.

For the original story, visit Today.com.