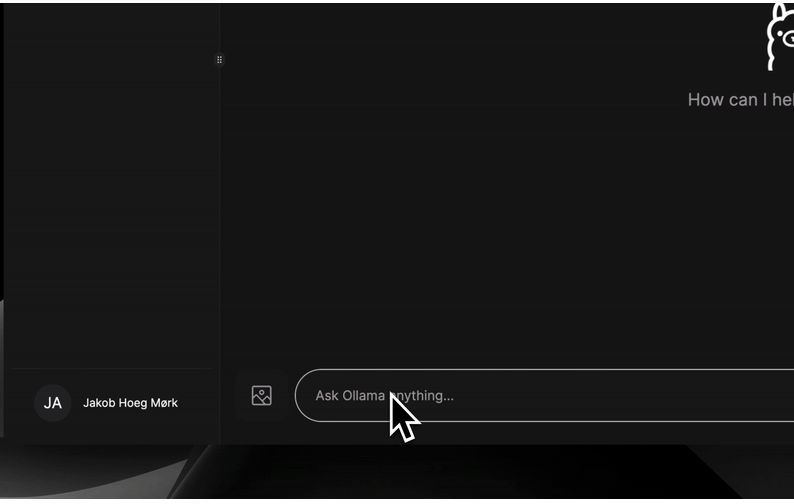

Next.js vLLM UI - Self-hosted Web Interface for vLLM and Ollama


Next.js vLLM UI is a free, open-source, self-hosted web interface that gives you a responsive, user-friendly way to chat with large language models (LLMs) served by vLLM or Ollama.

It is easy to set up: a single Docker command is enough.

It is built with Next.js and Tailwind CSS, and uses shadcn/ui for interface components and shadcn-chat, a set of chat components for Next.js/React projects.

Features

- Beautiful & intuitive UI: Inspired by ChatGPT, so the user experience feels familiar.

- Fully local: Stores chats in the browser's localStorage for convenience, so there is no need to run a database.

- Fully responsive: Use your phone to chat, with the same ease as on desktop.

- Easy setup: No tedious and annoying setup required. Just clone the repo and you're good to go!

- Code syntax highlighting: Code in messages is highlighted for easier reading.

- Copy code blocks easily: Copy highlighted code with one click.

- Chat history: Chats are saved and easily accessed.

- Light & Dark mode: Switch between light & dark mode.

Setup and Install

The easiest way to get started is to use the pre-built Docker image.

```shell
docker run --rm -d -p 3000:3000 -e VLLM_URL=http://host.docker.internal:8000 ghcr.io/yoziru/nextjs-vllm-ui:latest
```

If you're using Ollama, you need to set `VLLM_MODEL` to the model you want to chat with; the example below also sets `VLLM_TOKEN_LIMIT`:

```shell
docker run --rm -d -p 3000:3000 -e VLLM_URL=http://host.docker.internal:11434 -e VLLM_TOKEN_LIMIT=8192 -e VLLM_MODEL=llama3 ghcr.io/yoziru/nextjs-vllm-ui:latest
```
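The two commands above differ only in the backend URL and the Ollama-specific variables. As a sketch, a small shell wrapper can capture both cases. The function name `start_ui` and the `DRY_RUN` switch are hypothetical, and the wrapper assumes the backend runs on the Docker host at its usual default port (8000 for vLLM, 11434 for Ollama).

```shell
#!/bin/sh
# start_ui: hypothetical wrapper around the docker run commands above.
# Assumes the backend runs on the Docker host at its default port
# (8000 for vLLM, 11434 for Ollama). Set DRY_RUN=1 to print the
# command instead of running it.
start_ui() {
  backend="${1:-}"
  model="${2:-}"   # required for Ollama, optional for vLLM
  image="ghcr.io/yoziru/nextjs-vllm-ui:latest"

  # Reuse the positional parameters to collect the -e options
  case "$backend" in
    vllm)
      set -- -e VLLM_URL=http://host.docker.internal:8000
      ;;
    ollama)
      if [ -z "$model" ]; then
        echo "start_ui: Ollama needs a model name for VLLM_MODEL" >&2
        return 1
      fi
      set -- -e VLLM_URL=http://host.docker.internal:11434 -e VLLM_TOKEN_LIMIT=8192
      ;;
    *)
      echo "usage: start_ui vllm|ollama [model]" >&2
      return 2
      ;;
  esac

  # Pass the model through only when one was given
  if [ -n "$model" ]; then
    set -- "$@" -e "VLLM_MODEL=$model"
  fi

  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo docker run --rm -d -p 3000:3000 "$@" "$image"
  else
    docker run --rm -d -p 3000:3000 "$@" "$image"
  fi
}
```

For example, `DRY_RUN=1 start_ui ollama llama3` prints the Ollama command shown above without running it, and `start_ui vllm` starts the UI against a local vLLM server.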

If your server runs on a different IP address or port, you can use Docker's `--network host` mode, for example:

```shell
docker run --rm -d --network host -e VLLM_URL=http://192.1.0.110:11434 -e VLLM_TOKEN_LIMIT=8192 -e VLLM_MODEL=llama3 ghcr.io/yoziru/nextjs-vllm-ui:latest
```
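When the backend runs on another machine, as in the example above, a quick reachability check from the Docker host can save debugging inside the UI. The sketch below assumes the server exposes the OpenAI-compatible `/v1/models` route, which both vLLM's OpenAI-compatible server and Ollama provide; the helper name `check_backend` is hypothetical.

```shell
#!/bin/sh
# check_backend: hypothetical helper that succeeds if the server at the
# given base URL answers the OpenAI-compatible model listing route.
check_backend() {
  base_url="${1%/}"   # drop a trailing slash so the path joins cleanly
  # -f: fail on HTTP errors, -sS: silent but still report errors,
  # --max-time: give up instead of hanging on an unreachable host
  if curl -fsS --max-time 5 "$base_url/v1/models" > /dev/null; then
    echo "OK: $base_url is serving models"
  else
    echo "ERROR: no usable response from $base_url/v1/models" >&2
    return 1
  fi
}
```

For the example above, run `check_backend http://192.1.0.110:11434` before starting the container. If it fails, the container, which shares the host's network in this mode, will most likely not reach the server either.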

Then open http://localhost:3000 in your browser and start chatting with your favourite model!
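Because the container starts detached (`-d`), the page may not answer immediately. A minimal polling sketch, assuming `curl` is installed on the host; the function name `wait_for_ui` and its default of 30 attempts are hypothetical:

```shell
#!/bin/sh
# wait_for_ui: hypothetical helper that polls the UI roughly once per
# second until it responds, giving up after max_attempts tries.
wait_for_ui() {
  url="${1:-http://localhost:3000}"
  max_attempts="${2:-30}"
  attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    # -f: fail on HTTP errors, -s: silent, --max-time: cap each attempt
    if curl -fs --max-time 2 "$url" > /dev/null; then
      echo "UI is up at $url"
      return 0
    fi
    sleep 1
    attempt=$((attempt + 1))
  done
  echo "UI did not respond at $url after $max_attempts attempts" >&2
  return 1
}
```

Run `wait_for_ui` right after `docker run`, then open the page once it reports that the UI is up.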

License

MIT License