Top 7 Free Open-Source Language Models: A Comparison of Features, Pros, and Cons in 2024

Exploring Open-Source Large Language Models (LLMs): A Quick Guide

Large Language Models (LLMs) are sophisticated artificial intelligence systems trained on extensive text datasets to comprehend and produce human-like text.

These models have transformed natural language processing and find wide-ranging applications across diverse industries.

Benefits of LLMs:

- Versatility: LLMs can be applied to a wide range of tasks, from text generation to translation and summarization.

- Improved efficiency: They can automate many text-based tasks, saving time and resources.

- Natural language understanding: LLMs can comprehend context and nuances in human language.

- Scalability: Once trained, LLMs can handle large volumes of text-based tasks quickly.

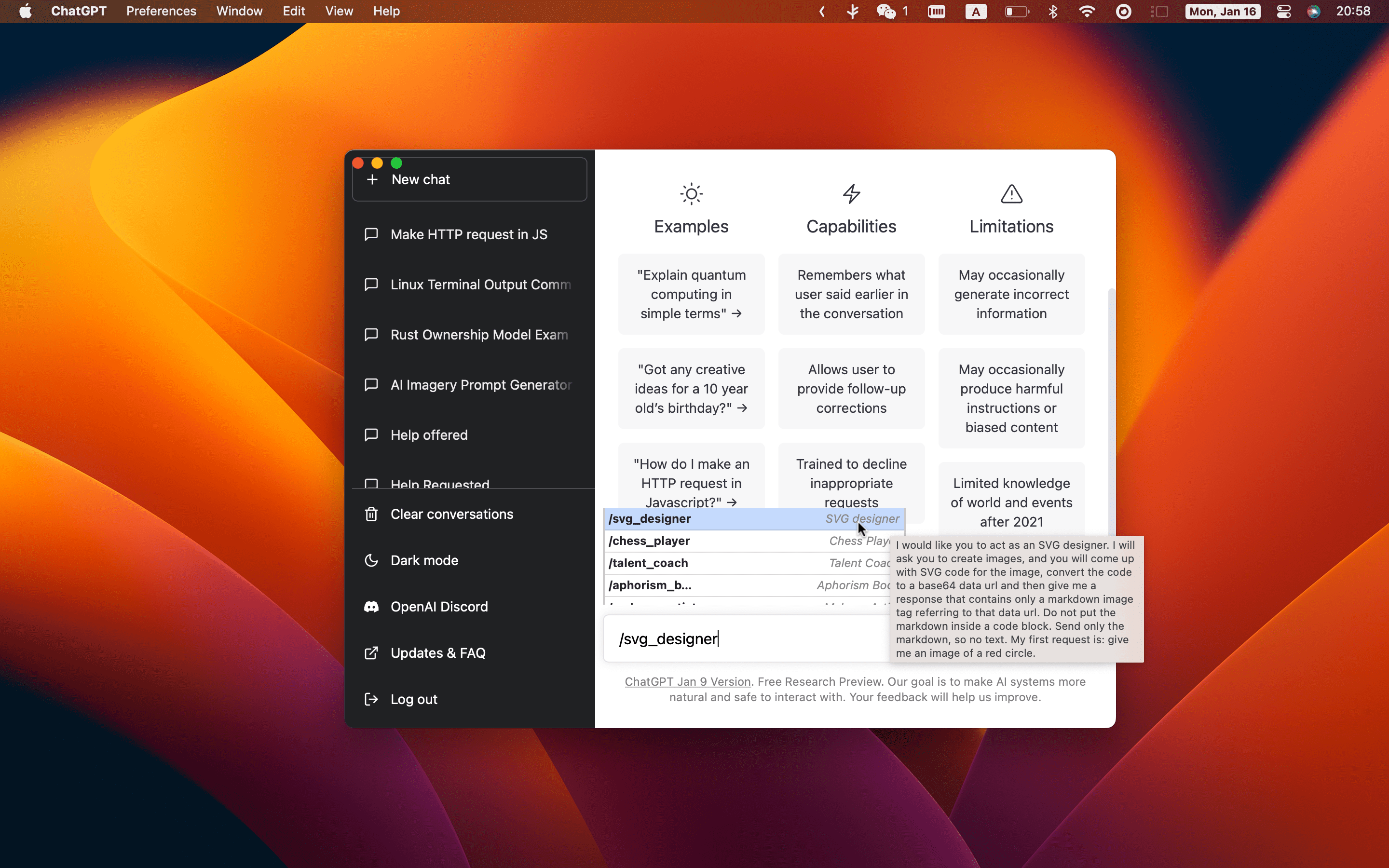

Common Usage of LLMs:

- Content creation: Generating articles, social media posts, and marketing copy.

- Customer service: Powering chatbots and virtual assistants for 24/7 support.

- Language translation: Providing accurate translations across multiple languages.

- Code generation: Assisting developers by generating and completing code snippets.

- Data analysis: Extracting insights from large text datasets and generating reports.

- Educational tools: Creating personalized learning content and answering student queries.

This guide explores several open-source LLMs and shows how these benefits and use cases apply to each. For every model, we summarize its features, advantages, disadvantages, and use cases. A comparison table follows the model summaries to help you contrast them.

Best Open-Source LLMs

1. GPT-2 (OpenAI)

GPT-2, one of the earliest openly released large language models, has 1.5 billion parameters in its largest version. Despite its age, it remains widely used thanks to its easy deployment and modest hardware requirements. It works best for smaller-scale tasks such as content generation, summarization, and simple chatbot development.

Features:

- 1.5 billion parameters.

- Supports tasks like text completion, summarization, and conversational AI.

- Low computational requirements for deployment.

Pros:

- Lightweight and easy to deploy.

- Large community and many available tools.

- Great for smaller projects and experimentation.

Cons:

- Limited performance compared to newer models.

- Cannot handle complex queries or large datasets as well as larger models.

Use-Cases:

- Text generation for content creation.

- Building simple chatbots.

- Educational tools and quizzes.
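To illustrate how lightweight deployment can be, here is a minimal Python sketch of text generation with GPT-2. It assumes the Hugging Face `transformers` library and a backend such as PyTorch are installed; the article itself does not prescribe a toolkit, and the helper name `generate_text` is purely illustrative. Note that on the Hugging Face Hub the plain `gpt2` checkpoint is the smallest (124M) variant, while the 1.5B model is published as `gpt2-xl`.

```python
# Hub checkpoint names for the four released GPT-2 sizes.
GPT2_CHECKPOINTS = {
    "124M": "gpt2",
    "355M": "gpt2-medium",
    "774M": "gpt2-large",
    "1.5B": "gpt2-xl",
}


def generate_text(prompt: str, size: str = "124M", max_new_tokens: int = 40) -> str:
    """Generate a continuation of `prompt` with the chosen GPT-2 checkpoint.

    Hypothetical helper for illustration; assumes `transformers` is installed.
    """
    # Imported lazily so this module loads even without transformers installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=GPT2_CHECKPOINTS[size])
    outputs = generator(prompt, max_new_tokens=max_new_tokens, do_sample=True)
    # The pipeline returns a list of dicts, one per generated sequence.
    return outputs[0]["generated_text"]
```

Calling `generate_text("Open-source language models are")` downloads the checkpoint on first use and returns the prompt followed by a sampled continuation. Starting with the 124M checkpoint is a reasonable way to experiment before moving up to `gpt2-xl`.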

2. BLOOM (BigScience)

BLOOM is a large multilingual model with 176 billion parameters that supports 46 natural languages and 13 programming languages. It is well suited to cross-lingual applications such as translation and content generation for global markets. Its collaborative development approach emphasizes accessibility and inclusivity.

Features:

- 176 billion parameters.

- Multilingual support for 46 natural languages and 13 programming languages.

- Openly developed under the RAIL license for responsible AI usage.

Pros:

- Excellent for multilingual applications.

- Supports a wide range of languages and tasks.

- Large, inclusive community collaboration.

Cons:

- High hardware requirements for both training and inference.

- Complex to fine-tune and manage due to its size.

Use-Cases:

- Translation services and multilingual chatbots.

- Multinational content generation and summarization.

- Research and data analytics across diverse languages.

3. Falcon (TII)

Falcon is a high-performance model that strikes a balance between size and quality. With versions like Falcon-7B and Falcon-40B, it's designed for efficient performance in production environments with moderate hardware requirements. The model excels at text generation and customer support automation.

Features:

- Models with 7B and 40B parameters.

- Optimized for text generation and efficient deployment.

- Open-source and commercially usable under Apache 2.0.

Pros:

- Balanced performance with moderate hardware needs.

- Open licensing makes it flexible for both research and commercial use.

- Versatile for a wide range of NLP tasks.

Cons:

- Smaller than very large models like BLOOM, which may limit performance on the most complex tasks.

- Fewer community-driven resources compared to more well-known models.

Use-Cases:

- Customer support automation.

- Conversational AI and virtual assistants.

- Document classification and text generation.

4. LLaMA (Meta AI)

LLaMA, a family of models (7B, 13B, 30B, 65B) developed by Meta AI, focuses on efficient performance with reduced hardware costs. It's particularly valuable for research and prototyping AI applications with a smaller infrastructure footprint, making it ideal for academic and small enterprise use.

Features:

- Models ranging from 7B to 65B parameters.

- Designed for efficient usage with smaller infrastructure.

- Released under a custom, research-focused license that restricts commercial use.

Pros:

- Low hardware requirements compared to larger models.

- Great for research and prototyping.

- Flexible in terms of model size, depending on project needs.

Cons:

- Limited licensing for commercial use.

- Performance may trail that of some larger models.

Use-Cases:

- Academic research and small enterprise AI projects.

- Prototyping NLP applications.

- Text classification and summarization tasks.

5. GPT-J (EleutherAI)

GPT-J is a 6 billion parameter model that balances performance with size, providing good text generation capabilities while being relatively lightweight compared to larger models. It’s perfect for smaller projects like text completion and educational tools.

Features:

- 6 billion parameters.

- Optimized for text generation, summarization, and conversational agents.

- Open-source under the Apache 2.0 license.

Pros:

- Lightweight and relatively easy to deploy.

- Good balance of performance and resource needs.

- Well-suited for smaller projects and educational tools.

Cons:

- Less powerful than larger models like GPT-NeoX or BLOOM.

- Limited performance for complex tasks.

Use-Cases:

- Text completion and content generation.

- Educational platforms like tutoring bots.

- Small-scale research and data analysis.

6. GPT-NeoX (EleutherAI)

GPT-NeoX is EleutherAI's large-scale model effort, best known for the 20-billion-parameter GPT-NeoX-20B. It's built to provide strong performance for tasks like natural language generation and conversational AI. However, it requires significant computational power, making it best suited to enterprises with robust infrastructure.

Features:

- 20 billion parameters.

- Autoregressive language model designed for high-performance NLP tasks.

- Open-source under the Apache 2.0 license.

Pros:

- High-quality text generation, conversational AI, and code completion.

- Powerful performance for large-scale tasks.

- Open-source and flexible for enterprise usage.

Cons:

- Extremely resource-intensive, requiring powerful infrastructure.

- Complex to deploy and fine-tune compared to smaller models.

Use-Cases:

- Building enterprise-grade virtual assistants and chatbots.

- Code generation and completion for developers.

- Content generation and summarization at scale.

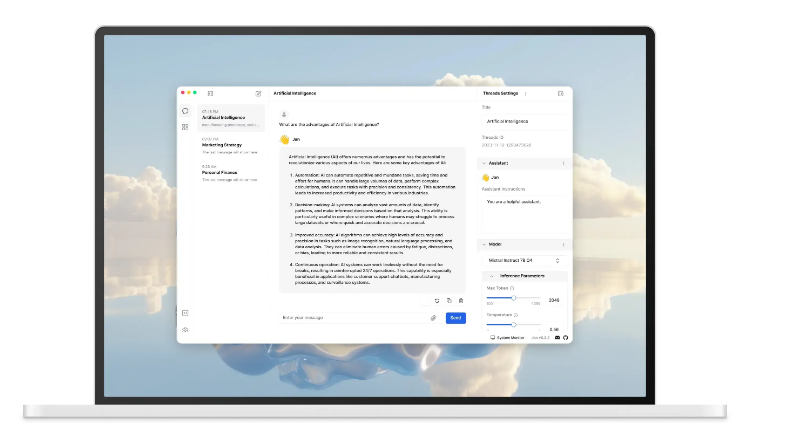

7. Mistral (Mistral AI)

Mistral is designed for both research and commercial use, offering flexibility for various industries. Its focus is on efficiency and multi-tasking, with capabilities in translation, summarization, and natural language processing. It's ideal for businesses needing versatile AI solutions.

Features:

- Models optimized for multitasking, including text generation, translation, and summarization.

- Multiple model sizes, such as Mistral 7B and the larger Mixtral 8x7B, to suit different project needs.

- Designed for enterprise use, with lower inference costs.

Pros:

- Versatile and efficient for many industries.

- Lower cost of inference compared to larger models.

- Flexible for both research and production environments.

Cons:

- Fewer resources and community support compared to older, larger models.

- May lack some capabilities of much larger models.

Use-Cases:

- Healthcare and financial data analytics.

- Multi-language customer support systems.

- Text summarization for enterprise reports.

Comparison Table

| Model | Size | Supported Systems | License |
|---|---|---|---|
| GPT-2 | 1.5B parameters | Linux, macOS, Windows | Modified MIT License |
| BLOOM | 176B parameters | Linux, macOS | RAIL License |
| Falcon | 7B, 40B parameters | Linux, macOS, Windows | Apache 2.0 |
| LLaMA | 7B, 13B, 30B, 65B parameters | Linux, macOS, Windows | Custom License |
| GPT-J | 6B parameters | Linux, macOS, Windows | Apache 2.0 |
| GPT-NeoX | 20B parameters | Linux, macOS, Windows | Apache 2.0 |
| Mistral | Flexible size options | Linux, macOS, Windows | Apache 2.0 (Mistral 7B) |
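Parameter count gives a rough lower bound on hardware needs: the weights alone occupy roughly the number of parameters multiplied by the bytes used per parameter. The Python sketch below applies that arithmetic to sizes from the table. The precision options and the helper name `weight_memory_gb` are illustrative assumptions, and real deployments need additional memory for activations and caching.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

# Parameter counts in billions, taken from the comparison table.
MODEL_SIZES_B = {
    "GPT-2": 1.5,
    "GPT-J": 6,
    "Falcon-7B": 7,
    "GPT-NeoX-20B": 20,
    "Falcon-40B": 40,
    "LLaMA-65B": 65,
    "BLOOM": 176,
}


def weight_memory_gb(params_billion: float, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    # (params_billion * 1e9 params) * (bytes per param) / 1e9 bytes per GB
    return float(params_billion * BYTES_PER_PARAM[precision])


for name, size in MODEL_SIZES_B.items():
    print(f"{name:>13}: {weight_memory_gb(size):6.1f} GB in fp16")
```

By this estimate, BLOOM's weights need about 352 GB in 16-bit precision, while GPT-2's need about 3 GB, which is why the two sit at opposite ends of the hardware spectrum.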

Using LLMs in Healthcare: Benefits and Use-Cases

Large Language Models (LLMs) are being applied in healthcare to improve diagnostic efficiency, streamline medical record systems, and support various clinical tasks.

Their capacity to process vast medical data, interpret complex language, and generate accurate responses makes them invaluable for healthcare integration.

Benefits of LLMs in Healthcare

- Streamlined Diagnosis Support: LLMs assist in diagnostic processes by analyzing patient symptoms and medical histories, cross-referencing extensive medical databases to suggest potential diagnoses.

- Enhanced Medical Record Management: LLMs automatically summarize patient interactions, extract key data from unstructured text, and maintain accurate, up-to-date medical records, saving healthcare professionals' time.

- Improved Patient Communication: LLMs enhance communication between healthcare providers and patients by automating responses to common questions and providing accurate treatment information.

- Drug Discovery and Research: By analyzing scientific literature, clinical trials, and research papers, LLMs help researchers discover new drug interactions and treatment methods more quickly.

- Personalized Treatment Plans: LLMs can help clinicians draft customized treatment plans by analyzing patient-specific data, including medical history, genetic information, and ongoing health monitoring.

Use-Cases of LLMs in Healthcare

- Diagnosis Assistance: In clinical settings, LLMs suggest diagnostic possibilities based on patient symptoms and history, improving doctors' decision-making.

- Medical Record System Integration: LLMs integrate with Electronic Health Record (EHR) systems to process patient notes, auto-fill forms, and organize unstructured medical data.

- Telemedicine Platforms: LLMs enhance telemedicine by handling real-time patient queries, providing recommendations, and supporting remote consultations.

- Clinical Documentation: By automating medical report and summary generation, LLMs allow healthcare providers to spend more time with patients and less on paperwork.

- Medical Research: LLMs help researchers process vast amounts of medical literature, identifying relevant data points for clinical studies and drug discovery.

LLMs offer significant advantages to healthcare, improving accuracy, accelerating data processing, and enabling better patient outcomes through automation and integration with existing medical systems.

Conclusion

Each of these open-source LLMs offers unique advantages based on size, supported platforms, and licensing model. From massive multilingual models like BLOOM to smaller, efficient options such as GPT-J and LLaMA, there's a range of choices for developers, researchers, and enterprises.

The best fit depends on specific infrastructure and use-case requirements. To effectively harness the power of LLMs, select a model that aligns with your performance needs and hardware capabilities.
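One way to make that selection concrete is to filter candidates by a size ceiling and a set of acceptable licenses. The following Python sketch is a hypothetical helper, not an established tool: the `Model` record, the `shortlist` function, and the candidate list are assumptions for illustration, with sizes and licenses drawn from the models discussed in this guide. Mistral is left out because the guide does not give it a single size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    params_b: float  # parameter count in billions
    license: str


# Illustrative candidates; GPT-2 is distributed under a modified MIT license.
CANDIDATES = [
    Model("GPT-2", 1.5, "Modified MIT"),
    Model("GPT-J", 6, "Apache 2.0"),
    Model("Falcon-7B", 7, "Apache 2.0"),
    Model("GPT-NeoX-20B", 20, "Apache 2.0"),
    Model("Falcon-40B", 40, "Apache 2.0"),
    Model("LLaMA-65B", 65, "Custom"),
    Model("BLOOM", 176, "RAIL"),
]


def shortlist(max_params_b: float, allowed_licenses: set[str]) -> list[Model]:
    """Return models within the size ceiling and license set, largest first."""
    matches = [
        m for m in CANDIDATES
        if m.params_b <= max_params_b and m.license in allowed_licenses
    ]
    return sorted(matches, key=lambda m: m.params_b, reverse=True)


# Example: a team that can serve up to 20B parameters and needs Apache 2.0.
for model in shortlist(20, {"Apache 2.0"}):
    print(f"{model.name} ({model.params_b}B, {model.license})")
```

Sorting largest first reflects the assumption that, within a hardware budget, a bigger model is the first one worth evaluating; reverse the order if inference cost matters more than capability.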