13 Website copiers that help you keep offline mirrored versions of websites

Ever wanted to save a copy of a certain website to review it later when offline?

In the early 2000s, we used to copy whole websites as static HTML, along with their images and scripts, so that we could access them while disconnected.

Believe it or not, some people still do this, for many reasons.

What is a Website Copier?

To get a copy of a website, you use a special kind of web crawler called a website copier, which saves the whole site as static HTML files alongside its images, styles, and JavaScript files.

A website copier is an offline browser utility that lets users download a website from the Internet to a local directory. Users can then browse the downloaded site from link to link as if they were viewing it online, even when not connected to the Internet. A good copier maintains the original site's relative link structure and can update sites it has already mirrored.
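To make the idea of keeping the relative link structure more concrete, here is a minimal Python sketch of two steps a copier has to perform: finding the links on a page that belong to the same site, and working out which relative link a saved page should use to reach another saved file. The `example.com` URLs, the directory layout, and all function names are assumptions made for illustration; none of the tools listed below is claimed to work exactly this way.

```python
import posixpath
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect href/src attribute values found in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def local_path(url):
    """Map a URL to a file path inside the mirror (hypothetical layout)."""
    parsed = urlparse(url)
    path = parsed.path or "/"
    if path.endswith("/"):
        path += "index.html"  # directory-style URLs are saved as index.html
    return parsed.netloc + path


def relative_link(page_url, target_url):
    """Return the link a saved page should use to reach a saved target."""
    page_dir = posixpath.dirname(local_path(page_url))
    return posixpath.relpath(local_path(target_url), start=page_dir)


def same_site_links(page_url, html):
    """Resolve every link on the page and keep those on the same host."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlparse(page_url).netloc
    resolved = (urljoin(page_url, link) for link in collector.links)
    return [url for url in resolved if urlparse(url).netloc == host]


# Made-up page content, used only to exercise the functions above.
html = (
    '<a href="/docs/">Docs</a> '
    '<img src="img/logo.png"> '
    '<a href="https://other.example/">Elsewhere</a>'
)
page = "https://example.com/blog/post.html"

for url in same_site_links(page, html):
    print(url, "->", relative_link(page, url))
```

A real copier would also download each file, rewrite the links inside the saved HTML, and repeat the process for every page it discovers. The sketch only covers the path arithmetic and assumes a POSIX-style file system.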

In this post, we list the best website copiers. Note that some of them are low-level tools, while others are aimed at non-technical users.

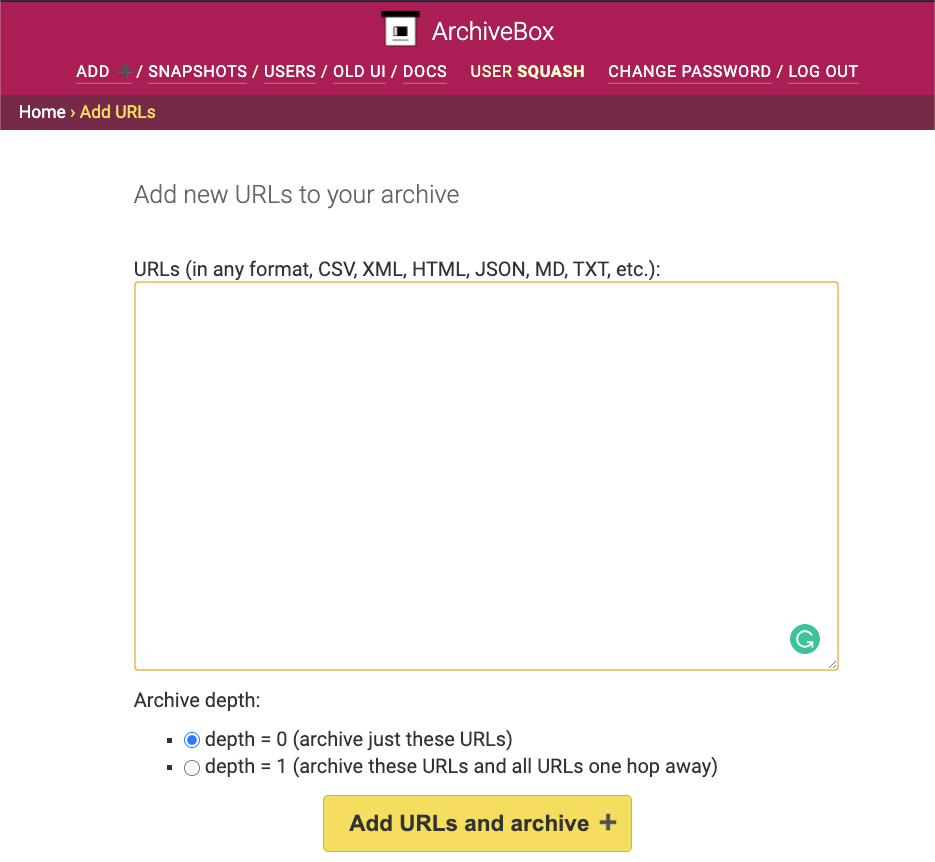

1- ArchiveBox

ArchiveBox is a free, open-source web archiving tool that takes any website and saves it for offline use.

Although it is a self-hosted web archiving tool, it also offers a command-line and a desktop edition.

ArchiveBox takes a snapshot of the website and saves it in several file formats. It can extract media from sites such as YouTube, produce more readable versions of articles, and more.

2- HTTrack

HTTrack is free, open-source software that helps you get an offline copy of any website. It is best known for its Windows edition (WinHTTrack), but it is also available for Linux and other Unix-like systems (WebHTTrack).

With HTTrack, you can get a mirrored version of any website, and the mirror can be updated when the website gets new content.

HTTrack comes with a simple multilingual interface, supports proxies, works with an integrated DNS cache, and offers full support for HTTPS and IPv6.

If you have a Windows machine, this app is a must-have, and it is worth a look on other platforms too.
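One common way to refresh a mirror without downloading everything again is an HTTP conditional request. The Python sketch below builds a request that carries an `If-Modified-Since` header based on the age of the local copy. It illustrates the general technique only and is not a description of how HTTrack itself updates mirrors; the function name, URL, and file are hypothetical.

```python
import os
import tempfile
from email.utils import formatdate
from urllib.request import Request


def conditional_request(url, local_file):
    """Build a GET request that lets the server skip unchanged content."""
    request = Request(url)
    if os.path.exists(local_file):
        # Use the local file's modification time as the reference date.
        mtime = os.path.getmtime(local_file)
        request.add_header("If-Modified-Since", formatdate(mtime, usegmt=True))
    return request


# Demonstration with a temporary file standing in for a mirrored page.
with tempfile.NamedTemporaryFile(suffix=".html", delete=False) as handle:
    handle.write(b"<html></html>")
    saved_copy = handle.name

request = conditional_request("https://example.com/index.html", saved_copy)
print(request.header_items())
os.remove(saved_copy)
```

Passing such a request to `urllib.request.urlopen` would contact the server. If the server answers 304 Not Modified, `urlopen` raises an `HTTPError` with code 304, which the caller can treat as "keep the local copy".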

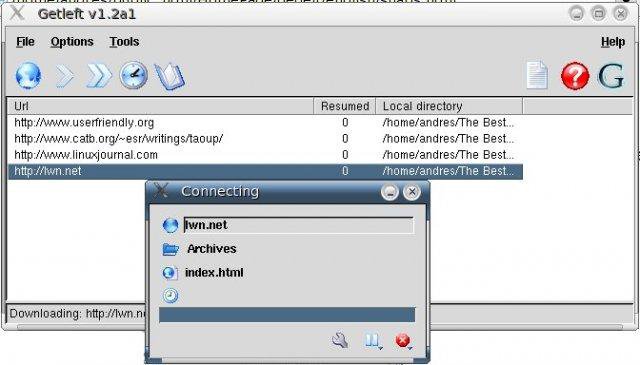

3- Getleft

Getleft is a free, open-source website grabber that still gets weekly downloads, long after its first release. Getleft is available for Windows, Linux, and macOS.

4- Archivarix

Archivarix is a different kind of website grabber/copier. It is a complete CMS (content management system) combined with a website downloader and a smart Wayback Machine downloader.

Some website owners use Archivarix to keep backup copies of their websites and restore them when required.

Archivarix is also available as a WordPress plugin.

5- Website Downloader

This is a customized website downloader that takes a copy of any website, including its JavaScript, stylesheets, and images.

The "Website Downloader" project is the brainchild of Ahmad Ibrahiim, who released it as open source under the MIT License.

6- Goclone

Goclone clones any website to your local disk in a matter of minutes. It is built using the Go programming language.

Goclone is a command-line tool, but it is easy to use even for non-technical users. The project is released under the MIT License.

7- PC Website Grabber

PC Website Grabber is yet another tool to help you copy websites to a local disk. It downloads all assets and saves the whole website as a zip archive.

This project is released under the GPL-2.0 License. It is part of the PageCarton website builder.

8- goscrape

The "goscrape" tool is yet another website scraper that lets users create an offline, readable version of a website along with its assets.

Unlike its competitors on this list, it converts large image files into smaller versions and fetches files from external domains.

Goscrape is a command-line-only tool, so do not expect a GUI version.

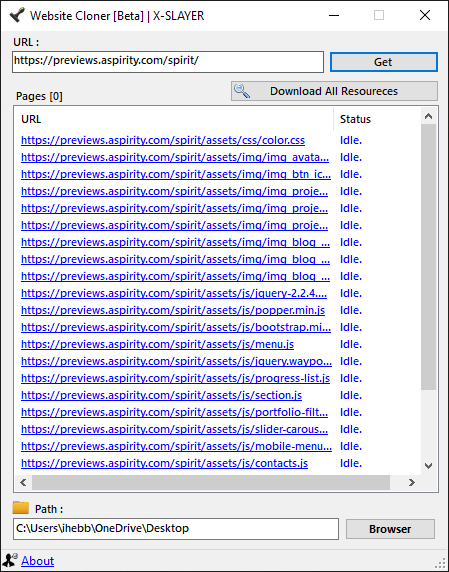

9- Website Cloner

Website Cloner is a Visual Basic .NET tool that creates a copy of any working website and its pages, with full recursive directory support.

However, there are no pre-built releases for this tool, so you need to build the app from the source code.

10- Monolith

Unlike the other tools on this list, Monolith takes a different approach: it bundles a web page and its assets into a single HTML file.

Monolith is built using the Rust programming language and can be installed with the Rust package manager (Cargo), with Homebrew or MacPorts on macOS, and with several package managers on Linux and FreeBSD.
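The single-file idea can be illustrated with `data:` URIs, which let an HTML document carry an asset inline instead of linking to a separate file. The Python sketch below is a simplified illustration under that assumption, not Monolith's actual implementation; the function names and the tiny fake asset are made up for the example.

```python
import base64
import mimetypes
import re


def to_data_uri(filename, content):
    """Encode raw bytes as a base64 data URI, guessing the MIME type."""
    mime, _ = mimetypes.guess_type(filename)
    mime = mime or "application/octet-stream"
    encoded = base64.b64encode(content).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def inline_assets(html, assets):
    """Replace src="..." values that appear in `assets` with data URIs."""

    def replace(match):
        name = match.group(1)
        if name not in assets:
            return match.group(0)  # leave unknown references untouched
        return f'src="{to_data_uri(name, assets[name])}"'

    return re.sub(r'src="([^"]+)"', replace, html)


# A made-up asset table: file name -> raw bytes (not a valid image).
assets = {"dot.gif": b"GIF89a"}
page = '<img src="dot.gif"><img src="missing.png">'
print(inline_assets(page, assets))
```

A regular expression is enough for this toy input, but a real tool would parse the HTML properly and would also handle stylesheets, scripts, and fonts.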

11- Node-site-downloader

Node.js is a popular choice for many developers to build their web, mobile, desktop or command-line apps.

Here, Node.js is used to build this command-line script which allows anyone to copy a functional website with one command.

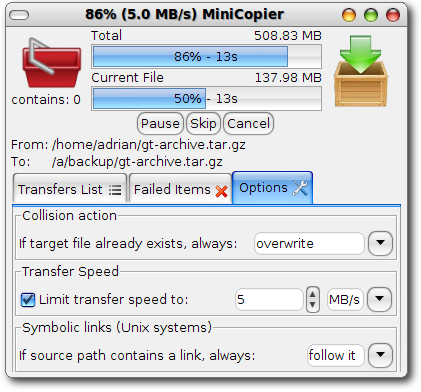

12- MiniCopier

MiniCopier is a GUI-based, multi-platform website downloader that supports multiple transfers, download pause, speed limit options, and download queue.

MiniCopier works seamlessly on Windows, Linux, and macOS. It is released under GPLv2.0.

13- Website-Downloader

This is a simple PHP script for downloading a copy of a website to your local disk. It does not come with detailed instructions or tutorials, but it is not hard to use for experienced developers.

Final note

A website copier is a program that helps you keep a functional copy of a website on your disk. We have listed the most popular open-source website copiers here, and we hope this list comes in handy for anyone looking for such tools.

If you know of any other free, open-source website copier that we did not mention here, let us know.