Prompt Engineering: How to Be a Proper Prompt Engineer? 7 Tips and Recommended Tools

What is Generative AI?

Generative AI refers to AI models that create content based on patterns learned from data. Examples include text generators like ChatGPT, image creators like MidJourney, and code generation tools like GitHub Copilot.

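Text and code tools like these are built on large language models (LLMs), which predict the most likely next piece of text for a given input prompt. Image generators generally rely on other architectures, such as diffusion models, but they are steered by prompts in the same way.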

What is Prompt Engineering?

Prompt engineering is the practice of crafting precise prompts to guide generative AI towards desired outputs. A "prompt" is the instruction or input given to an AI model. Effective prompt engineering can enhance creativity, accuracy, and efficiency.

Artificial Intelligence is changing how we interact with technology. Prompts serve as the bridge between human instructions and AI responses. Writing clear prompts ("prompt writing") and structuring them effectively ("prompt design") are essential for maximizing AI potential.

A Prompt Engineer is someone who specializes in designing prompts that yield optimal results from generative AI models. This role is becoming essential as AI applications grow.

7 Tips to Become a Better Prompt Engineer

1- Be Clear and Specific

To ensure clarity and precision, always provide detailed and specific prompts. Avoid vague or general instructions, as they can lead to misunderstandings or irrelevant outputs. For example, rather than saying, "Write about productivity," specify your needs: "Write five actionable tips for improving productivity while working remotely, focusing on time management, workspace setup, and communication."

This approach helps the AI deliver tailored, useful responses that meet your expectations.

The same applies to AI art generation, although image models respond to their own techniques, such as naming the subject, style, and composition explicitly.
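Returning to the productivity example, one way to make specificity a habit is to assemble prompts from named parts instead of free text. The sketch below is only an illustration: `build_prompt` and its parameters are hypothetical names, not part of any library.

```python
def build_prompt(task: str, count: int, audience: str, focus_areas: list[str]) -> str:
    """Assemble a specific prompt from explicit parts (hypothetical helper)."""
    focus = ", ".join(focus_areas)
    return (
        f"Write {count} actionable {task} for {audience}, "
        f"focusing on: {focus}."
    )


prompt = build_prompt(
    task="tips for improving productivity",
    count=5,
    audience="people working remotely",
    focus_areas=["time management", "workspace setup", "communication"],
)
print(prompt)
```

Forcing yourself to fill in every argument makes it obvious when a prompt is still missing an audience, a quantity, or a focus.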

2- Iterate and Refine

To get the best results, test your prompt repeatedly and analyze the AI's responses. Refine and tweak the wording until you get the exact output you’re looking for. Iteration ensures accuracy and relevance.

3- Use Role-Based Instructions

Want better responses from AI? Try assigning it a role to guide the output. For example, instead of a generic prompt, say, "You’re an expert historian. Explain the impact of the Industrial Revolution." Role-based instructions help tailor the AI’s answers, making them more focused, relevant, and closer to what you need.
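When you call a model from code, the role usually goes into a separate "system" message. The sketch below assumes the chat-style message list that many providers accept (a list of dictionaries with `role` and `content` keys); the exact format depends on your provider, and `with_role` is a made-up helper name.

```python
def with_role(role_description: str, question: str) -> list[dict[str, str]]:
    """Return a chat-style message list with the role in a system message.

    The {"role": ..., "content": ...} shape is an assumption; check your
    provider's documentation for the format it expects.
    """
    return [
        {"role": "system", "content": role_description},
        {"role": "user", "content": question},
    ]


messages = with_role(
    "You are an expert historian.",
    "Explain the impact of the Industrial Revolution.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```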

4- Break Down Complex Tasks

If you’re dealing with a complex prompt, don’t throw it all at the AI at once. Instead, break it down into smaller, clear steps.

For instance, instead of asking, “Write a detailed guide on building a website,” try splitting it into parts like “Explain how to choose a domain” or “Describe how to set up web hosting.” This makes it easier for the AI to generate accurate and focused responses.
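In code, the same idea becomes a loop that sends one focused prompt per step. This is a minimal sketch: `run_steps` is a hypothetical helper, and `fake_ask` is a stand-in that only echoes the prompt, so you would swap in a real model call.

```python
from typing import Callable


def run_steps(topic: str, steps: list[str], ask: Callable[[str], str]) -> list[str]:
    """Send each step as its own prompt and collect the answers in order."""
    answers = []
    for number, step in enumerate(steps, start=1):
        prompt = f"Part {number} of {len(steps)} of a guide on {topic}: {step}"
        answers.append(ask(prompt))
    return answers


def fake_ask(prompt: str) -> str:
    """Stand-in for a real model call; it just echoes the prompt."""
    return f"[model answer to: {prompt}]"


steps = [
    "Explain how to choose a domain.",
    "Describe how to set up web hosting.",
]
answers = run_steps("building a website", steps, fake_ask)
for answer in answers:
    print(answer)
```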

5- Specify the Format

Want the AI to nail your request? Specify the format you’re looking for, whether it’s a list, bullet points, an essay, or a table. For example, saying, “List 3 pros and 3 cons” gives the AI a clear direction.

Writers benefit from this because a stated format makes the output easier to slot into the content they are building and ensures it fits the intended purpose. It keeps responses organized, concise, and easy for readers to follow.
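A stated format also lets a program check the reply. The sketch below assumes you asked for the pros and cons as JSON; the instruction wording, the `parse_pros_cons` helper, and the sample reply are all illustrative, and real replies may need extra cleanup before they parse.

```python
import json

# Instruction you would append to the prompt (wording is an assumption).
FORMAT_INSTRUCTION = (
    'Respond only with JSON of the form {"pros": [...], "cons": [...]}, '
    "with exactly 3 short strings in each list."
)


def parse_pros_cons(reply: str) -> dict[str, list[str]]:
    """Parse the reply and verify it follows the requested format."""
    data = json.loads(reply)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key in ("pros", "cons"):
        if not isinstance(data.get(key), list) or len(data[key]) != 3:
            raise ValueError(f"expected 3 items under {key!r}")
    return data


# Hand-written sample standing in for a model reply.
sample_reply = '{"pros": ["fast", "cheap", "simple"], "cons": ["rigid", "new", "small"]}'
result = parse_pros_cons(sample_reply)
print(result["pros"])
```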

6- Provide Context

If you want the AI to deliver exactly what you need, provide some background context. Instead of just asking a question, add details about the purpose or audience. For example, rather than asking, "How do I explain recursion?", write, "I'm teaching a beginner programming class. How do I explain recursion in under five minutes?" The more context you give, the better the AI can tailor its response, ensuring it's accurate, relevant, and useful.

7- Use Examples

Include sample responses to guide the AI’s output style.

When learning or coding, including examples helps the AI understand what you want. For instance, instead of saying, “Explain how to use a loop in Python,” say, “Explain how to use a for loop in Python with an example that prints numbers 1 to 5.” Concrete examples ensure the AI provides the right explanation and format.
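Supplying sample input and output pairs in the prompt is often called few-shot prompting. Here is a minimal sketch of how such a prompt could be assembled; the `few_shot_prompt` helper, the `Input:`/`Output:` labels, and the sample pairs are all illustrative choices.

```python
def few_shot_prompt(examples: list[tuple[str, str]], new_input: str) -> str:
    """Build a prompt that shows worked examples before the real input."""
    lines = []
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")  # blank line between examples
    lines.append(f"Input: {new_input}")
    lines.append("Output:")  # left open for the model to complete
    return "\n".join(lines)


# Made-up examples showing the terse style we want back.
examples = [
    ("The meeting ran far longer than planned.", "Meeting overran."),
    ("We have not yet received the signed contract.", "Contract pending."),
]
prompt = few_shot_prompt(examples, "The server was restarted after the update.")
print(prompt)
```

Two or three examples are usually enough to show the style; the model continues the pattern from the final open `Output:` line.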

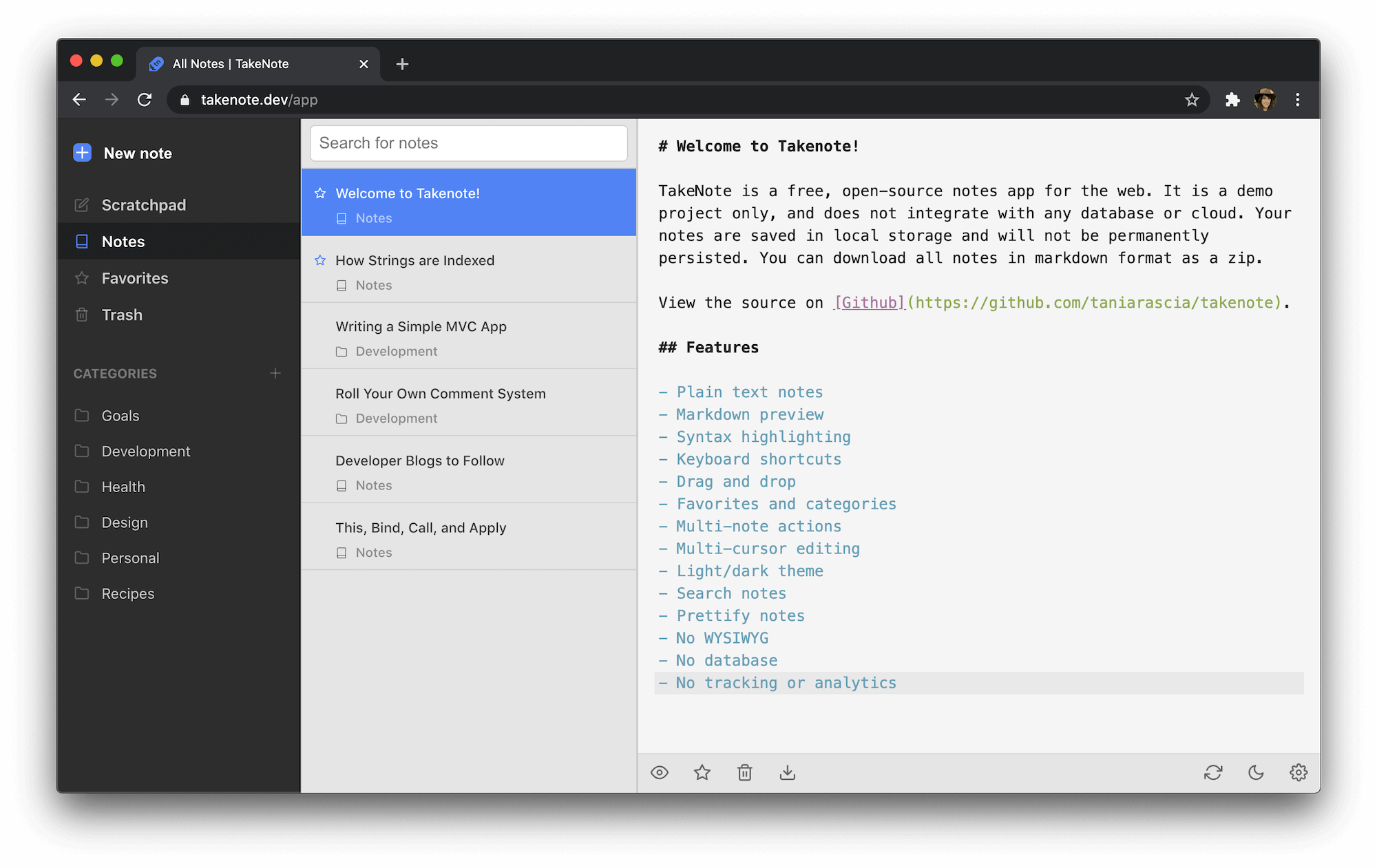

How to Collect and Archive Prompts

- Organize by Category: Create folders for types of prompts (e.g., marketing, healthcare, coding, storytelling).

- Use Note-Taking Apps: Tools like Notion, Obsidian, or Evernote make it easy to store and tag prompts.

- Version Control: Track prompt iterations. Note what works and what doesn’t.

- Template Libraries: Maintain reusable templates for common tasks.
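If you prefer plain files to a note-taking app, categories and versions fit in a small JSON archive. The sketch below is one possible layout, not a standard: the `save_prompt` helper, the file name, and the field names are all assumptions.

```python
import json
import tempfile
from datetime import date
from pathlib import Path


def save_prompt(archive: Path, category: str, name: str, text: str, note: str = "") -> int:
    """Append a new version of a prompt to a JSON archive; return its version number."""
    data = json.loads(archive.read_text(encoding="utf-8")) if archive.exists() else {}
    versions = data.setdefault(category, {}).setdefault(name, [])
    versions.append({
        "version": len(versions) + 1,
        "saved_on": date.today().isoformat(),
        "text": text,
        "note": note,  # what worked or what did not
    })
    archive.write_text(json.dumps(data, indent=2), encoding="utf-8")
    return len(versions)


# A temporary folder keeps the demo from leaving files behind.
with tempfile.TemporaryDirectory() as folder:
    archive = Path(folder) / "prompts.json"
    save_prompt(archive, "marketing", "tagline", "Write a tagline.", note="too vague")
    latest_version = save_prompt(
        archive, "marketing", "tagline",
        "Write 3 taglines under 8 words for a budget travel app.",
        note="much more usable",
    )
    stored = json.loads(archive.read_text(encoding="utf-8"))

print(latest_version)
```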

How to Study and Compare Prompt Results

Want to improve your prompts? Start by documenting AI responses to track performance. Compare metrics like relevance, creativity, and clarity to identify patterns. Try A/B testing by tweaking prompts slightly to see what works best.

Tools like PromptLayer (for logging and versioning prompts) and LangChain (a framework for building and chaining LLM calls) can help with tracking and analysis. Refining your approach this way leads to more consistent results.
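Before reaching for a dedicated tool, an A/B comparison can be as simple as rating each response and averaging per prompt variant. This sketch assumes you rate responses by hand on a 1 to 5 scale; the ratings shown are placeholders, and `compare_variants` is a made-up helper.

```python
from statistics import mean


def compare_variants(ratings: dict[str, list[int]]) -> tuple[str, dict[str, float]]:
    """Return the best-rated variant and the mean rating of every variant."""
    averages = {variant: mean(scores) for variant, scores in ratings.items()}
    best = max(averages, key=averages.get)
    return best, averages


# Placeholder ratings (1-5) for three responses per prompt variant.
ratings = {
    "A: Write about productivity": [2, 3, 2],
    "B: Write five tips for remote workers": [4, 5, 4],
}
best, averages = compare_variants(ratings)
print(best)
```

With only a handful of ratings the difference may be noise, so collect several responses per variant before drawing conclusions.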

Are Prompt Results Based on the LLM Used?

Yes, prompt results vary based on the LLM (Large Language Model) powering the AI.

Each model is trained on different data and tuned in different ways, even when the underlying architectures are similar. For example, GPT-4 is known for nuanced text generation, while Claude may prioritize ethical constraints. The choice of model affects tone, accuracy, and creativity.

Tools to Compare LLM Outputs:

- OpenAI Playground (for GPT models)

- HuggingFace (for various open-source models)

- Perplexity.ai (an AI answer engine that cites its sources, useful for checking output quality)

You can also download and run several LLMs offline; we have covered this in several articles.

Can You Run ChatGPT Alternatives Offline?

Yes. Many AI models can run offline, offering privacy and flexibility.

Offline AI Tools:

- LLaMA (from Meta)

- GPT4All

- Alpaca

- Mistral

We’ve covered detailed guides on running these on Windows, Linux, macOS, and web-based setups at medevel.com.

Taking Notes About Your Prompts

Keep track of your prompts to improve results. When a prompt works well, jot down what made it effective. If a prompt falls flat, note what went wrong. Look for patterns in what consistently works and what doesn’t. This helps you fine-tune future prompts. Also, list potential tweaks or new ideas to keep improving your approach.

In summary, here is what to track:

- What Worked: Record successful prompts and why they worked.

- What Didn’t Work: Note prompts that failed and why.

- Patterns: Identify patterns in successful prompts.

- Ideas for Improvement: Log ways to enhance future prompts.

Can You Use Prompts to Train AI?

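Yes, to a degree. Prompts alone do not change a model's weights, and training an LLM from scratch requires massive infrastructure. You can, however, use collections of prompts and desired responses as data to fine-tune smaller models, or customize responses in models like ChatGPT through custom instructions. Techniques like LoRA (Low-Rank Adaptation) or prompt tuning adapt model behavior for specific tasks while training only a small number of parameters.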
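To see why LoRA is practical on modest hardware: it freezes the original weight matrix and learns the update as the product of two much smaller matrices. The arithmetic below is a rough sketch for a single layer; the 4096 by 4096 size and the rank of 8 are illustrative numbers, not taken from any particular model.

```python
def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two low-rank factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative layer size and rank (assumptions, not a specific model).
d_out, d_in, rank = 4096, 4096, 8

full = d_out * d_in                       # weights updated by full fine-tuning
lora = lora_trainable_params(d_out, d_in, rank)

print(full, lora, full // lora)  # 16777216 65536 256
```

In this example the LoRA update trains 256 times fewer parameters for that layer than full fine-tuning would.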

Conclusion and Recommendations

Prompt engineering is a critical skill for leveraging AI’s full potential. By crafting clear, detailed prompts and using iterative refinement, you’ll enhance your AI interactions.

- Experiment with different prompt structures.

- Archive effective prompts for future use.

- Explore different LLMs and tools.

Stay sharp, stay curious, and refine your prompts. AI is only as good as the instructions you give it.