Promptfoo: Streamline Your LLM Testing - Test Your Prompts Locally

If you're diving into the world of AI and large language models, you've probably felt the frustration of fine-tuning prompts and comparing different models. It's a bit like trying to nail jelly to a wall: messy and often disappointing.

Check out Promptfoo, an open-source tool for testing and evaluating prompts and models that's changing the game for developers working with large language models (LLMs).

What's Promptfoo All About?

At its core, Promptfoo brings the concept of test-driven development to the world of AI. If you've ever wished for a way to systematically improve your AI applications, this tool might be your new best friend.

It's designed to help you optimize and secure your LLM applications, whether you're working with GPT, Claude, Gemini, Llama, or any other model out there.
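To make the test-driven idea concrete, here is a toy Python sketch of what an eval loop does conceptually: render a prompt template with test variables, send it to each model, and check every output against a set of assertions. The stub "models", the assertion names `icontains` and `max_words`, and the helpers `check` and `run_suite` are all hypothetical stand-ins, not Promptfoo's API. Promptfoo itself expresses the same ideas declaratively in a config file (prompts, providers, and tests with variables and assertions) rather than in code like this.

```python
"""Toy, test-driven prompt evaluation loop.

Hypothetical sketch: the providers, test cases, and assertion names are
illustrative stand-ins, not Promptfoo's actual API or config schema.
"""
from typing import Callable, Dict, List

Provider = Callable[[str], str]


# Hypothetical stand-ins for real model calls (e.g. two different LLMs).
def terse_model(prompt: str) -> str:
    return "Paris."


def chatty_model(prompt: str) -> str:
    return "Great question! The capital of France is Paris, of course."


PROMPT_TEMPLATE = "Answer in one word: what is the capital of {country}?"

# Declarative test cases: input variables plus expectations on the output.
TEST_CASES: List[Dict] = [
    {"vars": {"country": "France"},
     "assert": [("icontains", "paris"), ("max_words", 3)]},
]


def check(kind: str, value, output: str) -> bool:
    """Evaluate one (hypothetical) assertion type against a model output."""
    if kind == "icontains":
        return str(value).lower() in output.lower()
    if kind == "max_words":
        return len(output.split()) <= value
    raise ValueError(f"unknown assertion type: {kind}")


def run_suite(providers: Dict[str, Provider]) -> Dict[str, float]:
    """Return each provider's pass rate over all test cases."""
    scores = {}
    for name, provider in providers.items():
        passed = 0
        for case in TEST_CASES:
            output = provider(PROMPT_TEMPLATE.format(**case["vars"]))
            # A test case passes only if every assertion holds.
            if all(check(kind, value, output) for kind, value in case["assert"]):
                passed += 1
        scores[name] = passed / len(TEST_CASES)
    return scores


if __name__ == "__main__":
    print(run_suite({"terse": terse_model, "chatty": chatty_model}))
```

Running it prints a pass rate per model. The chatty stub fails the word-limit check even though its answer is factually right, which is the kind of regression a written-down test makes visible instead of leaving it to gut feeling.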

Key Features That'll Make Your Life Easier

- Automated Testing and Benchmarking:

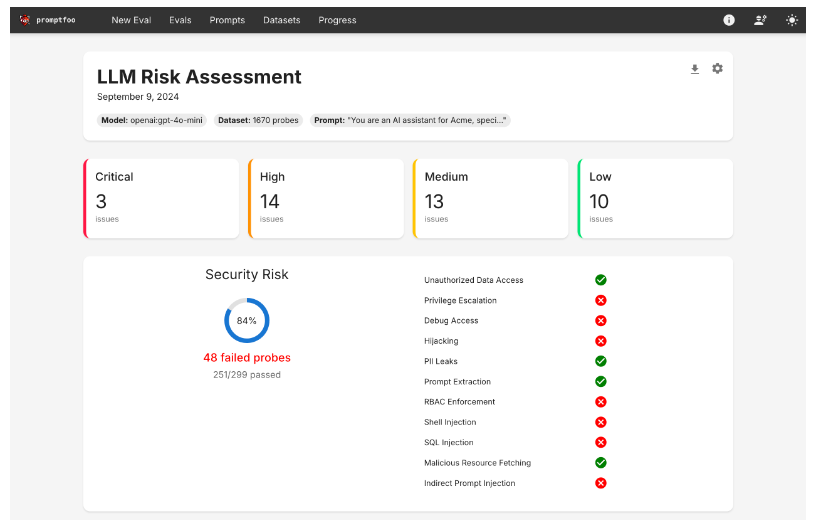

Imagine being able to set up tests for your AI models as easily as you do for regular code. Promptfoo lets you create declarative configurations to test and compare different models. The best part? It's fast, thanks to features like caching, concurrency, and live reloading.

- Security First:

In the AI world, security isn't just a buzzword; it's crucial. Promptfoo includes tools for "red teaming" and pentesting your LLM applications, so you can automatically probe for vulnerabilities such as prompt injection and jailbreaks before they become real-world problems. It's like having a security expert on your team, working 24/7.

- Flexibility Is Key:

Whether you prefer working from the command line, need a library to integrate into your existing tools, or want something that plays nicely with your CI/CD pipeline, Promptfoo has you covered. It supports a wide range of LLM providers, including big names like OpenAI, Anthropic, and Google, as well as platforms like Hugging Face. You can even hook it up to custom APIs if that's your thing.

- Objective Performance Scoring:

Gone are the days of guessing whether your latest tweak actually improved things. Promptfoo lets you define custom metrics to score and benchmark your model outputs automatically. It's like having a referee for your AI experiments, showing whether each change moves you in the right direction.

- RAG Testing Made Simple:

If you're working with retrieval-augmented generation (RAG) systems, you know how tricky they can be to get right. Promptfoo simplifies the process of evaluating these workflows, helping you build more reliable AI systems without the endless trial and error.

- Simple, declarative test cases: Define evals without writing code or working with heavy notebooks.

- Language agnostic: Use Python, JavaScript, or any other language.

- Open-source: LLM evals are a commodity and should be served by 100% open-source projects with no strings attached.

- Private: This software runs completely locally. The evals run on your machine and talk directly with the LLM.

- Rich development documentation
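The custom-metric and RAG points above can be illustrated with a small scoring function. Promptfoo documents a Python assertion hook: a Python file referenced from a test's assertions that defines `get_assert(output, context)` and returns a bool, a float score, or a dict with pass/score/reason fields. The sketch below assumes that hook shape as I understand it; verify the exact signature and key names against the current Promptfoo docs. The `expected_facts` test variable, the `fact_coverage` helper, and the 0.5 pass threshold are hypothetical choices: the metric simply checks what fraction of required facts a RAG answer actually mentions.

```python
"""Hypothetical custom metric for a RAG answer, written in the shape of
promptfoo's Python assertion hook (get_assert). Verify the hook signature
and the returned keys against the current promptfoo docs.
"""
from typing import Any, Dict, List

PASS_THRESHOLD = 0.5  # hypothetical: require at least half the facts


def fact_coverage(output: str, facts: List[str]) -> float:
    """Fraction of expected facts that appear (case-insensitively) in output."""
    if not facts:
        return 1.0  # nothing required, so nothing is missing
    text = output.lower()
    found = sum(1 for fact in facts if fact.lower() in text)
    return found / len(facts)


def get_assert(output: str, context: Dict[str, Any]) -> Dict[str, Any]:
    # 'expected_facts' is a hypothetical test variable: a list of strings
    # the answer must mention, supplied through the test case's vars.
    facts = context.get("vars", {}).get("expected_facts", [])
    score = fact_coverage(output, facts)
    return {
        "pass": score >= PASS_THRESHOLD,
        "score": score,
        "reason": f"{score:.0%} of expected facts found",
    }
```

Calling `get_assert("The Eiffel Tower is in Paris and opened in 1889.", {"vars": {"expected_facts": ["Paris", "1889", "Gustave Eiffel"]}})` yields a score of about 0.67 and a pass. Plain substring matching is deliberately crude (it would count "Paris" inside "comparison"); a model-graded check would be more robust, at the cost of an extra LLM call per test.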

Why You Might Need Promptfoo

1- You're Tired of Flying Blind

If you've been developing LLM applications by feel, you know how hit-or-miss it can be. Promptfoo gives you concrete data to work with, helping you make informed decisions about your models and prompts.

2- Security Keeps You Up at Night

AI security is a hot topic, and for good reason. With Promptfoo's automated vulnerability assessments, you can sleep a little easier knowing you're proactively identifying potential issues.

3- You're All About Efficiency

Time is money, and Promptfoo can save you plenty of both. By automating comparisons and evaluations, you can iterate faster and focus on the creative aspects of your work.

4- Consistency Is Key

If you're building AI applications for clients or a larger team, consistency is crucial. Promptfoo helps ensure predictable outcomes across different models and scenarios.

5- You Love Data-Driven Decisions

With custom metrics and benchmarking, Promptfoo turns the art of prompt engineering into more of a science. If you prefer hard data over gut feelings, this tool is for you.

6- RAG Is Your Thing

For those deep in the weeds of retrieval-augmented generation, Promptfoo offers a lifeline. It simplifies the process of testing and refining these complex systems.

7- You're Scaling Up

As your AI projects grow, manual testing becomes less feasible. Promptfoo grows with you, fitting into CI/CD pipelines and supporting large-scale evaluations.
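The CI/CD point in item 7 usually comes down to one rule: the pipeline fails when eval quality drops. Below is a minimal Python sketch of such a pass-rate gate. It assumes per-test results have already been exported as a JSON list of objects with a boolean `success` field; that record format, the `pass_rate` and `gate` helpers, and the 90% default threshold are hypothetical illustrations, not Promptfoo's actual output schema or CLI behavior.

```python
"""Minimal CI gate: fail the build when the eval pass rate drops too low.

Hypothetical sketch: assumes results were exported as a JSON list of
objects with a boolean "success" field. That format is an illustration,
not promptfoo's actual output schema.
"""
import json
import sys
from typing import Dict, List


def pass_rate(results: List[Dict]) -> float:
    """Share of results marked successful (0.0 for an empty run)."""
    if not results:
        return 0.0
    return sum(1 for r in results if r.get("success")) / len(results)


def gate(results: List[Dict], threshold: float = 0.9) -> int:
    """Return a process exit code: 0 if the pass rate meets the threshold."""
    rate = pass_rate(results)
    print(f"pass rate {rate:.1%} (threshold {threshold:.0%})")
    return 0 if rate >= threshold else 1


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: python ci_gate.py results.json
    with open(sys.argv[1]) as fh:
        sys.exit(gate(json.load(fh)))
```

Treating an empty run as a 0% pass rate is a deliberate choice: a misconfigured eval that produces no results should block the merge rather than slip through.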

Supported Platforms

- Linux

- Windows

- macOS

The Bottom Line

Tools like Promptfoo are becoming essential for anyone shipping LLM applications. It's not just about making development easier (though it certainly does that). It's about building more reliable, secure, and effective AI applications.

Whether you're a solo developer experimenting with LLMs or part of a large team building the next big AI-powered application, Promptfoo offers a structured, data-driven approach to development that can elevate your work.

License

MIT License