Run DeepSeek-R1 on WebGPU in Your Browser: A Revolution in Browser-Based AI

Are you ready to experience the future of in-browser AI, where your data privacy is paramount, and performance is astonishing? Say hello to DeepSeek-R1 WebGPU, the next-generation reasoning model that brings powerful AI capabilities right into your browser!

What is DeepSeek-R1 WebGPU?

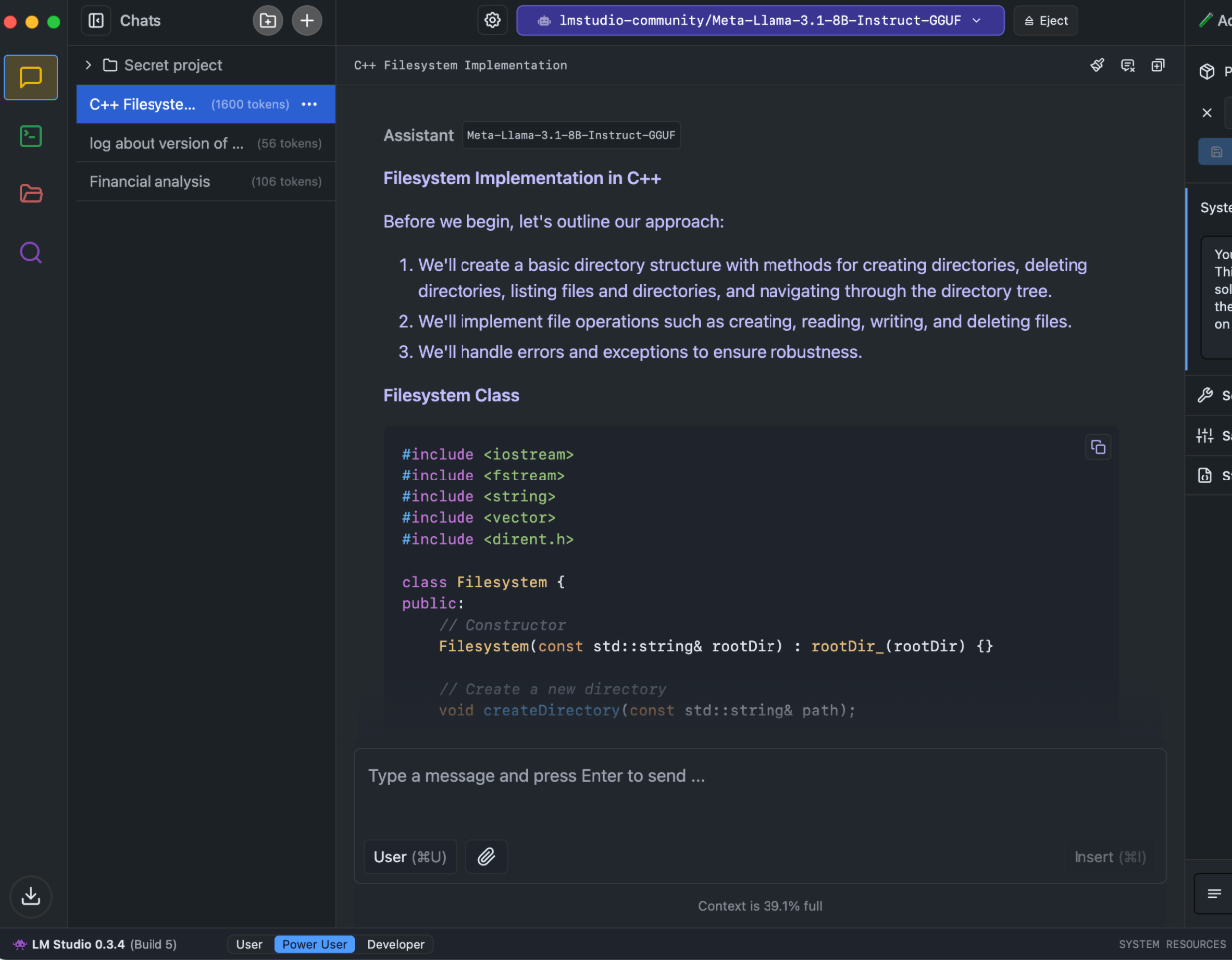

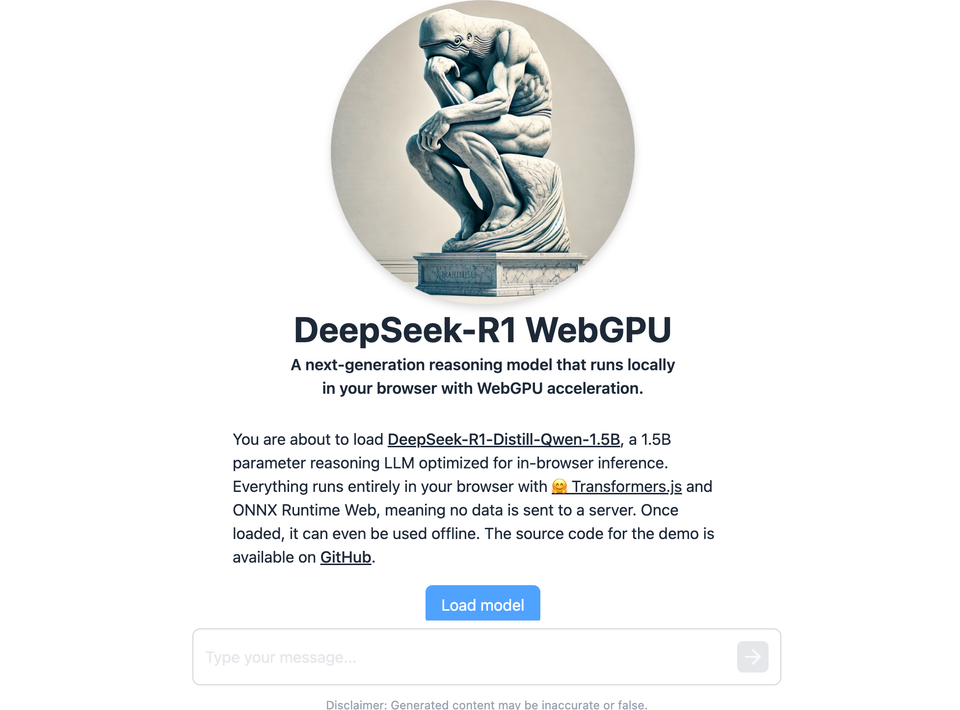

DeepSeek-R1 WebGPU is a cutting-edge language model with 1.5 billion parameters, designed to run locally in your browser. It uses WebGPU acceleration for fast, efficient processing, and because inference happens on your own device, your prompts never have to leave it.
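To get a feel for why a 1.5-billion-parameter model can fit in a browser tab, here is a rough back-of-the-envelope sketch. The function names and the list of precisions are illustrative assumptions, not the demo's confirmed settings, and the estimate covers weights only (no activations or cache).

```typescript
// Rough estimate of weight storage for a model at a given precision.
// Ignores activations, KV cache, and runtime overhead, so real usage is higher.
function estimateWeightBytes(parameterCount: number, bitsPerWeight: number): number {
  return (parameterCount * bitsPerWeight) / 8;
}

function toGigabytes(bytes: number): number {
  return bytes / 1e9;
}

const PARAMETER_COUNT = 1.5e9; // parameter count stated in the article

// Precisions below are illustrative assumptions.
for (const bits of [32, 16, 8, 4]) {
  const gb = toGigabytes(estimateWeightBytes(PARAMETER_COUNT, bits));
  console.log(`${bits}-bit weights: ~${gb.toFixed(2)} GB`);
}
```

Under these assumptions the weights come to roughly 6 GB at 32 bits, 3 GB at 16 bits, 1.5 GB at 8 bits, and 0.75 GB at 4 bits, which is why quantized weights matter so much for in-browser use.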

The demo was built by the innovative minds at Hugging Face and is available as a Hugging Face Space, while the underlying model is a distilled DeepSeek-R1 variant released by DeepSeek. The best part? It operates entirely within your browser using 🤗 Transformers.js and ONNX Runtime Web. This means that once the model files have been downloaded, your prompts and responses stay on your device and no server handles inference. Even better, once loaded, DeepSeek-R1 WebGPU can be used offline, making it an ideal choice for secure, portable AI tasks.
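If you want to try something similar in your own page, a minimal sketch with Transformers.js might look like the following. The model id, the `dtype` values, and the helper names (`chooseLoadOptions`, `loadGenerator`, `PipelineFactory`) are assumptions for illustration; check the demo's source for what it actually uses. The `pipeline` function is passed in as a parameter so the sketch compiles without the package installed.

```typescript
// Options passed to the Transformers.js pipeline factory.
interface LoadOptions {
  device: "webgpu" | "wasm";
  dtype: string;
}

// Shape of the factory we expect; the real `pipeline` export is more general.
type PipelineFactory = (
  task: string,
  model: string,
  options: LoadOptions
) => Promise<unknown>;

// Assumed model id; verify against the Space's source before relying on it.
const MODEL_ID = "onnx-community/DeepSeek-R1-Distill-Qwen-1.5B-ONNX";

// Prefer WebGPU with a compact precision, otherwise fall back to WebAssembly.
// The dtype choices are illustrative assumptions.
function chooseLoadOptions(webgpuAvailable: boolean): LoadOptions {
  return webgpuAvailable
    ? { device: "webgpu", dtype: "q4f16" }
    : { device: "wasm", dtype: "q8" };
}

// Creates a text-generation pipeline using the injected factory.
async function loadGenerator(
  pipeline: PipelineFactory,
  webgpuAvailable: boolean
): Promise<unknown> {
  return pipeline("text-generation", MODEL_ID, chooseLoadOptions(webgpuAvailable));
}

// In a browser app (not executed here):
//   import { pipeline } from "@huggingface/transformers";
//   const generator = await loadGenerator(pipeline as PipelineFactory, true);
```

Injecting the factory keeps the backend choice easy to test on its own; in a real app you would likely call `pipeline` directly.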

Why Should Developers Try It?

1. Privacy and Security: With DeepSeek-R1 WebGPU, the computations happen on your own device. Because processing is local, your prompts and the model's responses are not sent to external servers, which keeps your data private.

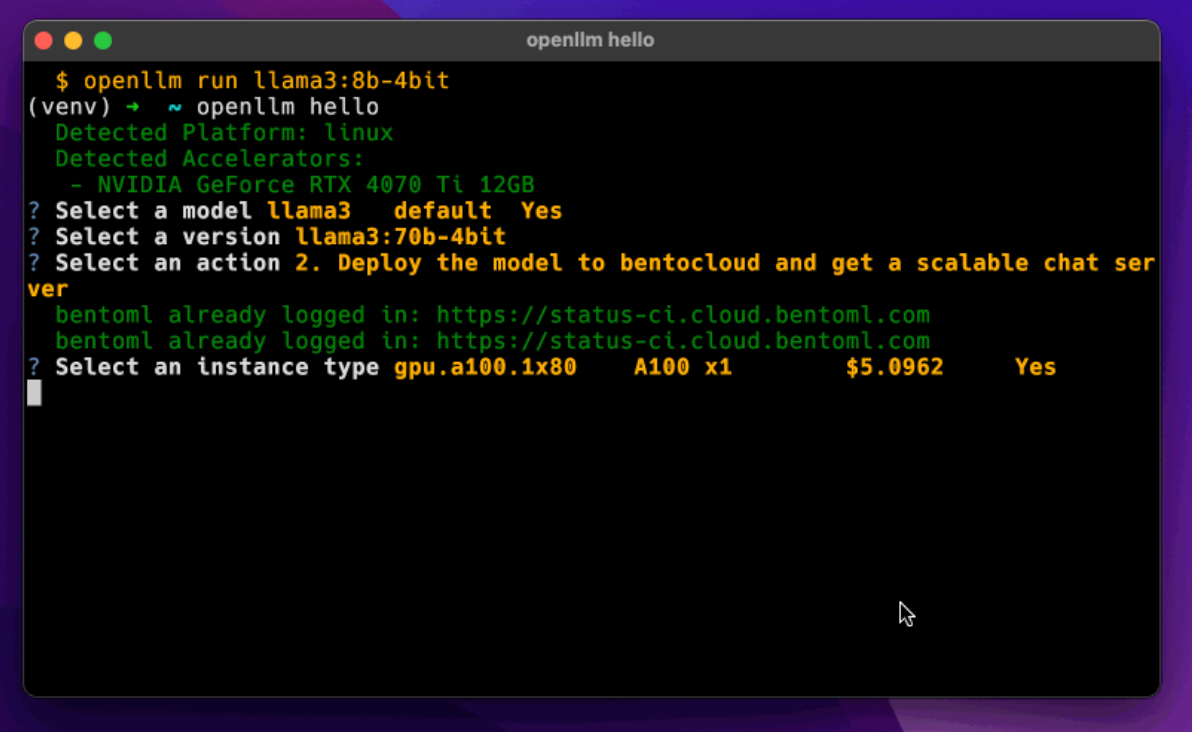

2. Performance: Powered by WebGPU, this model leverages the parallel processing capabilities of modern graphics processing units (GPUs). This leads to a significant boost in speed and responsiveness compared to running the same computations on the CPU in JavaScript or WebAssembly.

3. Accessibility and Flexibility: Being browser-based, it's incredibly easy to start with DeepSeek-R1 WebGPU. Whether you're developing complex AI-driven applications or experimenting with new AI models, setting up is as simple as loading the webpage in a browser that supports WebGPU.

4. Open Source and Community-Driven: The entire source code for the demo is available on GitHub, allowing developers to explore, modify, and even contribute to the development. This open-source approach fosters a collaborative environment where improvements and innovations thrive.

How Does It Work on WebGPU?

WebGPU is an emerging web standard designed to provide modern graphics and computation capabilities to web browsers. It offers a much-needed boost for web applications demanding high-performance computing, like AI models and 3D graphics.

For DeepSeek-R1, WebGPU accelerates the underlying computations required for the model to process input and generate responses. Running this work on the GPU improves both speed and efficiency, making it feasible to run a model of this size directly in the browser. The first visit still has to download the model files, but once they are loaded, responses are generated locally.
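Since not every browser exposes WebGPU yet, a page would typically check for it before loading a model. Here is a minimal sketch of that check using the standard `navigator.gpu.requestAdapter()` call. The `GpuLike` and `NavigatorLike` types and the `hasWebGPU` name are hypothetical helpers so the snippet compiles without WebGPU type definitions.

```typescript
// Minimal structural types so the sketch compiles without WebGPU typings.
interface GpuLike {
  requestAdapter(): Promise<object | null>;
}

interface NavigatorLike {
  gpu?: GpuLike;
}

// Resolves to true only if WebGPU is exposed and an adapter is returned.
async function hasWebGPU(nav: NavigatorLike | undefined): Promise<boolean> {
  if (!nav || !nav.gpu) return false; // API not exposed at all
  try {
    const adapter = await nav.gpu.requestAdapter();
    return adapter !== null; // null means no suitable GPU adapter
  } catch {
    return false; // treat any failure as "not available"
  }
}

// In a page (not executed here):
//   const ok = await hasWebGPU(navigator as NavigatorLike);
```

Requesting an adapter, rather than only checking that `navigator.gpu` exists, catches the case where the API is present but no usable GPU is available.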

Get Started

To get started with DeepSeek-R1 WebGPU, visit the Hugging Face Space. There, you can interact with the model directly and see it in action. Once the model has loaded, you can even try it offline to truly appreciate its capabilities without any network dependency.
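If you are curious whether the model files are already stored locally before going offline, one option is to look in the browser's Cache Storage. The sketch below assumes Transformers.js keeps downloaded files in a cache named `transformers-cache` and that cached URLs contain the model id; both are assumptions worth verifying in your browser's developer tools. The `*Like` interfaces and `countCachedFiles` are hypothetical helpers that mirror the standard `CacheStorage` API.

```typescript
// Minimal structural types mirroring the browser Cache Storage API.
interface RequestLike {
  url: string;
}

interface CacheLike {
  keys(): Promise<readonly RequestLike[]>;
}

interface CacheStorageLike {
  has(name: string): Promise<boolean>;
  open(name: string): Promise<CacheLike>;
}

// Assumed cache name; confirm it in your browser's developer tools.
const CACHE_NAME = "transformers-cache";

// Counts cached entries whose URL mentions the given model id.
async function countCachedFiles(
  storage: CacheStorageLike,
  cacheName: string,
  modelId: string
): Promise<number> {
  if (!(await storage.has(cacheName))) return 0; // nothing cached yet
  const cache = await storage.open(cacheName);
  const requests = await cache.keys();
  return requests.filter((request) => request.url.includes(modelId)).length;
}

// In a page (not executed here), with a model id of your choosing:
//   const n = await countCachedFiles(caches, CACHE_NAME, "your-model-id");
```

A count of zero suggests the files still need to be downloaded while you are online.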

So, why wait? Dive into the world of browser-based AI with DeepSeek-R1 WebGPU and experience firsthand the future of private, efficient, and powerful in-browser artificial intelligence. Whether you’re a developer looking to integrate AI into your next project or just curious about the capabilities of WebGPU, DeepSeek-R1 offers a unique and compelling opportunity to push the boundaries of what web applications can achieve.