Running LLMs on Apple Silicon M1: A Quantization Love Story

Unlock the power of AI on your Apple M1! Discover how quantization makes running large language models like LLaMA, GPT-NeoX, and more possible—without breaking a sweat. Perfect for developers, creators, and AI enthusiasts ready to push their machines to the limit

Table of Content

Let’s talk about running Large Language Models (LLMs) on your trusty Apple M1 machine. Whether you’re coding up medical chatbots, fine-tuning models for research, or just geeking out with AI experiments at home, the M1 is a powerhouse—but it has its limits.

That’s where quantization comes in to save the day.

Quantization? Yeah, let’s break it down. Imagine your model weights are like gold bars—super heavy and expensive to lug around. Quantization is like melting those gold bars into lighter coins.

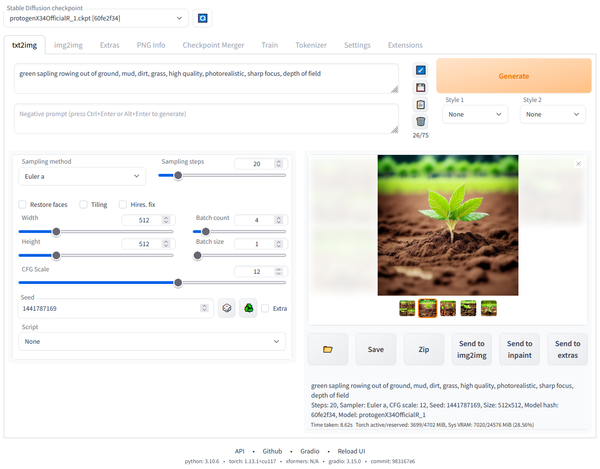

You still get the value of the gold, but now it’s easier to carry. In tech terms, quantization reduces the precision of the model weights from 32-bit floats to 8-bit integers—or even 4-bit if you’re feeling spicy. This makes the models smaller, faster, and way more efficient to run on machines like the M1, especially when memory is tight.

The M1 Memory Constraints

The M1’s got either 8GB or 16GB of memory. That’s solid for most things, but big models like GPT-J 6B or LLaMA 13B? They’re memory hogs. Trying to run them raw is like stuffing a king-sized bed into a tiny studio apartment—it just doesn’t fit. But here’s the trick: quantization.

It shrinks those models down so they can actually run on your machine. And if that’s not enough? You can offload parts of the model to disk. Slower? Yeah. But hey, it gets the job done.

Performance

The M1’s fast, no doubt about it. But when it comes to running massive models, it’s not gonna outpace a cloud rig or a beefy GPU. Bigger models like GPT-J will feel slow compared to what you’d get on dedicated hardware. Smaller models, though? They crush it. Running something like OPT-125M or DistilGPT-2 feels smooth and snappy—like your laptop’s showing off. So while the M1 might not win every race, it’s still got plenty of swagger for the lighter stuff.

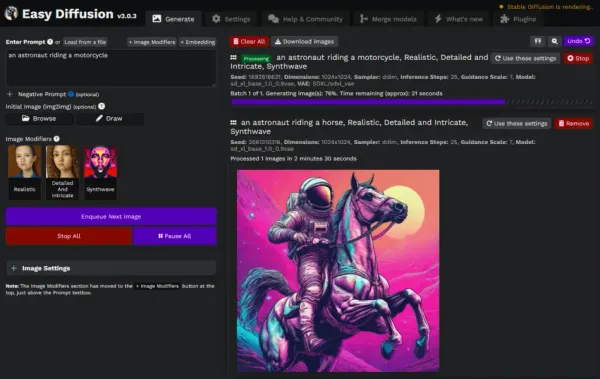

Software Optimization

Here’s the cool part: tools like PyTorch, TensorFlow, and Hugging Face Transformers have started supporting Apple’s Metal Performance Shaders (MPS). What’s that mean? It’s like giving your M1 a secret turbo button. MPS speeds up model inference, making everything faster and smoother.

I remember the first time I ran a model with MPS enabled—it was night and day. Snappier, slicker, just better. These little tweaks are what make working with AI on the M1 so fun. It’s all about pushing this chip to do more than you thought it could.

Why Quantization Rocks for M1 and Older Machines

Here’s the deal: quantization isn’t just a nerdy optimization trick—it’s a game-changer. For older machines or devices with limited resources (like the M1 with 8GB RAM), it’s the difference between “this model runs” and “this model crashes.” By reducing the precision of the weights, quantization makes models smaller and faster without sacrificing too much accuracy. It’s like giving your laptop a second wind.

Quantization reduces the precision of the model weights, making them smaller and faster to run. Many LLMs have been quantized to 8-bit or even 4-bit precision, allowing them to run on devices with limited resources like the M

So whether you’re running LLaMA, GPT-NeoX, or BERT, quantization is your best friend. It democratizes AI, making it accessible to anyone with a decent laptop and a passion for learning. And that’s something worth celebrating.

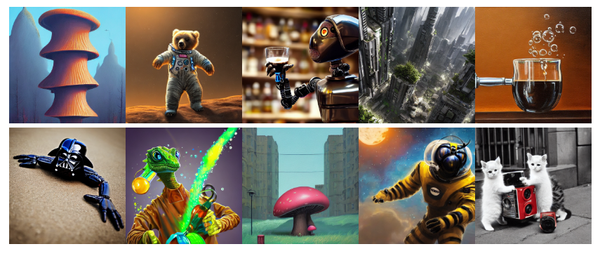

Now, let’s dive into some of the coolest models you can run on your M1 and how they vibe with quantization. Buckle up—it’s going to be a wild ride through code, creativity, and a touch of chaos.

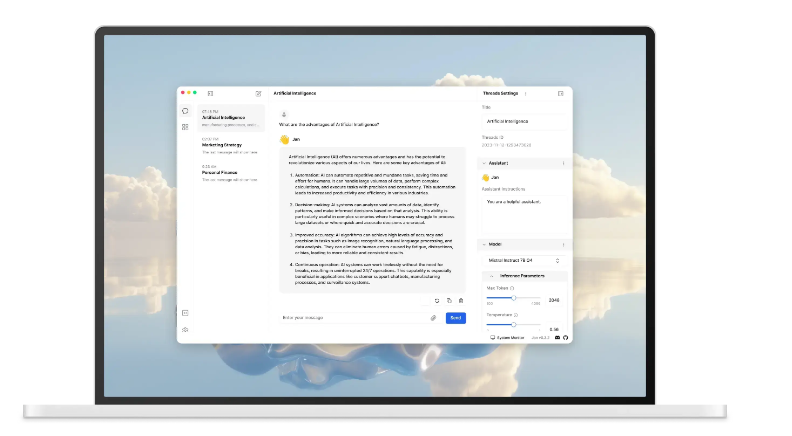

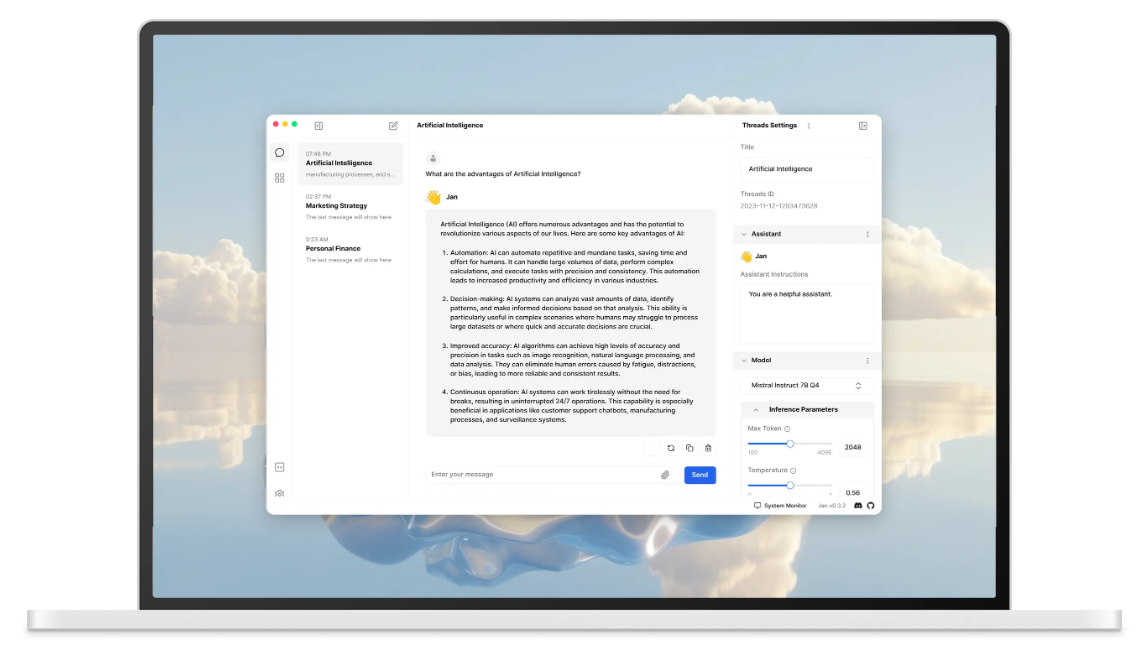

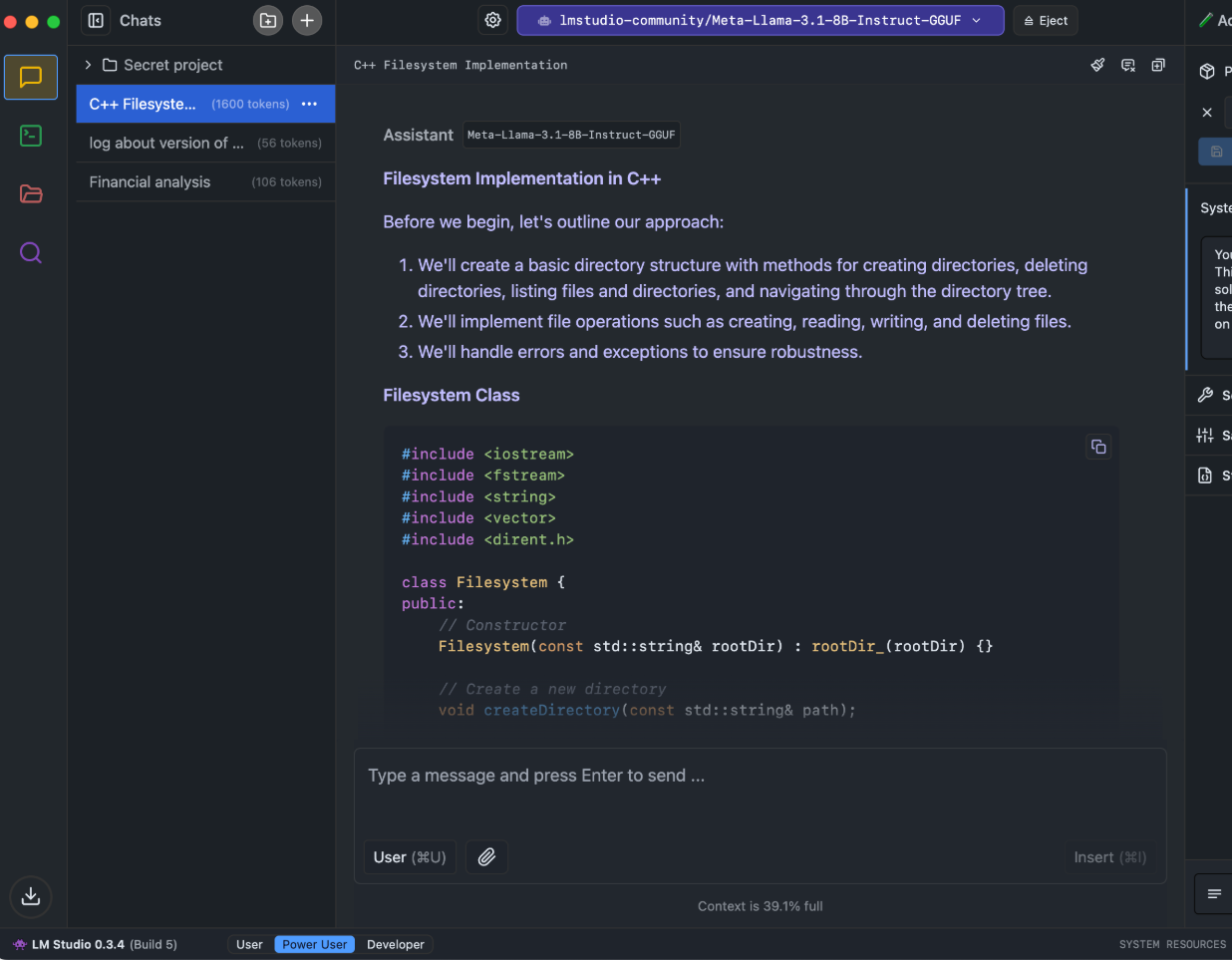

1- llama.cpp

If you haven’t heard of llama.cpp, you’re missing out. It’s like the Swiss Army knife of running LLaMA models locally. This bad boy lets you load quantized versions of LLaMA directly onto your M1 without breaking a sweat.

The best part? It’s written in C++, so it’s blazing fast. I’ve been using it to experiment with smaller LLaMA models, and honestly, it feels like having a mini supercomputer on my desk. If you’re into tinkering under the hood, this one’s for you.

2- ONNX Runtime

Let’s talk about ONNX Runtime because, honestly, it’s magic. ONNX (Open Neural Network Exchange) is like the universal translator for AI models. You can take a model trained in PyTorch, TensorFlow, or whatever, convert it to ONNX format, and then use ONNX Runtime to optimize it for your M1.

The runtime supports quantization natively, which means you can squeeze every last drop of performance out of your hardware. I’ve used it to run everything from text classifiers to image recognition models, and it’s smooth as butter. Plus, it works seamlessly with Apple’s Metal Performance Shaders (MPS), so your M1 gets to flex its GPU muscles.

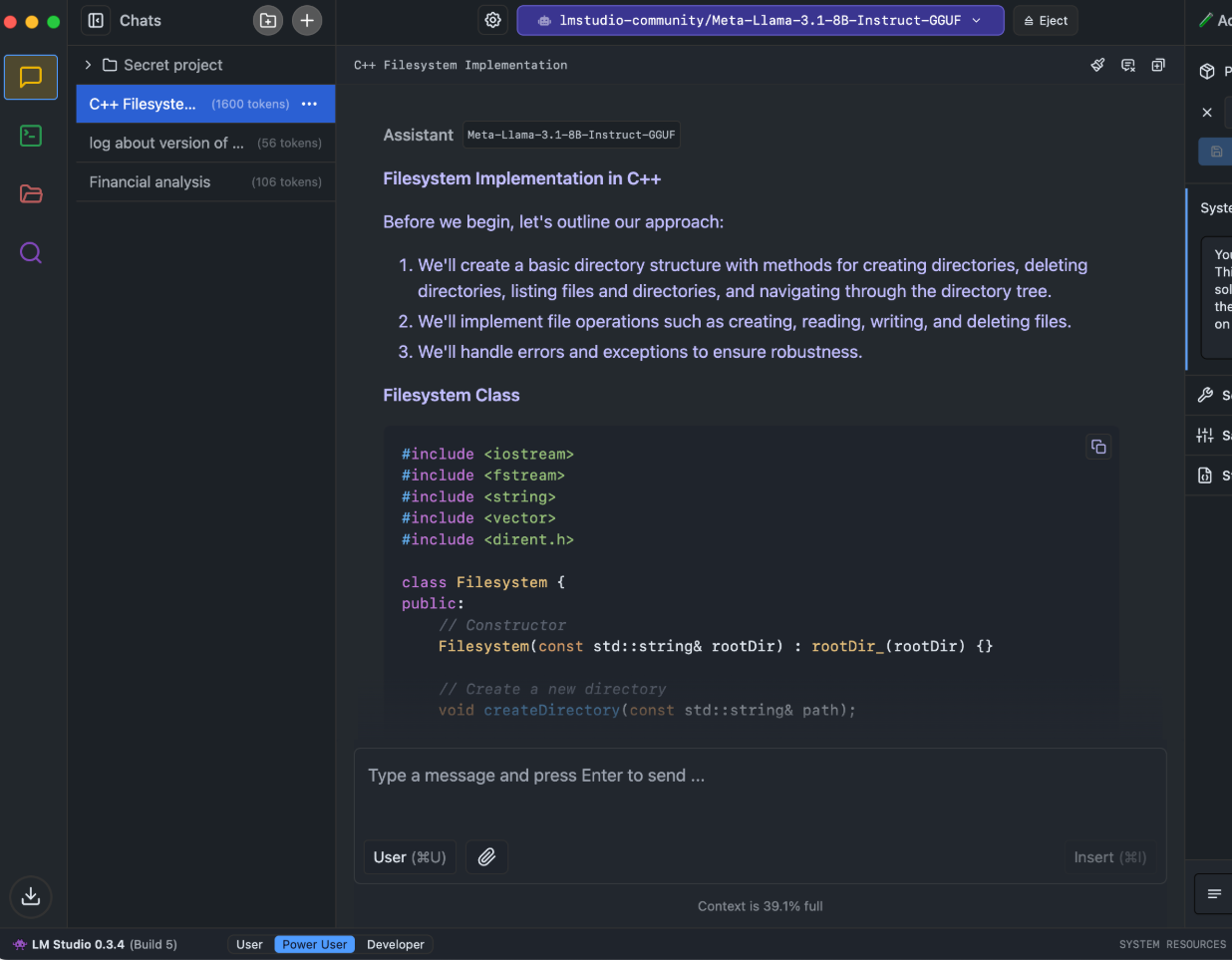

3- LLaMA / Alpaca (Quantized)

LLaMA and Alpaca are the dynamic duo of open-source language models. These models are like the cool kids in school who everyone wants to hang out with. But here’s the thing: they’re big. Like, really big. Running them on an M1 without quantization is like trying to fit an elephant into a Mini Cooper.

Enter quantization. With 4-bit or 8-bit quantized versions of LLaMA and Alpaca, you can run these models locally without melting your laptop. I’ve been using Alpaca for generating medical summaries, and it’s surprisingly good at understanding complex jargon.

4- GPT-NeoX 20B (Quantized)

Okay, so GPT-NeoX 20B is massive. Like, “I need a supercomputer” massive. But guess what? Thanks to quantization, you can actually run it on your M1. Sure, it’s not going to be lightning-fast, but it’s doable. And that’s kind of mind-blowing, right?

I mean, we’re talking about a model with 20 billion parameters running on a laptop. Quantization is the secret sauce here—it shrinks the model down to a manageable size while keeping most of its smarts intact. If you’re feeling adventurous, give it a shot. Just don’t expect real-time responses unless you’ve got the 16GB M1 Max.

5- OPT-125M / OPT-350M

These are the little siblings of the OPT family, and they’re perfect for the M1. At 125M and 350M parameters, they’re small enough to run smoothly without needing heavy quantization.

I’ve been using OPT-350M for quick brainstorming sessions and drafting emails—it’s like having a helpful assistant that doesn’t judge your grammar. If you’re new to running LLMs locally, start here. They’re lightweight, easy to set up, and still pack a punch.

6- GPT-Neo / GPT-J

GPT-Neo and GPT-J are like the OGs of open-source language models. They’re not as flashy as GPT-3 or GPT-4, but they’re solid performers. GPT-Neo comes in different sizes (125M, 1.3B, 2.7B), so you can pick one that fits your M1’s memory.

GPT-J, on the other hand, is a bit beefier at 6B parameters, but with quantization, it’s totally runnable. I’ve used GPT-J for creative writing projects, and it’s surprisingly good at generating coherent stories. Just make sure you’ve got some coffee handy because it can take a minute to generate responses.

7- GPT-2 (124M, 345M)

GPT-2 is the grandpa of modern LLMs, but don’t count it out just yet. The smaller versions (124M and 345M) are perfect for the M1. They’re fast, reliable, and great for beginners.

I’ve used GPT-2 for everything from generating poetry experiments to helping me write Python scripts. It’s not as smart as the newer models, but it’s still a lot of fun to play with. Plus, it runs like a dream on the M1 without any fancy optimizations.

8- DistilGPT-2

If GPT-2 is grandpa, then DistilGPT-2 is the spry teenager who inherited all the good genes. It’s a distilled version of GPT-2, meaning it’s smaller and faster but still pretty smart.

I love using DistilGPT-2 for quick tasks like summarizing articles or generating short snippets of text. It’s lightweight enough to run on even the base M1, and it’s a great introduction to LLMs if you’re just starting out.

9- BERT-base, RoBERTa-base, DistilBERT, MobileBERT

Last but not least, let’s talk about the BERT family. These models aren’t language generators like GPT—they’re more like detectives your AI Sherlock, great at understanding context, reasoning and extracting meaning from text. BERT-base and RoBERTa-base are solid choices for tasks like sentiment analysis or question-answering.

DistilBERT and MobileBERT are their leaner cousins, optimized for speed and efficiency. I’ve used MobileBERT for building a medical symptom checker app, and it works like a charm. If you’re working on NLP projects that don’t require full-blown LLMs, these are your go-to models.

Go forth and experiment with these models. Quantization is your secret weapon, and your M1 is more capable than you think. Let’s keep pushing the boundaries of what’s possible with AI—and have fun while we’re at it. Catch you at the next meet-up! 🚀