8 Groundbreaking Open-source AI Tools for Text-to-3D Mesh Creation


I’ve always been fascinated by the power of Artificial Intelligence (AI) to bring ideas to life—particularly in the world of 3D mesh generation. Imagine typing a few words, and an AI model instantly transforms those words into a full-blown three-dimensional object. This technology isn’t just a flashy innovation; it’s reshaping how we approach architecture, simulation, and even game development.

How Does AI-Based 3D Mesh Generation Work?

Modern 3D generative models often rely on large language models (LLMs) or specialized diffusion techniques. In essence:

- Text Parsing: The system reads a text prompt (e.g., “a futuristic flying car”) and decodes its semantic meaning.

- Latent Space Generation: The AI converts this text understanding into a latent 3D structure, essentially a hidden representation capturing the geometry.

- Mesh Construction: The latent structure is mapped to vertices, edges, and faces—resulting in a fully-fledged 3D mesh.

By processing massive datasets of 3D scans, images, or text descriptions, these models learn to synthesize shapes that are both consistent with the prompt and viable for real-world applications.
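To make the "Mesh Construction" step concrete, here is a minimal sketch of what a mesh looks like as data: a list of vertices plus a list of faces that index into it. The tetrahedron, the helper names, and the choice of the Wavefront OBJ text format are my own illustrative assumptions, not the output of any specific model in this list.

```python
from typing import List, Set, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]  # zero-based indices into the vertex list


def tetrahedron() -> Tuple[List[Vertex], List[Face]]:
    """Return a minimal closed mesh: 4 vertices and 4 triangular faces."""
    vertices = [
        (0.0, 0.0, 0.0),
        (1.0, 0.0, 0.0),
        (0.0, 1.0, 0.0),
        (0.0, 0.0, 1.0),
    ]
    # Each face lists its vertices counter-clockwise when seen from outside.
    faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
    return vertices, faces


def unique_edges(faces: List[Face]) -> Set[Tuple[int, int]]:
    """Collect the undirected edges implied by the faces."""
    edges = set()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            edges.add((min(u, v), max(u, v)))
    return edges


def to_obj(vertices: List[Vertex], faces: List[Face]) -> str:
    """Serialize the mesh as Wavefront OBJ text (OBJ face indices are 1-based)."""
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    verts, tris = tetrahedron()
    print(to_obj(verts, tris))
```

Edges are not stored separately here; they follow from the faces, which is how most mesh formats handle them.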

Why Generate 3D Meshes with AI?

- Speed & Efficiency: Instead of manually sculpting polygons, I can type a description and get a first mesh in seconds to minutes, depending on the model and the hardware.

- Reduced Cost: No need for expensive photogrammetry rigs or lengthy modeling sessions.

- Consistency: AI-driven pipelines reduce human errors and maintain a uniform style across multiple assets (especially beneficial for games and simulations).

- Endless Creativity: Brainstorm different architectural layouts or character designs without major manual interventions.

From my perspective, these technologies offer tremendous benefits across several industries:

- Game Design: Developers can prototype characters, environments, and props quickly.

- 3D Simulation: Rapidly creating realistic 3D objects for virtual tests in robotics or engineering.

- Education: Students can learn geometric principles by iteratively generating and analyzing 3D objects.

- 3D Printing: AI-generated meshes can be sent to a 3D printer for tangible prototypes, once they have been checked for holes and other defects.

- Architecture: Architects can experiment with unique forms and structures at the conceptual stage.

- Industrial Design: Product prototypes can be drafted in minutes, offering immediate visual feedback.
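For the 3D printing case in particular, a generated mesh usually needs to be a closed surface before a slicer will accept it. A minimal sketch of one common sanity check, assuming a triangle mesh given as index triples: every edge should be shared by exactly two faces. The function names and the sample data are hypothetical, and a real pipeline would check more than this (self-intersections, flipped normals, wall thickness).

```python
from collections import Counter
from typing import Iterable, Tuple

Face = Tuple[int, int, int]


def edge_counts(faces: Iterable[Face]) -> Counter:
    """Count how many faces use each undirected edge."""
    counts = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            counts[(min(u, v), max(u, v))] += 1
    return counts


def is_watertight(faces: Iterable[Face]) -> bool:
    """True if the mesh is non-empty and every edge belongs to exactly two faces."""
    counts = edge_counts(faces)
    return bool(counts) and all(n == 2 for n in counts.values())


# A closed tetrahedron, and the same shape with one face missing (a hole).
closed_tetra = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
open_tetra = closed_tetra[:-1]

if __name__ == "__main__":
    print("closed:", is_watertight(closed_tetra))
    print("open:", is_watertight(open_tetra))
```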

Best Open-Source Tools to Make 3D Meshes

Below is a list of noteworthy open-source projects, in the order I examined them. The write-ups vary in depth and style from one project to the next.

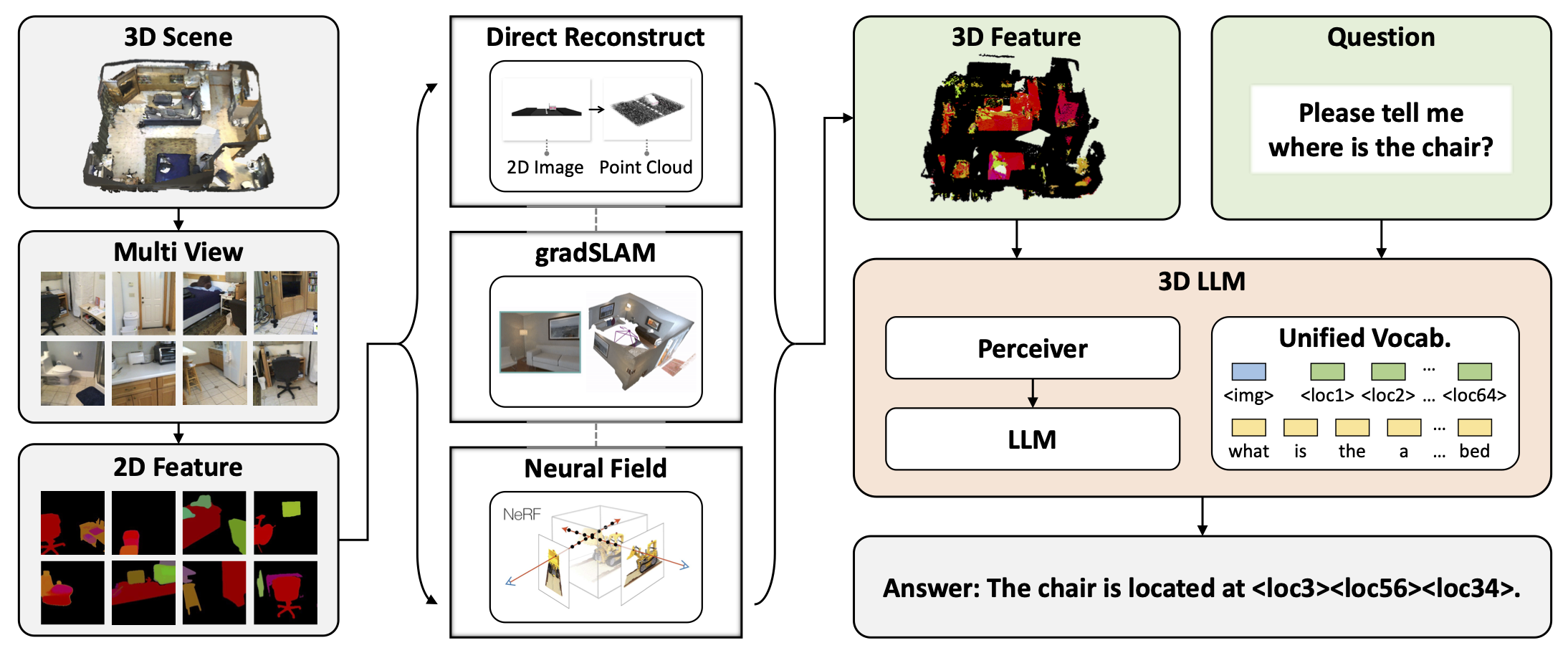

1- 3D LLM

I see this as a framework merging natural language inputs with robust 3D generation. It’s intriguing because it explores how large language models can facilitate the transition from text to a structured 3D representation.

3D LLM, from the UMass Foundation Model group, is an open-source project that combines natural language understanding with 3D geometry. Built on large language models, it connects text prompts with three-dimensional representations, which can speed up prototyping for design, gaming, or education and lower the barrier to 3D work.

The difference: its main selling point is that the LLM is adapted specifically for 3D tasks rather than reused as a generic language model.

2- Tencent Hunyuan3D

Tencent’s Hunyuan3D initiative presents a versatile text-to-3D generation platform, blending high-performance computing with user-friendly design. By harnessing Tencent’s expansive infrastructure and AI research, it enables developers to produce lifelike 3D assets more efficiently.

This model is arguably one of the strongest entries in the competition between Chinese AI models and those from the rest of the world.

The newer Hunyuan3D model speeds up generation significantly through optimized parallelization and GPU acceleration, allowing greater throughput without compromising quality.

What makes it different is its ability to handle large-scale, complex datasets while maintaining a streamlined workflow—perfect for rapid prototyping in gaming, animation, or industrial applications.

3- LLaMA-Mesh: Unifying 3D Mesh Generation with Language Models

LLaMA-Mesh harnesses the power of LLaMA, a well-known language model, to directly generate 3D meshes from text prompts in a streamlined pipeline. By merging linguistic understanding with geometry creation, it cuts out the need for multiple specialized AI modules, making the process more efficient.

Because it can handle a wide variety of prompts—ranging from abstract objects to realistic scenes—it’s quickly gaining traction among developers, researchers, and 3D enthusiasts. Its robust performance and user-friendliness are key reasons people find it so appealing.
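When a language model generates a mesh directly, its output is text that still has to be turned back into geometry. Here is a minimal sketch of that step, assuming the model emits Wavefront OBJ-style lines (`v x y z` and `f i j k`) mixed with ordinary chat text. The sample response and the `parse_obj` helper are hypothetical and are not taken from the LLaMA-Mesh code.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]


def parse_obj(text: str) -> Tuple[List[Vertex], List[Face]]:
    """Extract vertices and triangles from OBJ-style text, skipping other lines."""
    vertices: List[Vertex] = []
    faces: List[Face] = []
    for raw in text.splitlines():
        parts = raw.split()
        if len(parts) < 4:
            continue
        if parts[0] == "v":
            x, y, z = (float(p) for p in parts[1:4])
            vertices.append((x, y, z))
        elif parts[0] == "f":
            # OBJ indices are 1-based and may look like "3/1/2"; keep the vertex part.
            idx = [int(p.split("/")[0]) - 1 for p in parts[1:]]
            # Split polygons with more than three corners into a triangle fan.
            for i in range(1, len(idx) - 1):
                faces.append((idx[0], idx[i], idx[i + 1]))
    # Reject faces that point at vertices the text never defined
    # (OBJ's negative relative indices are not supported in this sketch).
    for face in faces:
        if any(i < 0 or i >= len(vertices) for i in face):
            raise ValueError(f"face {face} references a missing vertex")
    return vertices, faces


# Hypothetical model response: a square pyramid with integer coordinates.
sample_response = """Here is a simple pyramid:
v 0 0 0
v 10 0 0
v 10 10 0
v 0 10 0
v 5 5 8
f 1 2 5
f 2 3 5
f 3 4 5
f 4 1 5
f 1 4 3 2
"""

if __name__ == "__main__":
    verts, tris = parse_obj(sample_response)
    print(len(verts), "vertices,", len(tris), "triangles")
```

The index check matters more here than with hand-made files, since a language model can produce a face that refers to a vertex it never wrote.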

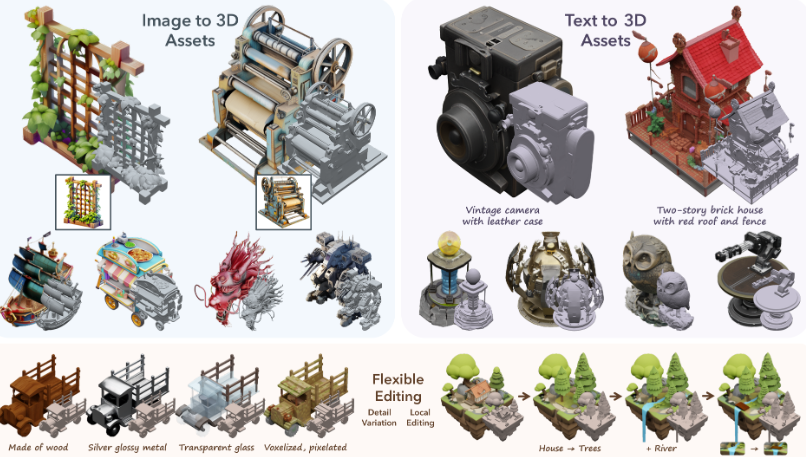

4- Structured 3D Latents for Scalable and Versatile 3D Generation

TRELLIS is a Microsoft-backed project built around “Structured 3D Latents”: it organizes the hidden representations of 3D data efficiently, allowing creators to quickly generate and modify complex shapes. Because it’s designed for scalability, it handles large, diverse projects, from architectural previews to game-ready assets.

I even saw a team during the Global Game Jam successfully integrate TRELLIS into their workflow, proving its accessibility and real-world value. Whether you’re prototyping or tackling full-scale production, TRELLIS stands out as a reliable and flexible 3D generation tool.

It also supports image-to-3D, converting images into 3D assets.

5- Stable-Dreamfusion

Stable-Dreamfusion merges the power of Stable Diffusion with Google Research’s DreamFusion principles, transforming simple text inputs into neural radiance fields that can be converted into 3D meshes. This fusion of two cutting-edge approaches cleverly bridges the gap between 2D image generation and full-fledged 3D content creation.

I tested it myself and found it genuinely exciting for creating quick 3D prototypes. However, be aware that it demands fairly strong hardware to run smoothly—high-end GPUs and plenty of VRAM are usually necessary for optimal results.

Stable-Dreamfusion supports both text-to-3D mesh and image-to-3D mesh out of the box.
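The step from a radiance field to a mesh is worth a small illustration. A field assigns a density to every point in space, and a surface is extracted where that density crosses a threshold. Tools in this space commonly use marching cubes for that; the sketch below uses a simpler, blockier stand-in (threshold a voxel grid, then keep the voxel faces that border empty space). The sphere density function is a made-up placeholder for a trained field, and none of this is Stable-Dreamfusion's own code.

```python
from typing import Callable, List, Set, Tuple

Voxel = Tuple[int, int, int]

# The six axis-aligned directions in which a voxel can touch a neighbour.
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def sphere_density(x: float, y: float, z: float, radius: float = 0.8) -> float:
    """Placeholder for a learned density field: positive inside a sphere."""
    return radius * radius - (x * x + y * y + z * z)


def occupancy_grid(
    n: int,
    density: Callable[[float, float, float], float] = sphere_density,
    threshold: float = 0.0,
) -> Set[Voxel]:
    """Sample the field at the voxel centres of an n^3 grid covering [-1, 1]^3."""
    step = 2.0 / n
    occupied = set()
    for i in range(n):
        for j in range(n):
            for k in range(n):
                x = -1.0 + (i + 0.5) * step
                y = -1.0 + (j + 0.5) * step
                z = -1.0 + (k + 0.5) * step
                if density(x, y, z) > threshold:
                    occupied.add((i, j, k))
    return occupied


def boundary_faces(occupied: Set[Voxel]) -> List[Tuple[Voxel, Voxel]]:
    """Return (voxel, direction) pairs where an occupied voxel borders empty space."""
    faces = []
    for i, j, k in occupied:
        for dx, dy, dz in NEIGHBOURS:
            if (i + dx, j + dy, k + dz) not in occupied:
                faces.append(((i, j, k), (dx, dy, dz)))
    return faces


if __name__ == "__main__":
    grid = occupancy_grid(8)
    surface = boundary_faces(grid)
    print(len(grid), "occupied voxels,", len(surface), "surface quads")
```

Each returned pair corresponds to one square face of the surface. Marching cubes produces a smoother result from the same kind of grid by interpolating where the threshold is crossed.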

6- GALA3D: Towards Text-to-3D Complex Scene Generation via Layout-guided Generative Gaussian Splatting

GALA3D is one of those projects I find especially exciting because it goes beyond creating just a single 3D object—it lets me define an entire scene layout. By using Generative Gaussian Splatting, it can fill in multiple elements based on my text inputs, maintaining a cohesive look and feel.

This approach is a big step forward in text-to-3D generation, letting us explore complex, multi-object environments without juggling multiple separate tools.
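To show what a scene layout can mean in practice, here is a minimal sketch that treats a layout as a list of named, axis-aligned boxes and flags the pairs that overlap. The `Box` type, the object names, and the coordinates are hypothetical; I am assuming a bounding-box layout for illustration, not reproducing GALA3D's internal format.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Box:
    """An axis-aligned box: where one object should sit in the scene."""
    name: str
    center: Tuple[float, float, float]
    size: Tuple[float, float, float]  # full extents along x, y, z

    def bounds(self) -> Tuple[Tuple[float, float], ...]:
        """Return (low, high) per axis."""
        return tuple((c - s / 2, c + s / 2) for c, s in zip(self.center, self.size))


def overlaps(a: Box, b: Box) -> bool:
    """True if the boxes share volume; boxes that only touch do not count."""
    return all(
        lo_a < hi_b and lo_b < hi_a
        for (lo_a, hi_a), (lo_b, hi_b) in zip(a.bounds(), b.bounds())
    )


def colliding_pairs(layout: List[Box]) -> List[Tuple[str, str]]:
    """List every pair of objects whose boxes intersect."""
    pairs = []
    for i, a in enumerate(layout):
        for b in layout[i + 1:]:
            if overlaps(a, b):
                pairs.append((a.name, b.name))
    return pairs


# A toy layout: the lamp rests on the table, the chair is pushed into it.
layout = [
    Box("table", (0.0, 0.0, 0.5), (2.0, 1.0, 1.0)),
    Box("lamp", (0.0, 0.0, 1.25), (0.5, 0.5, 0.5)),
    Box("chair", (1.0, 0.0, 0.5), (1.0, 1.0, 1.0)),
]

if __name__ == "__main__":
    print(colliding_pairs(layout))
```

A layout like this gives each object its own region to be generated in, and a check like `colliding_pairs` is one simple way to catch placements that would make objects intersect.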

7- ChatPose: Chatting about 3D Human Pose

ChatPose is all about interactive human pose control instead of static meshes. By talking with the system, you can manipulate and understand 3D human poses. Its focus on posture and motion makes it a good fit for animation and motion capture tasks.

8- MeshXL

I’m really impressed by MeshXL. It bundles a range of powerful generative pre-trained models for large-scale 3D mesh creation, striking a great balance between flexibility and efficiency.

At its core is the Neural Coordinate Field (NeurCF), an explicit coordinate representation enhanced by neural embeddings, which has proven straightforward yet effective for large-scale sequential mesh modeling.

By leveraging modern LLM techniques, MeshXL handles unstructured 3D data with ease, making it a good fit for integrating into my existing pipelines and projects. It’s robust, customizable, and well suited to complex datasets.

Concluding Thoughts

AI-based 3D generation stands at a crossroads of innovation and practical application. For me, the most exciting part is the prospect of democratizing 3D creation. Instead of requiring specialized 3D modeling expertise, more people can now shape their ideas into tangible digital or even physical (through 3D printing) forms.

Whether it’s designing a brand-new game world, simulating a high-risk industrial scenario, or teaching students the fundamentals of geometry—these open-source projects are blazing the trail to a future where text-driven 3D modeling is as common and intuitive as writing an email.

Citations & Further Reading

- 3D LLM (UMass Foundation Model)

- Tencent Hunyuan3D, GitHub Hunyuan3D-2, GitHub Hunyuan3D-1

- LLaMA-Mesh: Unifying 3D Mesh Generation with Language Models

- Structured 3D Latents (TRELLIS) by Microsoft

- Stable-Dreamfusion

- GALA3D: Towards Text-to-3D Complex Scene Generation

- ChatPose: Chatting about 3D Human Pose

- MeshXL