The Dangers of Self-Diagnosis Using AI Chatbots: A Doctor’s Perspective


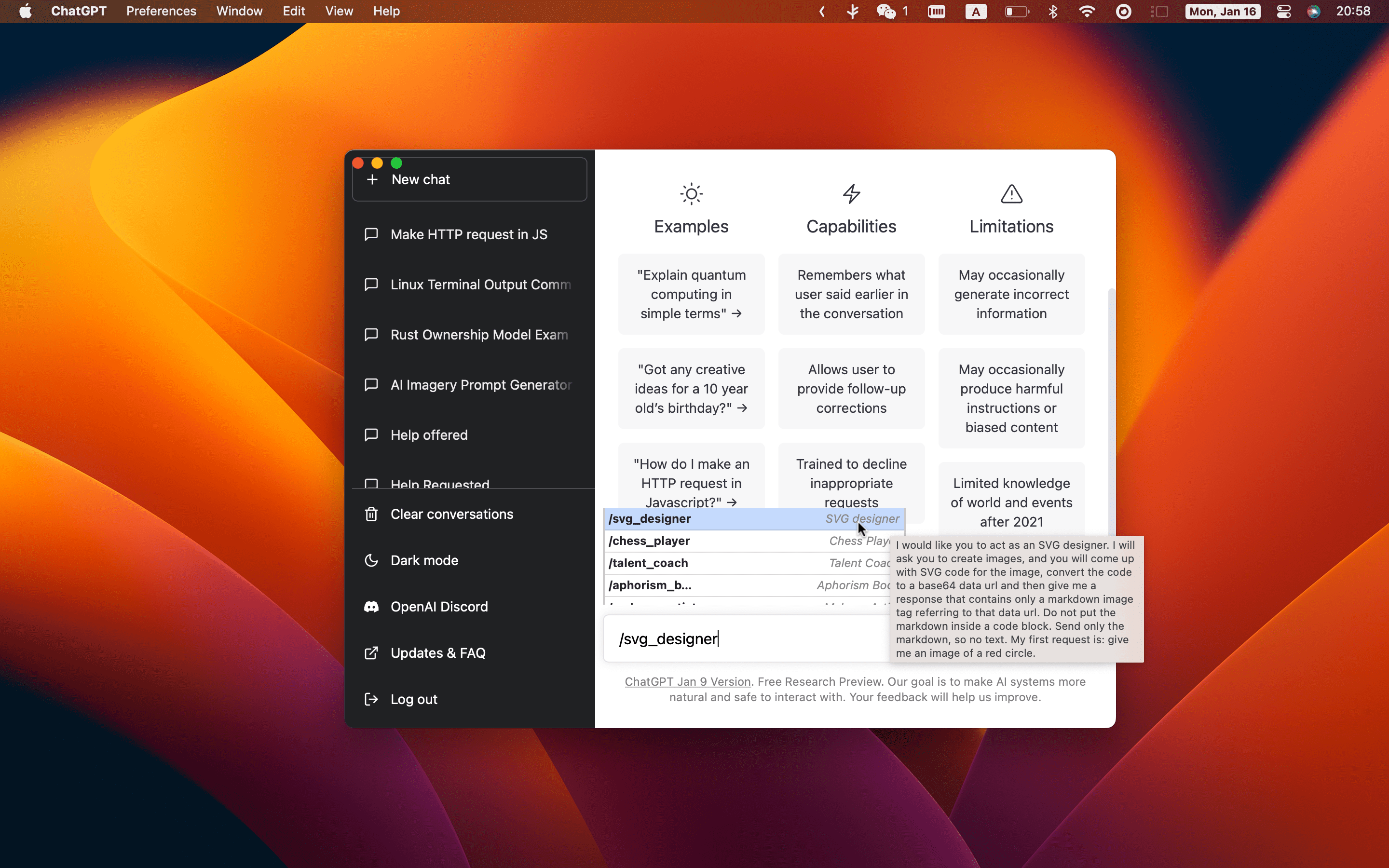

As a physician and software developer, I've recently observed a concerning trend: an increasing number of patients are visiting my clinic armed with information gathered from AI chatbots and services like ChatGPT, Microsoft Copilot, and Google Gemini.

Even more worrying is that many are attempting to self-diagnose using these tools.

While AI has undoubtedly made remarkable advancements and holds vast potential in healthcare, relying on AI chatbots for self-diagnosis can lead to serious consequences.

Here's why.

1. AI Tools Aren’t Substitutes for Medical Expertise

AI chatbots and tools, trained on vast datasets, can generate seemingly accurate medical information.

However, they lack the nuance, clinical judgment, and contextual understanding that come from years of medical training.

Medicine isn't merely about recognizing symptoms; it's about comprehending the intricate interplay between a patient's medical history, current health, environment, and mental state.

While a chatbot may provide a list of possible diagnoses based on symptoms, it cannot conduct physical examinations, observe non-verbal cues, or account for underlying conditions.

This limitation can sometimes lead to misdiagnosis, inappropriate treatments, or unwarranted anxiety.

2. Misinformation and Overgeneralization Risks

AI chatbots like ChatGPT draw on general information rather than on the specifics of your situation. While this can be useful for basic knowledge, it can lead to overgeneralizations or misinterpretations.

For instance, someone searching for information about a headache might receive a range of possible causes, from common tension headaches to rare brain tumors.

In practice, I've seen patients who were convinced they had severe or rare conditions after consulting search engines, only to discover their symptoms were due to much less serious causes.

This misguided self-diagnosis creates unnecessary stress and anxiety, which can actually worsen health outcomes.

3. Inadequate Personalization

AI tools lack access to a patient's complete medical history unless they are integrated into a medical records system. Even with such integration, they may struggle to account for the complex factors involved in diagnosis and treatment.

For instance, AI services might suggest treatments based solely on symptoms, overlooking critical factors such as allergies, underlying conditions, or potential drug interactions. This absence of personalized care can result in serious health risks.

4. Inappropriate Self-Treatment and Delayed Professional Care

One of the gravest risks of self-diagnosing through AI chatbots is the potential for inappropriate treatments. Patients might take over-the-counter medications based on an AI's suggestion, unaware that these treatments could interact dangerously with their current medications or exacerbate an existing condition.

Even more concerning is the tendency for patients to delay seeking professional medical advice.

Convinced they've accurately diagnosed themselves, they might postpone seeing a doctor until the problem has significantly worsened.

This delay can lead to the progression of serious conditions that could have been managed effectively with early intervention.

5. The Liability Gap in AI-Assisted Medical Advice

There's a crucial issue many patients overlook: AI-generated medical advice lacks accountability. If an AI chatbot provides incorrect or misleading information, there is typically no one who can be held responsible for the consequences.

As a doctor, I'm accountable for my diagnoses and treatment plans. AI tools, however, offer neither this level of responsibility nor the necessary follow-up care.

6. Medical Advice Without Ethical Oversight

Medicine isn't solely about facts and diagnoses—it's also about ethical considerations, which AI lacks.

Every decision a healthcare provider makes carries the responsibility to consider the patient's emotional well-being, social circumstances, and long-term implications.

AI chatbots lack the capacity for empathy or ethical reasoning, potentially rendering their advice cold, impersonal, and even harmful.

7. Challenges in Accurately Conveying Symptoms

Even for tech-savvy individuals skilled in writing prompts—such as prompt engineers—accurately describing medical symptoms to an AI chatbot is challenging.

In medicine, subtle nuances in symptom descriptions can be the difference between diagnosing a minor condition and diagnosing something more serious.

The language we use to describe our symptoms is often subjective; what a patient might consider mild discomfort could be a red flag to a healthcare provider.

For example, describing pain as "sharp" or "dull," or explaining how it changes with movement, may seem straightforward.

However, interpreting these descriptions correctly requires medical context. AI chatbots rely heavily on a patient's exact wording, so if symptoms are poorly described or misunderstood by the AI, the resulting diagnosis could be inaccurate or misleading.

As a doctor, I've encountered cases where patients—despite their skill in crafting detailed and complex prompts—struggled to articulate their symptoms in a way that led to an accurate AI-generated diagnosis.

This highlights a fundamental limitation: prompt engineers may excel at writing effective instructions for AI systems, but the human body is too complex and nuanced for even well-crafted descriptions to capture the complete picture.

Risks of Self-Diagnosis Using AI Chatbots

- Incorrect Diagnosis: AI may misinterpret symptoms, leading to wrong health conclusions.

- Delayed Doctor Visits: Relying on AI advice might postpone necessary medical care.

- Unsafe Treatment Choices: AI suggestions may conflict with current medications or conditions.

- Unnecessary Worry: AI might list serious conditions, causing undue stress.

- Overreliance on Technology: Users may trust AI more than trained medical professionals.

- No Responsibility: There's no accountability if AI gives wrong advice.

- Difficulty Describing Symptoms: Users may struggle to accurately convey their health issues to AI.

- Lack of Physical Examination: AI can't perform hands-on checks crucial for accurate diagnosis.

- Privacy Concerns: Sharing health data with AI platforms may put your personal information at risk.

What Should Patients Do Instead?

As a doctor, I encourage patients to view AI tools as supplements to—not replacements for—professional medical advice. Here are some tips for using these tools responsibly:

- Gather Information, Don't Diagnose: AI chatbots can be valuable for gathering general health information. However, always consult a healthcare professional to interpret that information within the context of your health.

- Ask Questions, Avoid Assumptions: It's fine to use AI tools to learn more about symptoms or conditions, but don't assume their answers give you the complete picture. Bring your questions to your healthcare provider, who can interpret them in light of your situation.

- Prioritize Professional Care: If you have concerning symptoms, don't hesitate. Seek medical attention promptly. It's far better to obtain an accurate diagnosis and peace of mind than to rely on uncertain AI advice.

- Embrace Your Uniqueness: No AI tool can fully grasp your unique medical history, lifestyle, and other health factors. Trust your doctor to provide personalized care based on your comprehensive health profile.

Conclusion: AI in Healthcare—A Powerful Tool, Not a Solution

AI chatbots like ChatGPT, Microsoft Copilot, and Google Gemini offer promising advancements in healthcare, but they're no substitute for human doctors.

Medicine demands context, empathy, and a profound understanding of the human body—qualities that AI, in its current form, simply can't replicate.

As a physician, I implore you to use AI responsibly. Consider it a supplementary information source, not a diagnostic tool.

When it comes to your health, nothing surpasses the expertise, empathy, and accountability of a healthcare professional.