Exploring Try-on Tech: Our Journey with AR, WebXR, and the 7 Best Open-Source Try-on Projects

As my team and I dive into an exciting AR-based virtual try-on demo project for a client, I’ve been reflecting on how rapidly this technology is evolving and on the open-source communities driving that innovation.

It's fascinating to see how AR, AI, and machine learning come together to offer an immersive and interactive shopping experience, especially when it comes to virtual clothing try-ons.

In this post, I’m going to talk about the tech behind Try-on technology, the challenges we’re encountering, and some of the open-source projects that have caught our attention during our development process.

What is Try-on Technology?

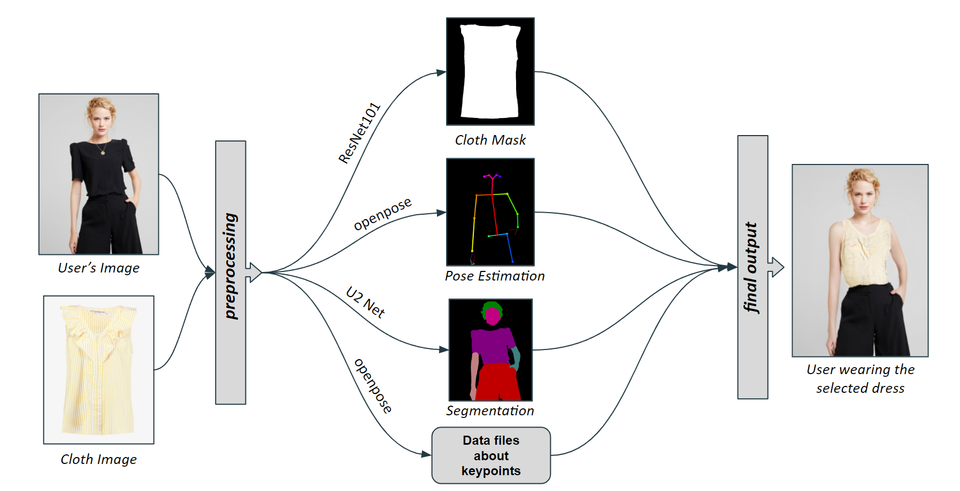

Try-on technology lets users virtually "try on" products (clothes, shoes, glasses, and so on) via augmented reality (AR). Using AI and machine learning, it detects the user's body, pose, and movement, and places virtual products on their image or avatar. At its core, this technology enables a more engaging and personalized online shopping experience, which we are now bringing to life with our project.

I should mention, though, that this isn’t an easy task. Even though tools like WebXR are advancing, building AR into a seamless try-on experience takes a lot of engineering work and real-time computation. Our project focuses on WebXR, which is well suited to immersive web-based AR experiences. The goal is to let users try on clothes directly in a web browser, without needing any special app or headset.

Pose Detection: The Backbone of Try-On AR

For the AR to work correctly, we need to understand and track the user’s body, which is where pose detection comes in. Pose detection involves identifying key body points like the position of the head, arms, and legs, allowing us to place virtual clothing accurately on the user.

We’re using a combination of MediaPipe and TensorFlow to implement real-time pose detection in our demo. MediaPipe has been incredibly helpful, especially with its cross-platform capabilities and the ability to detect body and face landmarks in real time.

TensorFlow, with its powerful machine learning models, helps refine and optimize the entire process, ensuring that the virtual clothing fits and adjusts dynamically as the user moves.
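To make the idea of placing clothing from body keypoints more concrete, here is a minimal sketch in plain Python. It is not our production code. It assumes landmarks arrive as normalized (x, y) pairs in the order of MediaPipe Pose's 33-point model, where indices 11 and 12 are the left and right shoulders and 23 and 24 are the left and right hips. The names `place_garment`, `GarmentPlacement`, and `width_padding` are made up for this illustration.

```python
import math
from typing import NamedTuple, Sequence, Tuple

# Landmark indices from MediaPipe Pose's 33-point model.
LEFT_SHOULDER, RIGHT_SHOULDER = 11, 12
LEFT_HIP, RIGHT_HIP = 23, 24

Point = Tuple[float, float]  # normalized (x, y) in [0, 1], origin at top-left


class GarmentPlacement(NamedTuple):
    """Hypothetical container describing where to draw a 2D garment overlay."""
    center: Point      # torso centre in pixels
    width: float       # overlay width in pixels
    height: float      # overlay height in pixels
    angle_deg: float   # tilt of the shoulder line in degrees


def place_garment(landmarks: Sequence[Point], frame_w: int, frame_h: int,
                  width_padding: float = 1.4) -> GarmentPlacement:
    """Estimate position, size, and tilt of a shirt overlay from four landmarks.

    width_padding is an assumed fudge factor: a shirt is wider than the
    distance between the shoulder joints.
    """
    def to_px(index: int) -> Point:
        x, y = landmarks[index]
        return x * frame_w, y * frame_h

    ls, rs = to_px(LEFT_SHOULDER), to_px(RIGHT_SHOULDER)
    lh, rh = to_px(LEFT_HIP), to_px(RIGHT_HIP)

    shoulder_mid = ((ls[0] + rs[0]) / 2, (ls[1] + rs[1]) / 2)
    hip_mid = ((lh[0] + rh[0]) / 2, (lh[1] + rh[1]) / 2)
    center = ((shoulder_mid[0] + hip_mid[0]) / 2,
              (shoulder_mid[1] + hip_mid[1]) / 2)

    shoulder_width = math.dist(ls, rs)
    torso_height = math.dist(shoulder_mid, hip_mid)

    # In a non-mirrored image the person's left shoulder has the larger x,
    # so an upright pose gives an angle of 0 degrees.
    angle = math.degrees(math.atan2(ls[1] - rs[1], ls[0] - rs[0]))

    return GarmentPlacement(center, shoulder_width * width_padding,
                            torso_height, angle)
```

A renderer could then scale and rotate a garment image to match the returned placement. A real 3D try-on needs far more than this (depth, cloth simulation, occlusion handling), so treat it only as a starting point for a flat overlay.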

Challenges We’re Facing

Like any innovative project, there are plenty of hurdles along the way. One major challenge is the accuracy of pose detection. When tracking a person’s body in motion, there can be occlusions, varying lighting conditions, and body types that make it hard to get precise results every time.

While tools like OpenPose have made significant strides, achieving flawless accuracy in different environments is still a work in progress.
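One common way to soften jitter and brief occlusions is to smooth landmarks over time. The sketch below shows that general technique (an exponential moving average that holds the last position when a landmark's visibility score drops), not necessarily what our demo ships. It assumes each landmark comes as an (x, y, visibility) tuple, similar to the visibility score MediaPipe Pose reports. The class name `LandmarkSmoother` and the default thresholds are illustrative assumptions.

```python
from typing import List, Optional, Sequence, Tuple

Landmark = Tuple[float, float, float]  # (x, y, visibility), assumed layout


class LandmarkSmoother:
    """Hypothetical helper: exponential moving average with occlusion hold."""

    def __init__(self, alpha: float = 0.5, min_visibility: float = 0.5):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha                    # higher = more responsive, less smooth
        self.min_visibility = min_visibility  # below this, treat as occluded
        self._state: Optional[List[Tuple[float, float]]] = None

    def update(self, landmarks: Sequence[Landmark]) -> List[Tuple[float, float]]:
        # The first frame is accepted as-is, even if visibility is low.
        if self._state is None:
            self._state = [(x, y) for x, y, _ in landmarks]
            return list(self._state)

        smoothed: List[Tuple[float, float]] = []
        for (prev_x, prev_y), (x, y, visibility) in zip(self._state, landmarks):
            if visibility < self.min_visibility:
                # Likely occluded: keep the last trusted position.
                smoothed.append((prev_x, prev_y))
            else:
                a = self.alpha
                smoothed.append((a * x + (1 - a) * prev_x,
                                 a * y + (1 - a) * prev_y))
        self._state = smoothed
        return list(smoothed)
```

Calling `update` once per video frame returns the smoothed positions. A lower `alpha` reduces jitter at the cost of lag, which matters when the garment has to follow fast movement.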

Another challenge we’re encountering is the real-time video editing required to ensure that our virtual clothing fits the body naturally as it moves. This involves not only detecting the pose but also rendering 3D clothing accurately on the body in video streams.

Our team is experimenting with 3D video editing techniques to smooth out this process, but it's a complex task that demands high computational resources.

Additionally, ensuring that everything works seamlessly across various devices, browsers, and operating systems is crucial for the user experience. With the rise of WebXR, we're also looking at ways to integrate ARCore (for Android) and ARKit (for iOS) to support mobile devices effectively, but it's a bit tricky due to the varying specifications of these platforms.

We have also had good results in Unity, using AR Foundation and Unity MARS.

Open-Source Projects Making a Difference

There’s a great open-source community working on virtual try-on technology, and we’ve found some fantastic projects that are helping shape our approach. Here are a few notable ones we’ve been looking into:

- Clothes Virtual Try-On – This project caught our attention because it focuses on virtual clothing try-ons with deep learning techniques.

- Cloth Try-On with Style Transfer – Style transfer is an interesting method used in this project to seamlessly blend virtual clothing with the user’s image.

- Awesome Virtual Try-On – A curated list of virtual try-on projects, which has been super helpful for inspiration and understanding various approaches.

- Virtual Cloth TryOn – This repo focuses on creating virtual try-on systems using deep learning models for accurate clothing fitting.

- TryOn Adapter – A tool that aims to improve the efficiency of clothing try-on using machine learning and AI techniques.

- Virtual Clothes TryOn – This is another great project focusing on virtual fitting rooms and providing a good starting point for those looking to develop AR-based clothing try-ons.

- Cmate Virtual Tryon – A project that integrates AR with virtual try-on technology, useful for our current demo as well.

These projects are not only inspiring but also practical in terms of providing codebases that we can tweak and experiment with.

Open-source projects like these allow us to cut down on development time and focus on improving the unique aspects of our project.

Where We Are Going with This

We’re still in the early stages of the AR try-on demo project, but we’re making steady progress. The integration of 3D video editing, WebXR, and pose detection is coming together nicely, but there’s still a lot of work ahead in terms of polishing the user experience and refining the models.

We’re excited to see where this journey takes us, and we’re hopeful that this demo will set the stage for more interactive, personalized online shopping experiences in the future. It’s a blend of cutting-edge technology and creativity, and being part of this development has been both challenging and rewarding.

As always, there’s a lot more to explore, and we’re just scratching the surface. Stay tuned for updates on our project!