Web LLM: Run Large Language Models Directly in Your Browser with GPU Acceleration


No servers. No clouds. Just your browser and your GPU. That's what Web LLM brings to the table. Imagine chatting with a large language model (LLM) directly in your browser without depending on any backend server.

Sounds like sci-fi? It's not. Web LLM by MLC AI is making this a reality.

What is Web LLM?

Web LLM is an open-source project that allows you to run large language models in the browser using WebGPU for hardware acceleration.

This means the computation is done on your local GPU, keeping everything fast, efficient, and most importantly—private.

Why Is This a Big Deal?

- No Server Required: Traditional LLM applications depend on server infrastructure, which can be costly and compromise privacy. With Web LLM, everything runs locally in your browser.

- Privacy-Friendly: Since the model runs on your device, your data stays with you. No more worrying about your prompts being logged by third parties.

- Performance Boost with WebGPU: WebGPU support means the LLM runs on your device's GPU, which is typically much faster than CPU-based inference.

- Cross-Platform: If your device has a modern, WebGPU-capable browser, you can use Web LLM, whether you're on Windows, Linux, macOS, or even a high-end Android tablet.

Key Features

- In-Browser Inference: Run high-performance LLMs directly in the browser using WebGPU for hardware acceleration. No server required.

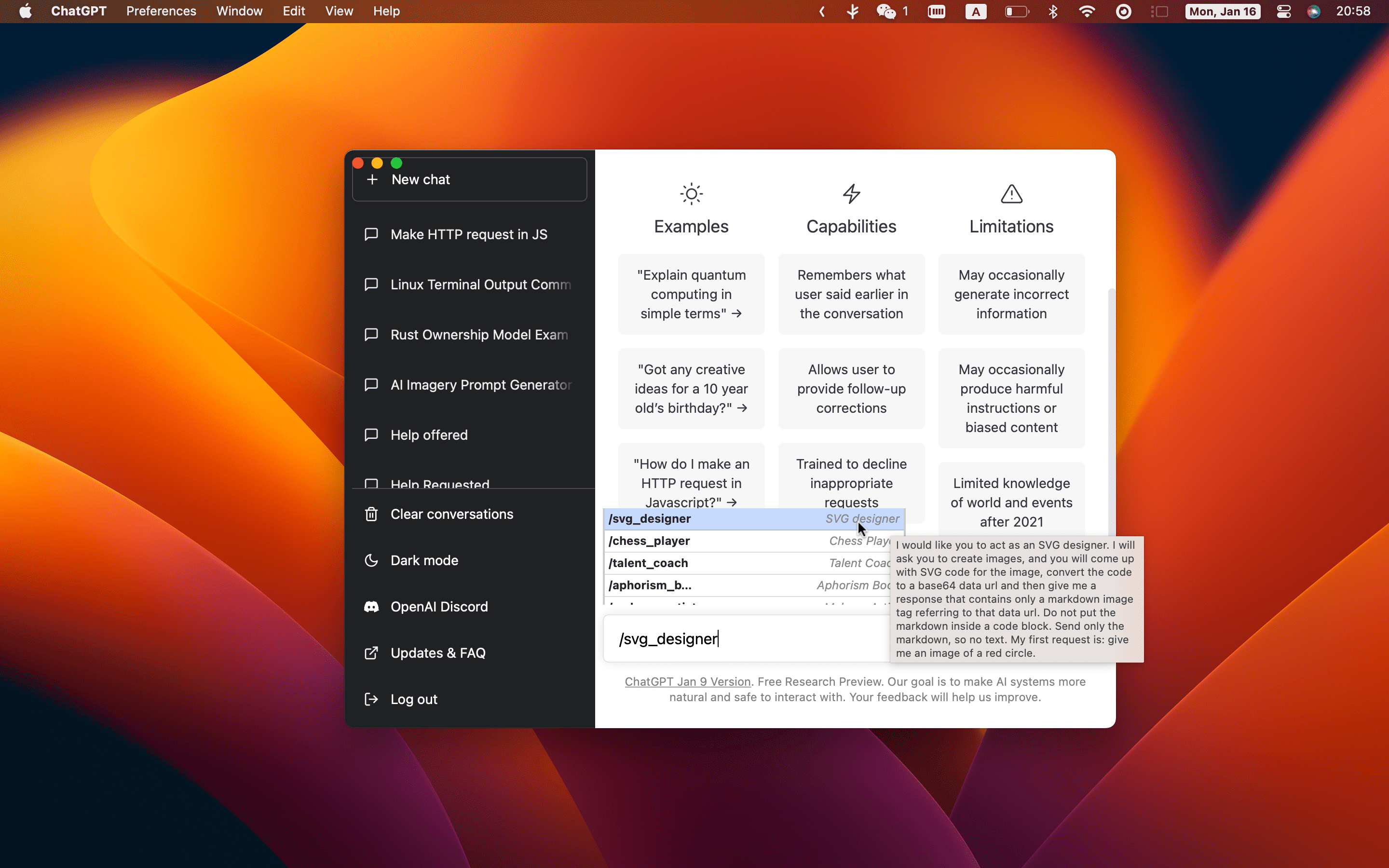

- OpenAI API Compatibility: Talk to the in-browser model through an OpenAI-style API, with features like streaming, JSON mode, seeding, and logit-level control.

- Structured JSON Generation: Generate structured JSON output efficiently using WebAssembly-based processing. Try the WebLLM JSON Playground on HuggingFace.

- Extensive Model Support: Native support for models like Llama 3, Phi 3, Gemma, Mistral, and more. See the full list on MLC Models.

- Custom Model Integration: Deploy your own models in MLC format for tailored AI solutions.

- Plug-and-Play Integration: Easy setup via NPM, Yarn, or CDN with modular UI examples.

- Streaming & Real-Time Output: Supports streaming chat completions for interactive applications.

- Web Worker Support: Offload computations to web or service workers for optimized UI performance.

- Chrome Extension Support: Build powerful Chrome extensions with WebLLM, complete with example projects.
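To make the OpenAI-style interface a bit more concrete, here is a rough sketch that builds a chat request and stitches streamed chunks back together. The field names (`messages`, `stream`, `seed`, `response_format`) follow the OpenAI chat-completions convention that WebLLM mirrors. The helpers `buildChatRequest` and `joinDeltas` are made up for illustration, and the commented-out engine calls assume the `@mlc-ai/web-llm` package is installed.

```typescript
// Minimal types for an OpenAI-style chat request and streamed chunk.
// These are hand-written for this sketch, not imported from WebLLM.
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

interface ChatRequest {
  messages: ChatMessage[];
  stream: boolean;
  seed?: number;
  response_format?: { type: "json_object" };
}

interface StreamChunk {
  choices: { delta: { content?: string | null } }[];
}

// Hypothetical helper: build a request for a single user prompt.
function buildChatRequest(
  prompt: string,
  opts: { stream?: boolean; seed?: number; json?: boolean } = {},
): ChatRequest {
  const request: ChatRequest = {
    messages: [
      { role: "system", content: "You are a helpful assistant." },
      { role: "user", content: prompt },
    ],
    stream: opts.stream ?? false,
  };
  if (opts.seed !== undefined) request.seed = opts.seed; // reproducible sampling
  if (opts.json) request.response_format = { type: "json_object" }; // JSON mode
  return request;
}

// Hypothetical helper: concatenate the text deltas of streamed chunks.
function joinDeltas(chunks: StreamChunk[]): string {
  return chunks.map((c) => c.choices[0]?.delta.content ?? "").join("");
}

// In the browser, usage would look roughly like this (assumes the
// @mlc-ai/web-llm package and a model ID from its prebuilt list):
//
//   import { CreateMLCEngine } from "@mlc-ai/web-llm";
//   const engine = await CreateMLCEngine(modelId);
//   const chunks = await engine.chat.completions.create(
//     buildChatRequest("Hello!", { stream: true }),
//   );
//   for await (const chunk of chunks) {
//     // append chunk.choices[0]?.delta.content to the UI
//   }
```

Keeping the request builder separate from the engine call means the same request object could, in principle, be sent to any OpenAI-compatible endpoint, which is the main appeal of the compatibility layer.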

Use Cases

- Private AI Chat: Use LLMs without sending your conversations to cloud servers. Prompts and responses stay on your device.

- Education: Teach AI concepts with a hands-on browser demo. That's a cool idea, ain't it?

- Offline Access: Need AI capabilities without an internet connection? Once the model has been downloaded, inference itself runs locally, so Web LLM has you covered.

Supported Models - You name it!

WebLLM supports a variety of pre-built models from popular LLM families.

Here's the list of primary models currently available:

- Llama Family
  - Llama 3
  - Llama 2
  - Hermes-2-Pro-Llama-3

- Phi Family
  - Phi 3
  - Phi 2
  - Phi 1.5

- Gemma Family
  - Gemma-2B

- Mistral Family
  - Mistral-7B-v0.3
  - Hermes-2-Pro-Mistral-7B
  - NeuralHermes-2.5-Mistral-7B
  - OpenHermes-2.5-Mistral-7B

- Qwen Family (通义千问)
  - Qwen2 0.5B
  - Qwen2 1.5B
  - Qwen2 7B

Need More Models?

- Request New Models: Open an issue on the WebLLM GitHub repository.

- Custom Models: Follow the Custom Models guide to compile and deploy your own models with WebLLM.

For the full list of available models, check the MLC Models page.
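If you would rather browse the models from code, a small filter like the one below can help. It is only a sketch: it assumes each entry in the list carries a `model_id` string (as far as I know, that is the shape of `prebuiltAppConfig.model_list` in `@mlc-ai/web-llm`, but treat that as an assumption). The helper `modelsInFamily` and the sample IDs are illustrative, not taken from the library.

```typescript
// Shape of one entry in a model list; only the field this sketch needs.
interface ModelRecord {
  model_id: string;
}

// Hypothetical helper: return the IDs whose name contains the given family
// keyword (case-insensitive), e.g. "llama" or "qwen".
function modelsInFamily(list: ModelRecord[], family: string): string[] {
  const needle = family.toLowerCase();
  return list
    .map((m) => m.model_id)
    .filter((id) => id.toLowerCase().includes(needle));
}

// Illustrative IDs only; the real list ships with the library.
const sampleList: ModelRecord[] = [
  { model_id: "Llama-3-8B-Instruct-q4f32_1-MLC" },
  { model_id: "Hermes-2-Pro-Llama-3-8B-q4f16_1-MLC" },
  { model_id: "Qwen2-0.5B-Instruct-q4f16_1-MLC" },
];

// Prints the single Qwen entry from the sample list.
console.log(modelsInFamily(sampleList, "qwen"));
```

Note that a plain substring match puts fine-tunes such as Hermes-2-Pro-Llama-3 under "llama" as well, which matches how the families are grouped above.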

How to Get Started

Head over to the Web LLM GitHub repository, where you'll find installation instructions and demos. Ensure your browser supports WebGPU (recent versions of Chrome and Edge are good bets; support in other browsers such as Firefox and Safari depends on the version and platform).
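Not sure whether your browser qualifies? A quick feature check along these lines can tell you before you download a multi-gigabyte model. This is a minimal sketch built on the standard `navigator.gpu` entry point and its `requestAdapter()` method. The `NavigatorLike` type and both function names are invented here so the check can also be exercised outside a browser.

```typescript
// Minimal structural type so the check can run outside a browser too.
interface NavigatorLike {
  gpu?: { requestAdapter(): Promise<unknown> };
}

// Quick synchronous check: does the browser expose the WebGPU entry point?
function hasWebGPU(nav: NavigatorLike | undefined): boolean {
  return nav?.gpu !== undefined;
}

// Fuller check: the API can exist while no usable GPU adapter is available,
// in which case requestAdapter() resolves to null.
async function canUseWebGPU(nav: NavigatorLike | undefined): Promise<boolean> {
  const gpu = nav?.gpu;
  if (gpu === undefined) return false;
  const adapter = await gpu.requestAdapter();
  return adapter !== null && adapter !== undefined;
}

// In a page you might call it like this:
//
//   const nav = (globalThis as { navigator?: NavigatorLike }).navigator;
//   if (!(await canUseWebGPU(nav))) {
//     // show a "WebGPU not available" message instead of loading a model
//   }
```

The two-step check matters because some browsers expose `navigator.gpu` but still return no adapter, for example when the GPU or driver is blocklisted.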

This is a game-changer for privacy-focused developers, tech enthusiasts, and anyone who loves keeping things local. Give Web LLM a try and experience the future of AI, right in your browser.