When AI Misdiagnoses: Can Health IT Be Held Accountable for Malpractice?

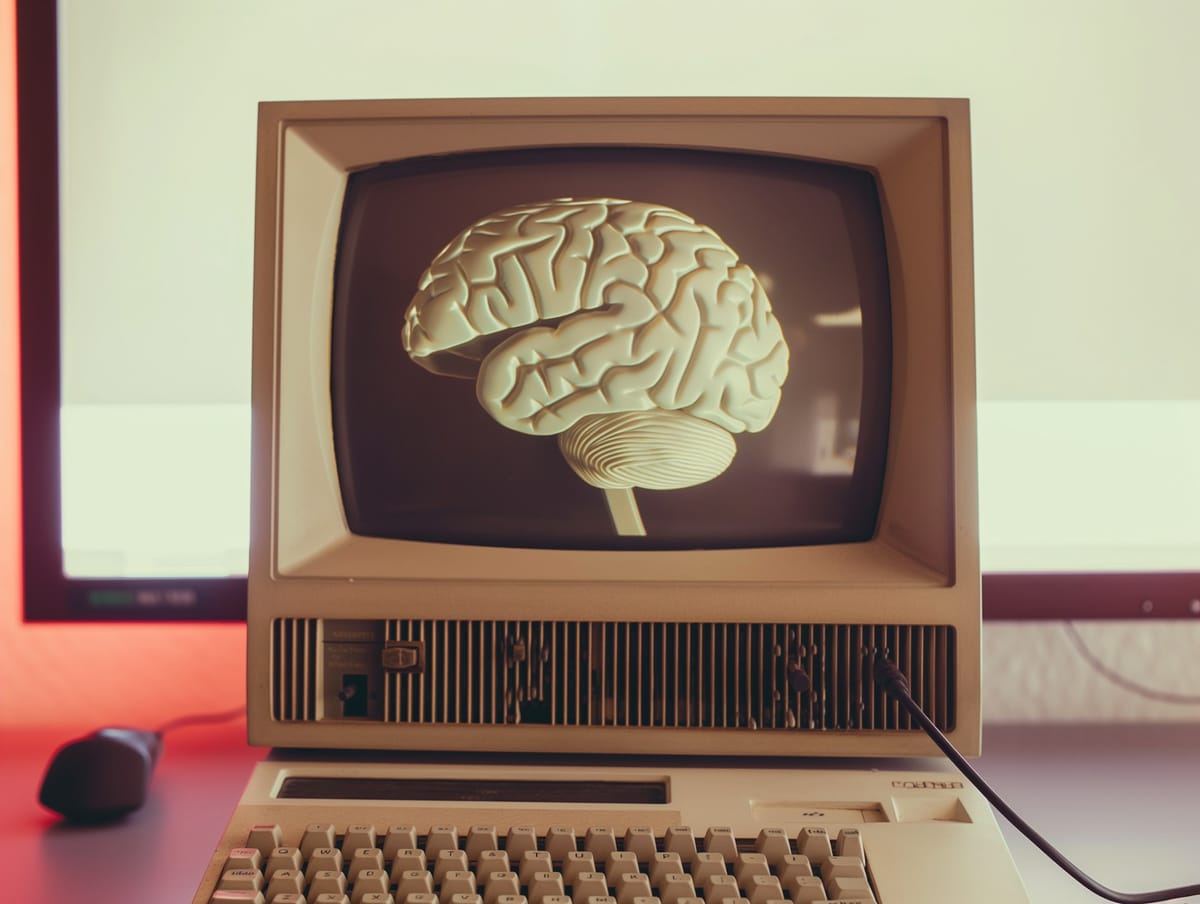

Artificial Intelligence (AI) is transforming healthcare diagnostics by improving accuracy and efficiency. However, AI-driven diagnostics raise serious legal and ethical concerns, especially when misdiagnoses occur or when individuals rely on AI for self-diagnosis.

As someone who bridges medicine, development, and AI use, I will explore the legal implications, potential liabilities, and the challenges of balancing innovation with patient safety.

AI in Medical Diagnostics: A Double-Edged Sword

AI's capacity to analyze vast datasets and recognize patterns has led to its adoption in various diagnostic tools.

For instance, a UCLA study found that an AI tool detected prostate cancer with 84% accuracy, compared with 67% for human doctors. Despite such promising outcomes, AI systems are not infallible.

Errors can arise from programming glitches, outdated data, or inherent biases in training datasets, potentially leading to incorrect diagnoses.
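
To put the two UCLA figures in perspective, the gap can be stated in two ways. The following is a simple worked calculation from the reported percentages only, assuming both rates were measured on comparable cases:

$$
\begin{aligned}
\text{absolute difference} &= 84\% - 67\% = 17 \text{ percentage points} \\
\text{relative improvement} &= \frac{84 - 67}{67} \approx 25\%
\end{aligned}
$$

A headline figure of "17% more accurate" is therefore best read as 17 percentage points; relative to the doctors' baseline, the improvement is closer to one quarter.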

Case Study: The Perils of AI Misdiagnosis

Consider the case of a patient who relied on an AI-powered chatbot for medical advice.

The chatbot, designed to assist with eating disorders, provided harmful guidance, exacerbating the patient's condition.

This incident underscores the potential dangers of unregulated AI applications in healthcare and the dire consequences of erroneous AI-generated advice.

Legal Implications: Navigating Uncharted Waters

The legal landscape surrounding AI-induced misdiagnoses is intricate. Traditional medical malpractice laws hold healthcare professionals accountable for diagnostic errors.

However, when an AI system contributes to a misdiagnosis, determining who is liable becomes far less clear.

Key legal considerations include:

- Product Liability: If the AI system is deemed a medical device, manufacturers could be held liable for defects leading to patient harm.

- Standard of Care: The integration of AI alters the established standard of care. Physicians may face legal scrutiny for either over-reliance on AI or for disregarding AI recommendations, especially if such actions result in patient injury.

- Informed Consent: Patients must be informed about the use of AI in their diagnostic process. Failure to disclose this information could lead to legal challenges, particularly if the AI's involvement is linked to a misdiagnosis.

Self-Diagnosis Using AI: A Growing Concern

The accessibility of AI-driven health chatbots and symptom checkers has empowered individuals to engage in self-diagnosis.

While this can promote health awareness, it also poses significant risks:

- Inaccuracy: Studies have shown that online symptom checkers provide correct diagnoses only about 34% of the time, highlighting the potential for misinformation.

- Delayed Treatment: Reliance on AI for self-diagnosis can lead to delays in seeking professional medical care, resulting in worsened health outcomes.

- Legal Ambiguity: When self-diagnosis leads to harm, attributing legal responsibility is challenging, especially if the AI platform lacks proper disclaimers or operates without regulatory oversight.

Mitigating Risks: A Collaborative Approach

To harness AI's benefits while minimizing risks, a collaborative approach is essential:

- Regulation and Oversight: Implementing stringent regulatory frameworks to govern AI applications in healthcare can help ensure safety and efficacy.

- Education and Training: Equipping healthcare professionals with the knowledge to effectively integrate AI into clinical practice can enhance decision-making and patient care.

- Patient Awareness: Educating patients about the limitations of AI-driven self-diagnosis tools can encourage informed health decisions and prompt consultation with medical professionals when necessary.

Conclusion

AI holds immense potential to transform healthcare, offering tools that can enhance diagnostic accuracy and patient outcomes.

However, the risks associated with AI misdiagnoses and self-diagnosis are significant and multifaceted, encompassing legal, ethical, and clinical dimensions.

As we navigate this evolving landscape, it is imperative to establish clear legal frameworks, promote interdisciplinary collaboration, and prioritize patient safety to ensure that AI serves as an asset rather than a liability in healthcare.

Further Reading

Here is a list of resources referenced in the article:

- AI detects cancer with 17% more accuracy than doctors: UCLA study

- GenderGP clinic has betrayed us with AI rip-off, say trans patients

- AI Misdiagnosis and Legal Implications

- Liability for Incorrect AI Diagnosis

- Machine Vision, Medical AI, and Malpractice

- The Dangers of Digital Self-Diagnosis