BricksLLM: AI Gateway For Putting LLMs In Production, Written in Golang

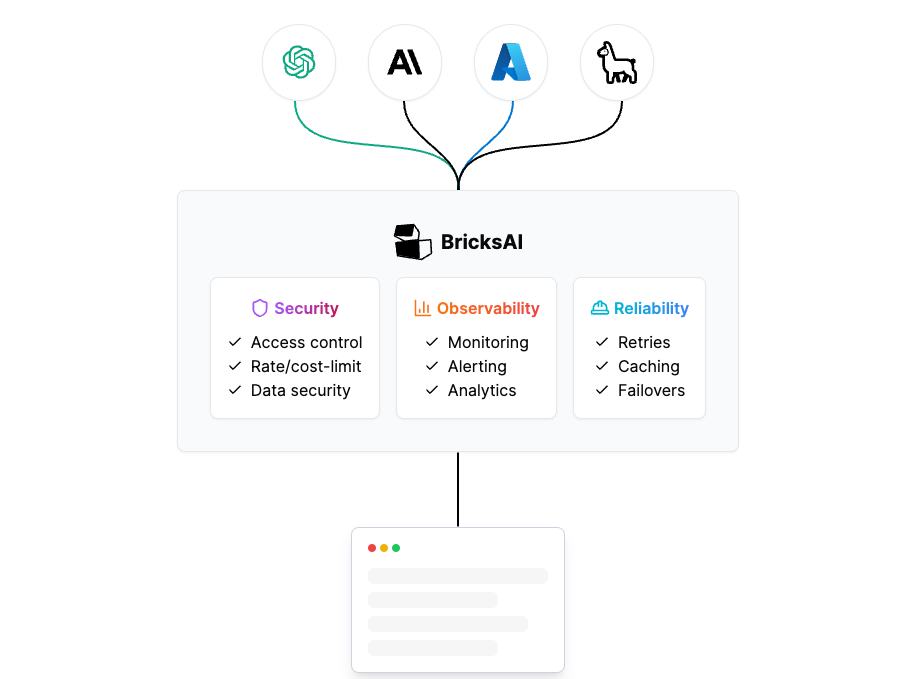

BricksLLM is a cloud-native AI gateway written in Go. It currently provides native support for OpenAI, Anthropic, Azure OpenAI, vLLM and Deepinfra.

BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case.

Use Cases

Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing personally identifiable information (PII)

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

Features

- PII detection and masking

- Rate limiting

- Cost control

- Cost analytics

- Request analytics

- Caching

- Request retries

- Failover

- Model access control

- Endpoint access control

- Native support for all OpenAI endpoints

- Native support for Anthropic

- Native support for Azure OpenAI

- Native support for vLLM

- Native support for Deepinfra

- Support for custom deployments

- Integration with custom models

- Datadog integration

- Logging with privacy control

License

MIT License.