CrawleeAI: Transforming Web Scraping with AI into an Intelligent Data Symphony


Ever found yourself wishing there was an easier way to gather all that amazing information floating around the web?

Well, grab a coffee and let me tell you about something that's been making waves in the developer community - Crawlee!

What is Crawlee?

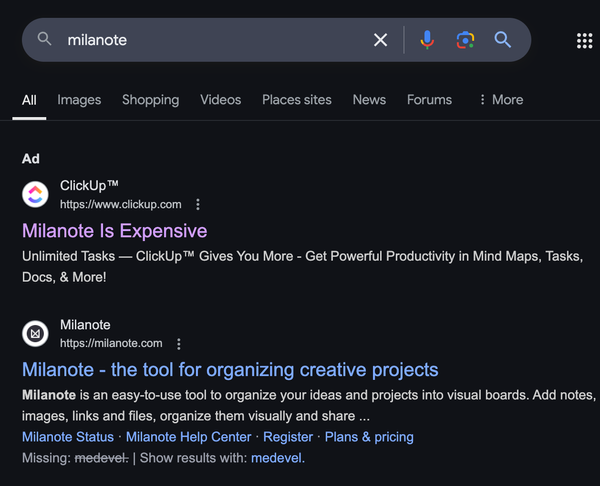

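Crawlee isn't just another scraping library: it's an open-source Node.js library for web scraping and browser automation, designed for efficiency, scalability, and a pleasant developer experience. Whether you're dealing with single-page applications (SPAs), complex websites, or APIs, you can pick a lightweight HTTP-based crawler for plain pages and JSON endpoints or a real-browser crawler for JavaScript-heavy sites.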

Plus, it's written in TypeScript and runs on Node.js, so it's easy to integrate into an existing JavaScript or TypeScript workflow.

Why You'll Love Crawlee

Let me break it down in a way that'll make you smile:

- It's Like a Swiss Army Knife: Whether you're dealing with fancy single-page apps or good old-fashioned websites, Crawlee's got your back. It's like having different tools for different jobs, but all in one neat package!

- It Plays Nice with Others: Remember those times when tools just wouldn't work together? Crawlee gets along with everyone - Puppeteer, Playwright, Cheerio - you name it! It's like having a friend who's friends with everyone. 😊

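- It Grows with You: Starting small? Perfect! Going big? Even better! Crawlee automatically adjusts how much work it runs in parallel based on the CPU and memory available, so the same code scales up or down like a champion, no sweat.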
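Curious what "scaling with available resources" looks like under the hood? Here's a minimal sketch of the general idea: add parallel work while CPU and memory have headroom, and back off when either is overloaded. It is not Crawlee's actual algorithm (Crawlee handles this for you internally, in a component called `AutoscaledPool`); the `nextConcurrency` function, the step size, and the 90% overload threshold are hypothetical choices for illustration.

```javascript
// Hypothetical sketch of resource-based autoscaling (not Crawlee's implementation).
// Decides the concurrency for the next interval from a load sample.
// `sample.cpu` and `sample.memory` are fractions between 0 and 1 (assumed format).
function nextConcurrency(current, sample, limits) {
  const overloaded =
    sample.cpu > limits.overloadThreshold || sample.memory > limits.overloadThreshold;
  // Back off when overloaded, otherwise try to use the spare capacity.
  const proposed = overloaded ? current - limits.step : current + limits.step;
  // Never leave the configured bounds.
  return Math.min(limits.max, Math.max(limits.min, proposed));
}

// Hypothetical limits: the step size and the 90% threshold are illustrative choices.
const limits = { min: 1, max: 50, step: 2, overloadThreshold: 0.9 };

let concurrency = 10;
concurrency = nextConcurrency(concurrency, { cpu: 0.4, memory: 0.5 }, limits); // 12: headroom
concurrency = nextConcurrency(concurrency, { cpu: 0.95, memory: 0.5 }, limits); // 10: CPU overloaded
console.log(concurrency); // 10
```

In day-to-day use you don't write this yourself: you typically just give the crawler bounds such as `minConcurrency` and `maxConcurrency` and let it adjust within them.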

The Cool Features That'll Make Your Life Easier

Let's talk about the fun stuff! Crawlee comes packed with features that'll make you feel like a web scraping superhero:

1- Smart Browsing 🌐

Got different scraping needs? Crawlee's got different crawlers for you! Whether you need a heavy-duty browser crawler (PuppeteerCrawler or PlaywrightCrawler) for complex, JavaScript-heavy sites or the lightweight CheerioCrawler, which parses plain HTML without launching a browser, you're covered.

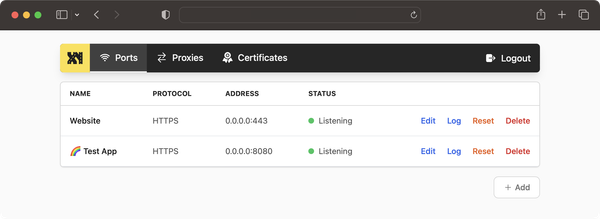

2- Proxy Power 🛡️

Worried about getting blocked? Crawlee's got some neat tricks up its sleeve: automatic proxy rotation, session management that retires identities once they get blocked, and configurable limits on concurrency and request rate. It's like having a personal bouncer for your web scraping!
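Here's a minimal, dependency-free sketch of the two ideas behind this feature: round-robin proxy rotation and a simple rate limiter that keeps a minimum gap between requests. The class names, the placeholder proxy URLs, and the one-second interval are all hypothetical. Crawlee ships its own tooling for this (for example, a `ProxyConfiguration` class that takes a list of `proxyUrls`, and crawler options such as `maxRequestsPerMinute`), so treat this as a picture of the concept, not of Crawlee's internals.

```javascript
// Hypothetical sketch: cycles through a fixed list of proxy URLs in order (round robin).
class ProxyRotator {
  constructor(proxyUrls) {
    if (proxyUrls.length === 0) throw new Error("At least one proxy URL is required");
    this.proxyUrls = proxyUrls;
    this.index = 0;
  }

  next() {
    const proxyUrl = this.proxyUrls[this.index];
    this.index = (this.index + 1) % this.proxyUrls.length;
    return proxyUrl;
  }
}

// Hypothetical sketch: keeps at least `minIntervalMs` between consecutive requests.
// The current time is passed in so the logic stays deterministic.
class MinIntervalLimiter {
  constructor(minIntervalMs) {
    this.minIntervalMs = minIntervalMs;
    this.lastSlot = -Infinity;
  }

  // Reserves the next free slot and returns how long the caller must wait, in ms.
  reserve(nowMs) {
    const wait = Math.max(0, this.lastSlot + this.minIntervalMs - nowMs);
    this.lastSlot = nowMs + wait;
    return wait;
  }
}

// Placeholder proxy URLs; substitute your own.
const rotator = new ProxyRotator(["http://proxy-a.example:8000", "http://proxy-b.example:8000"]);
console.log(rotator.next()); // http://proxy-a.example:8000
console.log(rotator.next()); // http://proxy-b.example:8000
console.log(rotator.next()); // http://proxy-a.example:8000

const limiter = new MinIntervalLimiter(1000); // at most one request per second
console.log(limiter.reserve(0)); // 0: first request goes out immediately
console.log(limiter.reserve(200)); // 800: wait until t = 1000
console.log(limiter.reserve(200)); // 1800: queued behind the previous one, t = 2000
```

Passing the current time in explicitly keeps the limiter deterministic; in a real crawler you'd pass `Date.now()` and sleep for the returned number of milliseconds before sending the request.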

3- Storage Simplified 📦

No more headaches about where to store your data: Crawlee gives you a dataset for tabular results and a key-value store for files such as screenshots, both saved to a local ./storage directory by default. It's like having a personal filing system that just works!
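To picture what "tabular data" storage means, here's a hypothetical in-memory stand-in for a dataset: an append-only list of plain objects with a CSV export. In Crawlee you'd call `Dataset.pushData()` and let the library persist the items for you; the `InMemoryDataset` class below only mimics that idea and is not Crawlee's API.

```javascript
// Hypothetical in-memory stand-in for a dataset: an append-only list of plain objects.
class InMemoryDataset {
  constructor() {
    this.rows = [];
  }

  // Accepts a single object or an array of objects.
  pushData(data) {
    if (Array.isArray(data)) this.rows.push(...data);
    else this.rows.push(data);
  }

  // Exports every row as CSV, using the union of all keys as the columns.
  toCSV() {
    const columns = [];
    for (const row of this.rows) {
      for (const key of Object.keys(row)) {
        if (!columns.includes(key)) columns.push(key);
      }
    }
    // Quote values containing commas, quotes, or newlines; missing values become empty cells.
    const toCell = (value) => {
      if (value === undefined || value === null) return "";
      const text = String(value);
      return /[",\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
    };
    const lines = [columns.join(",")];
    for (const row of this.rows) {
      lines.push(columns.map((column) => toCell(row[column])).join(","));
    }
    return lines.join("\n");
  }
}

const dataset = new InMemoryDataset();
dataset.pushData({ url: "https://example.com", title: "Example Domain" });
dataset.pushData([{ url: "https://example.org", title: "Hello, world" }]);
console.log(dataset.toCSV());
// url,title
// https://example.com,Example Domain
// https://example.org,"Hello, world"
```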

Features

- Single interface for HTTP and headless browser crawling

- Persistent queue for URLs to crawl (breadth & depth first)

- Pluggable storage of both tabular data and files

- Automatic scaling with available system resources

- Integrated proxy rotation and session management

- Lifecycles customizable with hooks

- CLI to bootstrap your projects

- Configurable routing, error handling and retries

- Dockerfiles ready to deploy

- Written in TypeScript with generics
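The "persistent queue (breadth & depth first)" item boils down to two behaviors: skipping URLs that were already queued, and choosing whether new links go to the back of the queue (breadth-first) or the front (depth-first). Crawlee's `RequestQueue` deduplicates requests by a `uniqueKey` derived from the normalized URL and accepts a `forefront` option when adding requests. The `UrlQueue` below is a hypothetical in-memory sketch of those two behaviors, without the persistence layer and with a much simpler URL normalization.

```javascript
// Simplified normalization for deduplication: drop the #fragment and a trailing slash.
function normalizeUrl(url) {
  const parsed = new URL(url);
  parsed.hash = "";
  return parsed.toString().replace(/\/$/, "");
}

// Hypothetical in-memory queue (no persistence) that skips URLs it has already seen.
class UrlQueue {
  constructor() {
    this.pending = [];
    this.seen = new Set();
  }

  // forefront = true puts the URL at the front (depth-first order),
  // otherwise at the back (breadth-first order). Returns false for duplicates.
  add(url, forefront = false) {
    const key = normalizeUrl(url);
    if (this.seen.has(key)) return false;
    this.seen.add(key);
    if (forefront) this.pending.unshift(url);
    else this.pending.push(url);
    return true;
  }

  next() {
    return this.pending.shift();
  }
}

// A tiny made-up site map: page -> links found on that page.
const links = {
  "https://example.com/": ["https://example.com/a", "https://example.com/b"],
  "https://example.com/a": ["https://example.com/a/1", "https://example.com/#top"],
  "https://example.com/b": [],
  "https://example.com/a/1": [],
};

// Simulates a crawl and returns the order in which pages are visited.
function crawlOrder(depthFirst) {
  const queue = new UrlQueue();
  queue.add("https://example.com/");
  const visited = [];
  let url;
  while ((url = queue.next()) !== undefined) {
    visited.push(url);
    const found = links[url] || [];
    // Depth-first adds children to the front, so reverse them to keep page order.
    const ordered = depthFirst ? [...found].reverse() : found;
    for (const link of ordered) queue.add(link, depthFirst);
  }
  return visited;
}

const paths = (urls) => urls.map((u) => new URL(u).pathname).join(" ");
console.log(paths(crawlOrder(false))); // / /a /b /a/1
console.log(paths(crawlOrder(true))); // / /a /a/1 /b
```

Note how the `#top` link on `/a` is dropped as a duplicate of the start page. Without the forefront flag, new links wait behind everything already queued, so the breadth-first run finishes a whole level before going deeper.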

👾 HTTP crawling

- Zero-config HTTP/2 support, even for proxies

- Automatic generation of browser-like headers

- Replication of browser TLS fingerprints

- Integrated fast HTML parsers: Cheerio and JSDOM

- Yes, you can scrape JSON APIs as well
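The trick behind "browser-like headers" is consistency: the User-Agent, client hints, and Accept headers should all describe the same browser, because a Chrome User-Agent paired with Firefox-style headers can be an easy giveaway. Crawlee's HTTP crawlers handle this automatically (they build on the `got-scraping` package). The hypothetical `buildHeaders` function and its two hard-coded profiles below only illustrate the principle; the header values are simplified examples, not a maintained fingerprint database.

```javascript
// Simplified example profiles. Real header generators draw from large, regularly updated datasets.
const PROFILES = [
  {
    name: "chrome",
    userAgent:
      "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    // Client hints such as sec-ch-ua are sent by Chromium-based browsers only.
    secChUa: '"Chromium";v="124", "Google Chrome";v="124", "Not-A.Brand";v="99"',
    accept:
      "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
  },
  {
    name: "firefox",
    userAgent: "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:125.0) Gecko/20100101 Firefox/125.0",
    accept: "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8",
  },
];

// Hypothetical helper: picks ONE profile and derives every header from it,
// so the headers never contradict each other.
// `random` is injectable so the choice can be made reproducible.
function buildHeaders(locale, random = Math.random) {
  const profile = PROFILES[Math.floor(random() * PROFILES.length)];
  const headers = {
    "User-Agent": profile.userAgent,
    Accept: profile.accept,
    "Accept-Language": `${locale},${locale.split("-")[0]};q=0.9`,
  };
  if (profile.secChUa) headers["sec-ch-ua"] = profile.secChUa;
  return headers;
}

console.log(buildHeaders("en-US", () => 0)); // Chrome profile, includes sec-ch-ua
console.log(buildHeaders("en-US", () => 0.99)); // Firefox profile, no sec-ch-ua
```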

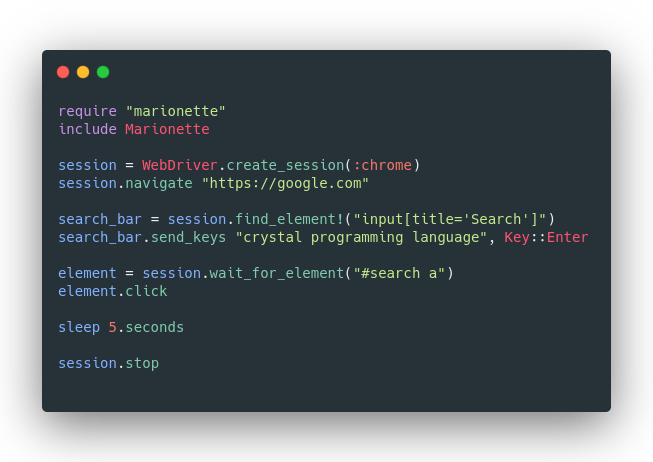

💻 Real browser crawling

- JavaScript rendering and screenshots

- Headless and headful support

- Zero-config generation of human-like fingerprints

- Automatic browser management

- Use Playwright and Puppeteer with the same interface

- Chrome, Firefox, WebKit and many others
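"Same interface" is the classic adapter idea: the crawling logic is written once against a small contract, and each automation library plugs in underneath. In Crawlee, both `PuppeteerCrawler` and `PlaywrightCrawler` hand your `requestHandler` a context with `page` and `request`, and both libraries' page objects provide methods such as `title()`. The sketch below shows the pattern with two hypothetical fake backends instead of real browsers; it is not Crawlee's internal code.

```javascript
// Two hypothetical fake backends standing in for different automation libraries.
// Real browser APIs are asynchronous; these are synchronous to keep the sketch short.
class FakeBackendA {
  constructor() {
    this.currentUrl = "";
  }
  goto(url) {
    this.currentUrl = url;
  }
  title() {
    return `A rendered ${this.currentUrl}`;
  }
}

class FakeBackendB {
  constructor() {
    this.history = [];
  }
  goto(url) {
    this.history.push(url);
  }
  title() {
    return `B rendered ${this.history[this.history.length - 1]}`;
  }
}

// Crawling logic written once against the shared contract: goto(url) and title().
function collectTitles(page, urls) {
  return urls.map((url) => {
    page.goto(url);
    return page.title();
  });
}

const urls = ["https://example.com", "https://example.org"];
console.log(collectTitles(new FakeBackendA(), urls));
console.log(collectTitles(new FakeBackendB(), urls));
```

Swapping backends doesn't touch `collectTitles` at all. With real Crawlee crawlers the switch is similarly small for shared methods like `title()`, though library-specific page APIs still differ.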

Install

```bash
npm install crawlee
```

Or bootstrap a new project with the Crawlee CLI:

```bash
npx crawlee create my-crawler
```

Usage

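```javascript
// Requires: npm install crawlee puppeteer
// Top-level await needs an ES module (a .mjs file or "type": "module" in package.json).
import { PuppeteerCrawler } from 'crawlee';

const crawler = new PuppeteerCrawler({
    // Called once for every page the crawler opens.
    async requestHandler({ page, request }) {
        console.log(`Checking out: ${request.url}`);
        const title = await page.title();
        console.log(`Found this cool title: ${title}`);
    },
});

// Start crawling from a single URL.
await crawler.run(['https://example.com']);
```

License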

This project is licensed under the Apache License 2.0.

Resources & Downloads