DeepSeek’s Database Leak: Why We’re No Longer Rooting for It


We were big fans of DeepSeek. When DeepSeek V3 and DeepSeek R1 came out, we rooted for them as solid open-source AI models that could challenge the big players. Unlike many proprietary models, DeepSeek offered transparency and could run locally, making it a great alternative for privacy-conscious users. We even wrote guides on running DeepSeek R1 locally on Linux and with WebGPU.

But now, after the latest security disaster, we’re not so sure anymore.

What Went Wrong?

According to Wiz Research, DeepSeek made a massive security blunder: one of its databases was publicly accessible on the internet. Anyone who found it could read user chat histories, secret keys, backend and infrastructure details, and possibly more. This wasn't some sophisticated hack; it was pure negligence, a database left wide open without any authentication.

This raises serious concerns about DeepSeek's infrastructure and security practices, and about whether the company takes protecting user data seriously at all.

Why This Is a Big Deal

As a developer, I know that database misconfigurations happen, but when you’re running an AI model at this scale, there’s no excuse for sloppy security.

As a doctor, I see AI models becoming increasingly important in medicine, from diagnostic assistance to medical research. If an AI company can’t even secure its infrastructure, how can we trust it with sensitive medical data or any user interactions?

As an AI user, I want to believe in open-source AI and its potential to challenge the tech giants. But if the developers behind these models are careless, it makes it hard to trust them in the long run.

DeepSeek's Future: Can It Recover?

This security failure is more than an embarrassing mistake; it's a major red flag. Here's why:

- Reputation Damage: Users and developers lose trust in AI providers that don't take security seriously.

- Privacy Concerns: If they were this careless with their own data, how can we trust them with ours?

- Regulatory Scrutiny: With AI regulations tightening, incidents like this make companies a target for restrictions.

- Competitive Disadvantage: Competitors such as OpenAI, Mistral, and Meta's Llama 3 now look more appealing, even though not all of them are fully open-source.

What Can You Do?

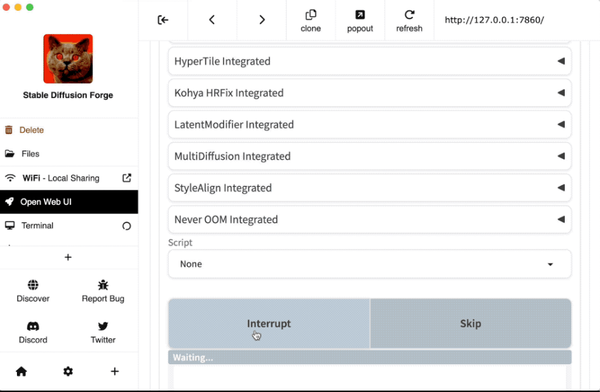

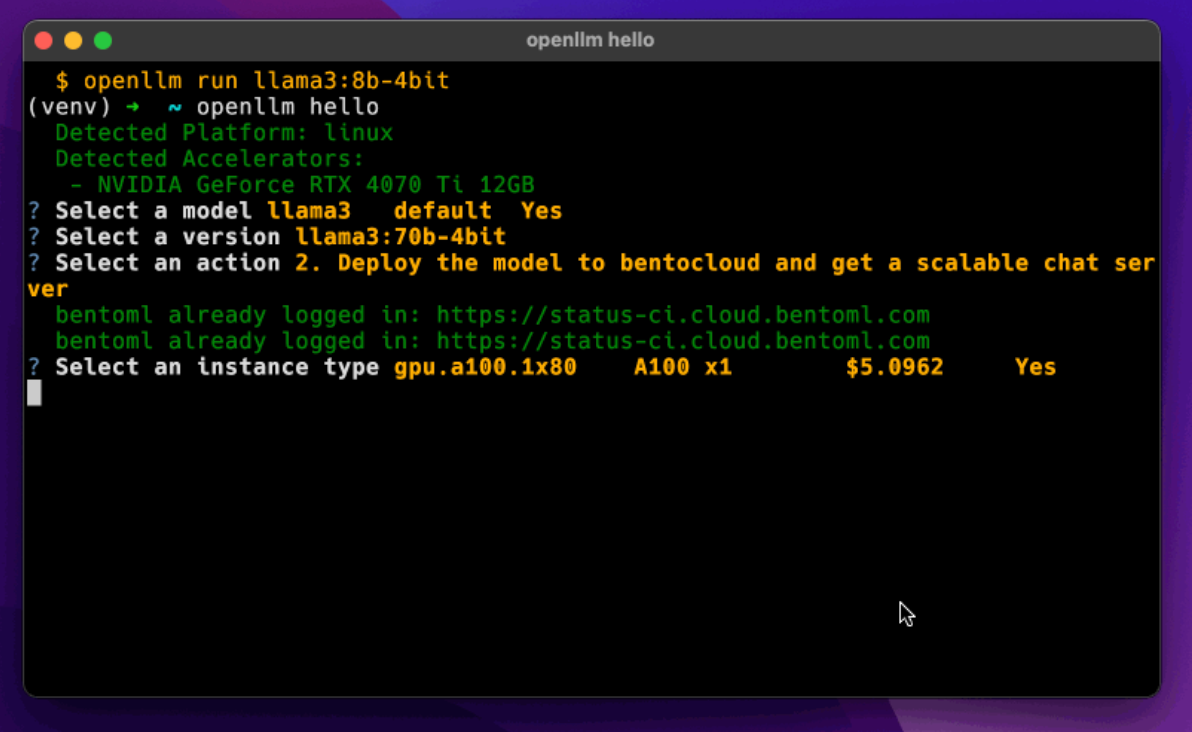

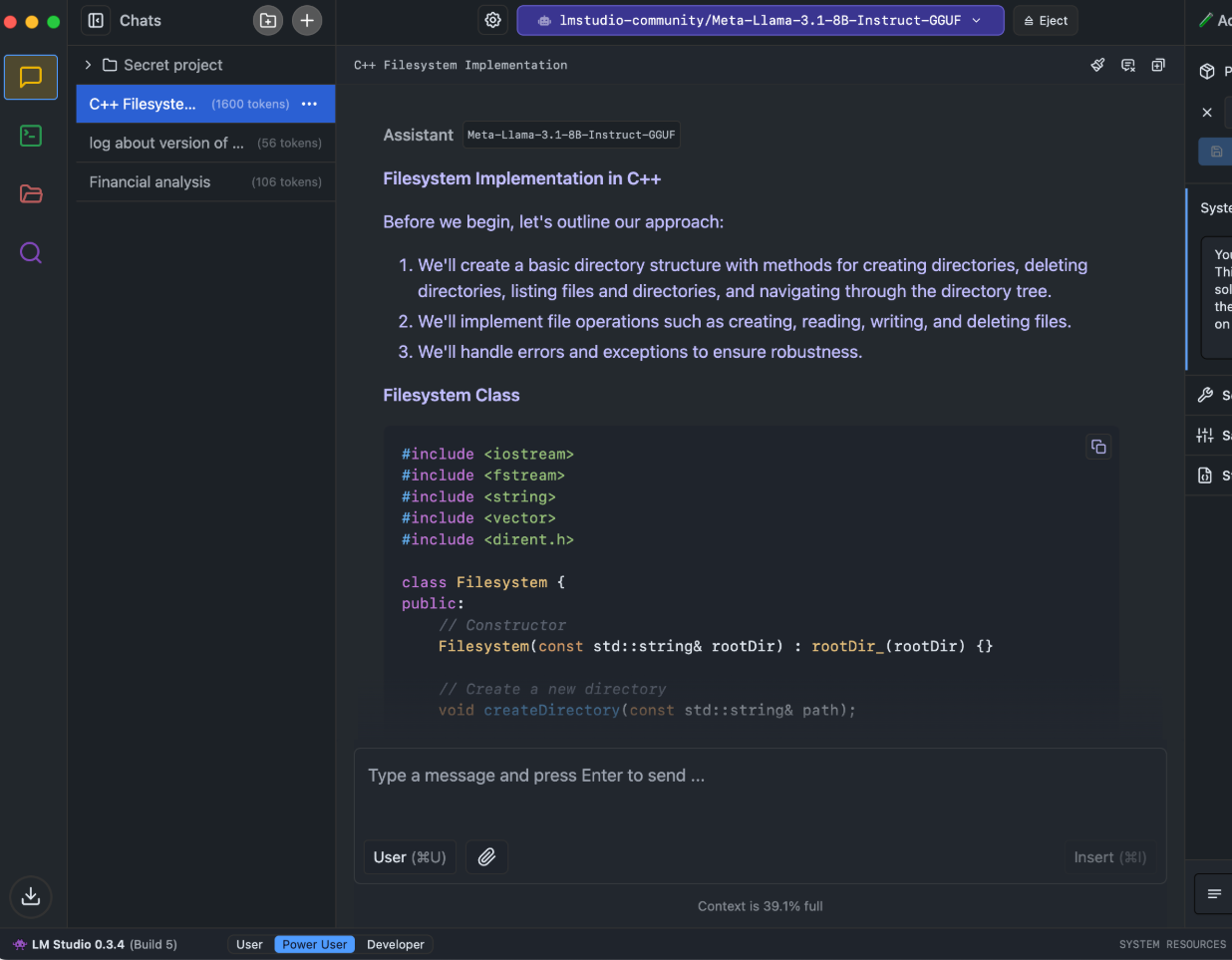

Here's the good news: you don't have to rely on DeepSeek's cloud infrastructure to use its models. One of their biggest advantages is that you can run them locally on your own machine:

- No data leaks to DeepSeek. Your queries never leave your machine.

- No reliance on their servers. Security issues on their end won’t affect you.

- Potentially faster responses. On capable hardware, a local install or a WebGPU build skips network round-trips and server queues, which can make AI chatbots feel more responsive.
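To make the "your queries stay private" point concrete, here is a minimal Python sketch of a client-side guard that only sends prompts to a model server on your own machine. It assumes a local server exposing an Ollama-style `/api/generate` endpoint on the default port 11434 and a model tag `deepseek-r1`; both are assumptions for illustration, and the helper names `is_local_endpoint` and `build_local_request` are hypothetical. Adjust them to whatever local setup you actually run.

```python
import ipaddress
import json
import urllib.request
from urllib.parse import urlparse

# Assumption: a local model server with an Ollama-style /api/generate endpoint
# on its default port. Replace with whatever local setup you actually run.
LOCAL_ENDPOINT = "http://localhost:11434/api/generate"


def is_local_endpoint(url: str) -> bool:
    """Return True only if the URL points at this machine (loopback)."""
    host = urlparse(url).hostname  # lowercased; IPv6 brackets are stripped
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not an IP literal (e.g. a public domain name): treat it as remote.
        return False


def build_local_request(url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for a local model, refusing any non-local URL."""
    if not is_local_endpoint(url):
        raise ValueError(f"refusing to send prompt to non-local endpoint: {url}")
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical model tag; use the name your local server reports.
    req = build_local_request(LOCAL_ENDPOINT, "deepseek-r1", "Summarize this note.")
    print(req.full_url, req.get_method())
```

Sending the request is then a single `urllib.request.urlopen(req)` call. The guard does not resolve DNS names, so any hostname other than `localhost` is treated as remote, which errs on the side of caution.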

If you're interested in using DeepSeek safely, check out our earlier guides to running it locally.

Final Thoughts

We still believe in open-source AI, but security matters. DeepSeek has a long way to go to rebuild trust, and until it proves it takes security seriously, we're not recommending its cloud-hosted service anymore.

The takeaway? Run it locally, own your data, and never blindly trust any AI provider, open-source or not. 🚀