LangWatch is an Open-source LLM Monitoring & Optimization Studio


What is LangWatch?

Tired of wrestling with LLM pipelines? Say hello to LangWatch, the tool that turns chaos into clarity. Whether you’re building chatbots, automating workflows, or just trying to stop your LLM from hallucinating your grocery list, LangWatch is here to save the day.

Imagine a world where optimizing prompts is as easy as dragging blocks, debugging is actually fun, and your LLM costs don’t give you nightmares. That’s LangWatch. Built on Stanford’s DSPy framework, it’s like giving your AI a supercharged brain and a dashboard to keep it in check.

Use Cases

- Startups: Optimize your chatbot’s responses without hiring an army of prompt engineers.

- Enterprises: Ensure your AI stays compliant while scaling across teams.

- Solo Devs: Experiment fearlessly. LangWatch has your back if things go sideways.

Features

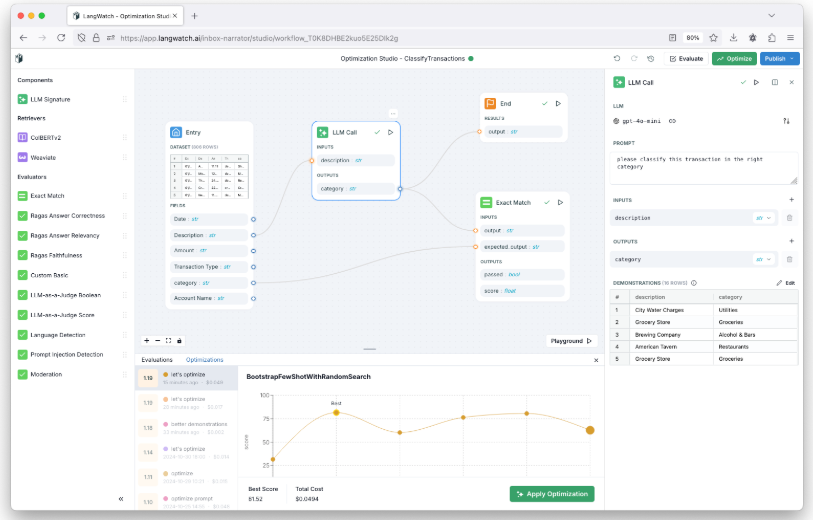

1. Drag-and-Drop Optimization Studio

Build and tweak LLM pipelines like you’re solving a puzzle. No PhD required.

- Auto-generate prompts and examples (because writing 50 variations is so 2023).

- Track experiments visually: no more “Wait, which version worked again?”

2. Quality Control That’s Actually Fun

- 30+ evaluators pre-loaded: Test for bias, accuracy, even vibes (okay, maybe not vibes… yet).

- Build your own custom checks for those “specifically weird” edge cases.

- Compliance scans to keep your AI from accidentally becoming a meme.
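Curious what a “custom check” boils down to? Here is a minimal sketch in plain Python of a home-grown edge-case check. It is not LangWatch’s evaluator API: `CheckResult`, `custom_check`, and its parameters are hypothetical names made up for illustration. It runs two sub-checks on a single LLM output (no banned phrases, and stay within a word budget) and scores the fraction that passed.

```python
from dataclasses import dataclass, field


@dataclass
class CheckResult:
    """Outcome of one custom check (hypothetical shape, not LangWatch's schema)."""
    passed: bool
    score: float  # fraction of sub-checks that passed, from 0.0 to 1.0
    details: list[str] = field(default_factory=list)


def custom_check(output: str, banned_phrases: list[str], max_words: int) -> CheckResult:
    """Hypothetical edge-case check: no banned phrases, and stay within a word budget."""
    problems: list[str] = []

    # Sub-check 1: case-insensitive search for any banned phrase.
    lowered = output.lower()
    hits = [p for p in banned_phrases if p.lower() in lowered]
    if hits:
        problems.append(f"banned phrases found: {hits}")

    # Sub-check 2: the whitespace-separated word count must not exceed the budget.
    n_words = len(output.split())
    if n_words > max_words:
        problems.append(f"too long: {n_words} words > {max_words}")

    total_checks = 2
    score = (total_checks - len(problems)) / total_checks
    return CheckResult(passed=not problems, score=score, details=problems)


if __name__ == "__main__":
    result = custom_check(
        "Pineapple on pizza is a great answer.",
        banned_phrases=["pineapple on pizza"],
        max_words=50,
    )
    print(result.passed, result.score, result.details)
```

Returning a score alongside a pass/fail flag lets you track partial failures over time instead of only counting outright misses.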

3. Real-Time Monitoring & Analytics

- Track costs, performance, and user happiness in one place.

- Debug like a wizard with traceable LLM “thoughts” (yes, you can finally see why it thought “pineapple on pizza” was a great answer).

- Create custom dashboards to impress your boss (or just stalk your metrics).
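Under the hood, cost tracking is mostly arithmetic: input tokens times the input price, plus output tokens times the output price, summed per model. The sketch below shows that calculation in plain Python. The model names and prices are placeholders (not real vendor pricing), and `LLMCall`, `call_cost`, and `cost_by_model` are hypothetical helpers, not part of LangWatch’s SDK.

```python
from collections import defaultdict
from dataclasses import dataclass

# Placeholder prices in USD per 1M tokens (hypothetical, NOT real vendor pricing).
PRICES_PER_MILLION = {
    "model-a": {"input": 2.50, "output": 10.00},
    "model-b": {"input": 0.15, "output": 0.60},
}


@dataclass
class LLMCall:
    """One logged LLM call (hypothetical record shape)."""
    model: str
    prompt_tokens: int
    completion_tokens: int


def call_cost(call: LLMCall) -> float:
    """Input tokens at the input rate plus output tokens at the output rate."""
    price = PRICES_PER_MILLION[call.model]
    return (
        call.prompt_tokens * price["input"] + call.completion_tokens * price["output"]
    ) / 1_000_000


def cost_by_model(calls: list[LLMCall]) -> dict[str, float]:
    """Sum per-call costs, grouped by model name."""
    totals: dict[str, float] = defaultdict(float)
    for call in calls:
        totals[call.model] += call_cost(call)
    return dict(totals)


if __name__ == "__main__":
    log = [
        LLMCall("model-a", prompt_tokens=1_000, completion_tokens=500),
        LLMCall("model-b", prompt_tokens=2_000, completion_tokens=1_000),
        LLMCall("model-a", prompt_tokens=3_000, completion_tokens=0),
    ]
    for model, usd in cost_by_model(log).items():
        print(f"{model}: ${usd:.6f}")
```

Input and output tokens are priced separately because output tokens usually cost more, so a chatty model can blow the budget even with short prompts.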

4. Cloud Magic

Deploy pipelines faster than you can say “serverless.” Focus on building, not infrastructure.

License

Apache License, Version 2.0
