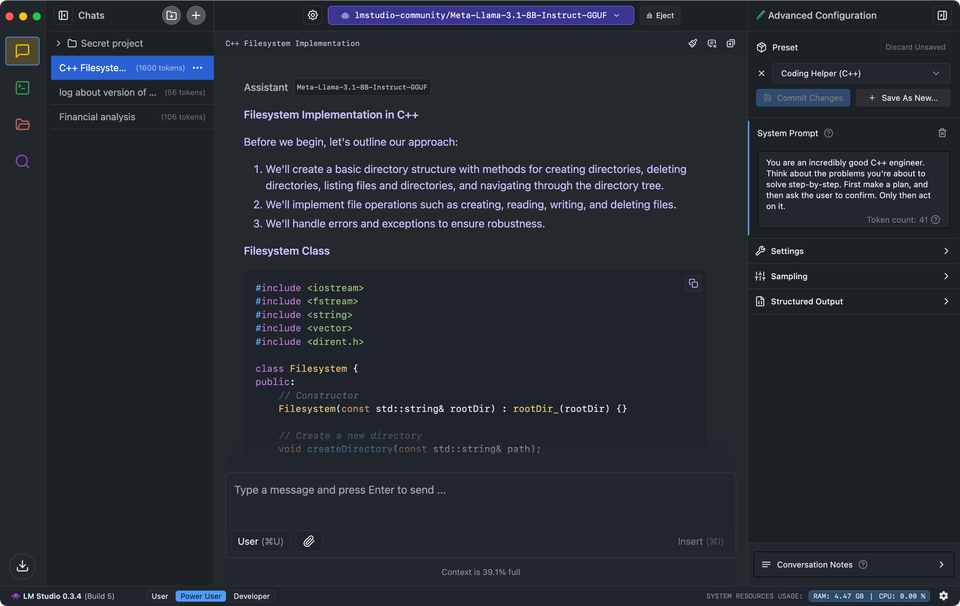

Why Choose LMStudio.AI for Local AI Model Hosting?

Running Language Models on Your Computer: The Ultimate Guide to LMStudio.AI


Want to harness cutting-edge text generation technology without relying on cloud services?

Then, LMStudio.AI might be exactly what you're looking for!

This friendly guide will walk you through everything you need to know about this game-changing tool that puts powerful language technology right on your personal computer.

Why LMStudio.AI Is Making Waves

Picture a sophisticated text generation system running smoothly on your own device: that's exactly what LMStudio.AI delivers! Once you have downloaded a model, this clever tool lets you work with advanced language technology without depending on an internet connection or external servers.

Whether you're a curious beginner or a seasoned tech enthusiast, LMStudio.AI opens up exciting possibilities for local language model deployment.

Top Reasons to Give LMStudio.AI a Try

1- Keep Your Information Private, Safe and Sound

Running everything locally with LMStudio.AI keeps your sensitive data exactly where it belongs: on your own device. Your prompts and responses are not sent to a cloud service, which reduces the risk of third-party data breaches and leaves you in full control of your information. The result is more privacy and more peace of mind.

2- Offline-first: Work Anywhere, Anytime

Say farewell to internet dependency with LMStudio.AI! Once your models are downloaded, this powerful tool runs fully offline, making it an ideal solution for remote work or for areas with unreliable or limited internet connectivity.

3- Save Your Hard-Earned Money

Why pay for subscriptions such as ChatGPT Plus and similar cloud-based services when you can run models locally with LMStudio.AI? By skipping monthly fees, you can save significantly while keeping complete control over your projects and data.

4- Make It Your Own

Love to tinker? LMStudio.AI lets you pick from many models and quantization levels, then adjust settings such as the system prompt, temperature, and context length to match your exact needs. The sky's the limit when it comes to experimentation!
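For developers, LM Studio can also serve a loaded model through a local server that speaks an OpenAI-compatible API, so the same kind of customization can be scripted. The minimal sketch below assumes that server is enabled at its default address, http://localhost:1234, and that a model is already loaded. The model name llama-3.2-3b-instruct is a hypothetical placeholder: substitute the identifier LM Studio shows for your model. It uses only Python's standard library and sets a custom system prompt, temperature, and token limit per request.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at its default address.
BASE_URL = "http://localhost:1234/v1"
# Hypothetical model identifier; replace with the name of the model you loaded.
MODEL = "llama-3.2-3b-instruct"


def build_chat_request(user_prompt, system_prompt="You are a concise assistant.",
                       temperature=0.7, max_tokens=256):
    """Build an OpenAI-style chat-completions payload with custom settings."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": system_prompt},  # shapes overall behavior
            {"role": "user", "content": user_prompt},
        ],
        "temperature": temperature,  # lower values give more predictable output
        "max_tokens": max_tokens,    # upper limit on generated tokens
    }


def send_chat_request(payload):
    """POST the payload to the local server and return the reply text."""
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses put the generated text in choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

With a model loaded, calling send_chat_request(build_chat_request("Summarize this paragraph.", temperature=0.2)) returns the model's reply as a string. Because the URL points at localhost, the prompt is handled on your own machine.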

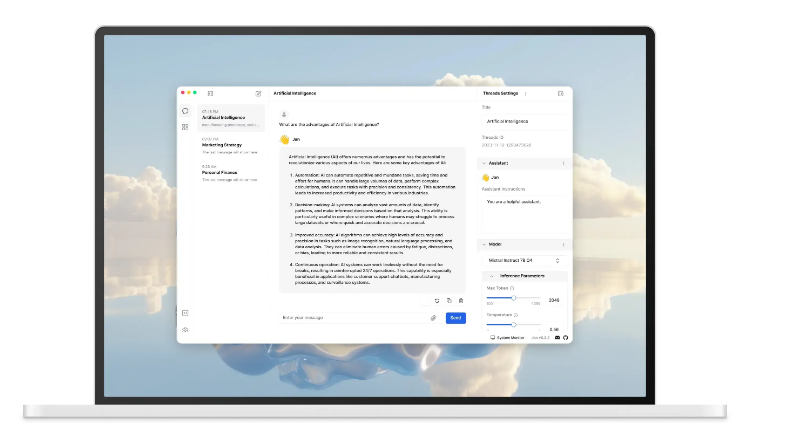

5- User-Friendly Design

No need to be a tech genius! LMStudio.AI is designed to be simple and straightforward, so even beginners can dive right in and start working with language models effortlessly.

Exciting Features That Set LMStudio.AI Apart

Local Power, Global Potential

- 💻 Run sophisticated language models right on your computer

- 🔓 Access a wide range of openly available models (subject to each model's license)

- 🎯 Shape the technology to fit your unique goals

- 🌐 Enjoy smooth performance across Windows, Mac, and Linux

- 🎨 Navigate easily with a clean, intuitive interface

- 🔧 Tailor model behavior for specialized tasks with system prompts and inference settings

- 💪 Run quantized models with good results on standard consumer hardware
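Whether a model runs well on standard hardware mostly comes down to memory. A common back-of-the-envelope estimate (a general rule of thumb, not an LM Studio feature) is that the weights need about parameters × bits per weight ÷ 8 bytes. This is why quantized models, such as the GGUF files LM Studio typically works with, fit on ordinary machines. The sketch below applies that formula to a hypothetical 8-billion-parameter model. Real files vary somewhat because quantization formats store extra scaling data, and the context window needs additional memory on top of the weights.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights only, in GB (1 GB = 10**9 bytes).

    Ignores the KV cache, activations, and runtime overhead, which add more
    depending on context length and backend.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Hypothetical example: an 8-billion-parameter model at different precisions
for bits in (16, 8, 4):
    print(f"8B model @ {bits}-bit: ~{weight_memory_gb(8, bits):.1f} GB")
```

For that example this prints about 16.0 GB at 16-bit, 8.0 GB at 8-bit, and 4.0 GB at 4-bit, which is why a 4-bit quantization is often the practical choice on a laptop with 8 to 16 GB of RAM.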

Supported LLMs

LMStudio.AI works with a variety of amazing large language models that you can easily download and run right on your device! Some of the supported models include:

- Llama 3.2

- Mistral

- Phi 3.1

- Gemma 2

- DeepSeek V2.5

- Qwen 2.5

Works Great On...

- Windows: Quick setup, reliable performance

- macOS: Smooth integration with optimized support for Apple Silicon (M-series) Macs

- Linux: Perfect for open-source enthusiasts

Ready to Transform Your Text Generation Projects?

LMStudio.AI is changing how many people work with language technology. By bringing powerful text generation capabilities to your local machine, it offers strong privacy, good value, and plenty of flexibility.

Whether you're creating content, conducting research, or building innovative applications, LMStudio.AI provides the tools you need to succeed.

The best part? You don't need to be a tech wizard to get started. LMStudio.AI's friendly interface welcomes everyone from curious beginners to experienced developers. It's time to discover what you can create with language technology that works for you.

Start Your Journey Today!

Ready to explore the possibilities? Visit LMStudio.AI to begin your adventure with local language model deployment. Join the growing community of creators, researchers, and innovators who are discovering the freedom of running language models on their own terms.

License

Free to use (the LM Studio desktop app itself is proprietary, not open-source)

Remember: Your creativity + LMStudio.AI's capabilities = Endless possibilities! 🚀
