Messing Around with AI Models This Week – What We Learned in Our AI Club

So, this past week in our local AI Club, we went down a rabbit hole of LLMs, parameters, vector databases, and a whole bunch of AI-related experiments. Honestly, I thought I knew quite a bit about models, but once we started comparing them side by side, things got real interesting.

Some models felt snappy and efficient, others felt bloated, and some just straight-up hallucinated nonsense (I see you, cheap open-weight models 🙄).

I’m just gonna dump my thoughts here, the way we talked about it in the club—no fancy corporate AI jargon, just raw experience.

1. Model Parameters – Bigger Ain’t Always Better

One of the first things we tackled was parameters. You hear this all the time:

- "X model has a trillion parameters, so it's smarter!"

- "More parameters = better performance!"

Well, yeah, but also nah. We actually ran some real-world comparisons, and sometimes a well-optimized smaller model outperforms a bloated trillion-parameter one—especially when it comes to speed and efficiency.
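
To put rough numbers on "bloated", here's a back-of-the-envelope sketch. It assumes the weights dominate memory use and ignores activations and the KV cache, and the parameter counts are illustrative round numbers, not the specs of any model we tested. The `weight_memory_gb` helper is just a name I made up for this.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: weights dominate; activations and KV cache are ignored.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return approximate weight memory in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Illustrative round parameter counts, not official figures for any model.
for name, params in [("7B model", 7e9), ("70B model", 70e9), ("1T model", 1e12)]:
    fp16 = weight_memory_gb(params, "fp16")
    int4 = weight_memory_gb(params, "int4")
    print(f"{name}: ~{fp16:.0f} GB in fp16, ~{int4:.1f} GB in int4")
```

Even before you measure speed, this is a big part of why a smaller model is cheaper to run: fewer bytes to load and move around for every token.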

🔹 Example: We tested Mistral, which is relatively lightweight, against GPT-4 Turbo on specific reasoning tasks.

Guess what? Mistral kept up surprisingly well for basic tasks, while GPT-4 Turbo took its sweet time thinking too hard about everything. Made me wonder—do we really need these massive models for simple tasks?
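
If you want to repeat this kind of comparison, a minimal timing harness could look like the sketch below. Big caveat: `small_model` and `large_model` are hypothetical stand-ins that just sleep, not real API calls, so the numbers mean nothing until you swap in your own client code. This is not the exact script we used.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_model(ask: Callable[[str], str], prompts: List[str], runs: int = 3) -> float:
    """Return the median wall-clock seconds per prompt for one model."""
    timings = []
    for _ in range(runs):
        for prompt in prompts:
            start = time.perf_counter()
            ask(prompt)
            timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Hypothetical stand-ins: replace the bodies with real calls to your models.
def small_model(prompt: str) -> str:
    time.sleep(0.01)  # pretend this is a fast, lightweight model
    return "short answer"


def large_model(prompt: str) -> str:
    time.sleep(0.05)  # pretend this is a slower, heavier model
    return "long, careful answer"


prompts = ["What is 17 + 25?", "Name the capital of France."]
results: Dict[str, float] = {
    "small": time_model(small_model, prompts),
    "large": time_model(large_model, prompts),
}
for name, seconds in results.items():
    print(f"{name}: {seconds * 1000:.0f} ms median per prompt")
```

The median is used instead of the mean so one slow network hiccup doesn't skew the result. Latency is only half the story, though: you still have to check the answers by hand.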

Lesson learned: Don’t get distracted by the numbers. A smaller, well-optimized model might be the better choice if all you need is quick, solid responses.

2. Choosing the Right Model – More of an Art Than Science

Okay, this is where things got chaotic in our discussions.

Everyone had their favorite model, and no one could agree.

Some of us liked Claude 3 for its speed, others preferred Llama 3 because its weights are openly available and it’s easier to self-host, and a few were die-hard GPT-5 fans.

So we asked: How do you actually CHOOSE the right AI model?

Turns out, it depends on what you’re using it for:

- Need something snappy for customer interactions? → Use a smaller, fast-response model.

- Need solid reasoning and high-quality outputs? → GPT-5 or Claude 3.

- Running your own stuff locally? → Llama 3, Falcon, or Mistral.
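
The rules of thumb above can be written down as a tiny lookup. This is a sketch of how I think about it, not a real routing library: `Task` and `suggest_models` are made-up names, and the order of the checks (local first, then reasoning, then latency) is my own assumption.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Task:
    needs_low_latency: bool = False
    needs_deep_reasoning: bool = False
    must_run_locally: bool = False


def suggest_models(task: Task) -> List[str]:
    """Map task requirements to the model families from the list above."""
    # Assumed priority: a hard constraint (local hosting) wins over preferences.
    if task.must_run_locally:
        return ["Llama 3", "Falcon", "Mistral"]
    if task.needs_deep_reasoning:
        return ["GPT-5", "Claude 3"]
    if task.needs_low_latency:
        return ["a smaller, fast-response model"]
    return ["start small and scale up only if quality falls short"]


print(suggest_models(Task(needs_low_latency=True)))
print(suggest_models(Task(must_run_locally=True, needs_deep_reasoning=True)))
```

Writing it out like this made the trade-offs obvious: the moment two requirements conflict, you have to decide which one wins.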

👉 Personal take: I really wanted to like GPT-5, but the more we tested, the more I realized it’s overkill for most tasks. For anything lightweight, Mixtral or even an optimized Llama model does just fine.

Why burn GPUs for no reason? 🤷

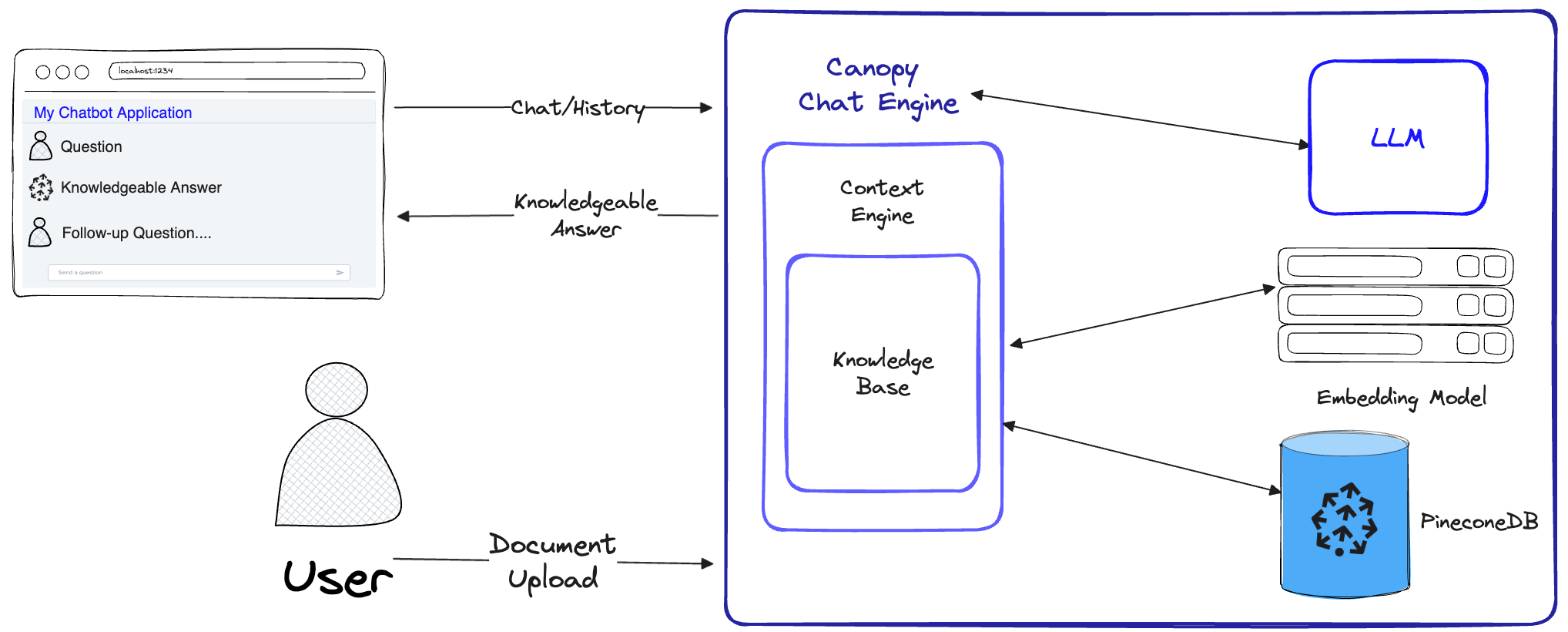

3. Vector Databases – The Secret Sauce of Smart AI

This part caught a lot of us off guard. Before this, I knew what vector databases were, but actually working with them made me realize just how much they change the game.

🔹 What’s a Vector Database?

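Instead of storing data like a normal database (where you mostly match exact values or keywords), a vector database stores embeddings: lists of numbers that capture what a piece of text means. Searching it returns the entries whose embeddings are closest to your query, so it can find things that are similar in meaning even when the words don’t match.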
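
The "search by meaning" idea fits in a few lines of plain Python. To be clear, this is a toy, not the API of FAISS, Pinecone, or Weaviate: `ToyVectorStore` is a made-up class, the 3-number vectors are hand-written instead of coming from an embedding model, and it compares the query against every entry, which real systems avoid with smarter indexing.

```python
import math
from typing import List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as similar to nothing
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Brute-force in-memory store, for illustration only."""

    def __init__(self) -> None:
        self._items: List[Tuple[List[float], str]] = []

    def add(self, vector: List[float], text: str) -> None:
        self._items.append((vector, text))

    def search(self, query: List[float], k: int = 1) -> List[Tuple[float, str]]:
        """Return the k stored texts most similar to the query vector."""
        scored = [(cosine_similarity(query, vec), text) for vec, text in self._items]
        return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


# Hand-made "embeddings" (hypothetical); a real embedding model would produce these.
store = ToyVectorStore()
store.add([0.9, 0.1, 0.0], "How to reset your password")
store.add([0.1, 0.9, 0.0], "Club meeting schedule")
store.add([0.0, 0.2, 0.9], "GPU setup notes")

query = [0.8, 0.2, 0.1]  # pretend this is the embedding of "I forgot my login"
top = store.search(query, k=1)
print(top[0][1])
```

Note that the query shares no words with the stored text. The match comes purely from the vectors pointing in a similar direction.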

🔹 Why does this matter?

Ever asked an LLM a question and it confidently hallucinates some made-up facts? Yep, that’s because standard AI models rely on whatever they were trained on, not fresh data.

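Vector databases help with this by letting the AI look up relevant, up-to-date information at question time and use it as context, instead of relying only on what it memorized during training.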

We played around with FAISS, Pinecone, and Weaviate, feeding them our own custom data to see how well they’d respond. The difference? Mind-blowing.

A regular LLM would just make stuff up, but when paired with a vector database, it fetched ACTUAL, relevant knowledge instead of guessing.

👉 Takeaway: If you want an AI that doesn’t just confidently lie, integrating it with a vector database is the way forward.

4. The Fun Part – Actually Playing with AI Models

This was the best part of the week—just throwing random stuff at different AI models and seeing how they respond.

Here’s how some of them did:

- ✅ Claude 3 – Surprisingly good at nuanced reasoning, but slow at times.

- ✅ GPT-5 – Insanely powerful, but overkill for a lot of tasks.

- ✅ Mistral – Fast, efficient, and just “good enough” for most cases.

- ✅ Llama 3 – Best for local self-hosting; if you like control, it’s solid.

- ✅ Mixtral – Balanced, super efficient, and the best of the bunch for multilingual tasks.

- ✅ Falcon – A little underwhelming tbh, but okay for specific use cases.

Also, RAG-powered models with vector search?

🛑 Absolute game-changers. 🛑

Once we set up RAG (Retrieval-Augmented Generation), the same models with retrieval bolted on just crushed their plain versions on tasks that needed fresh or custom knowledge. If you’re building AI for anything research-heavy, this is the future.
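
Stripped down, the RAG loop is just "retrieve, stuff into the prompt, generate". Here's a minimal sketch under some loud assumptions: `retrieve`, `build_prompt`, `answer`, and `echo_model` are all made-up names, retrieval uses crude word overlap as a stand-in for real vector search, the documents are made-up sample data, and `echo_model` just returns the prompt so you can see what the LLM would receive.

```python
from typing import Callable, List


def retrieve(question: str, documents: List[str], k: int = 2) -> List[str]:
    """Rank documents by words shared with the question (stand-in for vector search)."""
    q_words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(q_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, context: List[str]) -> str:
    """Put the retrieved snippets in front of the question."""
    joined = "\n".join(f"- {snippet}" for snippet in context)
    return (
        "Answer using only the context below. "
        "If the answer is not there, say you don't know.\n"
        f"Context:\n{joined}\n\nQuestion: {question}"
    )


def answer(question: str, documents: List[str],
           generate: Callable[[str], str], k: int = 2) -> str:
    """Retrieve context, build the prompt, and hand it to the model."""
    return generate(build_prompt(question, retrieve(question, documents, k)))


# Made-up sample data for the demo.
docs = [
    "The club meets every Thursday at 6 pm.",
    "FAISS is a library for similarity search over vectors.",
    "Mixtral is a mixture-of-experts model.",
]


def echo_model(prompt: str) -> str:
    """Hypothetical placeholder for a real LLM call; returns the prompt unchanged."""
    return prompt


print(answer("When does the club meet?", docs, echo_model, k=1))
```

The "say you don't know" instruction matters about as much as the retrieval itself. Without it, the model is still free to guess when the context comes back empty.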

5. The Good, The Bad & The Ugly

Let’s be real—AI is amazing, but also kinda annoying. Some moments from this week:

😤 The Frustrating Part:

- Setting up FAISS for the first time? Nightmare. I forgot dependencies, broke my environment twice, and almost rage-quit.

- Watching LLMs confidently lie about facts we knew were wrong. (Why do models love making up book citations?)

😍 The Exciting Part:

- Seeing a properly set up RAG system fetch ACTUAL real-time data instead of guessing.

- Watching smaller models like Mistral compete with GPT-5 on many tasks (David vs. Goliath moment 💪).

- Discovering that not every AI problem needs a giant trillion-parameter model.

Final Thoughts – What We Took Away From This Week

After spending days testing, comparing, and occasionally breaking things, we realized a few things:

- Size isn’t everything – A well-optimized model can outshine a bigger one.

- Vector databases make AI smarter – Far fewer hallucinations, because answers are grounded in facts the system actually retrieved.

- Different models for different jobs – There’s no "one best AI," just the best for your specific use case.

If you’re diving into LLMs and AI in 2025, don’t just assume bigger = better. Experiment, break things, and actually test models for yourself. It’s the only way to really see what works and what doesn’t.

This was one of the best learning weeks we’ve had in our AI Club—excited to see where this tech goes next. 🚀