19 Open-source Free RAG Frameworks and Solutions for AI Engineers and Developers - Limit AI Hallucinations


After covering dozens of AI tools over the years—from simple chatbots to sophisticated enterprise solutions—I'm excited to share what might be the most significant advancement in AI development: RAG systems. Whether you're a developer looking to build better AI solutions or an end-user wondering why your AI tools are getting smarter, this post is for you.

RAG Systems: Why They're the Next Big Thing in AI (And Why You Should Care)

What's RAG, and Why Should You Care?

First off, let's break down RAG (Retrieval-Augmented Generation) in plain English. You know how sometimes AI chatbots make stuff up or give outdated information? RAG systems are like giving your AI a reliable research assistant who fact-checks everything before speaking. Pretty cool, right?

Having tested countless AI tools over the years, I can tell you that RAG is a game-changer. It combines the best of two worlds:

- The ability to search through massive amounts of data (the retrieval part)

- The smart, human-like responses we love from AI (the generation part)

As someone who's watched the AI space evolve, I can tell you that RAG is different. It's not just another buzzword—it's solving real problems:

- Fewer AI hallucinations (those frustrating made-up answers), because responses are grounded in retrieved sources

- Information that stays as current as the data you index

- Customizable for specific industries

- More reliable and trustworthy responses

How Does RAG Actually Work?

Think of RAG as a three-step dance:

- Find: It searches through your data (like a super-powered Ctrl+F)

- Think: It processes what it found (like a smart analyst)

- Respond: It creates a helpful answer (like a knowledgeable colleague)
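To make the three steps concrete, here is a minimal sketch in plain Python. It is a toy, not how any of the tools below work internally: retrieval uses simple word-overlap cosine similarity instead of real embeddings, and `generate` is a hypothetical stand-in for whatever LLM call you would plug in. All function names are illustrative assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=2):
    """Find: rank documents by similarity to the query and keep the best top_k."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query at all.
    return [d for score, d in scored[:top_k] if score > 0]


def build_prompt(query, passages):
    """Think: place the retrieved passages in front of the question."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the numbered context below and cite the numbers.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


def answer(query, documents, generate):
    """Respond: hand the grounded prompt to any text-generation callable."""
    return generate(build_prompt(query, retrieve(query, documents)))


DOCS = [
    "RAGFlow is a self-hosted RAG engine based on deep document understanding.",
    "Canopy is a RAG framework built on top of the Pinecone vector database.",
    "Mem0 adds a memory layer to AI assistants.",
]

if __name__ == "__main__":
    # Assumption: echo the prompt instead of calling a real model.
    print(answer("Which framework is built on Pinecone?", DOCS, generate=lambda p: p))
```

In a real system you would swap the word-overlap scoring for an embedding model plus a vector store, and `generate` for an actual LLM client. The shape of the pipeline stays the same.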

Real-World Magic: Where RAG Shines

After years of reviewing AI tools, here are some of the most impressive uses we've seen:

🏥 Healthcare and Medical Sector

Remember those medical chatbots that used to give generic answers? With RAG, they're now pulling from actual medical journals and patient histories. Doctors are using these systems to stay up-to-date with the latest research while treating patients.

⚖️ Legal Work

Law firms we've worked with are using RAG-powered tools to analyze thousands of cases in seconds. Imagine having a legal assistant who's read every case law ever written!

💰 Finance & Accounting

Remember when financial analysis meant endless spreadsheet diving? RAG systems are now helping analysts by pulling relevant data and generating reports automatically. One of our finance readers called it their "personal financial wizard."

🔬 Medical Research

We've seen researchers use RAG systems to analyze decades of medical papers in hours instead of months. It's like having a research team that never sleeps!

What's Next?

Having covered everything from basic chatbots to sophisticated AI platforms, I'm particularly excited about RAG's potential. We're seeing developers create solutions that would have seemed like science fiction just a few years ago.

Whether you're building the next great AI application or just trying to make sense of all these new tools, RAG systems are worth watching. They're making AI not just smarter, but more reliable and useful in the real world.

What do you think about RAG systems? Have you used any RAG-powered tools? Drop a comment below—I'd love to hear your experiences!

P.S. Stay tuned for our upcoming series on practical RAG implementations. We'll be showcasing some of the most innovative tools we've reviewed and sharing tips on how to get started with RAG in your own projects!

In the following list, you will find 19 open-source RAG solutions that can help AI developers craft their next AI apps.

1- RAGFlow

RAGFlow is a self-hosted open-source RAG (Retrieval-Augmented Generation) engine based on deep document understanding.

The platform offers a streamlined RAG workflow for businesses of any scale. It uses LLMs (large language models) to provide truthful question answering, backed by well-founded citations from data in various complex formats.

Features

- Advanced Knowledge Extraction: Handles unstructured data, unlimited tokens.

- Template Chunking: Customizable, explainable templates.

- Grounded Citations: Traceable references, visualized chunking.

- Data Compatibility: Supports diverse formats (Word, Excel, images, web, etc.).

- Automated RAG Workflow: Configurable LLMs, multi-recall, seamless APIs.

2- Dify

Dify is an open-source LLM app development platform. Its intuitive interface combines agentic AI workflow, RAG pipeline, agent capabilities, model management, observability features and more, letting you quickly go from prototype to production.

Features

- Visual Workflows: Build AI workflows easily on a visual canvas.

- Model Integration: Supports hundreds of LLMs, including GPT, Mistral, and OpenAI-compatible models.

- Prompt IDE: Craft, compare, and enhance prompts with extra features.

- RAG Pipeline: Seamlessly handle PDFs, PPTs, and document retrieval.

- AI Agents: Create agents with 50+ built-in tools like Google Search and DALL·E.

- LLMOps: Monitor and optimize performance with production insights.

- APIs: Ready-to-use APIs for effortless business integration.

3- Verba

Verba, also known as The Golden RAGtriever, is a self-hosted open-source application designed to offer an end-to-end, streamlined, and user-friendly interface for Retrieval-Augmented Generation (RAG) out of the box.

Verba Features

- Fully Customizable: Personal assistant powered by RAG for querying local or cloud data.

- Data Interaction: Resolve questions, cross-reference data, and gain insights from knowledge bases.

- RAG Frameworks: Choose frameworks, data types, chunking, retrieval, and LLM providers.

- UnstructuredIO: Import unstructured data.

- Firecrawl: Scrape and crawl URLs.

- PDF, DOCX, CSV/XLSX: Import documents and table data.

- GitHub/GitLab: Import repository files.

- Multi-Modal: Transcribe audio via AssemblyAI.

- Hybrid Search: Combine semantic and keyword search.

- Autocomplete Suggestions: Intelligent query autocompletion.

- Filters: Filter by document type or metadata.

- Custom Metadata: Full control over metadata.

- Async Ingestion: Speed up data processing.

- Planned Enhancements: Advanced querying, reranking, and RAG evaluation tools.

- Token & Sentence-Based: Powered by spaCy.

- Semantic: Group by sentence similarity.

- Recursive: Rule-based chunking.

- File-Specific: Chunk HTML, Markdown, Code, and JSON files.

- Docker Support: Easy deployment with Docker.

- Customizable Frontend: Fully adaptable interface.

- Vector Viewer: 3D data visualization.

- Supports leading RAG libraries for seamless integration.
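If "recursive, rule-based chunking" sounds abstract, the idea can be sketched in a few lines. This is an illustrative take on the general technique, not Verba's actual implementation. The function name, the separator order, and the `max_chars` limit are all assumptions chosen for the example.

```python
def recursive_chunks(text, max_chars=200, separators=("\n\n", "\n", ". ", " ")):
    """Split text on the coarsest separator first, recursing into finer
    separators only for pieces that are still longer than max_chars.

    Note: separators that fall exactly on a chunk boundary are dropped.
    """
    text = text.strip()
    if len(text) <= max_chars:
        return [text] if text else []
    if not separators:
        # No rule left to apply: fall back to a hard cut every max_chars.
        return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]

    head, rest = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(head):
        candidate = f"{current}{head}{piece}" if current else piece
        if len(candidate) <= max_chars:
            current = candidate  # keep packing pieces into the current chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) <= max_chars:
            current = piece
        else:
            # This piece alone is too long: split it with the finer rules.
            chunks.extend(recursive_chunks(piece, max_chars, rest))
            current = ""
    if current:
        chunks.append(current)
    return chunks
```

Paragraph breaks are tried first, then line breaks, then sentences, then single words, so chunks tend to end at natural boundaries instead of mid-sentence.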

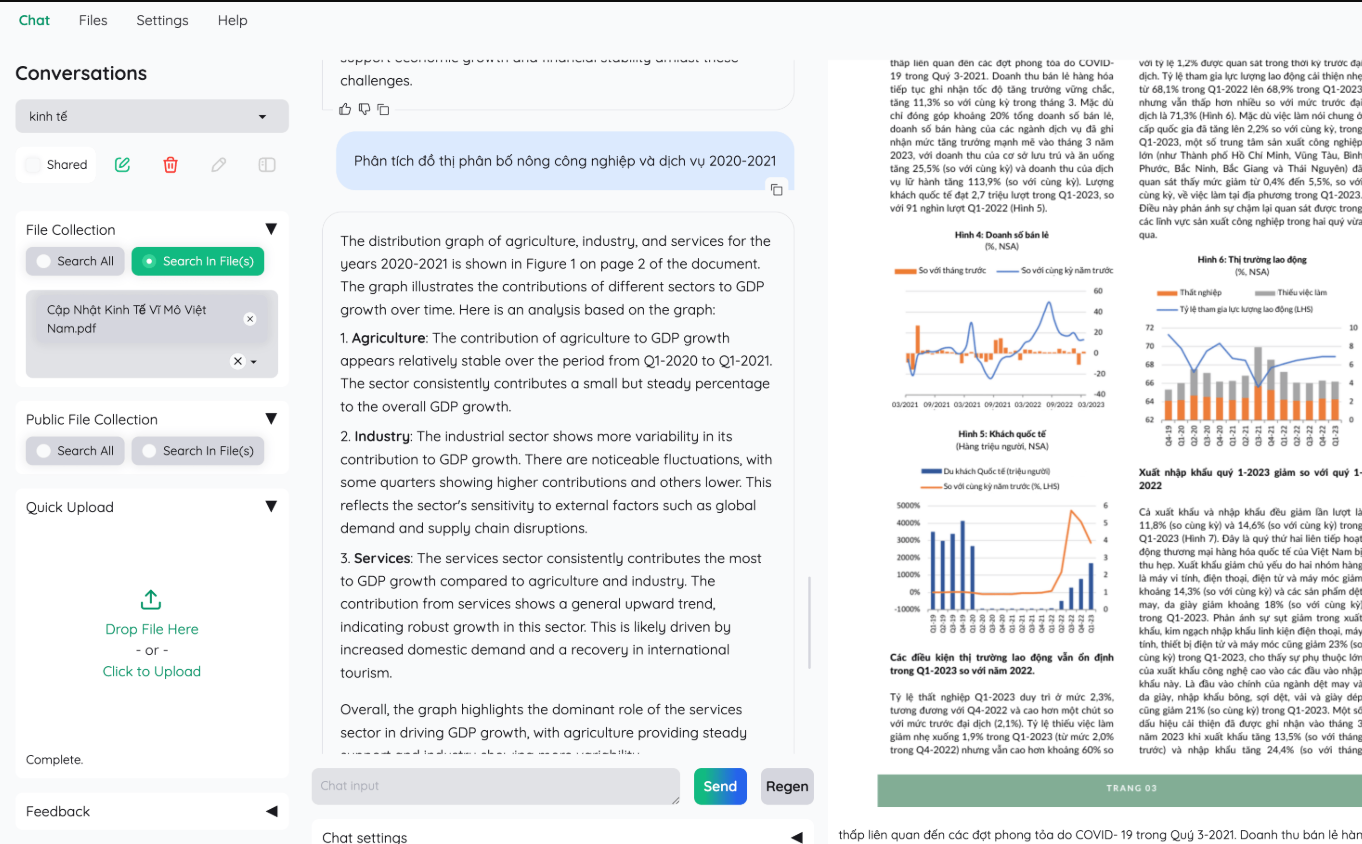

4- kotaemon: The RAG UI

kotaemon is an open-source, clean, and customizable RAG UI, written in Python, for chatting with your documents.

It is built with both end users and developers in mind: end users get a functional RAG UI for doing QA on their documents, and developers get a base for building their own RAG pipeline.

Features

- Minimalistic UI: Clean, user-friendly RAG-based QA interface.

- LLM Compatibility: Supports API providers (OpenAI, Azure) and local models (Ollama, llama-cpp).

- Easy Setup: Quick installation with simple scripts.

- RAG Pipeline Framework: Tools to build and customize document QA pipelines.

- Customizable Gradio UI: Adaptable interface with extensible elements and a Gradio theme option.

- Host Document QA: Multi-user login, private/public file collections, and chat sharing.

- Hybrid RAG Pipeline: Combines full-text and vector retrieval with re-ranking.

- Multi-Modal QA: Supports figures, tables, and multi-modal document parsing.

- Citations & Previews: Detailed citations with PDF highlights and relevance scoring.

- Complex Reasoning: Handles multi-hop questions with ReAct, ReWOO, and agent-based reasoning.

- Configurable Settings: Adjust retrieval and generation processes via the UI.

- Extensibility: Fully customizable framework with GraphRAG indexing example.

5- Cognita

Cognita is an open-source framework for building, customizing, and deploying RAG systems with ease.

It offers a UI for experimentation, supports multiple RAG configurations, and enables scalable deployment for production environments. It is also compatible with Truefoundry for enhanced testing and monitoring.

Features

- Central Repository: Parsers, loaders, embedders, and retrievers in one place.

- Interactive UI: Non-technical users can upload documents and perform Q&A.

- API-Driven: Seamless integration with other systems.

- Advanced Retrievals: Similarity search, query decomposition, document reranking.

- SOTA Embeddings: Supports open-source embeddings and reranking (mixedbread-ai).

- LLM Integration: Works with ollama and other LLMs.

- Incremental Indexing: Efficient batch document ingestion, preventing re-indexing.

- Truefoundry Compatibility: Logging, metrics, and feedback for user queries.
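Incremental indexing is worth a quick illustration. One common way to avoid re-indexing unchanged documents is to remember a content hash per document and only re-embed what changed. The sketch below shows that general idea under stated assumptions. It is not Cognita's API, and the function names and the dictionary-based bookkeeping are hypothetical.

```python
import hashlib


def content_hash(text):
    """Stable fingerprint of a document's content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_incremental_index(documents, indexed_hashes):
    """Decide what needs work since the last indexing run.

    documents:      {doc_id: text} as currently found in the data source
    indexed_hashes: {doc_id: hash} saved after the previous run
    Returns (to_index, to_delete, new_hashes).
    """
    new_hashes = {doc_id: content_hash(text) for doc_id, text in documents.items()}
    # New or modified documents: hash missing or different from last time.
    to_index = [d for d, h in new_hashes.items() if indexed_hashes.get(d) != h]
    # Documents that disappeared from the source should leave the index too.
    to_delete = [d for d in indexed_hashes if d not in new_hashes]
    return to_index, to_delete, new_hashes
```

After each run you would persist `new_hashes` and pass it back in as `indexed_hashes` next time, so only the documents in `to_index` go through parsing and embedding again.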

6- Local RAG

Local RAG is an offline, open-source tool for Retrieval Augmented Generation (RAG) using open-source LLMs—no 3rd parties or data leaving your network. It supports local files, GitHub repos, and websites for data ingestion. Features include streaming responses, conversational memory, and chat export, making it a secure, privacy-friendly solution for personalized AI interactions.

7- Haystack

Haystack is an end-to-end LLM framework that allows you to build applications powered by LLMs, Transformer models, vector search and more.

Whether you want to perform retrieval-augmented generation (RAG), document search, question answering or answer generation, Haystack can orchestrate state-of-the-art embedding models and LLMs into pipelines to build end-to-end NLP applications and solve your use case.

8- fastRAG

fastRAG is a research framework for efficient and optimized retrieval augmented generative pipelines, incorporating state-of-the-art LLMs and Information Retrieval.

fastRAG is designed to empower researchers and developers with a comprehensive tool-set for advancing retrieval augmented generation.

9- R2R (RAG to Riches), the Elasticsearch for RAG

R2R (RAG to Riches), the Elasticsearch for RAG, bridges the gap between experimenting with and deploying state of the art Retrieval-Augmented Generation (RAG) applications.

It's a complete platform that helps you quickly build and launch scalable RAG solutions. Built around a containerized RESTful API, R2R offers multimodal ingestion support, hybrid search, GraphRAG capabilities, user management, and observability features.

Features

- 📁 Multimodal Ingestion: Parse .txt, .pdf, .json, .png, .mp3, and more.

- 🔍 Hybrid Search: Combine semantic and keyword search with reciprocal rank fusion for enhanced relevancy.

- 🔗 Graph RAG: Automatically extract relationships and build knowledge graphs.

- 🗂️ App Management: Efficiently manage documents and users with full authentication.

- 🔭 Observability: Observe and analyze your RAG engine performance.

- 🧩 Configurable: Provision your application using intuitive configuration files.

- 🖥️ Dashboard: An open-source React+Next.js app with optional authentication, to interact with R2R via GUI.
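Reciprocal rank fusion, mentioned in the hybrid search feature above, is simple enough to sketch in a few lines. Each result list (say, one from semantic search and one from keyword search) votes for a document with a score of 1 / (k + rank), and the votes are summed. This is a generic illustration of the technique, not R2R's code. The function name is an assumption, and `k=60` is a commonly used default rather than a value taken from R2R.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one ranking.

    rankings: iterable of lists, each ordered best-first.
    k:        smoothing constant; larger values flatten the rank differences.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for a stable order.
    return sorted(scores, key=lambda d: (-scores[d], d))


if __name__ == "__main__":
    semantic = ["a", "b", "c"]  # hypothetical vector-search results
    keyword = ["b", "d", "a"]   # hypothetical keyword-search results
    print(reciprocal_rank_fusion([semantic, keyword]))
```

Because only ranks are used, the two searches do not need comparable score scales, which is a big part of why this fusion method is popular for hybrid search.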

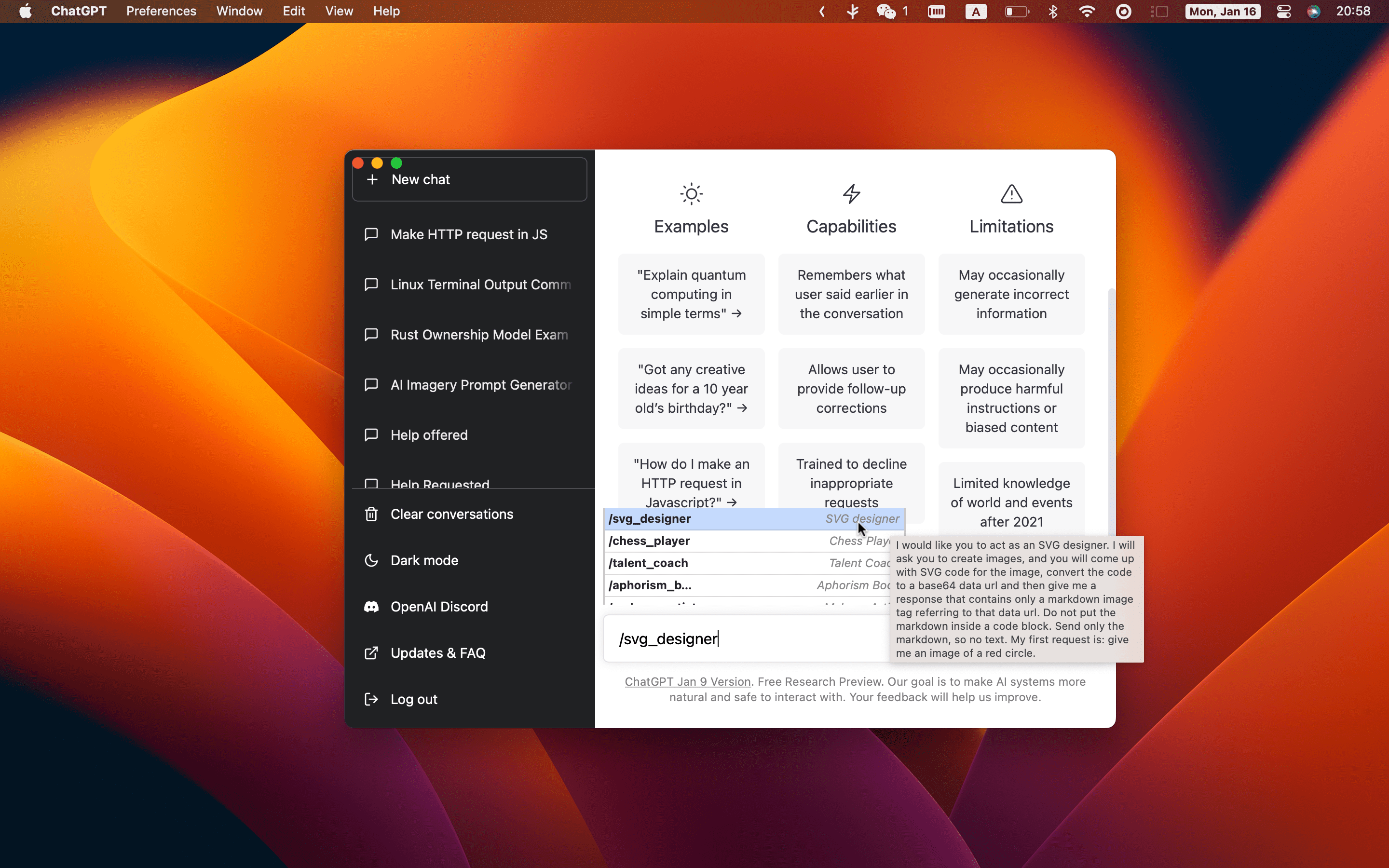

10- Lobe Chat

Lobe Chat is an open-source ChatGPT/LLM UI and framework with a modern design.

It supports speech synthesis, multi-modal input, and an extensible (function call) plugin system.

The app includes a flexible, user-friendly RAG framework that works as an advanced built-in knowledge management system, interacting with files and dozens of external sources.

11- Quivr

Quivr is a self-hosted, opinionated RAG solution for integrating GenAI into your apps.

It lets you focus on your product rather than on the RAG. It integrates easily into existing products, with customization. Any LLM: GPT4, Groq, Llama. Any vector store: PGVector, Faiss. Any files. Any way you want.

Features

- Opinionated RAG: We created a RAG that is opinionated, fast and efficient so you can focus on your product

- LLMs: Quivr works with any LLM; you can use it with OpenAI, Anthropic, Mistral, Gemma, etc.

- Any File: Quivr works with any file; you can use it with PDF, TXT, Markdown, etc., and even add your own parsers.

- Customize your RAG: Quivr allows you to customize your RAG, add internet search, add tools, etc.

- Integrations with Megaparse: Quivr works with Megaparse, so you can ingest your files with Megaparse and use the RAG with Quivr.

12- AnythingLLM

AnythingLLM is a full-stack application where you can use commercial off-the-shelf LLMs or popular open source LLMs and vectorDB solutions to build a private ChatGPT with no compromises that you can run locally as well as host remotely and be able to chat intelligently with any documents you provide it.

While it is a complete LLM solution and ChatGPT alternative, it comes with its own powerful built-in RAG system, which can serve as an example or a base to build apps upon.

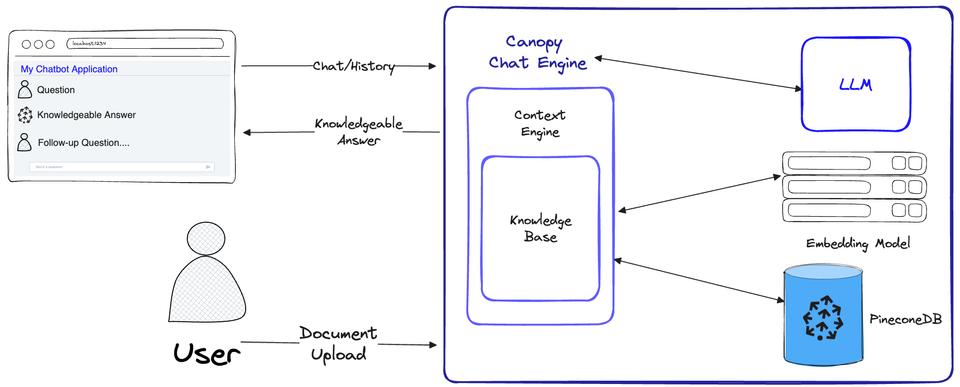

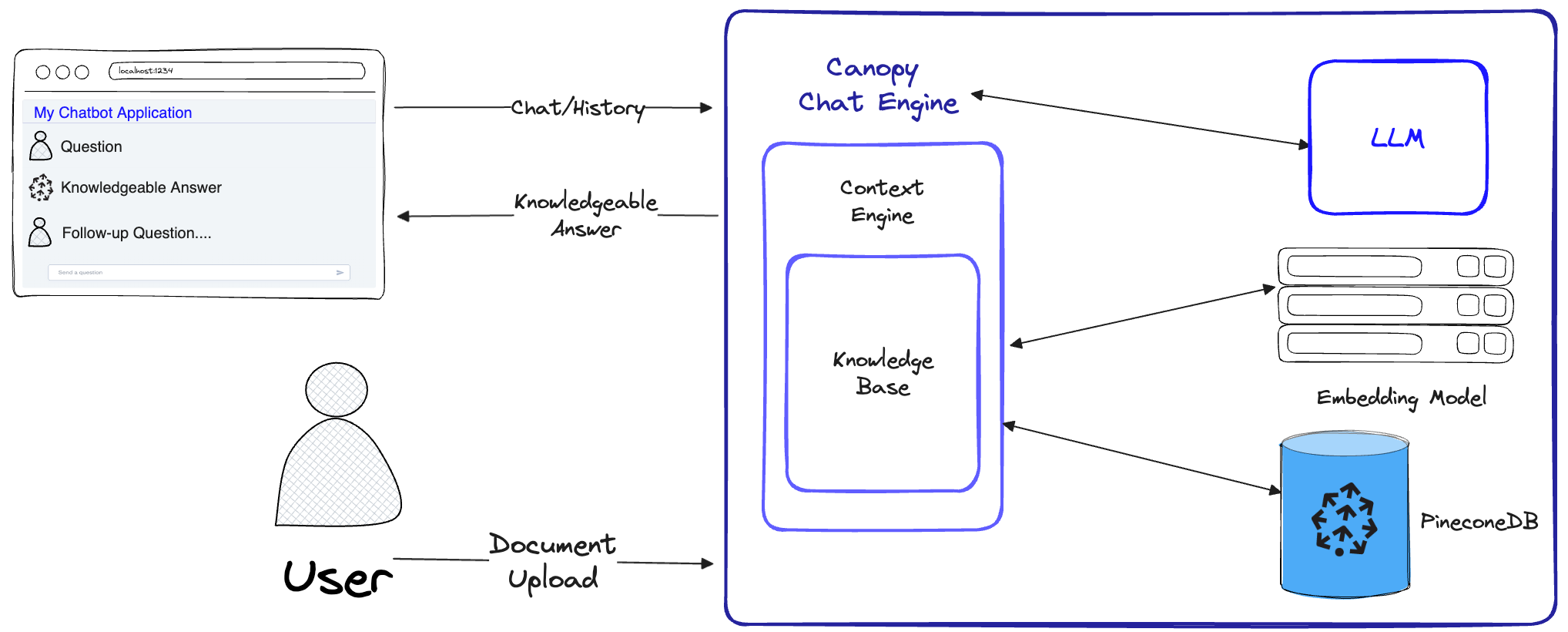

13- Canopy

Canopy is an open-source Retrieval Augmented Generation (RAG) framework and context engine built on top of the Pinecone vector database. Canopy enables you to quickly and easily experiment with and build applications using RAG. Start chatting with your documents or text data with a few simple commands.

Canopy provides a configurable built-in server so you can effortlessly deploy a RAG-powered chat application to your existing chat UI or interface. Or you can build your own, custom RAG application using the Canopy library.

Canopy lets you evaluate your RAG workflow with a CLI based chat tool. With a simple command in the Canopy CLI you can interactively chat with your text data and compare RAG vs. non-RAG workflows side-by-side.

14- RAGs (Streamlit App)

RAGs is a Streamlit app that lets you create a RAG pipeline from a data source using natural language.

15- Mem0

Mem0 ("mem-zero") enhances AI assistants with an intelligent memory layer, enabling personalized, adaptive interactions.

It supports multi-level memory (user, session, agent), cross-platform consistency, and a developer-friendly API.

It uses a hybrid database approach (vector, key-value, graph) to efficiently store, score, and retrieve memories based on relevance and recency. Ideal for chatbots, AI assistants, and autonomous systems, it ensures seamless personalization.
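To give a feel for what scoring memories by relevance and recency can look like, here is a small sketch. It is purely illustrative and is not Mem0's API or its actual scoring formula. The function names, the 70/30 weighting, and the one-day half-life are all assumptions made up for this example.

```python
def memory_score(relevance, age_seconds, half_life_seconds=86_400.0, recency_weight=0.3):
    """Blend a relevance score (0..1) with a recency score that halves
    every half_life_seconds. All parameters here are illustrative."""
    recency = 0.5 ** (age_seconds / half_life_seconds)
    return (1 - recency_weight) * relevance + recency_weight * recency


def top_memories(memories, now, limit=3):
    """memories: list of dicts with 'text', 'relevance', and 'created_at'
    (a timestamp in seconds). Returns the texts of the best-scoring memories."""
    ranked = sorted(
        memories,
        key=lambda m: memory_score(m["relevance"], now - m["created_at"]),
        reverse=True,
    )
    return [m["text"] for m in ranked[:limit]]
```

With weights like these, a slightly less relevant memory from a minute ago can outrank a more relevant one from last week, which is often what you want in a conversational assistant.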

16- FlashRAG

FlashRAG is a Python toolkit for the reproduction and development of Retrieval Augmented Generation (RAG) research. The toolkit includes 36 pre-processed benchmark RAG datasets and 15 state-of-the-art RAG algorithms.

With FlashRAG and provided resources, you can effortlessly reproduce existing SOTA works in the RAG domain or implement your custom RAG processes and components.

Features

- Extensive and Customizable Framework: Includes essential components for RAG scenarios such as retrievers, rerankers, generators, and compressors, allowing for flexible assembly of complex pipelines.

- Comprehensive Benchmark Datasets: A collection of 36 pre-processed RAG benchmark datasets to test and validate RAG models' performances.

- Pre-implemented Advanced RAG Algorithms: Features 15 advanced RAG algorithms with reported results, built on the framework, so you can easily reproduce results under different settings.

- Efficient Preprocessing Stage: Simplifies the RAG workflow preparation by providing various scripts like corpus processing for retrieval, retrieval index building, and pre-retrieval of documents.

- Optimized Execution: The library's efficiency is enhanced with tools like vLLM, FastChat for LLM inference acceleration, and Faiss for vector index management.

- Supports OpenAI Models

17- RAG Me Up

RAG Me Up is a generic framework (server + UIs) that enables you to do RAG on your own dataset easily. At its core are a small, lightweight server and a couple of ways to run UIs that communicate with the server (or you can write your own).

RAG Me Up can run on CPU but is best run on any GPU with at least 16GB of vRAM when using the default instruct model.

18- RAG-FiT

RAG-FiT is a library designed to improve LLMs' ability to use external information by fine-tuning models on specially created RAG-augmented datasets. The library helps create the training data for a given RAG technique, train models easily using parameter-efficient fine-tuning (PEFT), and measure the improved performance using various RAG-specific metrics.

The library is modular, and workflows are customizable using configuration files. It was formerly called RAG Foundry.

19- RAG orchestration framework

This is a free and open-source RAG orchestration framework for AI developers.

Interested in more open-source AI tools?

We've got you covered here!