Visual Tracking in Action: Exploring 11 Open-Source Libraries and Their Real-World Applications

Visual tracking technology has revolutionized numerous fields, enabling applications to detect, follow, and analyze objects within video streams in real-time. From augmented reality and robotics to surveillance and sports analytics, visual tracking is a cornerstone of modern computer vision applications.

In this post, we will delve into the benefits and practical use-cases of open-source visual tracking libraries and projects, showcasing how they can be integrated into various applications to enhance functionality and performance.

Benefits of Open-source Visual Tracking Libraries

1. Cost-Effective Development

Open-source visual tracking libraries provide powerful tools and frameworks without the need for expensive licenses, making advanced tracking technology accessible to individual developers, startups, and small businesses.

2. Community Support and Collaboration

These libraries benefit from active communities of developers who contribute to continuous improvements, bug fixes, and feature enhancements. Collaboration within these communities accelerates innovation and problem-solving.

3. Flexibility and Customization

Open-source projects offer the flexibility to modify and customize the code to meet specific requirements. This adaptability is crucial for developers who need to tailor tracking solutions to unique application needs.

4. Rapid Prototyping

With readily available libraries, developers can quickly prototype and test their ideas, significantly reducing the time-to-market for new products and features. This rapid development cycle fosters innovation and competitive advantage.

5. Integration with Existing Ecosystems

Many visual tracking libraries are designed to integrate seamlessly with other popular tools and frameworks in the computer vision and machine learning ecosystems, such as OpenCV, TensorFlow, and PyTorch.

Use-Cases of Visual Tracking Libraries

- Augmented Reality (AR)

Visual tracking libraries are essential in AR applications for tracking real-world objects and overlaying digital content. This creates immersive experiences in gaming, education, and retail.

- Robotics and Automation

In robotics, visual tracking is used for navigation, object manipulation, and interaction with dynamic environments. This enhances the capabilities of autonomous systems in manufacturing, delivery, and service robots.

- Surveillance and Security

Security systems utilize visual tracking to monitor and analyze movements within a specified area. This helps in detecting suspicious activities, ensuring safety, and automating surveillance tasks.

- Sports Analytics

Visual tracking technologies are employed in sports to track players and objects, providing detailed performance analysis and insights. This data is invaluable for coaching, broadcasting, and fan engagement.

- Healthcare and Biomedical Applications

In healthcare, visual tracking aids in monitoring patient movements, analyzing medical procedures, and conducting research in areas like neurology and physiotherapy.

- Human-Computer Interaction (HCI)

Visual tracking enhances HCI by enabling gesture recognition and tracking user movements, which can be applied in interactive installations, virtual reality (VR), and advanced user interfaces.

By integrating open-source visual tracking libraries into their projects, developers can harness the power of real-time object tracking to create innovative solutions across a wide range of industries. This post will explore some of the top open-source visual tracking libraries and projects, highlighting their unique features and real-world applications.

1. OpenTracker

OpenTracker is an open-source repository for visual tracking. It is written in C++, runs at high speed, is easy to use, and is easy to deploy on embedded systems.

It works on Windows 10, Linux (Ubuntu), macOS (Sierra), NVIDIA, Raspberry Pi 3, and several other Linux systems.

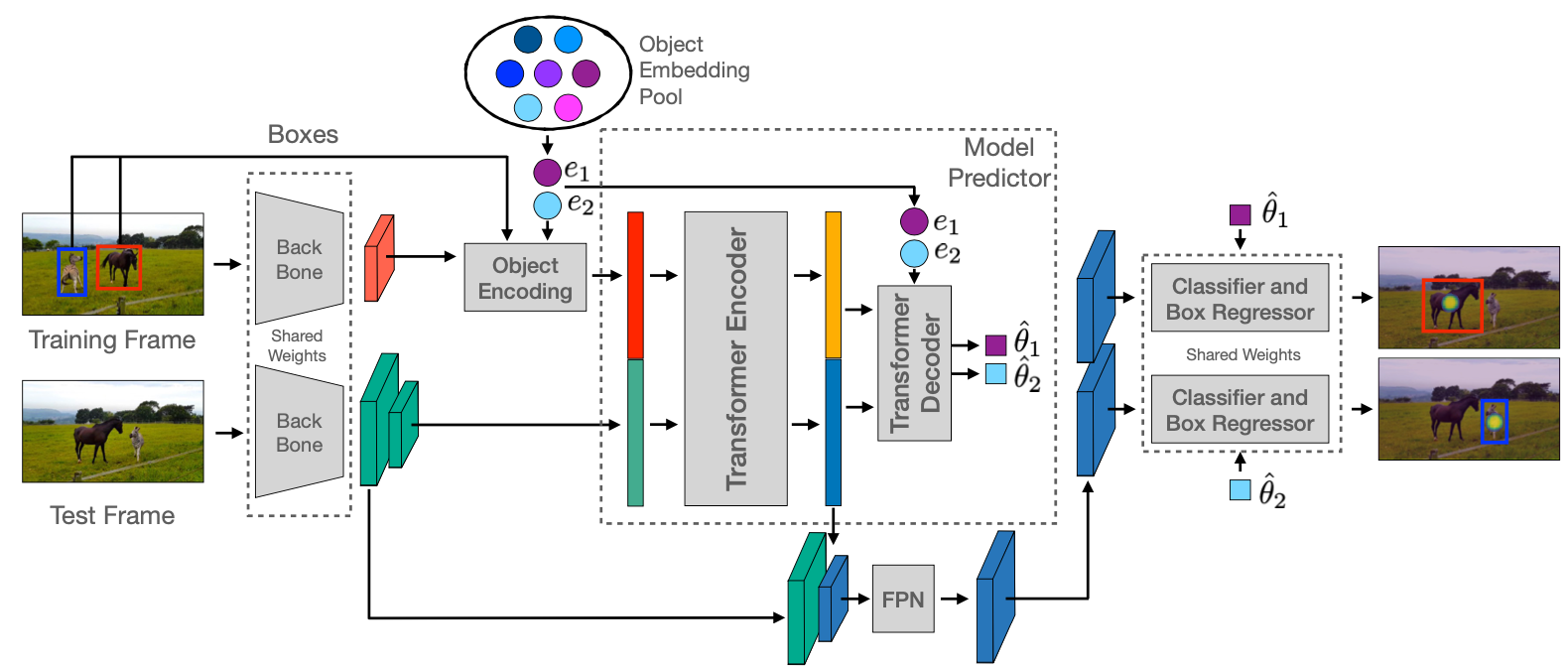

2. PyTracking

This is a general Python framework for visual object tracking and video object segmentation, based on PyTorch.

It includes dozens of trackers, an advanced tracking library for video and animated objects, training frameworks, and full model zoo support.

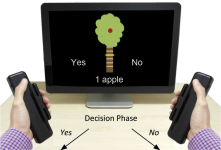

3. PyGaze

PyGaze is an open-source Python library designed for eye-tracking experiments and visual behavior research. Developed to provide a versatile and user-friendly platform, PyGaze simplifies the implementation of eye-tracking studies by offering a range of tools for experiment creation, data collection, and analysis.

It supports various eye-tracking hardware, making it accessible to researchers and developers across different fields. PyGaze is highly customizable and integrates seamlessly with other Python libraries, allowing for sophisticated experimental designs and precise control over visual stimuli.

This flexibility and ease of use make PyGaze a valuable resource for advancing research in psychology, neuroscience, human-computer interaction, and more.

4. ViSP

ViSP (Visual Servoing Platform) is an open-source software library designed for real-time visual tracking and control applications. Developed by Inria, it provides a robust set of tools for visual servoing, which involves controlling robotic systems using visual information. ViSP supports a wide range of tracking techniques, including model-based and model-free methods, and can handle various types of visual data from 2D images to 3D point clouds.

Features

Key features include the ability to track objects, estimate pose, and control robotic movements in real-time. ViSP is highly modular, allowing developers to customize and extend its capabilities to suit specific research and industrial applications. Its versatility and performance make it ideal for robotics, augmented reality, and computer vision projects.
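To make the idea of visual servoing concrete, here is a minimal sketch of the classic image-based control law v = -gain * L⁺ * e, where e is the difference between the current and desired image feature. It is not ViSP's API. The function name `ibvs_step` and all numeric values are hypothetical, and the sketch assumes a single point feature in normalized image coordinates, a camera that only translates along x and y, and a constant, known depth.

```python
def ibvs_step(feature, target, depth, gain, dt):
    """One iteration of image-based visual servoing for a single image point.

    Assumptions (illustrative only): the camera translates along x/y only and
    the point depth is constant, so the interaction matrix reduces to
    L = -(1/depth) * I and its pseudo-inverse to L^+ = -depth * I.
    """
    # Feature error e = s - s*
    error = (feature[0] - target[0], feature[1] - target[1])
    # Control law v = -gain * L^+ * e = gain * depth * e
    velocity = (gain * depth * error[0], gain * depth * error[1])
    # Feature motion induced by that camera velocity: s_dot = L * v
    feature_rate = (-velocity[0] / depth, -velocity[1] / depth)
    # Explicit Euler integration over one control period
    new_feature = (feature[0] + dt * feature_rate[0],
                   feature[1] + dt * feature_rate[1])
    return new_feature, velocity


# Hypothetical setup: drive the point to the image centre.
feature = (0.2, -0.1)
target = (0.0, 0.0)
for _ in range(100):
    feature, camera_velocity = ibvs_step(feature, target, depth=1.5, gain=1.0, dt=0.05)
```

Under these assumptions the error shrinks by a constant factor (1 - gain * dt) at every step, which is the exponential decay this control law is designed to produce. A real system would use the full interaction matrix and an estimated depth.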

5. MMTracking

MMTracking is an open-source video perception toolbox based on PyTorch. It is part of the OpenMMLab project. All operations run on GPUs, offering fast training and inference speeds.

It unifies versatile video perception tasks: video object detection, multiple object tracking, single object tracking, and video instance segmentation.

It also decomposes the video perception framework into separate components, so that a customized method can be built by combining different modules.

New features

- Built on a new training engine, unifying interfaces of datasets, models, evaluation, and visualization.

- Supports additional methods such as StrongSORT for MOT, Mask2Former for VIS, and PrDiMP for SOT.

6. Object Tracking

This project focuses on enabling the tracking of objects in video streams, offering a collection of scripts and tools that facilitate the understanding and application of these tracking techniques. It is designed to be accessible and useful for both researchers and developers who are working on computer vision and machine learning projects.

The repository includes well-documented code, examples, and resources to help users integrate object tracking capabilities into their own projects, making it a valuable asset for advancing research and development in the field of visual tracking.

7. 3D Multi-Object Tracker

This is a free and open-source project that enables you to track multiple objects in a 3D scene.

Features

- Fast: the code currently reaches 700 FPS using only the CPU (not including detection and data operations) and can track all KITTI validation sequences in several seconds.

- Supports online, near-online, and global implementations.

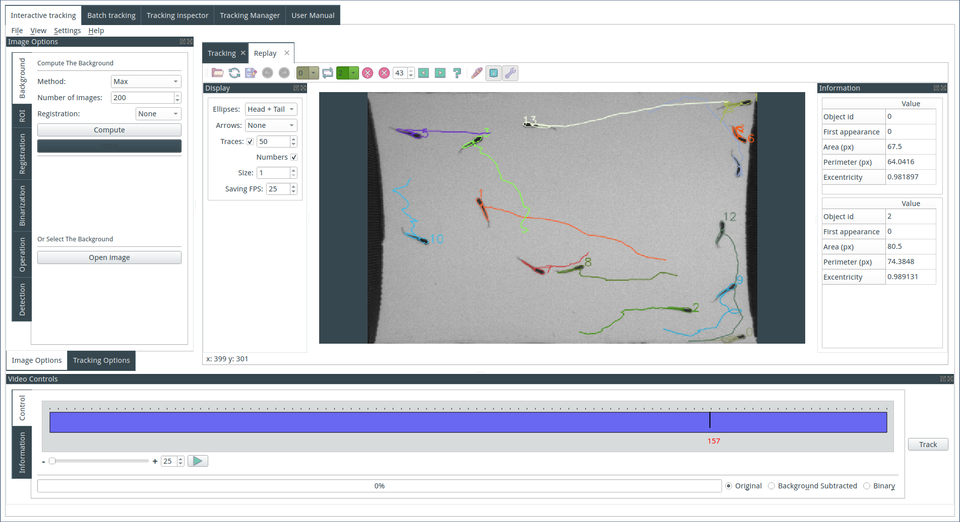

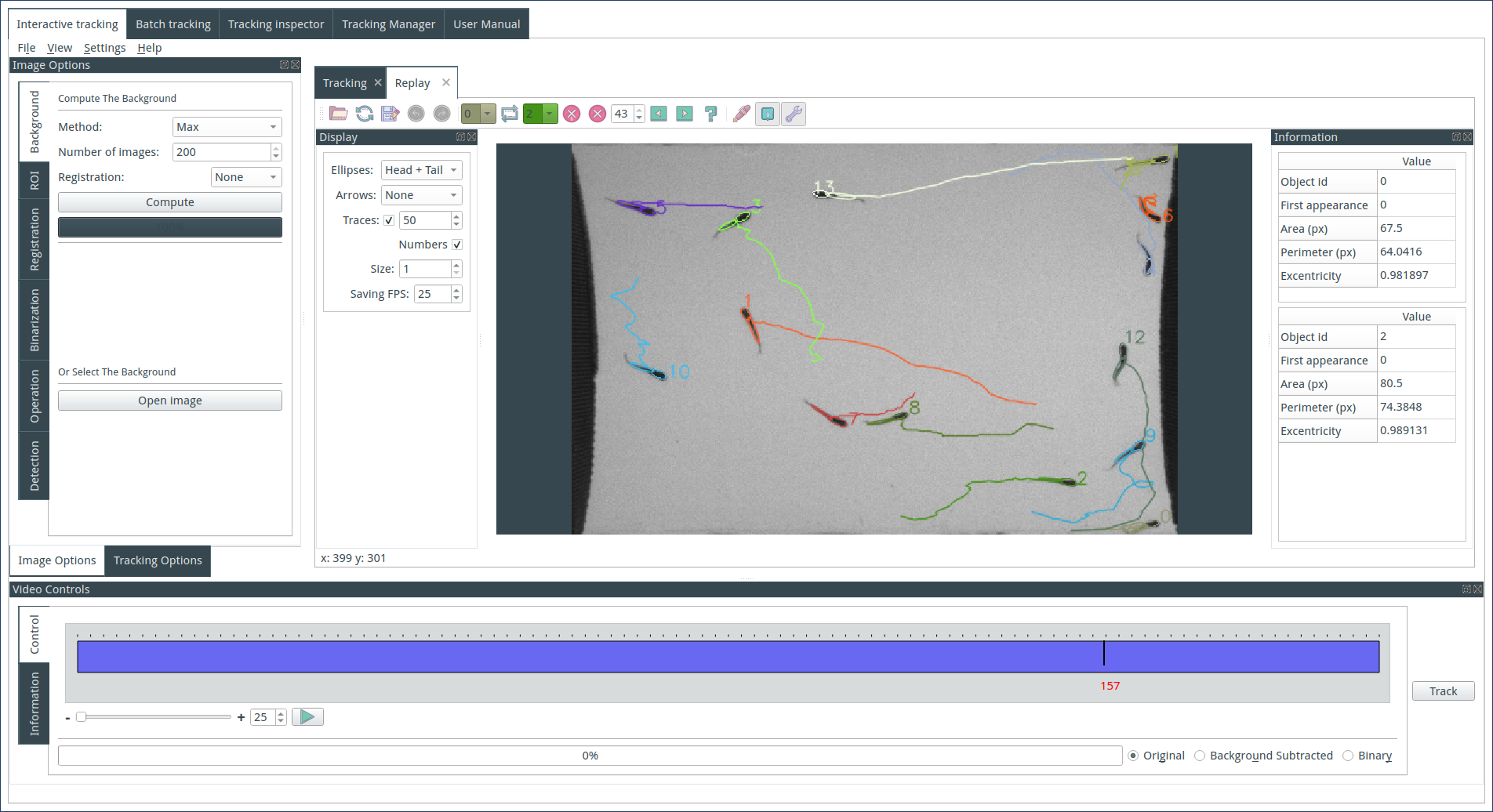

8. FastTrack

FastTrack is a free and open-source desktop tracking software. It offers easy installation, a user-friendly interface, and high performance. The software is compatible with Linux, macOS, and Windows operating systems. Additionally, a public API is available, allowing users to embed the core tracking functionality into any C++/Qt project.

FastTrack offers two main features:

- Automatic tracking algorithm: This algorithm can efficiently detect and track objects while preserving their identities throughout the entire video recording.

- Manual review of tracking: Users can quickly review and correct tracking errors, so that the final tracks can be made fully accurate.
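Preserving identities across frames comes down to matching each known object to one detection in the next frame. The sketch below illustrates that idea with a simple greedy nearest-neighbour match. It is not FastTrack's actual algorithm, and the function name `associate` and the `max_distance` value are hypothetical.

```python
import math


def associate(tracks, detections, max_distance=50.0):
    """Greedily match existing tracks to new detections by distance.

    tracks: dict mapping track id -> last known (x, y) position.
    detections: list of (x, y) positions found in the current frame.
    Returns a dict mapping track id -> index of the matched detection.
    """
    # Every candidate pairing, closest first.
    pairs = sorted(
        (math.dist(position, detection), track_id, det_index)
        for track_id, position in tracks.items()
        for det_index, detection in enumerate(detections)
    )
    assignment, used = {}, set()
    for distance, track_id, det_index in pairs:
        if distance > max_distance:
            break  # all remaining pairs are even farther apart
        if track_id in assignment or det_index in used:
            continue  # each track and each detection is matched at most once
        assignment[track_id] = det_index
        used.add(det_index)
    return assignment


# Hypothetical example: two objects that each moved slightly between frames.
tracks = {1: (0.0, 0.0), 2: (10.0, 10.0)}
detections = [(11.0, 9.0), (1.0, 1.0)]
matches = associate(tracks, detections)
```

Tracks left without a match (because of an occlusion, for example) are where a manual review step is most useful. A globally optimal assignment, such as the Hungarian method, is a common alternative to the greedy pass used here.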

9. trackR - Multi-object tracking with R

trackR is an object tracker for R based on OpenCV. It provides a graphical interface, described by its authors as easy to use, that lets users perform multi-object video tracking in a range of conditions while maintaining individual identities.

trackR uses RGB-channel-specific background subtraction to segment objects in a video. A background image can be provided by the user or can be computed by trackR automatically in most situations. Overlapping objects are then separated using cross-entropy clustering, an automated classification method that provides good computing performance while being able to handle various types of object shapes. Most of the tracking parameters can be automatically estimated by trackR or can be set manually by the user.

trackR also allows users to exclude parts of the image by using masks that can be easily created and customized directly within the app.
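The channel-specific background subtraction and masking described above can be sketched in a few lines. trackR itself is an R package, so the Python below is only an illustration of the general technique, not its code. The function name `segment_foreground`, the threshold values, and the tiny test image are all hypothetical.

```python
def segment_foreground(frame, background, thresholds=(30, 30, 30), mask=None):
    """Return a binary map (1 = object pixel, 0 = background pixel).

    frame, background: H x W nested lists of (R, G, B) tuples.
    thresholds: per-channel difference thresholds (illustrative values).
    mask: optional H x W nested list; 0 marks pixels to exclude.
    """
    foreground = []
    for y, (frame_row, bg_row) in enumerate(zip(frame, background)):
        out_row = []
        for x, (pixel, bg_pixel) in enumerate(zip(frame_row, bg_row)):
            excluded = mask is not None and mask[y][x] == 0
            # A pixel counts as foreground if any channel departs from the
            # background by more than that channel's own threshold.
            differs = any(
                abs(value - bg_value) > threshold
                for value, bg_value, threshold in zip(pixel, bg_pixel, thresholds)
            )
            out_row.append(1 if differs and not excluded else 0)
        foreground.append(out_row)
    return foreground


# Hypothetical 3x3 grey background with one bluish object pixel in the centre.
background = [[(100, 100, 100)] * 3 for _ in range(3)]
frame = [row[:] for row in background]
frame[1][1] = (100, 100, 200)

detected = segment_foreground(frame, background)

# Excluding the centre pixel with a mask suppresses the detection.
mask = [[1, 1, 1], [1, 0, 1], [1, 1, 1]]
masked = segment_foreground(frame, background, mask=mask)
```

Keeping a separate threshold per channel lets the segmentation stay sensitive to objects that differ from the background in only one colour channel.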

10. Open-source free Object Detection and Tracking Project

This is an open-source project for object detection and tracking.

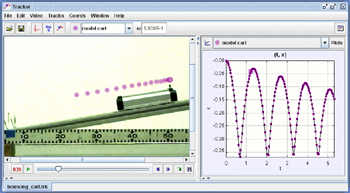

11. Physlets.org's Tracker

The Tracker software from Physlets.org is a powerful tool designed for video analysis and motion tracking. It enables users to analyze videos frame-by-frame, track objects, measure distances, velocities, and accelerations, and perform detailed quantitative analysis of motion phenomena.

Tracker is widely used in education and research for physics, engineering, biology, and other sciences due to its intuitive interface and robust features. Tracker is free to use and is built on the Open Source Physics (OSP) Java framework; it is distributed under the GNU General Public License, so its source code can be modified and redistributed under that license. It remains a valuable tool for visualizing and analyzing motion in educational and scientific contexts.
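Velocities and accelerations of the kind mentioned above can be estimated from frame-by-frame positions with finite differences. The sketch below uses central differences on hypothetical free-fall data. It illustrates the arithmetic only and is not taken from Tracker's code; the frame rate and positions are made up.

```python
def central_differences(values, dt):
    """Estimate the time derivative at interior samples.

    Uses (values[i + 1] - values[i - 1]) / (2 * dt), so the result has two
    fewer entries than the input (the first and last samples are dropped).
    """
    return [
        (values[i + 1] - values[i - 1]) / (2 * dt)
        for i in range(1, len(values) - 1)
    ]


# Hypothetical data: an object in free fall filmed at 30 frames per second,
# with positions in metres following y = 0.5 * g * t^2.
fps = 30.0
dt = 1.0 / fps
g = 9.81
positions = [0.5 * g * (i * dt) ** 2 for i in range(7)]

velocities = central_differences(positions, dt)       # m/s, frames 1..5
accelerations = central_differences(velocities, dt)   # m/s^2, frames 2..4
```

For this idealized quadratic motion the estimated acceleration matches g up to floating-point rounding. Real tracked positions are noisy, so differentiating them twice amplifies the noise and some smoothing is usually needed.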