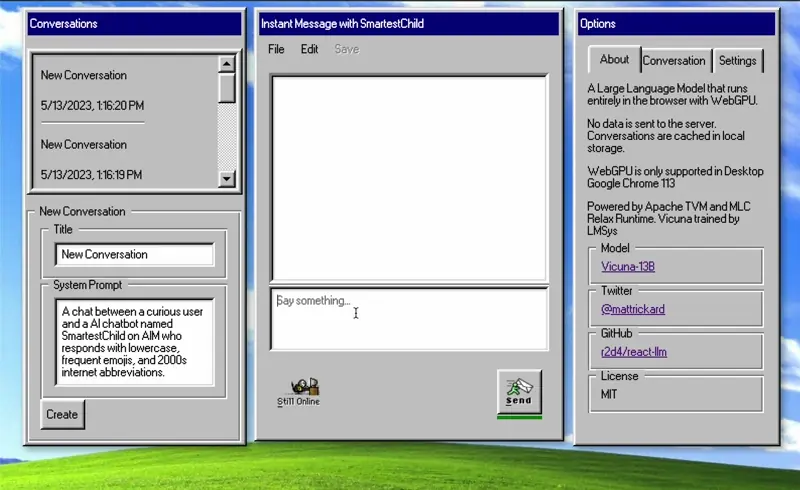

React-LLM: Open-Source Browser-Based LLM for React Developers with Retro Win 98 Style

Discover React-LLM, an open-source library that enables LLMs in the browser using WebGPU. Learn how to integrate it into React and Next.js for private, serverless AI-powered applications.

As a React developer, you’re probably always on the lookout for tools that can make your applications smarter, faster, and more efficient. What if I told you there’s a way to run large language models (LLMs) directly in the browser—no servers, no APIs, just pure client-side magic?

Enter React-LLM, an open-source library that brings the power of LLMs to your React and Next.js apps using WebGPU. Let’s dive into why this is a game-changer and how you can start using it today.

What is React-LLM?

React-LLM is a lightweight library that allows you to run LLMs directly in the browser. It leverages WebGPU, a modern browser API for graphics and general-purpose GPU computation, to handle the heavy lifting of machine learning computations. This means you can perform LLM inference entirely on the client side, without needing to send data to a server or rely on external APIs.

For React developers, this opens up a world of possibilities for building AI-powered features that are private, secure, and cost-effective.

Why Should You Care?

As a React developer, especially one working with Next.js, you’re likely building applications that need to be fast, scalable, and user-friendly. Here’s why React-LLM matters:

- In-Browser LLM Inference: No more waiting for server responses or dealing with API rate limits. Everything happens locally in the browser.

- Privacy by Design: Since all the processing happens on the client side, sensitive data never leaves the user’s device. This is a huge win for privacy and compliance.

- Cost Efficiency: There are no per-token API fees and no inference servers to maintain. Inference runs on the user’s own hardware, so the cost shifts to downloading the model weights and to the user’s GPU.

- Seamless Integration: React-LLM is designed with React developers in mind. It fits naturally into your existing workflow, whether you’re using React or Next.js.

Key Features

- WebGPU Support: WebGPU is the next-gen graphics API that makes high-performance machine learning in the browser possible. React-LLM is optimized to take full advantage of it.

- Open Source: The library is fully open-source, so you can customize it to fit your needs or contribute to its development.

- React-Friendly: Comes with hooks and components that make it easy to add LLM functionality to your React apps.

- Model Flexibility: Supports a variety of models, so you can choose the one that best fits your use case.

What Can You Build with React-LLM?

The possibilities are endless, but here are a few ideas to get your creative juices flowing:

Chatbots:

Build chatbots that process and respond to user input entirely in the browser.
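As a rough sketch of the state side of such a chatbot, the conversation can be kept in a plain reducer and flattened into a prompt before each generation call. Nothing below comes from React-LLM: the message shape, the action names, and the `chatReducer` and `toPrompt` helpers are all hypothetical, and the prompt layout is just one simple convention.

```javascript
// Hypothetical message shape: { role: 'user' | 'assistant', content: string }.
// This is plain state logic that could be passed to React's useReducer.
function chatReducer(messages, action) {
  switch (action.type) {
    case 'user_message':
      return [...messages, { role: 'user', content: action.text }];
    case 'assistant_message':
      return [...messages, { role: 'assistant', content: action.text }];
    case 'reset':
      return [];
    default:
      return messages; // unknown actions leave the history untouched
  }
}

// Flatten the history into one prompt string, ending with an open
// "assistant:" line for the model to continue.
function toPrompt(messages) {
  const lines = messages.map((m) => `${m.role}: ${m.content}`);
  return [...lines, 'assistant:'].join('\n');
}
```

In a component, `useReducer(chatReducer, [])` would hold the history, and the string from `toPrompt` would be what gets handed to the model.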

AI-Assisted Features:

Add AI-powered text suggestions, autocomplete, or content generation to your apps.

Education Tools:

Create apps that offer local language translation, tutoring, or interactive learning experiences.

Data Analysis:

Let users perform natural language queries on datasets without sending data to a server.
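One way to picture this is a helper that embeds a small slice of the dataset in the prompt, so the data stays in the page and only the local model sees it. The sketch below is an assumption about how you might do it, not part of React-LLM: `buildDatasetPrompt`, its parameters, and the prompt wording are hypothetical, and values containing commas are not escaped.

```javascript
// Build a prompt that places a small dataset (as CSV text) next to the question.
// `rows` is an array of flat objects; `maxRows` caps how many rows enter the prompt.
function buildDatasetPrompt(rows, question, maxRows = 20) {
  if (rows.length === 0) {
    throw new Error('Dataset is empty');
  }
  // Column order is taken from the first row.
  const columns = Object.keys(rows[0]);
  const header = columns.join(',');
  const body = rows
    .slice(0, maxRows)
    .map((row) => columns.map((c) => String(row[c] ?? '')).join(','))
    .join('\n');
  return `Answer using only this data:\n${header}\n${body}\n\nQuestion: ${question}`;
}
```

Capping the row count matters because browser-sized models tend to have limited context windows, so large datasets would need filtering or summarizing first.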

Using React-LLM with Next.js

If you’re a Next.js developer, React-LLM fits into a Next.js app with one constraint: inference needs WebGPU, which exists only in the browser, so any component that uses React-LLM has to run on the client. Next.js can still server-render (SSR) the rest of the page while the AI work is offloaded to the user’s device. This suits apps whose AI features should not depend on backend infrastructure.
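In practice, Next.js may execute component code on the server while rendering, where browser globals do not exist. In the App Router, a component that uses hooks also needs the `'use client'` directive at the top of its file. A small guard like the one below can help avoid touching browser-only APIs too early; `isBrowser` is a hypothetical helper name, not something React-LLM provides.

```javascript
// Returns true only in a browser-like environment.
// `scope` defaults to globalThis; it is a parameter so the check is easy to test.
function isBrowser(scope = globalThis) {
  return (
    typeof scope.window !== 'undefined' &&
    typeof scope.document !== 'undefined'
  );
}
```

A typical place to call it is inside `useEffect` or an event handler, right before any model is loaded, since those only run on the client anyway and the guard then acts as a second line of defense.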

Getting Started with React-LLM

Ready to give it a try? Here’s how to set up React-LLM in your React or Next.js project.

Step 1: Install the Library

```shell
npm install react-llm
```

Step 2: Ensure WebGPU Support

Make sure your target browser supports WebGPU (e.g., Chrome or Edge 113 and later on desktop). React-LLM relies on WebGPU for its performance, so this is a must. Browsers without WebGPU need a fallback message or a server-side alternative.
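Browser version alone does not guarantee a usable GPU, so it can be worth checking at runtime. The sketch below uses the standard `navigator.gpu.requestAdapter()` call, which resolves to `null` when no suitable adapter is available. The function name `hasWebGPUAdapter` is hypothetical, and this check is independent of React-LLM itself.

```javascript
// Resolves to true when WebGPU is exposed and an adapter can be obtained.
// `nav` defaults to the global navigator; it is a parameter so the check can be tested.
async function hasWebGPUAdapter(nav = globalThis.navigator) {
  if (!nav || !nav.gpu) {
    return false; // WebGPU is not exposed in this environment
  }
  try {
    const adapter = await nav.gpu.requestAdapter();
    return adapter != null; // null means no suitable GPU adapter was found
  } catch {
    return false; // treat any failure as "not supported"
  }
}
```

Calling this before loading a model lets you show a clear "WebGPU not available" message instead of failing partway through a large download.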

Step 3: Import and Use

Here’s a simple example to get you started:

```jsx
import { useLLM } from 'react-llm';

function App() {
  const { generateText, isLoading } = useLLM({
    model: 'gpt-neo', // Choose your preferred model
  });

  const handleGenerate = async () => {
    const response = await generateText("What is WebGPU?");
    console.log(response);
  };

  return (
    <div>
      <button onClick={handleGenerate} disabled={isLoading}>
        {isLoading ? 'Generating...' : 'Generate Text'}
      </button>
    </div>
  );
}

export default App;
```
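The example above only logs the response and does not handle failures, which can happen when the model fails to load or the GPU runs out of memory. A minimal sketch of a wrapper follows, assuming `generateText` takes a prompt string and returns a promise of the generated text as in the example; `safeGenerate` and its result shape are hypothetical and not part of the library.

```javascript
// Wraps any promise-returning text generator so the caller never has to catch.
// `generate` stands in for the `generateText` function from the example above.
async function safeGenerate(generate, prompt) {
  const trimmed = prompt.trim();
  if (trimmed === '') {
    return { ok: false, error: 'Prompt is empty' }; // skip the model call entirely
  }
  try {
    const text = await generate(trimmed);
    return { ok: true, text };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return { ok: false, error: message };
  }
}
```

Inside `handleGenerate`, the result could be stored with `useState` so the component can render either the generated text or the error message instead of writing to the console.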

Step 4: Run Your App

Fire up your React or Next.js app, and you’re ready to go. You’ll see React-LLM in action, generating text directly in the browser.

Why This is a Big Deal

React-LLM represents a shift in how we think about AI in web development. For React and Next.js developers, it means you can build applications that are not only smarter but also more private and cost-effective.

Instead of relying on token-based billing or external APIs, you can harness the power of WebGPU to run LLMs locally.

This aligns perfectly with the growing trend of decentralized, privacy-first applications.

Final Thoughts

React-LLM is more than just a library—it’s a glimpse into the future of web development. By bringing LLMs to the browser, it empowers React and Next.js developers to create innovative, AI-powered applications without the usual headaches of server-side infrastructure. Whether you’re building a chatbot, an AI-assisted tool, or just experimenting with browser-based LLMs, React-LLM is a tool you’ll want in your arsenal.

So, what are you waiting for? Dive into the React-LLM GitHub repo, give it a spin, and see how it can transform your next project. Happy coding! 🚀