10 Free Apps to Run Your Own AI LLMs on Windows Offline – Create Your Own Self-Hosted Local ChatGPT Alternative


Ever thought about having your own AI-powered large language model (LLM) running directly on your Windows machine? Now’s the perfect time to get started. Imagine setting up a self-hosted ChatGPT that’s fully customized for your needs, whether it’s content generation, code writing, project management, marketing, or healthcare tasks.

The good news is, there are several free, open-source tools that allow you to run AI models offline on Windows, whether you’re using Windows 10 or the latest Windows 11 (support for older releases varies by app). In fact, some of these apps also support macOS.

Let’s break down why running LLMs locally is beneficial and take a look at some of the best tools to make it happen.

Why Run LLMs Locally on Your Windows Machine?

Running large language models locally on your computer comes with several perks. First and foremost, you don’t have to rely on the cloud, which means all of your data stays on your machine.

This can be especially important if you’re working in fields that require strict privacy, such as healthcare or project management. Keeping your data local also allows you to avoid any cloud service fees or the risk of a server outage.

Another advantage is speed. With an LLM running on your own machine, requests skip the network round trip and any queueing on cloud servers, so responses can start sooner, provided your hardware is powerful enough for the model you choose.

Plus, local models give you the flexibility to customize them however you want.

Whether you’re working on personalized content generation, marketing strategies, or even coding automation, running LLMs offline gives you complete control over how the models are fine-tuned to your needs.

Lastly, from a financial perspective, running LLMs on your own machine eliminates the ongoing costs associated with cloud-based AI services, making it a more budget-friendly option in the long run.

Whether you're a solo developer or managing a small business, it’s a smart way to get AI power without breaking the bank.

Now, let’s look at some free tools you can use to run LLMs locally on your Windows machine—and in many cases, on macOS too.

1- GPT4All

GPT4All is a free project that enables you to run 1000+ Large Language Models locally, without worrying about your privacy.

It allows you to install and use dozens of free models for content generation, code writing, and testing.

The app also supports the OpenAI API, among other LLM API services.

GPT4All runs on Windows, Linux, and macOS. We have been using it on macOS and Linux (Manjaro), where it has proven to be reliable and fast.

2- Jan

Jan is an open-source alternative to ChatGPT that runs 100% offline on your computer. It supports multiple inference engines, including llama.cpp and TensorRT-LLM.

Because it works on all popular platforms, we use it on both Intel Macs and Apple silicon (M1, M2, and M3) MacBooks.

Jan also works flawlessly on our Linux Manjaro setup with NVIDIA GPUs enabled.

3- OfflineAI

OfflineAI is an artificial intelligence that operates offline and uses machine learning to perform various tasks based on the code provided. It is built using two powerful AI models by Mistral AI.

OfflineAI Features

- Uses advanced machine learning techniques to generate responses

- Operates offline for privacy and convenience

- Built using the powerful Phi-3-mini-4k-instruct.Q4_0 model trained by Microsoft

The default model requires only 2GB storage and 4GB RAM.

The downside of this solution is that you have to know Python to be able to run the models.
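The 2GB storage figure above can be sanity-checked with some quick arithmetic. The sketch below assumes the Q4_0 layout used by llama.cpp-style GGUF files (blocks of 32 weights stored in 18 bytes, so about 4.5 bits per weight) and Phi-3-mini's roughly 3.8 billion parameters. The function name is only illustrative, and real files differ slightly because some tensors are kept at higher precision.

```python
def estimate_quantized_size_gb(n_params, bytes_per_block=18, weights_per_block=32):
    """Rough on-disk size in GB (10**9 bytes) for a block-quantized model.

    Defaults assume Q4_0: each block of 32 weights takes 16 bytes of
    4-bit values plus a 2-byte scale, i.e. 18 bytes per block.
    """
    return n_params * bytes_per_block / weights_per_block / 1e9


PHI3_MINI_PARAMS = 3.8e9  # approximate parameter count of Phi-3-mini

size_gb = estimate_quantized_size_gb(PHI3_MINI_PARAMS)
print(f"Estimated Q4_0 size: {size_gb:.1f} GB")
```

The estimate lands at a little over 2 GB, which matches the storage requirement. The RAM requirement is higher because the weights have to be loaded alongside the context cache and the runtime itself.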

4- Follamac

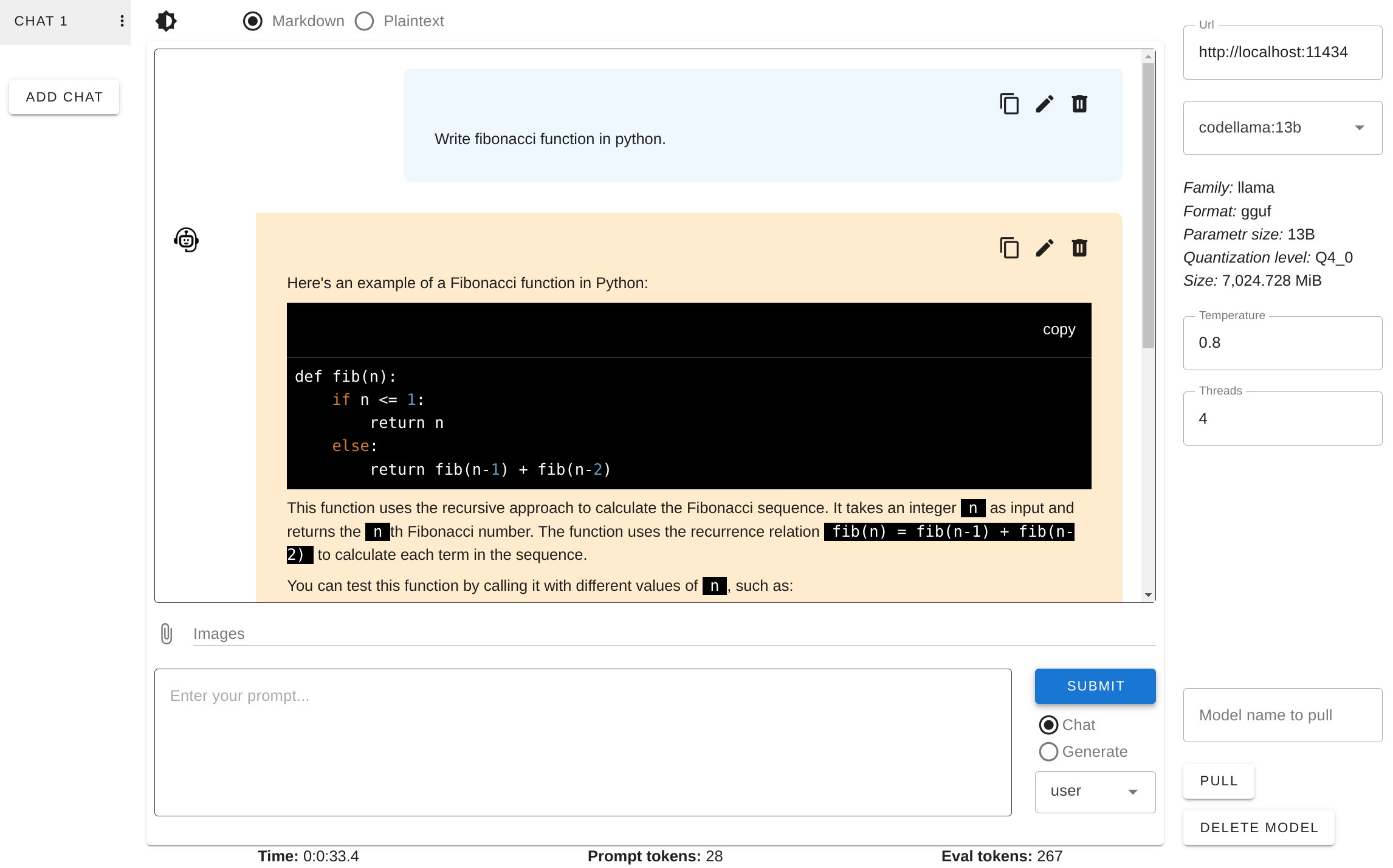

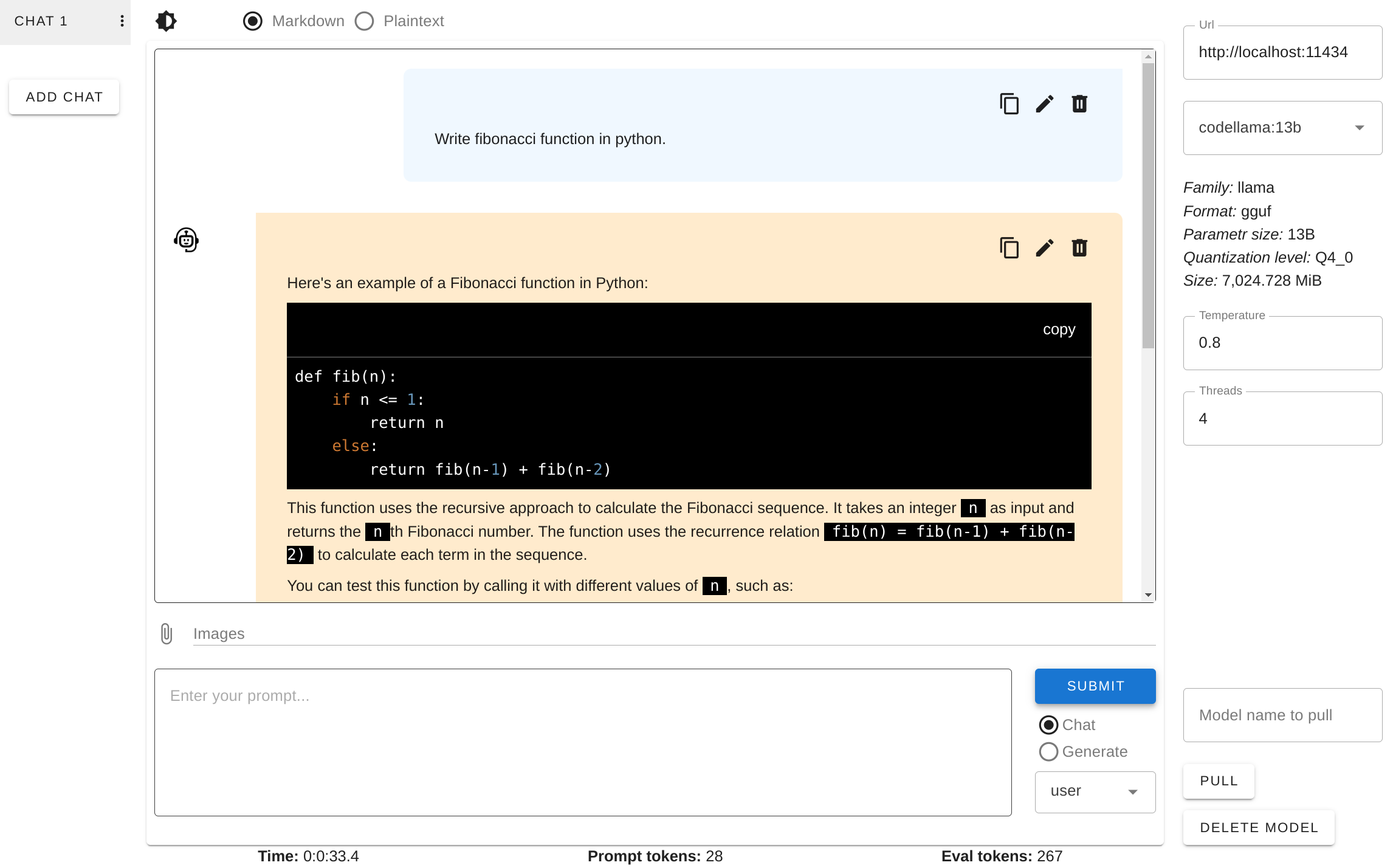

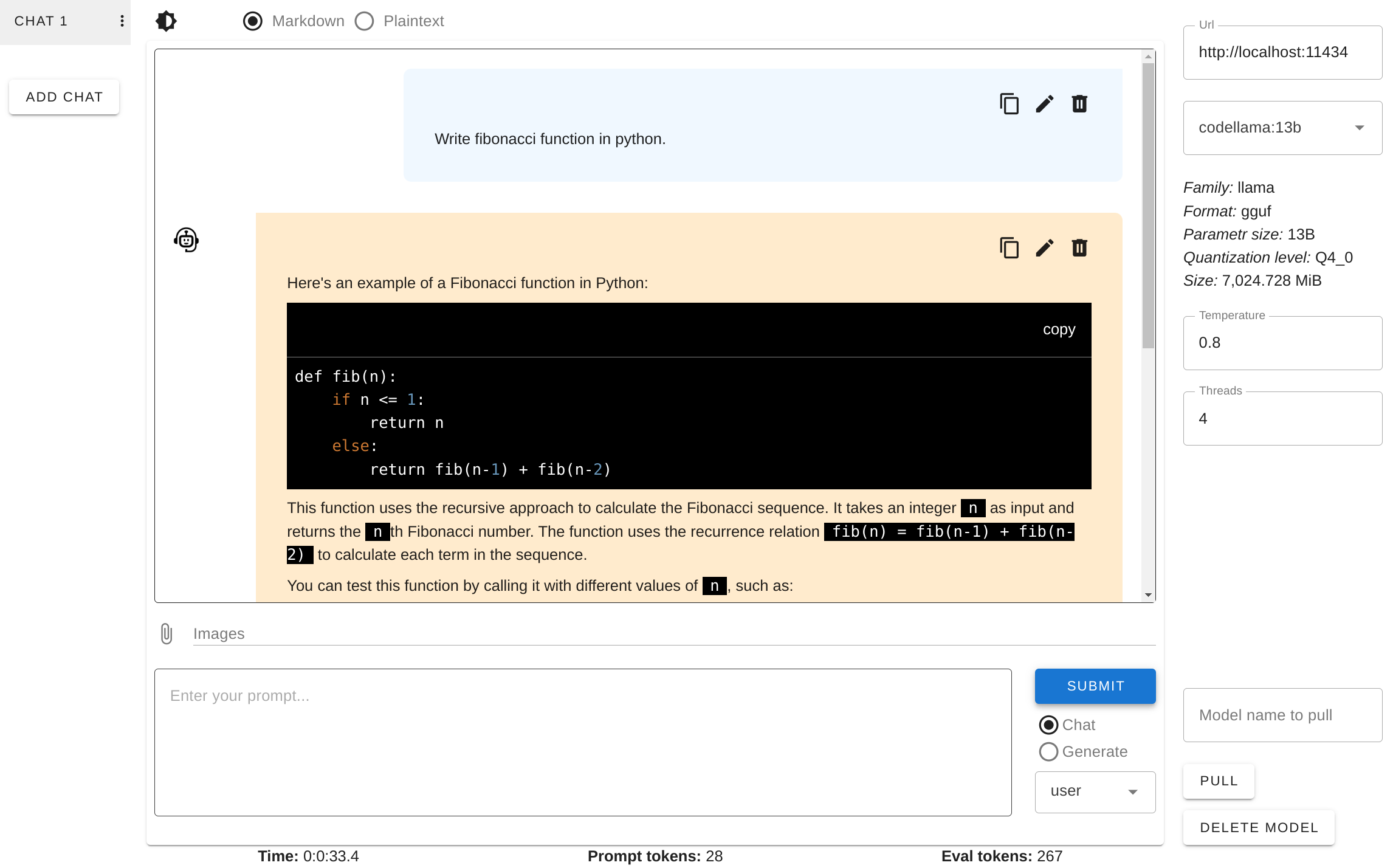

Follamac is a free desktop application that provides a convenient and easy way to work with Ollama and large language models (LLMs).
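Since Follamac is a front end for Ollama, it can help to see roughly what talking to Ollama directly looks like. The sketch below assumes Ollama is running on its default local address (`http://localhost:11434`) and that a model has already been pulled. The model name `llama3` and the helper names are placeholders, not anything Follamac itself exposes.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default Ollama address


def collect_stream(lines):
    """Join the text fragments from Ollama's newline-delimited JSON stream."""
    parts = []
    for line in lines:
        if not line.strip():
            continue  # skip blank keep-alive lines
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final chunk is marked with "done": true
    return "".join(parts)


def generate(prompt, model="llama3"):
    """Send a prompt to a locally running Ollama server and return the full reply."""
    payload = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL,
        data=payload,  # supplying data makes this a POST request
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=300) as response:
        return collect_stream(response)
```

With Ollama running, `print(generate("Why is the sky blue?"))` would print the model's answer. Nothing leaves your machine, because the request only goes to localhost.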

5- Local.ai

Local.ai is an open-source platform that enables users to run AI models locally on their own machines without relying on cloud services.

It supports a variety of machine learning models and frameworks, offering privacy-focused, offline AI capabilities.

Local.ai is an ideal solution for developers who need to process data securely. It lets users build and test AI models in a local environment, giving them greater control and flexibility over their projects.

6- CodeProject.AI Server

CodeProject.AI Server is an open-source AI server that provides computer vision and machine learning services. It is designed to run locally, offering features like object detection, facial recognition, and image classification.

With easy integration into various applications, it helps developers add AI-powered capabilities without the need for cloud-based AI solutions.

It works on Windows, macOS, Linux (Ubuntu, Debian), Raspberry Pi arm64, Docker and supports VS Code.

Its features include:

- Generative AI: LLMs for text generation, text-to-image, and multi-modal LLMs (e.g. "tell me what's in this picture")

- Object Detection in images, including using custom models

- Face detection and recognition in images

- Recognition of the scene shown in an image

- Remove the background from an image

- Blur the background of an image

- Enhance the resolution of an image

- Pull out the most important sentences in text to generate a text summary

- Perform sentiment analysis on text

- Sound Classification

7- LM Studio

LM Studio is a desktop app for discovering, downloading, and running language models on your own computer. It offers a built-in chat interface and a local server, all while keeping your data on your machine. It’s designed for developers and curious users who want full control over which models they run and how they use them.

Beyond Windows, LM Studio also supports Linux and Apple silicon Macs (M1, M2, and M3).

Supported model families include Llama, Mistral, Phi, Gemma 2, DeepSeek, and Qwen.

LM Studio Features include:

- Run LLMs on your laptop, entirely offline

- Chat with your local documents (new in 0.3)

- Use models through the in-app Chat UI or an OpenAI compatible local server

- Download any compatible model files from Hugging Face 🤗 repositories

- Discover new & noteworthy LLMs right inside the app's Discover page
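The OpenAI-compatible local server mentioned in the list means you can call a local model with the same request shape used for OpenAI's chat API. Here is a minimal sketch using only the Python standard library. It assumes the server is enabled in LM Studio and listening on `http://localhost:1234/v1` (the usual default, but check the app). The model name `local-model` and the helper names are placeholders.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumed LM Studio local server address


def build_chat_request(prompt, model="local-model", base_url=BASE_URL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,  # placeholder; use the identifier shown in LM Studio
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the request to the local server and return the assistant's reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a model loaded and the server started, `print(ask("Write a haiku about Windows."))` would return the reply. Because the request format matches OpenAI's, existing OpenAI client code can often be pointed at the local address instead.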

8- Transformers

🤗 Transformers provides thousands of pretrained models to perform tasks on different modalities such as text, vision, and audio.

These models can be applied on:

- 📝 Text, for tasks like text classification, information extraction, question answering, summarization, translation, and text generation, in over 100 languages.

- 🖼️ Images, for tasks like image classification, object detection, and segmentation.

- 🗣️ Audio, for tasks like speech recognition and audio classification.

Transformer models can also perform tasks on several modalities combined, such as table question answering, optical character recognition, information extraction from scanned documents, video classification, and visual question answering.

It is an ideal solution for developers who want to build AI apps.
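To give a feel for how little code a basic task takes, here is a small sketch built on the library's `pipeline` helper. It assumes `transformers` and a backend such as PyTorch are installed. The first run downloads a default model, after which it runs from the local cache. The function names `classify` and `summarize` are just for illustration.

```python
def classify(texts, task="sentiment-analysis"):
    """Run a Transformers pipeline over a list of strings."""
    # Imported lazily so this module loads even before the library is installed.
    from transformers import pipeline

    classifier = pipeline(task)  # downloads a default model on first use
    return classifier(texts)


def summarize(results):
    """Turn pipeline output (dicts with 'label' and 'score') into short strings."""
    return [f"{item['label']} ({item['score']:.2f})" for item in results]
```

Calling `summarize(classify(["I love running models locally."]))` would return one short label-and-score string per input. The same pattern works for other tasks by changing the `task` argument.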

9- Alpaca.cpp

With Alpaca.cpp, you can run a fast ChatGPT-like model locally on your device. It combines the LLaMA foundation model with an open reproduction of Stanford Alpaca, a fine-tuning of the base model to obey instructions (akin to the RLHF used to train ChatGPT), and a set of modifications to llama.cpp that add a chat interface.

10- Hugging Face Optimum

🤗 Optimum is an extension of 🤗 Transformers and Diffusers, providing a set of optimization tools enabling maximum efficiency to train and run models on targeted hardware, while keeping things easy to use.