Why Data Geeks Love These 16 Free AI Scraping Solutions

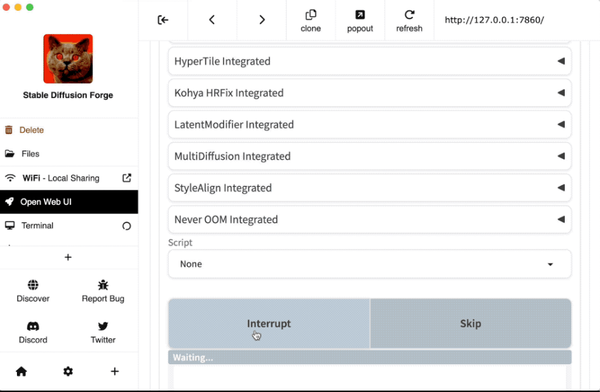

AI Scraping Made Easy: 16 Free, Open-Source Solutions with LLM Support


What Really is AI/LLM Scraping?

Imagine web scraping on steroids. AI-powered scraping isn't just about pulling data; it's about understanding it. Unlike traditional scraping tools, which extract information mechanically through hard-coded rules such as CSS selectors or XPath expressions, AI scraping uses machine learning models, typically large language models (LLMs), to interpret web content dynamically, almost like a human would.

The Game-Changing Benefits of AI Scraping 🚀

Why Traditional Scraping is Becoming Obsolete

Traditional web scraping is like using a flip phone in the smartphone era: it's rigid, brittle, and painfully limited.

AI scraping, by contrast, is the smartphone of data extraction.

Key Advantages:

- Dynamic Web Intelligence: Adapts to changing website structures without hand-maintained selectors

- Precision Extraction: Pulls exactly what you need, nothing more, nothing less

- Contextual Understanding: Interprets content with human-like intelligence

- Flexible Output: Generates clean, structured data in multiple formats

- Enterprise-Grade Scalability: Handles large-scale crawling workloads

Real-World Use Cases That Will Blow Your Mind

Where AI Scraping Transforms Industries:

- Data Science: Build pristine datasets in minutes

- Machine Learning: Source high-quality training data

- Business Intelligence: Track market trends and competitor strategies

- Content Aggregation: Create dynamic, always-updated platforms

- Academic Research: Collect comprehensive, nuanced datasets

Traditional vs. AI Scraping: The Ultimate Showdown

Old School Approach

- Pros: Simple, straightforward

- Cons: Breaks whenever a site's layout changes, limited understanding, constant manual maintenance

AI-Powered Approach

- Pros: Adaptive, intelligent, scalable

- Cons: Initial complexity, LLM usage costs, and some required ML, LLM, and RAG knowledge

Pro Tip for Data Enthusiasts 💡

Think of AI scraping like having a brilliant research assistant who never sleeps. It doesn't just collect data—it understands context, adapts to challenges, and delivers insights.

Who Wins with AI Scraping?

- Data Analysts: Pristine, ready-to-analyze datasets

- Data Engineers: Simplified, robust extraction workflows

- Data Scientists: Unlimited potential for innovative research

The Future is Adaptive

Traditional scraping is showing its age. AI scraping is the future: intelligent, flexible, and powerful. It's not just about collecting data; it's about understanding the story behind the numbers.

Ready to Level Up Your Data Game?

Embrace AI scraping. Transform your data collection from a mundane task to an intelligent, strategic operation.

Below is a list of the best open-source projects that make AI scraping easy.

1- LLM Scraper

LLM Scraper is a TypeScript library that allows you to extract structured data from any webpage using LLMs.

Features

- Supports local (Ollama, GGUF), OpenAI, and Vercel AI SDK providers

- Schemas defined with Zod

- Full type-safety with TypeScript

- Based on Playwright framework

- Streaming objects

- NEW: Code generation

- Supports 4 formatting modes:

  - `html` for loading raw HTML

  - `markdown` for loading markdown

  - `text` for loading extracted text (using Readability.js)

  - `image` for loading a screenshot (multi-modal only)

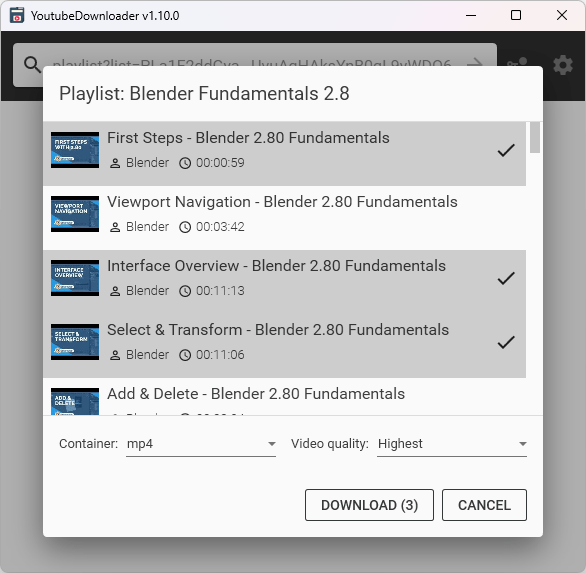
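LLM Scraper's `text` mode relies on Readability.js, a JavaScript library that isolates a page's main content. As a rough illustration of the simpler underlying step, here is a Python sketch (not LLM Scraper's code) that uses the standard library's `html.parser` to strip markup and drop `<script>`/`<style>` content before the text is sent to a model. Readability does considerably more, such as scoring and keeping only the main article body, so treat this as a simplification.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of script-like tags."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a tag listed in SKIP
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text only when we are not inside <script>/<style>.
        if self._skip_depth == 0 and data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    """Return the page's visible text fragments joined by single spaces."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)
```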

2- 🔥🕷️ Crawl4AI: LLM Friendly Web Crawler & Scraper

Crawl4AI is a user-friendly tool that makes web crawling and data extraction simple and efficient, perfect for AI and LLM applications. It's free, fast, and built for modern needs.

Crawl4AI makes web scraping simple, powerful, and AI-ready! 🚀

Features

- 💸 100% Free & Open-Source: Enjoy top-tier crawling without spending a dime.

- 🚀 Lightning-Fast Performance: Outperforms many paid services for quick and reliable scraping.

- 🤖 Built for AI & LLMs: Outputs data in JSON, cleaned HTML, or markdown formats tailored for AI apps.

- 🌐 Multi-Browser Support: Works seamlessly with Chromium, Firefox, and WebKit.

- 🌍 Crawl Multiple URLs: Handle multiple websites at once for efficient data extraction.

- 🎨 All-Media Support: Extract images, audio, videos, and all media tags effortlessly.

- 🔗 Link Extraction: Grabs all internal and external links for deeper insights.

- 📚 Metadata Retrieval: Captures page titles, descriptions, and other essential metadata.

- 🔄 Customization Ready: Add hooks for authentication, headers, or custom page modifications.

- 🕵️ Be Anonymous: Customize user-agent settings to stay under the radar.

- 🖼️ Screenshots: Take snapshots of pages with robust error handling.

- 📜 Custom JavaScript: Execute your own scripts before crawling for tailored results.

- 📊 Structured Output: Generate well-organized data using advanced JSON strategies.

- 🧠 Smart Extraction: Use LLMs, clustering, regex, or CSS selectors for accurate data scraping.

- 🔒 Proxy & Authentication: Access protected content with secure proxy support.

- 🔄 Session Management: Handle multi-page navigation with ease.

- 🖼️ Better Images: Supports lazy-loading and responsive images.

- 🕰️ Dynamic Content Handling: Manages delayed loading for interactive pages.

- 🔑 AI-Friendly Headers: Pass custom headers for LLM-specific interactions.

- 📝 Precision Extraction: Refine results using keywords or instructions.

- ⏱️ Flexible Settings: Adjust timeouts and delays for smoother crawling.

- 🖼️ iframe Support: Extract content within iframes for deeper data insights.
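Link extraction of the kind listed above boils down to collecting `href` attributes, resolving them against the page URL, and sorting them into internal and external links. The standard-library sketch below shows that idea; it is not Crawl4AI's implementation, and it assumes a link is internal only when its hostname matches the page's hostname exactly (so subdomains count as external).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Gather raw href values from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def split_links(html: str, page_url: str) -> tuple[list[str], list[str]]:
    """Resolve links against page_url and split them into (internal, external)."""
    collector = LinkCollector()
    collector.feed(html)
    page_host = urlparse(page_url).hostname
    internal: list[str] = []
    external: list[str] = []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # turns "/about" into a full URL
        parsed = urlparse(absolute)
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, javascript:, tel:, and similar links
        if parsed.hostname == page_host:
            internal.append(absolute)
        else:
            external.append(absolute)
    return internal, external
```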

3- Firecrawl

Firecrawl is an advanced API service designed to power AI applications with clean, structured data from any website. It effortlessly scrapes and crawls accessible subpages, converting them into clean markdown or structured formats without requiring a sitemap.

Firecrawl eliminates the complexity of web scraping, enabling developers and businesses to extract high-quality data with ease.

It supports diverse frameworks, making it adaptable for AI-powered apps, automation, and data-driven projects. Perfect for creating streamlined, scalable solutions!

Features

- Powerful Data Extraction: Retrieves and processes data from any URL into usable formats.

- Broad SDK Support: Works with Python, Node.js, Go, and Rust SDKs for seamless integration.

- LLM Framework Compatibility: Supports LangChain (Python/JS), LlamaIndex, Crew.ai, Vectorize, and more.

- Low-Code Frameworks: Compatible with Dify, LangFlow, Flowise AI, Cargo, and Pipedream for simplified workflows.

- Automation Tools: Integrated with Zapier and Pabbly Connect for effortless task automation.

4- LLM-Scraper

The LLM Fetcher is a Python script that automates the task of fetching user-level order details from the Amazon website. By leveraging Selenium, the script navigates to the order history page, extracts key order details from each historical order, and saves them in a structured format.

Features

- Automated navigation to the Amazon order history page.

- Extraction of order details such as order number, product names, quantities, prices, and delivery status.

- Saving of order details in raw HTML files with a structured naming convention.

- Structured storage of order details in JSON or CSV format.

- Secure handling of user authentication credentials.

- Robust handling of unexpected events and website changes.
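Structured storage in JSON or CSV can be sketched with Python's standard library. The `OrderItem` fields below are hypothetical, modeled on the order details listed above, and this is not the project's code; the functions return strings so you can write them to whichever files you like.

```python
import csv
import io
import json
from dataclasses import asdict, dataclass, fields


@dataclass
class OrderItem:
    # Hypothetical record shape based on the order details listed above.
    order_number: str
    product_name: str
    quantity: int
    price: float
    delivery_status: str


def to_json(items: list[OrderItem]) -> str:
    """Serialize records as a pretty-printed JSON array of objects."""
    return json.dumps([asdict(item) for item in items], indent=2)


def to_csv(items: list[OrderItem]) -> str:
    """Serialize records as CSV with a header row taken from the dataclass fields."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(OrderItem)])
    writer.writeheader()
    for item in items:
        writer.writerow(asdict(item))
    return buffer.getvalue()
```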

5- Scrapy LLM

Scrapy LLM provides LLM integration for Scrapy as a middleware. Extract any data from the web using your own predefined schema and your preferred language model.

Features

- Extract data from web page text using a language model.

- Define a schema for the extracted data using pydantic models.

- Validate the extracted data against the defined schema.

- Seamlessly integrate with any API compatible with the OpenAI API specification.

- Use any language model deployed on an API compatible with the OpenAI API specification.
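Scrapy LLM validates model output with pydantic models. To show the underlying idea without third-party dependencies, the sketch below parses a model's JSON reply and checks it field by field against a dataclass schema, rejecting replies that don't match. The `Product` schema and `validate` helper are hypothetical; this is not Scrapy LLM's API, and pydantic handles far more cases (nested models, optional fields, richer coercion rules).

```python
import json
from dataclasses import dataclass, fields
from typing import Type, TypeVar

T = TypeVar("T")


@dataclass
class Product:
    # Hypothetical target schema; Scrapy LLM itself expects a pydantic model.
    name: str
    price: float
    in_stock: bool


def validate(reply: str, schema: Type[T]) -> T:
    """Parse an LLM's JSON reply and check each field against a dataclass schema.

    Assumes every field is annotated with a plain class such as str, int, float, or bool.
    """
    data = json.loads(reply)  # malformed JSON raises json.JSONDecodeError, a ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    values = {}
    for spec in fields(schema):
        if spec.name not in data:
            raise ValueError(f"missing field: {spec.name}")
        value = data[spec.name]
        expected = spec.type
        # JSON has a single number type, so accept ints where floats are expected.
        if expected is float and type(value) is int:
            value = float(value)
        # bool is a subclass of int in Python, so reject it explicitly elsewhere.
        if isinstance(value, bool) and expected is not bool:
            raise ValueError(f"{spec.name}: expected {expected.__name__}, got bool")
        if not isinstance(value, expected):
            raise ValueError(f"{spec.name}: expected {expected.__name__}")
        values[spec.name] = value
    return schema(**values)
```

In a real pipeline, a rejected reply would typically trigger a retry or be logged rather than silently dropped.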

6- Crawlee

Crawlee is a web scraping and browser automation library for Python (it also exists for JavaScript/TypeScript), built for reliable crawlers. It helps you extract data for AI, LLM, RAG, and GPT workflows, and it downloads HTML, PDFs, images, and other files.

With proxy rotation and human-like crawling, it helps crawlers fly under the radar of modern bot protections. Crawlee offers crawlers based on BeautifulSoup, Playwright, and plain HTTP, along with flexible, rich configuration and persistent storage for seamless data extraction.
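Proxy rotation, in its simplest round-robin form, can be sketched as below. This is not Crawlee's API (Crawlee ships its own proxy configuration); the `ProxyRotator` class and the proxy URLs are hypothetical placeholders, and the sketch only decides which proxy the next request should use, skipping proxies that have been marked as failing.

```python
from itertools import cycle


class ProxyRotator:
    """Round-robin proxy selection that skips proxies marked as bad."""

    def __init__(self, proxies: list[str]) -> None:
        if not proxies:
            raise ValueError("need at least one proxy")
        self._proxies = list(proxies)
        self._bad: set[str] = set()
        self._cycle = cycle(self._proxies)

    def next_proxy(self) -> str:
        # Look at each proxy at most once per call, so this never loops forever.
        for _ in range(len(self._proxies)):
            proxy = next(self._cycle)
            if proxy not in self._bad:
                return proxy
        raise RuntimeError("all proxies are marked bad")

    def mark_bad(self, proxy: str) -> None:
        """Stop handing out a proxy, e.g. after it is blocked or times out."""
        self._bad.add(proxy)
```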

7- Parsera

Parsera is a free and open-source lightweight Python library for scraping websites with LLMs.

8- CyberScraper 2077

CyberScraper 2077 is a cutting-edge web scraping tool powered by OpenAI, Gemini, and LocalLLM models. Designed for precise and efficient data extraction, it caters to data analysts, tech enthusiasts, and anyone needing streamlined access to online information.

Features

- 🤖 AI-Powered Extraction: Utilizes cutting-edge AI models to understand and parse web content intelligently.

- 🖥️ Sleek Streamlit Interface: User-friendly GUI that even a chrome-armed street samurai could navigate.

- 🔄 Multi-Format Support: Export your data in JSON, CSV, HTML, SQL or Excel – whatever fits your cyberdeck.

- 🧅 Tor Network Support: Safely scrape .onion sites through the Tor network with automatic routing and security features.

- 🕵️ Stealth Mode: Implemented stealth mode parameters that help avoid detection as a bot.

- 🦙 Ollama Support: Use a huge library of open-source LLMs.

- ⚡ Async Operations: Lightning-fast scraping that would make a Trauma Team jealous.

- 🧠 Smart Parsing: Structures scraped content as if it were extracted straight from the engram of a master netrunner.

- 💾 Caching: Implemented content-based and query-based caching using LRU cache and a custom dictionary to reduce redundant API calls.

- 📊 Upload to Google Sheets: Now you can easily upload your extracted CSV data to Google Sheets with one click.

- 🛡️ Bypass Captcha: Bypass CAPTCHAs by appending -captcha to the end of the URL. (Currently this works only in a native install, not in Docker.)

- 🌐 Current Browser: The current browser feature uses your local browser instance which will help you bypass 99% of bot detections. (Only use when necessary)

- 🔒 Proxy Mode (Coming Soon): Built-in proxy support to keep you ghosting through the net.

- 🧭 Navigate through the Pages (BETA): Navigate through the webpage and scrape data from different pages.
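The caching feature above aims to avoid paying twice for the same LLM call. A minimal sketch of query-based LRU caching, assuming the cache key is a SHA-256 hash of the page content plus the user's query, might look like this. `LLMCache` and the `llm` callable are hypothetical stand-ins, not CyberScraper's code.

```python
import hashlib
from collections import OrderedDict
from typing import Callable


class LLMCache:
    """LRU cache for LLM extraction results, keyed on (content hash, query)."""

    def __init__(self, llm: Callable[[str, str], str], maxsize: int = 128) -> None:
        self._llm = llm  # hypothetical function: (page_content, query) -> answer
        self._maxsize = maxsize
        self._store: OrderedDict[tuple[str, str], str] = OrderedDict()

    def extract(self, content: str, query: str) -> str:
        key = (hashlib.sha256(content.encode("utf-8")).hexdigest(), query)
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            return self._store[key]
        result = self._llm(content, query)  # cache miss: pay for one model call
        self._store[key] = result
        if len(self._store) > self._maxsize:
            self._store.popitem(last=False)  # evict the least recently used entry
        return result
```

Hashing the content keeps keys small even for large pages, and `OrderedDict.move_to_end` plus `popitem(last=False)` give least-recently-used eviction without extra bookkeeping.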

9- Scrapegraph-ai

ScrapeGraphAI is a web scraping Python library that uses LLMs and direct graph logic to create scraping pipelines for websites and local documents (XML, HTML, JSON, Markdown, etc.).
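Graph-based scraping pipelines can be pictured as named steps plus dependency edges, run in topological order. The sketch below uses the standard library's `graphlib` (Python 3.9+) to do that; `run_graph` and the node functions in the test are hypothetical, an "extract" step would be an LLM call in practice, and none of this is ScrapeGraphAI's API.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, Set

State = Dict[str, Any]


def run_graph(
    nodes: Dict[str, Callable[[State], Any]],
    edges: Dict[str, Set[str]],
    state: State,
) -> State:
    """Run each node after its dependencies; each node's output is stored in state[name].

    edges maps a node name to the names it depends on. A cycle raises graphlib.CycleError.
    """
    sorter = TopologicalSorter(edges)
    for name in nodes:
        sorter.add(name)  # include nodes that have no edges at all
    for name in sorter.static_order():
        state[name] = nodes[name](state)
    return state
```

Each node reads earlier results from the shared `state` dict, so a fetch, parse, and extract chain becomes three entries in `nodes` plus the edges `{"parse": {"fetch"}, "extract": {"parse"}}`.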

10- ScraperAI

ScraperAI is an open-source, AI-powered tool designed to simplify web scraping for users of all skill levels.

Leveraging large language models such as ChatGPT, ScraperAI extracts data from web pages and generates reusable, shareable scraping recipes.

Features

- Serializable & reusable Scraper Configs

- Automatic data detection

- Automatic XPath detection

- Automatic pagination & page type detection

- HTML minification

- ChatGPT support

- Custom LLMs support

- Selenium support

- Custom crawlers support
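One way to picture a serializable, reusable scraper config is a small dataclass that is saved to and restored from JSON, so a recipe worked out once (for example, with an LLM's help) can be rerun or shared. The `ScraperConfig` fields below are hypothetical and do not reflect ScraperAI's actual config format.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, Optional


@dataclass
class ScraperConfig:
    # Hypothetical recipe: where to start, how to find items, and how to paginate.
    start_url: str
    item_xpath: str
    field_xpaths: Dict[str, str] = field(default_factory=dict)
    next_page_xpath: Optional[str] = None

    def to_json(self) -> str:
        """Serialize the recipe so it can be stored or shared."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "ScraperConfig":
        """Rebuild a recipe from its JSON form."""
        return cls(**json.loads(text))
```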

11- LLM-based Web Crawler

A web crawler based on LLMs, implemented with Ray and Hugging Face. The embeddings are saved into a vector database for fast clustering and retrieval, ready to use in your RAG pipeline.

Features

- The service crawls the web recursively, storing each link, its text, and the corresponding text embedding.

- It uses a large language model (e.g., BERT) to obtain the text embeddings, i.e., a vector representation of the text on each website.

- The service is scalable: it uses Ray to spread the work across multiple workers.

- The entries are stored in a vector database. Vector databases are ideal for saving and retrieving samples by their vector representation.
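The store-and-retrieve flow described above can be sketched in a few lines. To stay self-contained, this assumes a toy bag-of-words vector in place of BERT embeddings and a Python list in place of a vector database; it ranks stored pages by cosine similarity to a query. None of these names come from the project.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real system would use a model such as BERT."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorStore:
    """In-memory stand-in for a vector database holding (url, text, vector) rows."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, str, Counter]] = []

    def add(self, url: str, text: str) -> None:
        self.rows.append((url, text, embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        """Return the k stored URLs most similar to the query, best first."""
        q = embed(query)
        scored = [(url, cosine(q, vec)) for url, _, vec in self.rows]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]
```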

12- ScrapeGPT

ScrapeGPT is a RAG-based Telegram bot designed to scrape and analyze websites, then answer questions based on the scraped content.

The bot combines Retrieval-Augmented Generation and web scraping to return natural language answers to the user's queries.

Features

- Web Scraping: Automatically scrapes text from provided URLs, including PDF files.

- Context Retrieval: Utilizes embeddings and retrieval models to extract relevant context from scraped content.

- Question Answering: Generates answers to user questions based on the retrieved context.

- Robots.txt Parsing: Respects each website's robots.txt to avoid scraping restricted areas.

- Database Management: Stores scraped content in a database for future reference and quick access.

- Proxy Support: Uses rotating proxies to bypass geo-restrictions and anonymize requests.

- LLM-based: Supports both public and local LLMs.
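Respecting robots.txt doesn't require writing a parser: Python's standard library ships `urllib.robotparser`. The sketch below (not ScrapeGPT's code) builds a checker from robots.txt contents you have already downloaded; `RobotFileParser(url).read()` can fetch the file directly instead. The helper names `robots_url`, `make_checker`, and `allowed` are hypothetical.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


def robots_url(page_url: str) -> str:
    """Location of the robots.txt file that governs page_url."""
    parts = urlparse(page_url)
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


def make_checker(robots_txt: str) -> RobotFileParser:
    """Build a checker from robots.txt contents that were already downloaded."""
    checker = RobotFileParser()
    checker.parse(robots_txt.splitlines())
    return checker


def allowed(checker: RobotFileParser, user_agent: str, url: str) -> bool:
    """True if the parsed robots.txt permits user_agent to fetch url."""
    return checker.can_fetch(user_agent, url)
```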

13- RAG Web Browser

RAG Web Browser is an Apify Actor to feed your LLM applications and RAG pipelines with up-to-date text content scraped from the web.

The RAG Web Browser can be used in two ways: as a standard Actor by passing it an input object with the settings, or in the Standby mode by sending it an HTTP request.

Features

- 🚀 Quick response times for great user experience

- ⚙️ Supports dynamic JavaScript-heavy websites using a headless browser

- 🕷 Automatically bypasses anti-scraping protections using proxies and browser fingerprints

- 📝 Output formats include Markdown, plain text, and HTML

- 🪟 It's open source, so you can review and modify it

14- Rag Crawler

This open-source JavaScript/TypeScript project lets you crawl any website to generate a knowledge file for RAG.

15- Democracy Chatbot

Democracy Chatbot is an open-source project designed to extract and organize information from meeting records.

It uses a Large Language Model (LLM) to pull data from PDF files scraped from the website of the city of Nykarleby.

The extracted information is structured into a knowledge graph, making it easy to search and access. The project also includes a chatbot app that lets users interact with the data seamlessly.
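A knowledge graph like the one described above is, at its core, a set of (subject, relation, object) facts. The minimal in-memory triple store below illustrates that structure; the class and the sample facts in the test are hypothetical. In a pipeline like Democracy Chatbot's, the triples would come from LLM extraction over the PDFs, which this sketch does not do.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Minimal triple store: (subject, relation, object) facts with lookup by node."""

    def __init__(self) -> None:
        self.triples: set[tuple[str, str, str]] = set()
        self._by_node: defaultdict[str, set[tuple[str, str, str]]] = defaultdict(set)

    def add(self, subject: str, relation: str, obj: str) -> None:
        triple = (subject, relation, obj)
        self.triples.add(triple)
        # Index the fact under both endpoints so either one can be looked up.
        self._by_node[subject].add(triple)
        self._by_node[obj].add(triple)

    def about(self, node: str) -> list[tuple[str, str, str]]:
        """All facts where node is the subject or object, sorted for stable output."""
        return sorted(self._by_node.get(node, set()))
```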

16- RAGScraper

RAGScraper is a Python library designed for efficient and intelligent scraping of web documentation and content.

Tailored for Retrieval-Augmented Generation (RAG) systems, RAGScraper extracts and preprocesses text into structured, machine-learning-ready formats.

It emphasizes precision, context preservation, and ease of integration with RAG models, making it an ideal tool for developers looking to enhance AI-driven applications with rich, web-sourced knowledge.
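Context preservation for RAG often comes down to how text is chunked. A common approach, shown here as a sketch and not as RAGScraper's method, splits text into fixed-size word windows that overlap, so text near a chunk boundary appears in both neighboring chunks. The default `size` and `overlap` values are arbitrary assumptions.

```python
def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

Larger overlaps preserve more context across boundaries at the cost of storing and embedding more duplicated text.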