Top 11 Free Open-Source AI Search Engines Powered by LLMs You Can Self-Host


The AI Search Revolution: Beyond Keywords

The way we search online is changing dramatically. Gone are the days of awkwardly stringing keywords together, hoping to find what we need. A new wave of search engines, powered by Large Language Models (LLMs), is making search feel more like asking a smart friend for help.

Perplexity.ai is a popular example that leads this transformation by turning search into a natural conversation. Instead of getting a wall of links, you receive synthesized answers drawn from multiple sources, complete with citations.

It's like having a research assistant who reads everything and gives you the key points.

What makes these new AI search engines different?

- They understand natural questions: Ask "What's causing the chip shortage?" instead of "semiconductor supply chain issues 2024"

- They keep context: Your follow-up questions make sense without restating everything

- They connect the dots based on your questions: Related topics and deeper insights emerge naturally

- They verify sources: Real-time fact-checking and direct citations come standard

The technology behind this works by transforming your natural questions into precise search queries, understanding meaning rather than just matching words, and ranking results based on how well they actually answer your question.
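To make those three stages concrete, here is a minimal Python sketch of the rewrite, retrieve, and rank flow. It is an illustration under loud assumptions, not how any particular engine works: `rewrite_query`, `keyword_search`, `overlap_score`, and the tiny `CORPUS` are all hypothetical stand-ins. A real engine would typically use an LLM for the rewrite step, a web search backend for retrieval, and an embedding model for ranking.

```python
from dataclasses import dataclass
from typing import List

# Filler words the toy rewrite step removes (an arbitrary, illustrative list).
STOPWORDS = {"what", "what's", "is", "the", "a", "an", "of", "for", "to", "in"}


@dataclass(frozen=True)
class Document:
    url: str
    text: str


def tokenize(text: str) -> List[str]:
    """Lowercase the text and strip basic punctuation from each word."""
    return [w.strip("?.,!").lower() for w in text.split()]


def rewrite_query(question: str) -> str:
    """Toy stand-in for an LLM rewrite step: drop filler words."""
    return " ".join(w for w in tokenize(question) if w and w not in STOPWORDS)


def keyword_search(query: str, corpus: List[Document]) -> List[Document]:
    """Toy stand-in for a search backend: keep documents sharing any query term."""
    terms = set(query.split())
    return [d for d in corpus if terms & set(tokenize(d.text))]


def overlap_score(query: str, doc: Document) -> float:
    """Toy stand-in for semantic ranking: fraction of query terms found in the doc."""
    terms = set(query.split())
    return len(terms & set(tokenize(doc.text))) / len(terms) if terms else 0.0


def search_pipeline(question: str, corpus: List[Document], top_k: int = 2) -> List[Document]:
    """Rewrite the question, retrieve candidates, then rank them best-first."""
    query = rewrite_query(question)
    candidates = keyword_search(query, corpus)
    ranked = sorted(candidates, key=lambda d: overlap_score(query, d), reverse=True)
    return ranked[:top_k]


# Made-up corpus for demonstration only.
CORPUS = [
    Document("https://example.com/chips", "Causes of the global chip shortage explained"),
    Document("https://example.com/potato", "Potato chip recipes for home cooks"),
    Document("https://example.com/travel", "Planning a trip to Lisbon"),
]

for doc in search_pipeline("What's causing the chip shortage?", CORPUS):
    print(doc.url)
```

The point of the sketch is the shape of the pipeline: each stage is a separate function, so you could swap the toy keyword logic for an LLM call or an embedding model without touching the rest.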

For everyday users, this means finding better information faster. Complex research tasks that once took hours can now be completed in minutes.

Whether you're planning a trip, researching a topic, or trying to understand current events, these AI-powered search engines help you cut through the noise to find exactly what you need.

Traditional search isn't dead, but the future clearly belongs to these smarter, more conversational tools. As the technology improves, expect them to get even better at understanding complex questions and providing personalized, accurate answers.

The next time you need to find something online, try Perplexity.ai or similar AI-powered search engines. You might be surprised at how much better the experience can be when your search engine actually understands what you're asking.

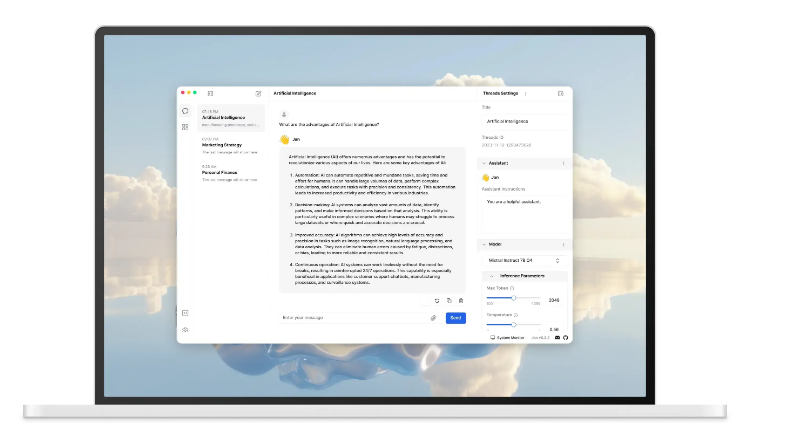

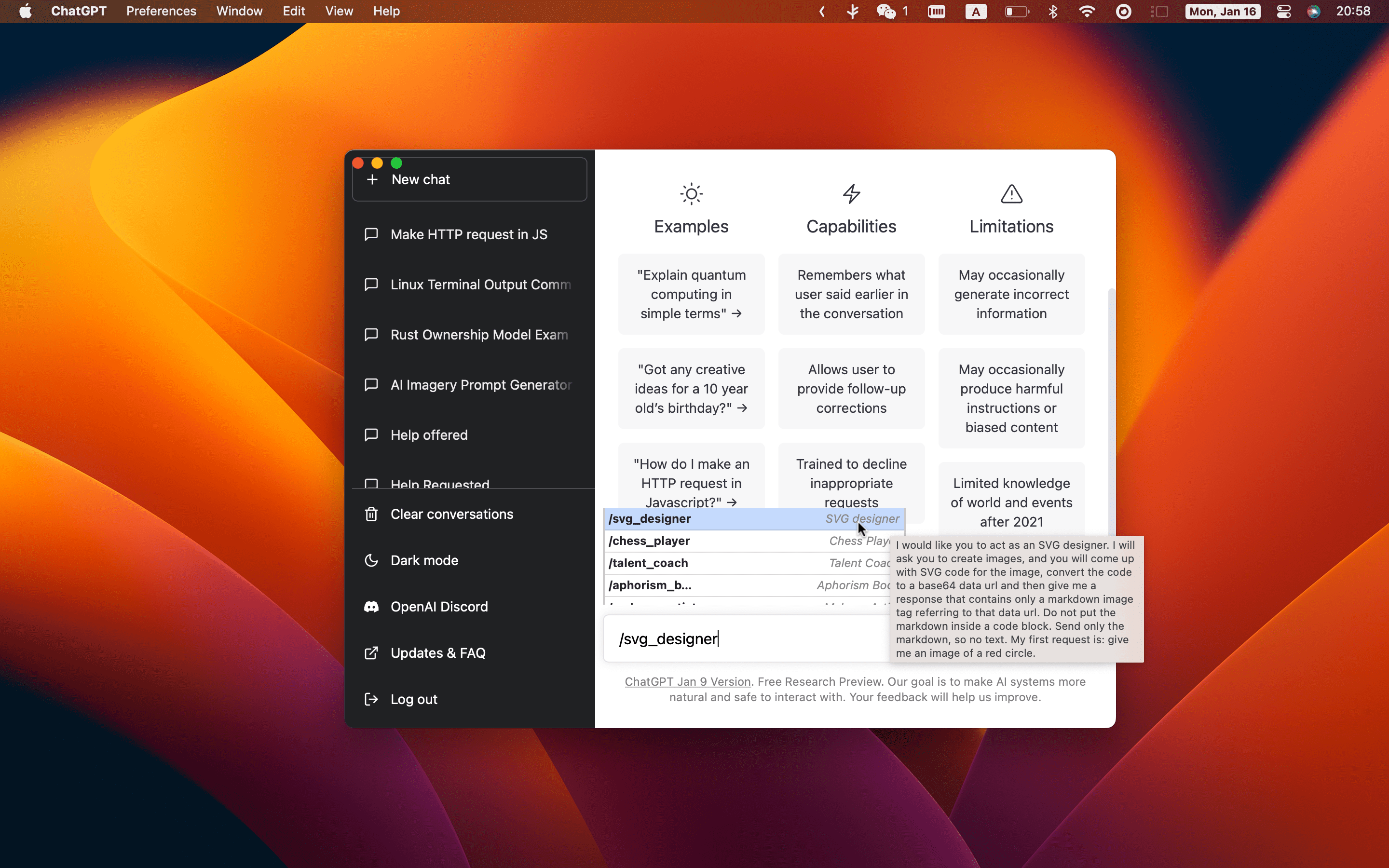

Running LLMs offline

If you want to run Large Language Models offline, we have you covered in several posts:

- Free Apps for Running LLMs offline on Windows

- Running LLMs offline on macOS with Open-source Free Apps

- LLMs Web UI

Open-source AI-Search Engines based on LLMs

1- MemFree

MemFree is a hybrid AI-powered search tool that provides accurate answers by combining your personal knowledge base with web search results. It efficiently manages information, saving you from organizing notes or browsing multiple sources by delivering direct, summarized insights.

MemFree also doubles as a powerful AI-driven page generator, instantly creating production-ready UI pages using Claude 3.5 Sonnet, React, and Tailwind.

With this tool, you can quickly design, visualize, and publish web pages, making it an efficient, cost-effective solution for productivity and development.

Features

- Integrates ChatGPT (OpenAI), Claude, and Gemini AI models.

- Supports Google, Exa, and vector search.

- Multi-format search (text, images, files, web pages).

- Presents results as text, mind maps, images, and videos.

- Supports file types: PDF, Docx, PPTX, and Markdown.

- Syncs across devices.

- Multi-language support.

- Chrome bookmark sync.

- Contextual continuous search.

- One-click UI publishing.

- Real-time UI preview and responsive code.

- AI-powered content search for UI.

- Easy deployment on various platforms.

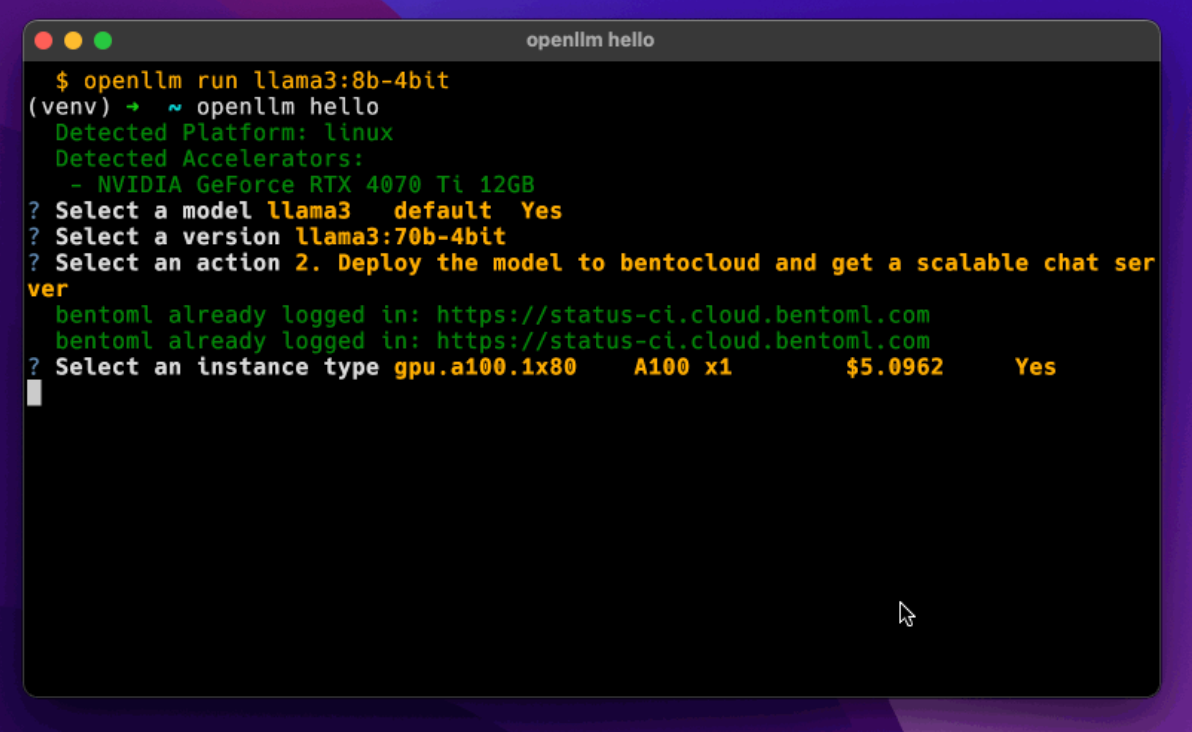

2- Perplexica

Perplexica is an open-source, AI-powered search engine that goes deep into the internet to find answers. Inspired by Perplexity AI, it not only searches the web but also understands your questions.

It uses machine learning techniques such as embeddings and similarity search to refine results, and it provides clear answers with cited sources.
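If embeddings and similarity search are new to you, the following sketch shows the general idea in plain Python: texts are turned into vectors, and results are reordered by how closely each vector points in the same direction as the query vector (cosine similarity). This is not Perplexica's actual code. The function names and the three-dimensional vectors are made up for illustration; real embedding models produce vectors with hundreds or thousands of dimensions.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rerank(
    query_vec: Sequence[float],
    doc_vecs: Dict[str, Sequence[float]],
    top_k: int = 2,
) -> List[Tuple[str, float]]:
    """Score every document against the query and return the top_k best matches."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hand-written vectors standing in for real embeddings (assumption for the demo).
QUERY_VEC = [0.9, 0.1, 0.0]
DOC_VECS = {
    "semiconductors": [0.8, 0.2, 0.1],
    "snacks": [0.1, 0.9, 0.0],
    "travel": [0.0, 0.1, 0.9],
}

for doc_id, score in rerank(QUERY_VEC, DOC_VECS):
    print(f"{doc_id}: {score:.3f}")
```

Because the comparison happens between vectors rather than words, two texts can score as similar even when they share no keywords, which is what lets these tools match on meaning.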

Because it uses SearXNG as its search backend, Perplexica stays fully open source and returns up-to-date information without compromising your privacy.

Features


- Local LLM support (Llama3, Mixtral via Ollama).

- Copilot Mode (in development) for boosted, multi-query searches.

- Normal Mode for direct web search processing.

- Focus Modes tailored for specific queries.

- Academic Search Mode for research articles and papers.

- YouTube Search Mode to find relevant videos.

- Writing Assistant Mode for non-web-based writing help.

- All Mode for comprehensive web search.

- Reddit Search Mode for community insights.

- Wolfram Alpha Mode for calculations and data analysis.

- Uses SearXNG for updated, ranked search results.

- API integration for seamless app use.

- Ensures access to current information without daily updates.
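Since SearXNG comes up in several tools on this list, here is a hedged sketch of how an application might talk to a self-hosted SearXNG instance over its JSON search API. It assumes an instance reachable at `http://localhost:8080` (a placeholder address) with the JSON output format enabled in its settings. The helper names `build_searxng_url` and `parse_results` are hypothetical, and this is not the code Perplexica itself uses.

```python
import json
from typing import Dict, List
from urllib.parse import urlencode


def build_searxng_url(base_url: str, query: str, categories: str = "general") -> str:
    """Build a SearXNG search URL that asks for JSON instead of HTML."""
    params = {"q": query, "format": "json", "categories": categories}
    return f"{base_url.rstrip('/')}/search?{urlencode(params)}"


def parse_results(payload: str, limit: int = 5) -> List[Dict[str, str]]:
    """Extract title, URL, and snippet from a SearXNG JSON response body."""
    data = json.loads(payload)
    return [
        {
            "title": item.get("title", ""),
            "url": item.get("url", ""),
            "snippet": item.get("content", ""),
        }
        for item in data.get("results", [])[:limit]
    ]


# Placeholder instance address; replace it with your own deployment.
url = build_searxng_url("http://localhost:8080/", "chip shortage")
print(url)

# A trimmed, made-up response body showing the fields the parser reads.
SAMPLE_RESPONSE = '{"results": [{"title": "A", "url": "https://example.com", "content": "x"}]}'
print(parse_results(SAMPLE_RESPONSE))
```

To fetch live results you would request the generated URL with any HTTP client and pass the response text to `parse_results`. The parsed snippets are what an LLM-based tool would then rank and summarize.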

3- Farfalle

Farfalle is a self-hosted, open-source, AI-powered search engine (a Perplexity clone). It lets you run local LLMs (llama3, gemma, mistral, phi3), custom LLMs through LiteLLM, or cloud models (Groq/Llama3, OpenAI/gpt4-o).

Like many other recent AI apps, it is built with Next.js.

Features

- ChatGPT-like interface

- Chat history

- SearXNG support

- Support for local LLMs

- Easy to deploy using Docker.

- Search with multiple search providers (Tavily, SearXNG, Serper, Bing)

- Answer questions with cloud models (OpenAI/gpt4-o, OpenAI/gpt3.5-turbo, Groq/Llama3)

- Answer questions with local models (llama3, mistral, gemma, phi3)

- Answer questions with any custom LLMs through LiteLLM

- Search with an agent that plans and executes the search for better results

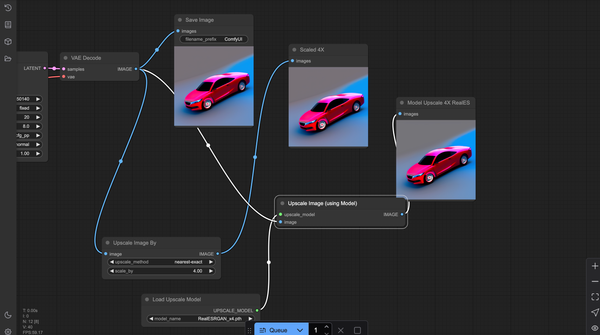
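The last feature in the list above, an agent that plans and then executes the search, follows a pattern that can be sketched in a few lines. The version below is a toy illustration and not Farfalle's implementation: `plan`, `execute`, and `FAKE_INDEX` are hypothetical names, and a real agent would ask an LLM to produce the plan instead of splitting the question on the word "and".

```python
from typing import Callable, Dict, List


def plan(question: str) -> List[str]:
    """Toy planner: split a compound question into separate sub-queries."""
    parts = [part.strip(" ?") for part in question.split(" and ")]
    return [part for part in parts if part]


def execute(steps: List[str], search: Callable[[str], List[str]]) -> List[str]:
    """Run each sub-query and merge the result URLs, dropping duplicates in order."""
    seen = set()
    merged: List[str] = []
    for step in steps:
        for url in search(step):
            if url not in seen:
                seen.add(url)
                merged.append(url)
    return merged


# Made-up search results keyed by sub-query, standing in for a real search provider.
FAKE_INDEX: Dict[str, List[str]] = {
    "Who makes GPUs": ["https://example.com/vendors", "https://example.com/market"],
    "why are they scarce": ["https://example.com/market", "https://example.com/supply"],
}


def fake_search(query: str) -> List[str]:
    """Look up canned results; unknown queries return nothing."""
    return FAKE_INDEX.get(query, [])


steps = plan("Who makes GPUs and why are they scarce?")
print(steps)
print(execute(steps, fake_search))
```

Splitting a broad question into narrower searches tends to surface sources that a single query would miss, which is why agent-style search can produce better answers.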

5- MindSearch

MindSearch is a self-hosted, open-source, LLM-based multi-agent framework for web search (similar to Perplexity.ai Pro and SearchGPT).

One standout feature is its headless mode: you can run only the backend, without any frontend.

MindSearch is released under the Apache-2.0 License.

6- MiniPerplx

MiniPerplx is a minimalistic AI-powered search engine that helps you find information on the internet. It is powered by the Vercel AI SDK and lets you search with models such as GPT-4o mini, GPT-4o, and Claude 3.5 Sonnet (New).

Like many other trending AI apps, it is built with Next.js and can be easily deployed to Vercel, Netlify, and similar platforms.

Features

- AI-powered search: Get answers to your questions using Anthropic's models.

- Web search: Search the web using Tavily's API.

- URL Specific search: Get information from a specific URL.

- Weather: Get the current weather for any location using OpenWeather's API.

- Programming: Run code snippets in multiple languages using E2B's API.

- Maps: Get the location of any place using Google Maps API.

- Results Overview: Get a quick overview of the results from different providers.

- Translation: Translate text to different languages using Microsoft's Translator API.

7- Morphic

Morphic is yet another open-source, AI-powered search engine, this one with a generative UI.

Features


- Search & Answering with Generative AI support

- User Question Interpretation

- Search History functionality

- Optional Sharing of Search Results

- Video Search Support (optional)

- Answer Retrieval from Specific URLs

- General Search Engine functionality

- Multi-Provider Support: Google Generative AI, Azure OpenAI, Anthropic, Ollama, Groq

- Redis Support for local caching

- SearXNG API with customizable depth (basic/advanced)

- Configurable Search Depth options

- Next.js Framework for app structure

- Vercel AI SDK for text streaming/generative UI

- Search APIs: Tavily AI, Serper, SearXNG

- Extraction APIs: Tavily AI, Jina AI

- Serverless & Local Databases: Upstash, Redis

- Component Library: shadcn/ui and Radix UI for headless components

- Styling with Tailwind CSS

8- Gerev

Gerev is an open-source, self-hosted search engine optimized for productivity, allowing teams to find information across apps like GitHub, Slack, Notion, and Jira. It features configurable integrations, search history, and a responsive interface.

With Docker support, Gerev is easily deployed for teams needing a centralized, privacy-focused search solution.

9- TurboSeek

TurboSeek is a starter template for search engines, built with Next.js and Tailwind CSS, that uses AI to extract and retrieve information from diverse sources. It’s fast, customizable, and suitable for various search applications.

Developers can use it as a base to build their own AI-powered search engines.

10- Athena for Search

Athena for Search is a free, open-source, high-performance alternative to Perplexity AI. Its priority lies in providing reliable, multi-modal, LLM-backed search.

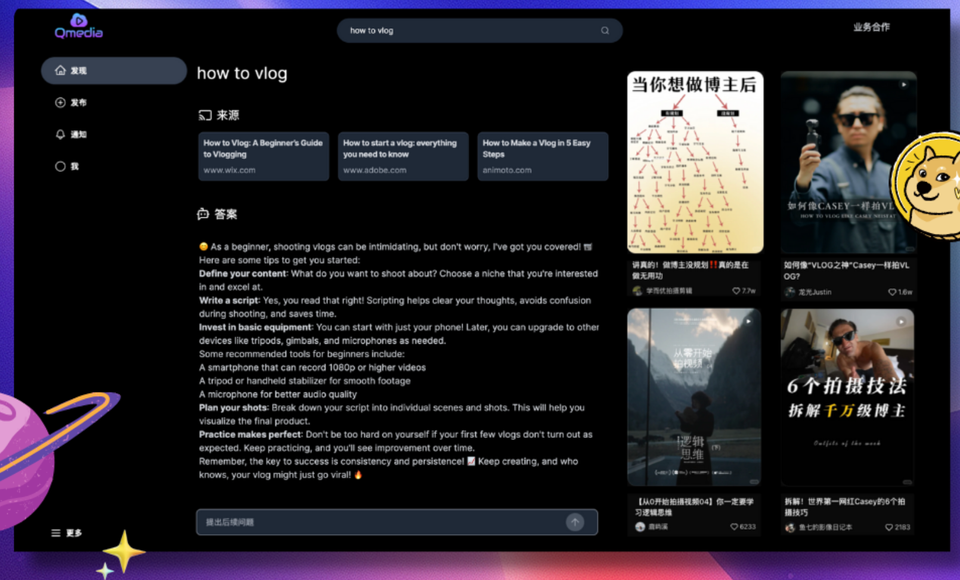

11- QMedia

QMedia is a self-hosted AI content search engine designed specifically for content creators. It supports extraction of text, images, and short videos, allows fully local deployment (web app, RAG server, LLM server), and supports multi-modal RAG content Q&A.

Features

- Search for image/text and short video materials.

- Efficiently analyze image/text and short video content, integrating scattered information.

- Provide content sources and decompose image/text and short video information, presenting information through content cards.

- Generate customized search results based on user interests and needs from image/text and short video content.

- Local deployment, enabling offline content search and Q&A for private data.

- Media rich content cards

- Multimodal Content RAG


- Extract useful information from image/text and short video content based on user queries to generate high-quality answers.

- Present content sources and the breakdown of image/text and short video information through content cards.

- Retrieval and Q&A rely on the breakdown of image/text and short video content, including image style, text layout, short video transcription, video summaries, etc.

- Support Google content search.

- Pure Local Multimodal Models

- Deployment of various types of models locally.

- Separation from the RAG application layer, making it easy to replace different models.

- Local model lifecycle management, configurable for manual or automatic release to reduce server load.

- Supports many LLMs, media/video models, and image models
