Exploring 12 Free Open-Source Web UIs for Hosting and Running LLMs Locally or on a Server

Are you looking to harness the capabilities of Large Language Models (LLMs) while maintaining control over your data and resources?

You're in the right place. In this comprehensive guide, we'll explore 12 free open-source web interfaces that let you run LLMs locally or on your own servers – putting the power of AI right at your fingertips.

Think of LLMs as your personal AI assistants, capable of everything from answering complex questions to helping with coding projects and creative writing. While commercial solutions like ChatGPT have made headlines, there's a growing movement toward self-hosted alternatives that offer more privacy, customization, and control.

Why Consider Running a Self-Hosted LLM Web UI?

Imagine having a ChatGPT-like experience, but with the freedom to:

- Keep sensitive data completely private on your own hardware

- Customize the AI's responses to match your specific needs

- Access AI assistance even without an internet connection

- Save costs, especially if you're running AI tools at scale

- Control your computing resources for optimal performance

Whether you're a developer looking to integrate AI into your workflow, a business seeking to automate customer support, or an educator wanting to create powerful learning tools, self-hosted LLM interfaces offer unprecedented flexibility and control.

In this article, we'll dive into 12 fantastic open-source solutions that make hosting your own LLM interface not just possible, but practical. From simple, user-friendly options to powerful, feature-rich platforms, we'll help you find the perfect fit for your needs.

Ready to take control of your AI experience? Let's explore these game-changing tools together.

Continue reading to discover our curated list of the 12 best open-source LLM web interfaces.

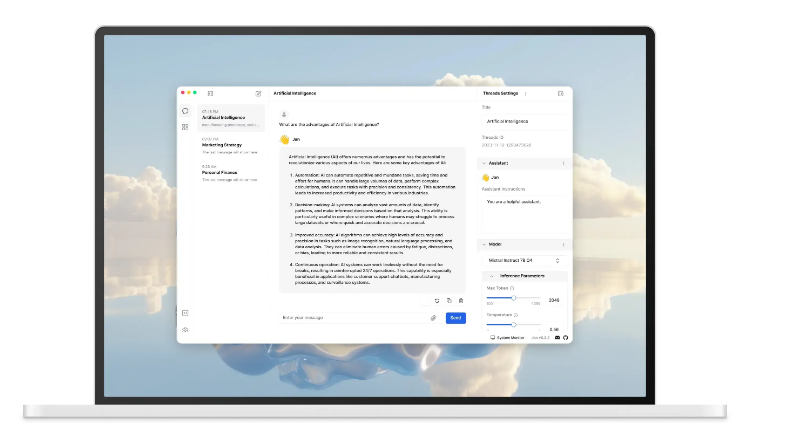

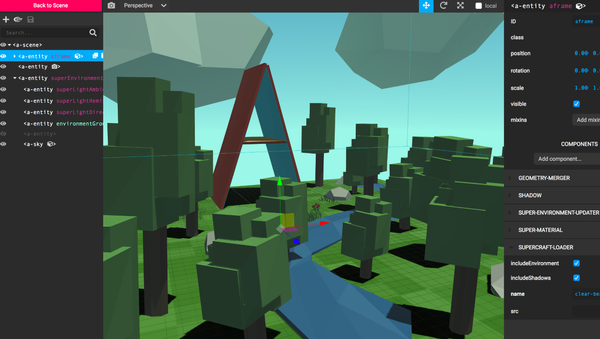

1- AnythingLLM

AnythingLLM is a versatile, full-stack AI app that transforms any document or content into contextual data that can be referenced during chats with Large Language Models (LLMs). Designed for ease of use, it offers a hyper-configurable, multi-user environment without complex setup. Users can integrate both commercial and open-source LLMs, select their preferred vector databases, and manage access permissions.

The app organizes documents into "Workspaces," containerized units that keep context distinct between different threads.

Workspaces can share documents, but maintain isolated context for focused conversations. AnythingLLM is available for desktop on Mac, Windows, and Linux, and can run locally or remotely, making it a powerful tool for building custom, private ChatGPT-like experiences.
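The workspace idea can be pictured with a small data model. This is only an illustrative sketch, not AnythingLLM's actual implementation: the `Workspace` class, its fields, and the document name are all hypothetical. It shows how one document can be attached to several workspaces while each workspace keeps its own conversation history.

```python
from dataclasses import dataclass, field


@dataclass
class Workspace:
    """Hypothetical model of a workspace: attached documents plus its own threads."""
    name: str
    documents: set[str] = field(default_factory=set)
    threads: dict[str, list[str]] = field(default_factory=dict)

    def add_document(self, doc_id: str) -> None:
        self.documents.add(doc_id)

    def chat(self, thread: str, message: str) -> list[str]:
        # History is stored per workspace and per thread, never globally.
        history = self.threads.setdefault(thread, [])
        history.append(message)
        return history


legal = Workspace("legal")
research = Workspace("research")

# The same document can be attached to both workspaces...
for ws in (legal, research):
    ws.add_document("contract.pdf")

# ...but a conversation in one workspace is invisible to the other.
legal.chat("default", "Summarize the termination clause.")
print(research.threads)  # {}
```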

Features

- 🆕 Custom AI Agents

- 🖼️ Multi-modal support (both closed and open-source LLMs!)

- 👤 Multi-user instance support and permissioning (Docker version only)

- 🦾 Agents inside your workspace (browse the web, run code, etc)

- 💬 Custom embeddable chat widget for your website (Docker version only)

- 📖 Multiple document type support (PDF, TXT, DOCX, etc)

- Simple chat UI with drag-and-drop functionality and clear citations.

- 100% Cloud deployment ready.

- Works with all popular closed and open-source LLM providers.

- Built-in cost & time-saving measures for managing very large documents compared to any other chat UI.

- Full Developer API for custom integrations!

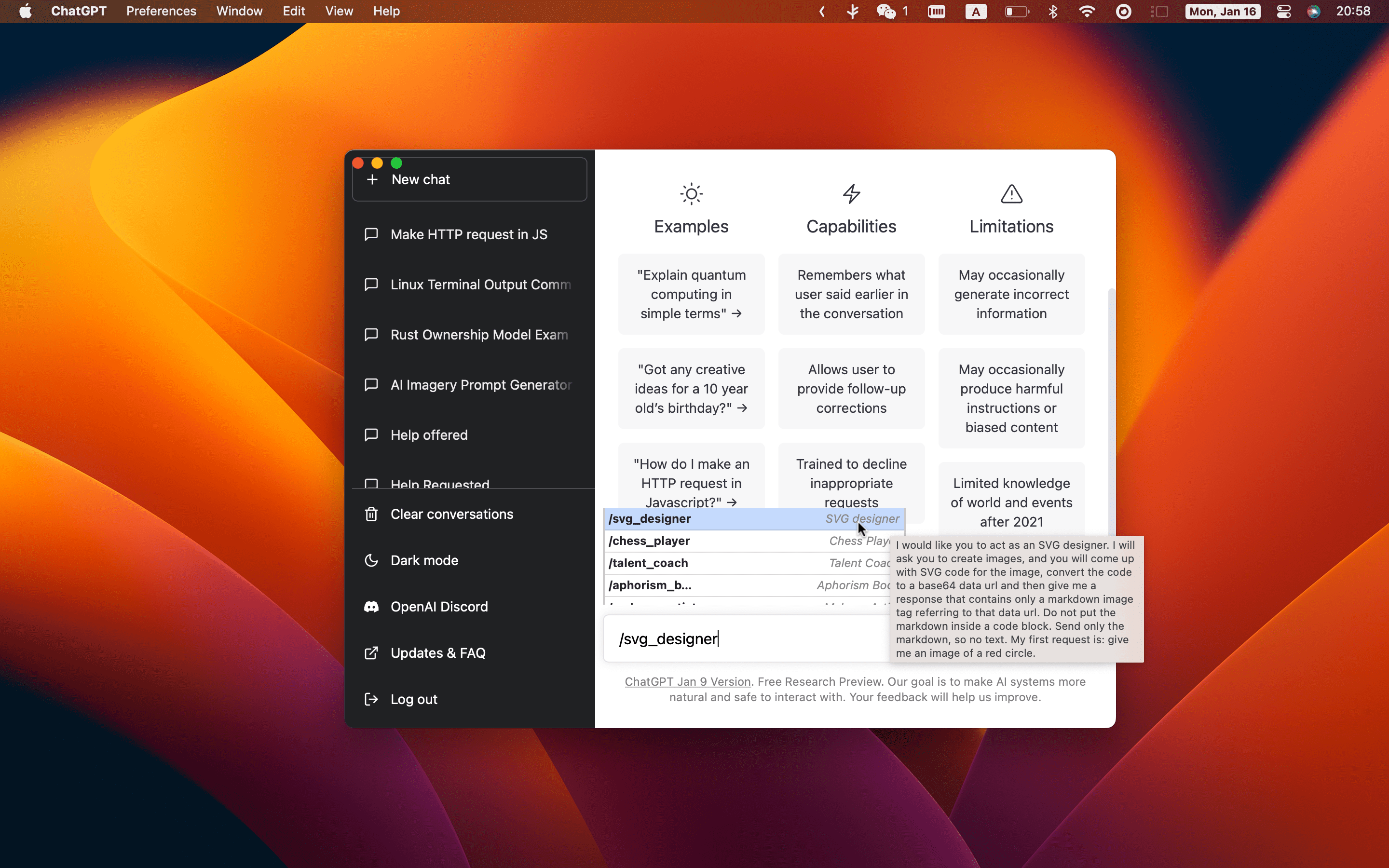

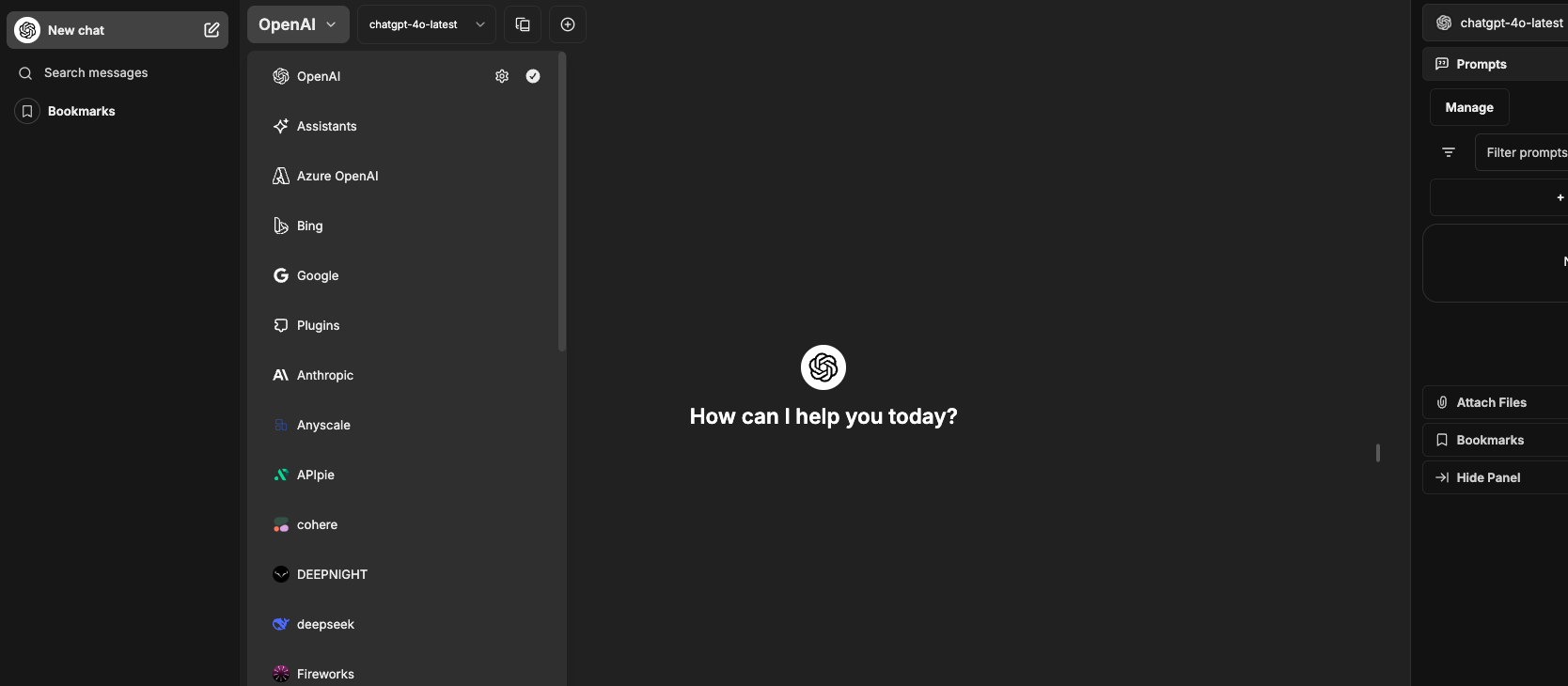

2- LibreChat

LibreChat is a free web-based app that can act as a personal private ChatGPT clone, locally or on your own server.

Features

- 🖥️ UI matching ChatGPT, including Dark mode, Streaming, and latest updates

- 🤖 AI model selection:

- Anthropic (Claude), AWS Bedrock, OpenAI, Azure OpenAI, BingAI, ChatGPT, Google Vertex AI, Plugins, Assistants API (including Azure Assistants)

- ✅ Compatible across both Remote & Local AI services:

- groq, Ollama, Cohere, Mistral AI, Apple MLX, koboldcpp, OpenRouter, together.ai, Perplexity, ShuttleAI, and more

- 🪄 Generative UI with Code Artifacts

- Create React, HTML code, and Mermaid diagrams right in chat

- 💾 Create, Save, & Share Custom Presets

- 🔀 Switch between AI Endpoints and Presets, mid-chat

- 🔄 Edit, Resubmit, and Continue Messages with Conversation branching

- 🌿 Fork Messages & Conversations for Advanced Context control

- 💬 Multimodal Chat:

- Upload and analyze images with Claude 3, GPT-4 (including gpt-4o and gpt-4o-mini), and Gemini Vision 📸

- Chat with Files using Custom Endpoints, OpenAI, Azure, Anthropic, & Google 🗃️

- Advanced Agents with Files, Code Interpreter, Tools, and API Actions 🔦

- Available through the OpenAI Assistants API 🌤️

- Non-OpenAI Agents in Active Development 🚧

- 🌎 Multilingual UI:

- English, 中文, Deutsch, Español, Français, Italiano, Polski, Português Brasileiro, Русский, 日本語, Svenska, 한국어, Tiếng Việt, 繁體中文, العربية, Türkçe, Nederlands, עברית

- 🎨 Customizable Dropdown & Interface: Adapts to both power users and newcomers

- 📧 Verify your email to ensure secure access

- 🗣️ Chat hands-free with Speech-to-Text and Text-to-Speech magic

- Automatically send and play Audio

- Supports OpenAI, Azure OpenAI, and Elevenlabs

- 📥 Import Conversations from LibreChat, ChatGPT, Chatbot UI

- 📤 Export conversations as screenshots, markdown, text, json

- 🔍 Search all messages/conversations

- 🔌 Plugins, including web access, image generation with DALL-E-3 and more

- 👥 Multi-User, Secure Authentication with Moderation and Token spend tools

- ⚙️ Configure Proxy, Reverse Proxy, Docker, & many Deployment options:

- Use completely local or deploy on the cloud

- 📖 Completely Open-Source & Built in Public

- 🧑‍🤝‍🧑 Community-driven development, support, and feedback

3- Open WebUI (Formerly Ollama WebUI)

Open WebUI is an open-source, self-hosted, extensible, and user-friendly web UI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs.

Features

- Intuitive Interface: User-friendly chat inspired by ChatGPT

- Responsive Design: Smooth performance on desktop and mobile

- Effortless Setup: Easy install with Docker/Kubernetes

- Theme Customization: Personalize with multiple themes

- Syntax Highlighting: Enhanced readability for code

- Markdown & LaTeX Support: Comprehensive formatting options

- Local RAG Integration: In-chat document access with # command

- RAG Embedding Model Support: Choose embedding models (Ollama/OpenAI)

- Web Browsing: Integrate websites with # command

- Prompt Presets: Quick access with / command

- RLHF Annotation: Rate messages for human feedback

- Conversation Tagging: Categorize chats for easy reference

- Model Management: Download, delete, and update models

- GGUF File Upload: Create Ollama models from GGUF files

- Multiple Model Support: Switch models for varied responses

- Multi-Modal Support: Includes image interaction

- Modelfile Builder: Customize characters and agents

- Multi-Model Conversations: Leverage multiple models together

- Collaborative Chat: Group model conversations with @ command

- Local Chat Sharing: Share chat links between users

- Regeneration & Chat History: Access all past interactions

- Archive & Import/Export Chats: Organize and transfer chat data

- Voice Input: Send voice input automatically

- Configurable TTS Endpoint: Customize text-to-speech

- Advanced Parameter Control: Adjust temperature, system prompts

- Image Generation Integration: Options for local APIs and DALL-E

- OpenAI API & Multiple API Support: Flexible integration

- API Key Generation: Streamline OpenAI library usage

- External Ollama Server Connection: Connect remote instances

- Ollama Load Balancing: Distribute requests for reliability

- Multi-User Management: Admin panel for user oversight

- Webhook Integration: Real-time notifications for new sign-ups

- Model Whitelisting: Controlled access for users

- Trusted Email Authentication: Enhanced security layer

- RBAC: Role-based access for restricted permissions

- Backend Reverse Proxy: Secure backend communication

- Multilingual Support: Internationalization with i18n

- Continuous Updates: Regular new features and improvements
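To make the relationship between a web UI and its runner more concrete, here is a minimal sketch of the kind of request a client sends to an Ollama server's `/api/chat` endpoint. It is not taken from Open WebUI's code. The address is Ollama's usual default, and the model name `llama3` is an assumption; substitute a model you have actually pulled.

```python
import json
import urllib.request

# Ollama's usual default address; change it when connecting to a remote instance.
OLLAMA_URL = "http://localhost:11434"


def build_chat_request(model: str, prompt: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a non-streaming request to Ollama's /api/chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request to a running server and return the assistant's reply text."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["message"]["content"]


# "llama3" is an assumed model name used only for illustration.
request = build_chat_request("llama3", "Why is the sky blue?")
print(request.full_url)
```

Calling `send(request)` only works when an Ollama server is reachable at the configured address, which is why the sketch builds the request separately from sending it.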

4- Next.js Ollama LLM UI

Next.js Ollama LLM UI offers a full-featured, polished web interface for interacting with Large Language Models (LLMs) served by Ollama.

Designed for quick, local, and even offline use, it simplifies LLM deployment with no complex setup.

The interface, inspired by ChatGPT, is intuitive and stores chats directly in local storage, eliminating the need for a separate database. It’s a streamlined solution for those looking to work with LLMs on a local system without hassle.

Features

- Beautiful & intuitive UI: Inspired by ChatGPT, so the experience feels familiar.

- Fully local: Stores chats in localstorage for convenience. No need to run a database.

- Fully responsive: Use your phone to chat, with the same ease as on desktop.

- Easy setup: No tedious and annoying setup required. Just clone the repo and you're good to go!

- Code syntax highlighting: Messages that include code are highlighted for easier reading.

- Copy codeblocks easily: Easily copy the highlighted code with one click.

- Download/Pull & Delete models: Easily download and delete models directly from the interface.

- Switch between models: Switch between models fast with a click.

- Chat history: Chats are saved and easily accessed.

- Light & Dark mode: Switch between light & dark mode.

5- WebLLM

WebLLM is a high-performance in-browser LLM inference engine that brings language model inference directly onto web browsers with hardware acceleration. Everything runs inside the browser with no server support and is accelerated with WebGPU.

WebLLM is fully compatible with the OpenAI API. That is, you can use the same OpenAI API with any open-source model locally, with functionality including streaming, JSON mode, and function calling (WIP).

This opens up opportunities to build AI assistants for everyone while preserving privacy and still benefiting from GPU acceleration.

6- Any-LLM

Any-LLM is an adaptable tool designed to integrate any Large Language Model (LLM) into a user-friendly chat interface. Built for flexibility, it supports a variety of LLMs, including open-source and commercial models, and can be customized for different applications.

With minimal setup, Any-LLM offers an intuitive, web-based UI that makes it easy to interact with LLMs locally or in the cloud, enabling developers to experiment with various models quickly.

Features ⭐

- 🖥️ Intuitive Interface: A user-friendly interface that simplifies the chat experience.

- 💻 Code Syntax Highlighting: Code readability with syntax highlighting feature.

- 🤖 Multiple Model Support: Seamlessly switch between different chat models.

- 💬 Chat History: Remembers the conversation and keeps track of the topic you are discussing.

- 📜 Chat Store: Chats are saved in a database and can be accessed later.

- 🎨🤖 Generate Images: Image generation capabilities using DALL-E.

- ⬆️ Attach Images: Upload images for code and text generation.

7- Open LLM WebUI

Open-LLM-WebUI is a versatile, open-source web interface for working with various Large Language Models (LLMs).

It is designed for ease of use, supports integration with both open-source and commercial LLMs, and allows users to set it up in a local or remote environment for AI-driven interactions.

With a focus on accessibility and flexibility, this web UI offers an intuitive chat experience, making it simple to connect with different LLMs and manage AI conversations. It's ideal for developers looking to deploy and interact with LLMs without complex setup requirements.

Supported Models

- Microsoft: Phi-3-mini-4k-instruct

- Google: gemma-2-9b-it, gemma-1.1-2b-it, gemma-1.1-7b-it

- NVIDIA: Llama3-ChatQA-1.5-8B

- Qwen: Qwen2-7B-Instruct

- Mistral AI: Mistral-7B-Instruct-v0.3

- Rakuten: RakutenAI-7B-chat, RakutenAI-7B-instruct

- rinna: youri-7b-chat

- TheBloke: Llama-2-7b-Chat-GPTQ, Kunoichi-7B-GPTQ

8- Open WebUI

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs.

Features

Here’s a compact feature list:

- Effortless Setup: Seamless installation via Docker or Kubernetes, supporting :ollama and :cuda tagged images.

- Ollama/OpenAI API Integration: Easy integration with OpenAI-compatible APIs for versatile conversations.

- Pipelines & Open WebUI Plugin Support: Integrate custom logic, Python libraries, and tools like Langfuse, LibreTranslate, and more.

- Responsive Design: Optimized for Desktop, Laptop, and Mobile devices.

- Progressive Web App (PWA): Native app-like experience with offline support on mobile.

- Full Markdown and LaTeX Support: Enhanced interactions with comprehensive formatting support.

- Hands-Free Voice/Video Call: Integrated voice and video call features for dynamic interactions.

- Model Builder: Easily create and customize Ollama models via the Web UI.

- Native Python Function Calling Tool: Integrate custom Python functions seamlessly into LLMs.

- Local RAG Integration: Document interactions and #command-based access for efficient query handling.

- Web Search for RAG: Perform web searches and inject results directly into chat.

- Web Browsing Capability: Integrate websites into chat using the #command.

- Image Generation Integration: Support for image generation via AUTOMATIC1111, ComfyUI, and DALL-E.

- Many Models Conversations: Engage with multiple models simultaneously for diverse responses.

- Role-Based Access Control (RBAC): Secure access with restricted permissions for model creation and access.

- Multilingual Support: Internationalization for a global user experience.

- Continuous Updates: Regular updates with new features and improvements.
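As an illustration of the function-calling feature, the sketch below follows the shape that Open WebUI tools are commonly written in: a class named `Tools` whose methods carry type hints and docstrings so the model can see what each function does. Treat that convention as an assumption to check against the current Open WebUI documentation. The two methods themselves are hypothetical examples.

```python
from datetime import datetime, timezone


class Tools:
    def word_count(self, text: str) -> int:
        """
        Count the words in a piece of text.
        :param text: The text to analyze.
        """
        return len(text.split())

    def utc_now(self) -> str:
        """Return the current UTC time as an ISO 8601 string."""
        return datetime.now(timezone.utc).isoformat()


# The methods are plain Python, so they can be tried outside the web UI first.
tools = Tools()
print(tools.word_count("self-hosted models keep data local"))  # 5
```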

9- Text generation web UI

This is an open-source Gradio-based web UI for Large Language Models.

Features

- Supports multiple text generation backends in one UI/API, including Transformers, llama.cpp, and ExLlamaV2. TensorRT-LLM, AutoGPTQ, AutoAWQ, HQQ, and AQLM are also supported but you need to install them manually.

- OpenAI-compatible API with Chat and Completions endpoints.

- Automatic prompt formatting using Jinja2 templates.

- Three chat modes: instruct, chat-instruct, and chat, with automatic prompt templates in chat-instruct.

- "Past chats" menu to quickly switch between conversations.

- Free-form text generation in the Default/Notebook tabs without being limited to chat turns. You can send formatted conversations from the Chat tab to these.

- Multiple sampling parameters and generation options for sophisticated text generation control.

- Switch between different models easily in the UI without restarting.

- Simple LoRA fine-tuning tool.

- Requirements installed in a self-contained installer_files directory that doesn't interfere with the system environment.

- Extension support, with numerous built-in and user-contributed extensions available.
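The difference between the Chat and Completions endpoints is easiest to see in the request bodies. The sketch below assumes the server is reachable at `http://127.0.0.1:5000/v1`; use whatever address your instance reports at startup. It uses only standard OpenAI-style fields, and it omits `model` on the assumption that the server answers with whichever model is currently loaded.

```python
import json

# Assumed local address; check your server's startup log for the real one.
BASE_URL = "http://127.0.0.1:5000/v1"


def chat_payload(user_message: str, max_tokens: int = 200) -> tuple[str, dict]:
    """Chat endpoint: the conversation is a list of role-tagged messages."""
    return f"{BASE_URL}/chat/completions", {
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }


def completion_payload(prompt: str, max_tokens: int = 200) -> tuple[str, dict]:
    """Completions endpoint: the model continues a raw prompt string."""
    return f"{BASE_URL}/completions", {"prompt": prompt, "max_tokens": max_tokens}


def extract_text(response: dict) -> str:
    """Pull the generated text out of either OpenAI-style response shape."""
    choice = response["choices"][0]
    # Chat responses nest the text under "message"; completions use "text".
    return choice["message"]["content"] if "message" in choice else choice["text"]


# Print the URL and JSON body each endpoint would receive.
for url, body in (chat_payload("Hello!"), completion_payload("Once upon a time")):
    print(url, json.dumps(body))
```

Because the API follows the OpenAI format, existing OpenAI client libraries can usually be pointed at the same base URL instead of building requests by hand.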

10- LoLLMs

LoLLMS WebUI (Lord of Large Language Multimodal Systems) is an all-in-one platform that offers access to a wide array of AI models for various tasks, including writing, coding, image generation, music creation, and more. It supports over 500 expert-conditioned models and 2500 fine-tuned models across diverse domains.

Users can choose models tailored to specific needs, whether it's for coding assistance, medical advice, legal guidance, creative storytelling, or entertainment.

The platform is designed with ease of use in mind, offering a user-friendly interface with light and dark mode options. LoLLMS can help with email enhancement, code debugging, problem-solving, and even provide fun features like a Laughter Bot, Creative Story Generator, and LordOfMusic for personalized music generation.

It combines productivity and entertainment in a single interface, making it a versatile tool for various personal and professional needs.

Features

- Choose your preferred binding, model, and personality for your tasks

- Enhance your emails, essays, code debugging, thought organization, and more

- Explore a wide range of functionalities, such as searching, data organization, image generation, and music generation

- Easy-to-use UI with light and dark mode options

- Integration with GitHub repository for easy access

- Support for different personalities with predefined welcome messages

- Thumb up/down rating for generated answers

- Copy, edit, and remove messages

- Local database storage for your discussions

- Search, export, and delete multiple discussions

- Support for image/video generation based on Stable Diffusion

- Support for music generation based on MusicGen

- Support for a multi-generation peer-to-peer network through Lollms Nodes and Petals

- Support for Docker, conda, and manual virtual environment setups

- Support for LM Studio as a backend

- Support for Ollama as a backend

- Support for vLLM as a backend

- Support for prompt Routing to various models depending on the complexity of the task

11- llm-webui

A Gradio web UI for Large Language Models. It supports LoRA/QLoRA fine-tuning, RAG (retrieval-augmented generation), and chat.

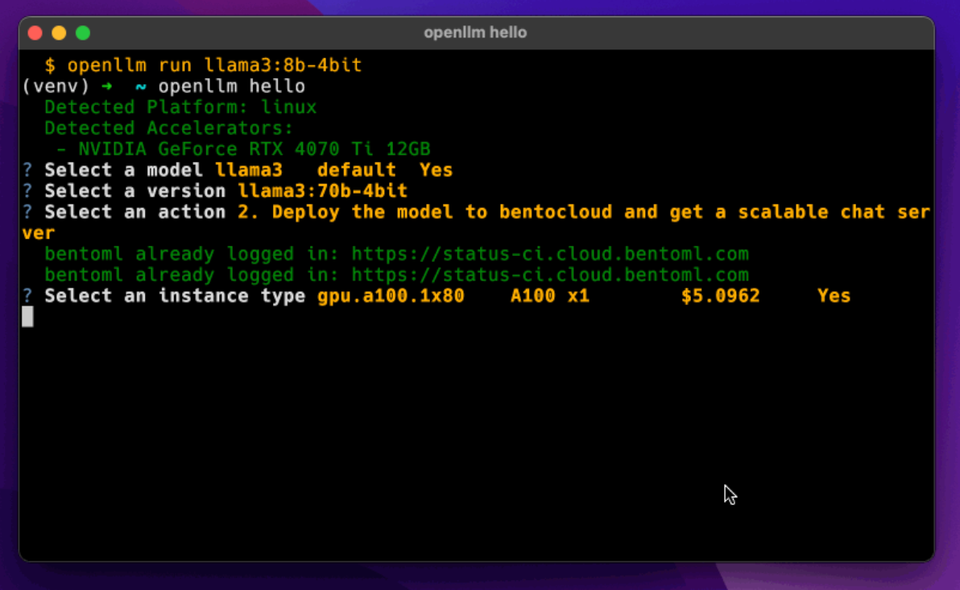

12- OpenLLM

OpenLLM is a tool that allows developers to run open-source large language models (LLMs) such as Llama, Qwen, and Phi as OpenAI-compatible API endpoints in the cloud. It simplifies the deployment of models with Docker, Kubernetes, and BentoCloud.

OpenLLM supports multiple models and provides a user-friendly interface, including a built-in chat UI. It also integrates with BentoML for enterprise-level AI inference and deployment. Additionally, users can contribute models to its repository or deploy custom models on their own infrastructure.
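Since the endpoints follow the OpenAI format, a quick way to check what a server is exposing is to query its model list. The following is a minimal sketch under assumptions: the base URL in the docstring (`http://localhost:3000/v1`) is a guess at a typical local address, and the sample response uses a made-up model ID so the parsing can be tried offline.

```python
import json
import urllib.request


def list_model_ids(models_response: dict) -> list[str]:
    """Extract model IDs from an OpenAI-style /v1/models response."""
    return [entry["id"] for entry in models_response.get("data", [])]


def fetch_models(base_url: str) -> list[str]:
    """Query a running server; base_url is e.g. 'http://localhost:3000/v1' (assumed)."""
    with urllib.request.urlopen(f"{base_url}/models") as response:
        return list_model_ids(json.load(response))


# Sample response in the OpenAI list format, with a hypothetical model ID.
sample = {"object": "list", "data": [{"id": "example-model", "object": "model"}]}
print(list_model_ids(sample))  # ['example-model']
```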

More Open-source Large Language Models (LLMs) Resources