Why You Should Think Twice Before Sharing Personal Health Info with AI Chatbots


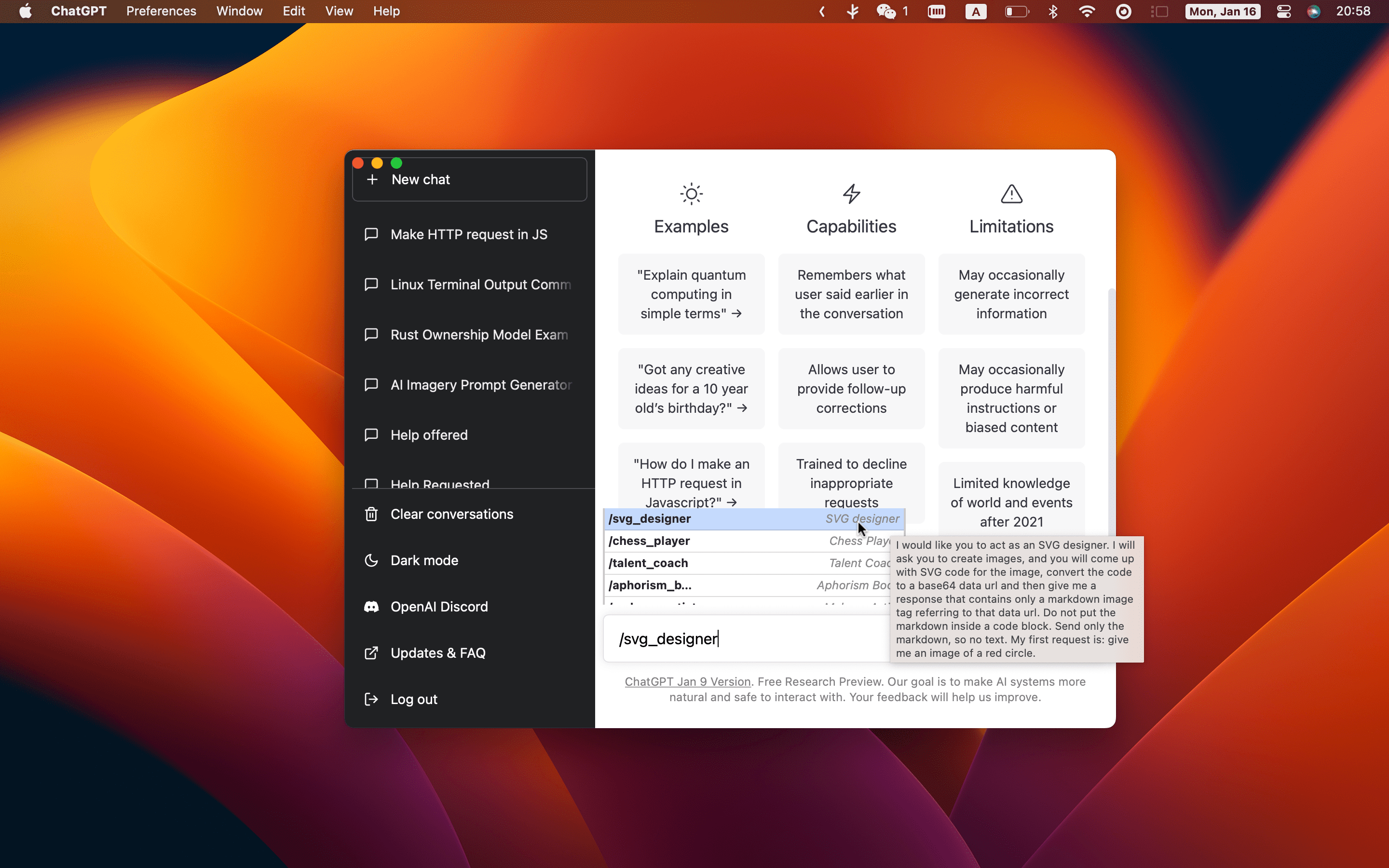

Let me tell you a story. A few weeks ago, I stumbled across something that shook me to my core. It wasn’t some dramatic plot twist in a Netflix series or a shocking news headline—it was a video on social media. Someone casually mentioned how they were sharing their ChatGPT account with friends and colleagues. Nothing unusual there, right? People share streaming services all the time. But then it hit me like a ton of bricks: one of those users—a complete stranger—was using this shared AI tool to look at medical records. Real ones. With names attached.

The person who posted the video joked about it, saying, “Yeah, turns out one guy’s probably a doctor.” They laughed it off, but I couldn’t shake the feeling of unease.

What if that "doctor" wasn’t actually a doctor? What if sensitive information got leaked? Or worse, what if someone decided to misuse it? That moment stuck with me because it perfectly illustrates why we need to talk seriously about the risks of sharing personal health information with AI chatbots.

The Allure of AI Chatbots

Let’s be honest: AI chatbots are kind of magical. Need quick advice? Boom, here’s an answer within seconds. Feeling overwhelmed by symptoms and don’t want to wait for an appointment? Just type your question into a chatbot, and voilà—you get instant feedback. For many people, especially those without easy access to healthcare, these tools feel like a lifeline. And hey, who doesn’t love convenience?

But here’s the thing: just because something feels helpful doesn’t mean it’s safe. Imagine handing over your deepest secrets—your medical history, your family’s health issues—to a total stranger. Would you do it? Probably not.

Yet, when it comes to AI chatbots, we often let our guard down way too easily. Why? Because they’re designed to seem trustworthy. Their responses are calm, confident, and reassuring. But behind that polished interface lies a world of potential dangers.

What Happens When You Overshare?

So, let’s break it down. Say you decide to ask a chatbot about a weird rash you’ve had for weeks. Maybe you include details like where it is, how long it’s been there, and any medications you’re taking. Innocent enough, right? Wrong. If the chatbot isn’t HIPAA-compliant (and most consumer chatbots aren’t), the rules that protect your records at the doctor’s office may not cover what you type, and your data could end up anywhere. Seriously. Anywhere.

Think about it: what you type is usually stored on the company’s servers, at least for a while. Even if the company promises privacy, data breaches happen all the time. Hackers can swoop in and steal that info faster than you can say “cybersecurity.” Suddenly, your private health concerns become public property, or worse, fodder for identity theft.

And remember that shared ChatGPT account I mentioned earlier? Now imagine your medical query popping up in front of someone else logging in. Awkward, right? But more than awkward, it’s dangerous. That person now has access to intimate details about your life. And no, they’re not bound by doctor-patient confidentiality rules. Yikes.

The Bigger Picture: Self-Diagnosis Gone Wrong

Here’s another scary scenario. Let’s say you start relying on AI chatbots for self-diagnosis. You punch in your symptoms, and the bot spits out a list of possible conditions. Sounds harmless until you realize two things:

- AI isn’t perfect. These systems are trained on massive datasets, sure, but they’re not infallible. They can misinterpret symptoms, overlook rare diseases, or even give conflicting answers depending on how you phrase your question.

- You’re not a doctor. No matter how much research you do online, you don’t have the training to interpret complex medical information. Misunderstanding a diagnosis can lead to unnecessary panic—or worse, ignoring serious symptoms because the chatbot told you it’s “probably nothing.”

I’ll never forget a patient I once treated who came to me convinced she had cancer. She’d spent hours Googling her symptoms and consulting various AI tools.

By the time she walked into my office, she was practically catatonic with fear. Turns out, she had a minor infection that cleared up with antibiotics. But the emotional toll of believing she was dying? That took months to heal.

Real-Life Consequences

Now, let’s zoom out a bit. Beyond individual cases, there’s a broader issue at play here: trust. Healthcare relies heavily on trust between patients and providers. When you visit a doctor, you assume they’ll keep your information confidential. But when you share that same info with an AI chatbot, you’re rolling the dice. Who owns your data? Where does it go? Can it be sold to third parties? These questions should terrify anyone thinking about typing sensitive info into a chat window.

Take the case of Clearview AI, a facial recognition company that scraped billions of images from social media without consent. Once the practice came to light, people realized just how vulnerable their personal data had become. The same thing could happen with health information.

Imagine pharmaceutical companies buying “anonymized” datasets, re-identifying the people in them, and targeting ads based on your medical history. Or insurance companies hiking premiums after learning about pre-existing conditions through leaked data. Creepy, huh?

What Does It Mean to Anonymize Patient Records?

Anonymizing patient records is basically the process of scrubbing personal details from medical data so that it can’t be traced back to the individual. Think about it: when you share your health information, whether for research, analysis, or even AI training, you don’t want anyone figuring out it’s you. That’s where anonymization comes in. It removes or masks things like your name, address, phone number, Social Security number, and even specific dates (like your birthday) that could potentially identify you.

The goal? To protect your privacy while still making the data useful for things like medical studies or improving healthcare systems.
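To make the idea concrete, here is a minimal Python sketch, assuming a patient record stored as a dictionary with hypothetical field names (`name`, `address`, `phone`, `ssn`, `birth_date`, `diagnosis`). It drops the direct identifiers and coarsens the exact birth date to a birth year. It is an illustration of the concept only, not a tool that meets any legal de-identification standard.

```python
from datetime import date

# Hypothetical list of direct identifiers for this illustration only;
# real de-identification standards define their own, much longer lists.
DIRECT_IDENTIFIERS = {"name", "address", "phone", "ssn"}


def anonymize_record(record: dict) -> dict:
    """Return a copy of `record` with direct identifiers removed and the
    exact birth date generalized to a birth year."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    birth_date = cleaned.pop("birth_date", None)
    if isinstance(birth_date, date):
        # Keep only the year: less identifying, still useful for age-based studies.
        cleaned["birth_year"] = birth_date.year
    return cleaned


patient = {
    "name": "Jane Doe",
    "address": "12 Elm St",
    "phone": "555-0100",
    "ssn": "000-00-0000",
    "birth_date": date(1988, 4, 2),
    "diagnosis": "contact dermatitis",
}

print(anonymize_record(patient))
# {'diagnosis': 'contact dermatitis', 'birth_year': 1988}
```

Notice that the diagnosis survives: that is the part researchers actually need, while the fields that point straight at Jane are gone.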

Now, why does this matter so much? Well, there are laws in place to make sure companies and organizations handle your data responsibly. In the U.S., we’ve got HIPAA (Health Insurance Portability and Accountability Act), which sets strict rules about how health information should be protected. Over in Europe, GDPR (General Data Protection Regulation) takes it a step further, giving people more control over their personal data and slapping hefty fines on anyone who messes up.

These laws aren’t just bureaucratic red tape—they exist because people care about their privacy, and rightfully so. Nobody wants their medical history floating around the internet or being sold to advertisers.

The tricky part is that anonymizing data isn’t foolproof. If someone really wanted to, they could potentially “re-identify” a person by piecing together bits of anonymized data with other sources. For example, if you know someone’s zip code, age, and gender, you might be able to figure out who they are, even if their name isn’t attached. That’s why experts are constantly working on better ways to anonymize data without losing its value.
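One common way to measure this risk is called k-anonymity: every combination of “quasi-identifiers” (fields like ZIP code, age, and gender that aren’t names but can still narrow things down) should be shared by at least k records. The Python sketch below uses made-up records and hypothetical field names to compute k for a tiny dataset. A result of 1 means at least one person can be singled out even though no names appear anywhere.

```python
from collections import Counter

# Quasi-identifiers from the example above: none is a name,
# but together they can single a person out.
QUASI_IDENTIFIERS = ("zip", "age", "gender")


def smallest_group_size(records: list[dict]) -> int:
    """Return k: the size of the smallest group of records that share the
    same quasi-identifier values. k == 1 means someone is unique."""
    groups = Counter(tuple(r[q] for q in QUASI_IDENTIFIERS) for r in records)
    return min(groups.values(), default=0)


records = [
    {"zip": "02139", "age": 34, "gender": "F", "diagnosis": "asthma"},
    {"zip": "02139", "age": 34, "gender": "F", "diagnosis": "migraine"},
    {"zip": "02139", "age": 71, "gender": "M", "diagnosis": "diabetes"},
]

print(smallest_group_size(records))  # 1: the 71-year-old man is unique
```

Anyone who knows a 71-year-old man lives in that ZIP code now knows his diagnosis, which is exactly the kind of re-identification described above.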

Ultimately, anonymizing patient records is all about finding that sweet spot between protecting privacy and allowing data to be used for good. Let’s face it: no one wants their personal info leaked, but we also need that data to improve healthcare for everyone.

How to Protect Yourself

Okay, so maybe I’ve scared you a little. Good. Awareness is the first step toward staying safe. Here are some practical tips to protect yourself while still enjoying the benefits of AI technology:

- Keep it vague. If you absolutely must use a chatbot, strip away identifying details. Instead of saying, “I’m John Smith, 35, with chest pain,” try, “A 35-year-old male experiencing chest pain.” Less specific = less risky.

- Use asterisks or redactions. Replace sensitive words with placeholders like “REDACTED” or “****”. This simple trick can reduce the risk of accidental leaks.

- Stick to reputable platforms. Not all chatbots are created equal. Some are specifically designed for healthcare settings and comply with regulations like HIPAA. Look for those instead of generic models.

- Don’t hand over critical documents. Avoid uploading files that contain personal health data. Remember, once it’s out there, you can’t take it back.

- Trust your gut. If something feels off, listen to your instincts. Don’t sacrifice privacy for convenience.
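The first two tips can be partly automated before you paste anything into a chat window. The Python sketch below is illustrative, not a complete privacy tool: it assumes you supply the names to remove yourself, and its regular expressions are hypothetical choices that only catch US-style Social Security numbers, ten-digit phone numbers, and email addresses. It will miss plenty of other identifiers, so reread the result before sending it.

```python
import re

# Illustrative patterns only (assumptions): US-style SSNs, ten-digit phone
# numbers, and email addresses. Real redaction needs far broader coverage.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def redact(text: str, names: list[str]) -> str:
    """Replace the given names and any pattern matches with [REDACTED-<TYPE>] tags."""
    for name in names:
        text = re.sub(re.escape(name), "[REDACTED-NAME]", text, flags=re.IGNORECASE)
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[REDACTED-{label}]", text)
    return text


message = "I'm John Smith, 35, with chest pain. Call me at 555-010-0100 or john.smith@example.com."
print(redact(message, ["John Smith"]))
# I'm [REDACTED-NAME], 35, with chest pain. Call me at [REDACTED-PHONE] or [REDACTED-EMAIL].
```

The age and symptom stay in on purpose, matching the “keep it vague” tip: the chatbot still gets enough to answer, but not enough to know who is asking.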

Final Thoughts

At the end of the day, AI chatbots are tools—not replacements for human judgment. They can provide guidance, entertainment, and even inspiration, but they’re not equipped to handle the weight of your personal health information. Trusting them blindly is like leaving your front door wide open and hoping no one walks in.

As someone who works in both medicine and tech, I see the incredible potential of AI. But I also see its limitations—and its dangers. So next time you’re tempted to spill your guts to a chatbot, pause for a second. Ask yourself: Is this worth the risk? Chances are, the answer will be no.

Because at the heart of it all, your health is yours alone. Protect it fiercely.