21 ChatGPT Alternatives: A Look at Free, Self-Hosted, Open-Source AI Chatbots

Open-Source, Free, Self-Hosted AI Chatbots and ChatGPT Alternatives

ChatGPT has taken the internet by storm, and numerous open-source projects now offer similar chat experiences or OpenAI-compatible interfaces. Many of them can be self-hosted, which lets users keep their data private and under their own control.

What is an AI ChatBot?

An AI ChatBot is a computer program that uses artificial intelligence to simulate human conversation.

Because it can understand and respond to both written and spoken language, it is widely used in customer service to answer questions, deliver information, and carry out simple tasks.

Benefits of AI ChatBots

- 24/7 availability: AI ChatBots can provide round-the-clock support, answering customer queries at any time.

- Cost-effective: They reduce the need for a large customer service team, helping to lower costs.

- Instant responses: AI ChatBots can respond to customer queries instantly, improving customer satisfaction.

- Scalability: They can handle a large number of queries simultaneously, allowing businesses to scale their customer service efforts easily.

Use-cases for AI ChatBots

- Customer support: They can provide instant answers to frequently asked questions, resolve complaints, or guide users through processes.

- Sales and marketing: AI ChatBots can recommend products, share promotional offers, or help customers through the buying process.

- Data collection: They can gather customer feedback, helping businesses understand their customers better and improve their products or services.

New Players

While major companies such as Google (Gemini) and Microsoft (Copilot) have released their own competing products, many people are unaware that they can operate their own system and run various AI models in the backend.

In this post, we list free solutions that allow you to run your own AI chatbot on a server or on your local machine.

1. Tabby

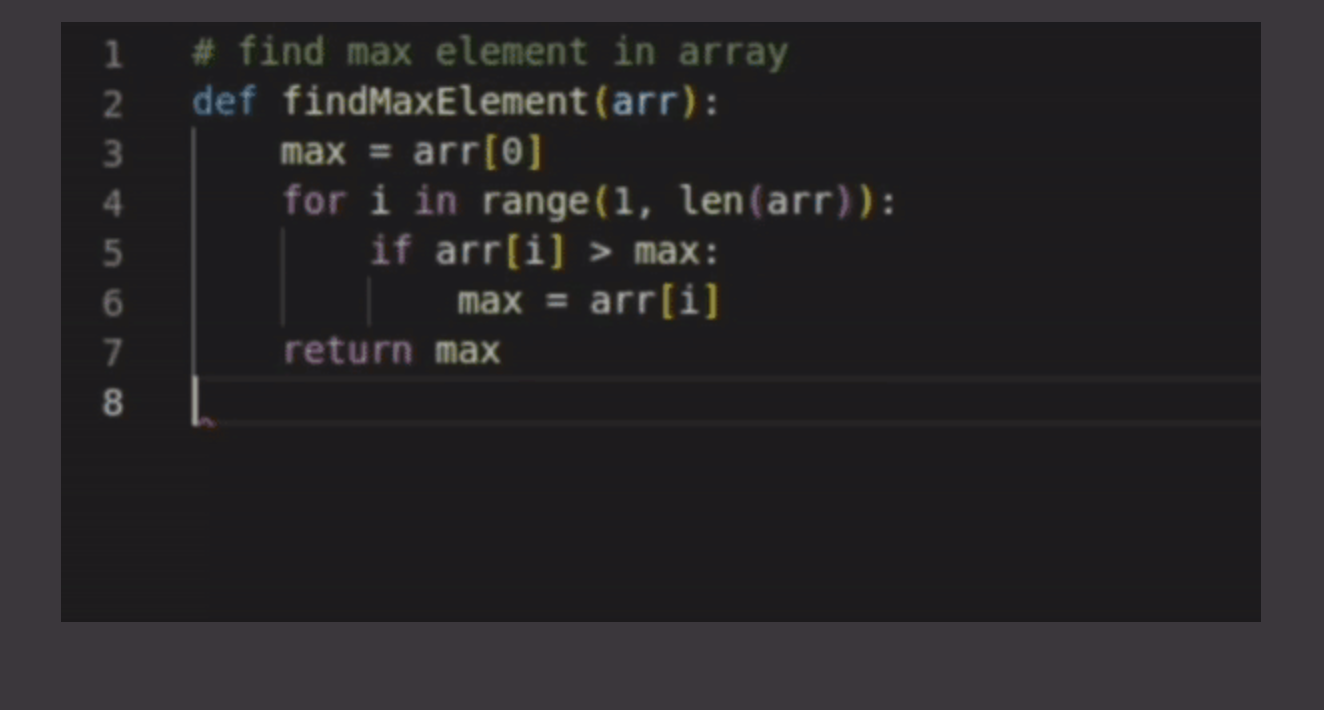

Tabby is a self-hosted AI coding assistant, serving as a viable open-source and on-premises alternative to GitHub Copilot. It presents several appealing features that make it a competitive choice.

Firstly, it is self-contained, eliminating the need for a Database Management System (DBMS) or any cloud service, which significantly streamlines its installation and operation. Secondly, it comes with an OpenAPI interface, simplifying the integration process with existing infrastructure, such as a Cloud Integrated Development Environment (IDE). This flexibility makes it adaptable to various workflows and systems. Thirdly, it supports consumer-grade Graphics Processing Units (GPUs), making it accessible for a broad range of users.

Lastly, the ease of installation on various platforms, including local machines or servers like DigitalOcean, enhances its user-friendliness. This combination of features positions Tabby as a practical and efficient tool for those seeking a self-hosted AI coding assistant.

2. Self-Hosted AI

The Self-Hosted AI project exposes an API that is compatible with OpenAI's, which makes it easy to adapt existing open-source projects to it. The developer encourages others to build similarly compatible API interfaces to promote the growth of related applications.

Project packages can be downloaded from the project's releases, including an 8MB online installation program. The project is based on ChatGLM and works offline. The model built into the package is a trimmed version of the open-source ChatGLM project, licensed under Apache-2.0. After unzipping, users can run the model file directly.

Users can also host the model themselves. They can go to Hugging Face, duplicate the Space, switch the hardware to the second CPU option (8 cores) for a faster experience, and then use their own Space URL as the API address.
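Because the API mirrors OpenAI's, a client usually only needs a different base URL. The following is a minimal sketch of that idea, not the project's own client: it assumes the server exposes the standard OpenAI path `/v1/chat/completions`, and the function name `build_chat_request`, the model name `local-model`, and the `localhost` address are hypothetical placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url, user_message, model="local-model", api_key="not-needed"):
    """Build (but do not send) a chat-completions request for an OpenAI-style API."""
    # Assumption: the server mirrors OpenAI's /v1/chat/completions route.
    url = base_url.rstrip("/") + "/v1/chat/completions"
    payload = {
        "model": model,  # hypothetical model name; use whatever your server reports
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Many self-hosted servers ignore the key, but OpenAI clients always send one.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Hypothetical self-hosted address; replace it with your own server or Space URL.
request = build_chat_request("http://localhost:8000", "Hello!")
print(request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

Keeping the base URL as a parameter means the same code can target a local server, a hosted Space, or the official API without other changes.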

The project also includes a built-in interactive command-line program, modified to support Unicode input on Windows. The built-in model for this program comes from Chinese-LLaMA-Alpaca. Users who already have the model can simply replace the existing file.

Moreover, this project supports AI Art, using Stable Diffusion, and comes with integrated apps, broadening its applicability and functionality.

3. LlamaGPT

LlamaGPT is a self-hosted, fully offline chatbot, similar to ChatGPT, that is powered by Llama 2. It emphasizes privacy with all data remaining on the user's device, ensuring no data transfer occurs. Recently, it has added support for Code Llama models and Nvidia GPUs.

LlamaGPT currently provides support for several models, with plans to facilitate custom models in the future. The supported models include various versions of the Nous Hermes Llama 2 Chat and Code Llama Chat, each with specific memory requirements and download sizes.

Installation of LlamaGPT is made user-friendly with varying instructions based on the user's device. For umbrelOS home server users, the installation is as simple as a one-click install from the Umbrel App Store. For users with an M1/M2 Mac, Docker and Xcode need to be pre-installed. LlamaGPT can also be installed on other devices using Docker.

4. Refact (Coding AI Assistant)

Refact WebUI is a useful repository for those interested in fine-tuning and self-hosting code models. Users can run these models inside Refact plugins for code completion and chat. The repo also lets users download and upload LoRAs, host several small models on a single GPU, and connect GPT models for chat using OpenAI and Anthropic keys.

Running Refact Self-Hosted is made simpler through the use of a pre-built Docker image. To use it, a user needs to install Docker with NVIDIA GPU support - Windows users will need to install WSL 2 first.

The Docker container can be run with a specific command provided within the repo. A notable feature here is the 'perm-storage' volume that is mounted inside the container. It stores all configuration files, downloaded weights, and logs, which are retained even when the Docker container is upgraded or deleted.

At the end of the process, users can access the server Web GUI by visiting a specific URL. This makes the platform user-friendly and easy to navigate. Overall, Refact WebUI is a comprehensive tool for individuals interested in exploring code modelling.

5. Serge - LLaMA made easy 🦙

Serge is a user-friendly, self-hosted chat interface developed with llama.cpp for running GGUF models. It eliminates the need for API keys, adding to its privacy and security features.

Serge operates with a SvelteKit frontend and uses Redis for storing chat history and parameters. The API is designed with FastAPI + LangChain, wrapping calls to llama.cpp using Python bindings.

For quick setup, Serge offers a Docker command. To run it, users need to ensure they have Docker Desktop installed, WSL2 configured, and sufficient free RAM to run models.

In addition to Docker, Serge can also be set up on Kubernetes, with instructions available in the wiki.

The chat interface supports a variety of AI models, including Alfred 40B-1023, among others. This extensive model support enhances its versatility and usability across different applications.

6. Fuse.ai

FuseAI is a free, self-hosted, open-source web application designed to interact with OpenAI APIs. It currently supports ChatGPT, giving users direct access to the model. Future updates plan to add support for DALL·E and Whisper.

Developed using a robust tech stack including Prisma, tRPC, NextJS, TypeScript, SQLite, and the Mantine React component library, FuseAI combines the power of these technologies to deliver a seamless user experience.

One of its defining features is its ease of installation. Users can quickly deploy FuseAI using Docker, a platform that packages applications into standardized units for software development. This feature significantly simplifies the setup process, making FuseAI a user-friendly choice for those seeking to interact with OpenAI APIs in a self-hosted environment.

7. GPT4All

GPT4All is privacy-centric software designed for chatting with large language models directly from your computer. This powerful ecosystem enables running customized large language models, working seamlessly on consumer-grade CPUs, and both NVIDIA and AMD GPUs. However, it should be noted that the user's CPU needs to support AVX instructions.
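One way to check the AVX requirement on an x86 Linux machine is to look at the CPU flags the kernel reports. This is a rough sketch rather than anything GPT4All ships: the helper names `has_avx` and `check_this_machine` are made up for illustration, and the check relies on the `flags` line found in `/proc/cpuinfo` on x86 Linux, so it does not apply to Windows, macOS, or ARM systems.

```python
def has_avx(cpuinfo_text):
    """Return True if any 'flags' line in /proc/cpuinfo-style text lists avx."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        # Compare whole tokens so that 'avx2' or 'avx512f' alone do not count as 'avx'.
        if key.strip() == "flags" and "avx" in value.split():
            return True
    return False


def check_this_machine(path="/proc/cpuinfo"):
    """Linux-only wrapper; returns None when the file cannot be read."""
    try:
        with open(path, encoding="utf-8") as handle:
            return has_avx(handle.read())
    except OSError:
        return None


print(check_this_machine())
```

A result of `None` only means the file was not available, not that AVX is missing.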

Each GPT4All model is a 3GB - 8GB file that can be easily downloaded and plugged into the GPT4All software. Nomic AI supports and maintains this software ecosystem, ensuring its quality and security. They also lead the efforts to make it possible for any individual or enterprise to deploy their own on-edge large language models.

GPT4All has seen various updates, including the launch of GGUF Support, the addition of local LLM inference on NVIDIA and AMD GPUs, and Docker-based API server launch, among others. It also provides stable support for LocalDocs, a feature that allows you to chat with your data privately and locally.

The GPT4All Chat UI can be built from source by following the instructions provided. GPT4All also offers official Python and TypeScript bindings, making it easier to integrate with various platforms.

The software's integrations include LangChain and the Weaviate Vector Database, making it a versatile tool for users. As an open-source project, GPT4All also welcomes contributions from the developer community.

8. LocalAI

LocalAI is a free, open-source alternative to OpenAI. It functions as a drop-in replacement REST API that is compatible with the OpenAI, ElevenLabs, and Anthropic API specifications. LocalAI is designed for local AI inferencing, allowing users to run Large Language Models (LLMs) and generate images and audio locally or on-premises using consumer-grade hardware. It supports multiple model families and doesn't require a GPU, making it accessible to a wide range of users.

LocalAI is developed and maintained by Ettore Di Giacinto. Its roadmap includes improvements to the WebUI, the development of a Reranker API, and more. It also supports a multitude of features, such as text generation with GPT-style models, text-to-audio and audio-to-text conversion, image generation with Stable Diffusion, and more.

Moreover, it offers functionality such as embeddings generation for vector databases, constrained grammars, and the ability to download models directly from Hugging Face. It also includes a Vision API and a new Reranker API.
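To illustrate what embeddings for a vector database are used for, here is a small sketch that compares vectors by cosine similarity. It assumes the response follows the OpenAI embeddings shape (a `data` list whose items carry `index` and `embedding`); the `sample` response and the helper names are invented for illustration and do not come from LocalAI.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def vectors_from_response(response):
    """Extract embedding vectors, ordered by index, from an OpenAI-style response."""
    items = sorted(response["data"], key=lambda item: item["index"])
    return [item["embedding"] for item in items]


# Made-up response for illustration; real vectors have hundreds of dimensions or more.
sample = {
    "object": "list",
    "data": [
        {"object": "embedding", "index": 0, "embedding": [1.0, 0.0]},
        {"object": "embedding", "index": 1, "embedding": [0.0, 1.0]},
        {"object": "embedding", "index": 2, "embedding": [2.0, 0.0]},
    ],
}
query, doc_a, doc_b = vectors_from_response(sample)
print(cosine_similarity(query, doc_a), cosine_similarity(query, doc_b))
```

A vector database performs essentially this comparison at scale, returning the stored items whose vectors score highest against the query.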

In summary, LocalAI is a versatile tool designed to provide a comprehensive set of AI capabilities in a local environment, offering an open-source alternative to other AI platforms.

9. Biniou

Biniou is a versatile, self-hosted web interface for various types of Generative Artificial Intelligence (GenAI). It allows users to generate multimedia content and chat with AI on their own devices without the need for a dedicated GPU. Biniou can operate offline once deployed and models are downloaded. This software is compatible with numerous operating systems, including GNU/Linux, Windows, and macOS (experimentally), and it can also be run on Docker.

The application includes text generation capabilities using llama-cpp based chatbot modules, Llava multimodal chatbot modules, Microsoft GIT image captioning modules, Whisper speech-to-text modules, and nllb translation modules, which support 200 languages. Image generation and modification features are provided through multiple modules, including Stable Diffusion, Kandinsky, Latent Consistency Models, Midjourney-mini, PixArt-Alpha, and several others.

Biniou also offers audio generation using the MusicGen, MusicLDM, Audiogen, Harmonai, and Bark modules. Video generation and modification functionalities are available through the Modelscope, Text2Video-Zero, AnimateDiff, Stable Video Diffusion, and Video Instruct-Pix2Pix modules. Biniou supports 3D object generation using the Shap-E txt2shape and img2shape modules.

One of the standout features of Biniou is its zero-configuration installation through one-click installers or Windows executables. All required components for running Biniou are installed automatically, either at install time or on first use, making it user-friendly. Biniou also supports CUDA and ROCm and is compatible with various models.

In summary, Biniou is a comprehensive, user-friendly tool offering several AI capabilities in a self-hosted environment, making it an attractive choice for those looking for an alternative to other AI platforms.

10. OwnAI

OwnAI is an open-source platform that enables you to host and manage your own AI applications. Built with Python and the Flask framework, it offers a web interface for AI interaction. The platform supports AI customization to cater to specific needs and provides a flexible environment for AI projects.

Furthermore, it allows users to create and manage additional knowledge for their AIs. For those seeking a demo or a managed private cloud service, OwnAI offers both through its website. The platform is community-driven, reinforcing its open-source nature.

11. GerevAI

GerevAI/gerev is a free, self-hosted, AI-powered enterprise search engine. It allows users to find conversations, documents, or internal pages within seconds. It is designed for help desk technicians and can be integrated with platforms like Slack, Confluence, Jira, Google Drive, and more.

It supports multiple users, can run on GPU machines, and can be installed with Docker.

12. SimpleAI

SimpleAI is a self-hosted alternative to AI APIs, replicating main endpoints for LLM. It serves as a platform to experiment with new models, create benchmarks, and handle specific use cases without over-reliance on external services.

13. OpenOpenAI

OpenOpenAI is a self-hosted version of OpenAI's Assistants API, with all API route definitions and types auto-generated from OpenAI's official OpenAPI spec. This makes it easy to switch between the official API and a custom one.

The project supports using OpenAI Assistants with custom models, fully customizable RAG via the built-in retrieval tool, and self-hosting/on-premise deployments of Assistants. It also provides full control over assistant evaluations and sandboxed testing of custom Actions.
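Switching between the official API and a self-hosted one usually comes down to which base URL the client is given. The sketch below shows one way to make that choice configurable. It is an illustration rather than part of OpenOpenAI: the helper `resolve_base_url` is hypothetical, and using the `OPENAI_BASE_URL` environment variable for the override is an assumption made for this example.

```python
import os

OFFICIAL_BASE_URL = "https://api.openai.com/v1"


def resolve_base_url(env=None):
    """Return the override from OPENAI_BASE_URL if set, else the official base URL."""
    env = os.environ if env is None else env
    # Assumption: the override is supplied through an OPENAI_BASE_URL variable.
    override = env.get("OPENAI_BASE_URL", "").strip()
    return override.rstrip("/") if override else OFFICIAL_BASE_URL


# Hypothetical local address, passed explicitly so the output does not depend on the shell.
print(resolve_base_url({}))
print(resolve_base_url({"OPENAI_BASE_URL": "http://localhost:3000/v1/"}))
```

With the URL resolved in one place, the rest of the application does not need to know which backend it is talking to.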

14. ChatGPT (Desktop)

The open-sourced ChatGPT Desktop Application has gained attention but faces development issues. Features include multi-platform support, text-to-speech, export options, upgrade notifications, shortcut keys, system tray hover window, menu items, slash commands, global shortcuts, and pop-up search.

However, due to Tauri's security restrictions, some action buttons may not work.

15. Quivr

Quivr, a personal productivity assistant, leverages GenerativeAI to function as a second brain. It offers rapid access to data, user control over data, compatibility with various operating systems and file types, and an open-source platform.

Users can share their 'brains' publicly or privately, and there's a marketplace for sharing and using others' 'brains'. Quivr also operates offline for constant data access.

Quivr is compatible with Ubuntu 22 or newer.

16. Lobe Chat

LobeChat, an open-source AI chat framework, now supports multiple AI providers and modalities, including OpenAI's latest gpt-4-vision model for visual recognition. It allows users to upload images for intelligent conversation based on their content.

The application also supports Text-to-Speech (TTS) and Speech-to-Text (STT) technologies, offering a range of high-quality voice options.

Furthermore, LobeChat has integrated text-to-image generation, enabling users to create images within conversations using AI tools like DALL-E 3, Midjourney, and Pollinations.

It also comes with a plugin system, and supports many AI models out of the box.

17. AgentGPT

AgentGPT allows for the configuration and deployment of Autonomous AI agents. The project comes with an automatic setup CLI that sets up environment variables, database, backend, and frontend. Prerequisites include an editor, Node.js, Git, Docker, an OpenAI API key, and optional Serper and Replicate API keys.

The tech stack includes Next.js 13, TypeScript, FastAPI, Next-Auth.js, Prisma & SQLModel, Planetscale, TailwindCSS, HeadlessUI, Zod, Pydantic, and LangChain.

18. Jan

Jan is a free, offline AI chatbot that emphasizes privacy and security and supports multiple inference engines. It runs on a range of hardware and platforms, including Nvidia GPUs, Apple M-series and Intel Macs, Debian-based Linux, and Windows x64.

Jan also provides an OpenAI-equivalent API server, easy installation via Docker, and supports remote API and cross-platform functionality.

19. Go OpenAI

Go OpenAI is an unofficial Golang library that provides clients for the OpenAI API. This library supports various OpenAI offerings such as ChatGPT, GPT-3, GPT-4, DALL·E 2, and Whisper. It's designed for developers who want to leverage OpenAI's capabilities within their own Golang applications.

Installation of this library is straightforward with the Go package manager, using the command go get github.com/sashabaranov/go-openai. The only prerequisite is that the development environment must be running Go version 1.18 or greater.

By utilizing this library, developers can build interactive AI solutions with ease, harnessing the power of OpenAI's advanced models directly in their Golang applications.

20. Dalai

Dalai is a self-hosted application that enables users to run LLaMA and Alpaca AI models on their computers or servers. It is powered by llama.cpp, llama-dl CDN, and alpaca.cpp, ensuring efficient and reliable operation. The application also comes with a hackable web app, providing users with the flexibility to customize and adapt the application to their specific needs.

Moreover, Dalai includes a JavaScript API and a Socket.io API, offering developers a wide range of options for integrating the application into their existing systems or software.

The app is available for Windows, Linux and macOS.

21. The Ultimate ChatGPT

The Ultimate ChatGPT is an AI-powered virtual assistant offering personalized writing and problem-solving services. Key features include language translation, plagiarism detection, grammar correction, and text summarization.

It has a user-friendly interface, supports multiple languages, and can be integrated with other software and services. The app, written in Next.js and React, is ready for installation on the Vercel platform.