Open the Web to AI: 17 Free Apps for Running LLMs Online

Running LLMs on the Web? Yes, It Is Possible. Here Are 17 Solutions to Do That

What are LLMs, and Why Do They Matter?

LLMs, or Large Language Models, are advanced artificial intelligence systems capable of processing and generating human-like text based on enormous datasets. They understand context, answer questions, summarize content, and even write code!

Think of them as an incredibly smart companion that knows a little bit (or a lot) about almost everything.

At Medevel.com, we’ve consistently highlighted open-source LLMs and RAG solutions.

Why? Because we believe in democratizing technology. Open-source projects not only reduce reliance on proprietary tools but also promote transparency and innovation.

From lightweight LLMs that can run on modest hardware to powerful alternatives for enterprise use, our platform showcases it all.

With this ethos, we encourage businesses, educators, and developers to explore open-source LLMs, integrate them into their workflows, and harness their potential to revolutionize industries.

If you are looking for more AI, LLMs, RAG, and Machine Learning open-source apps, do not forget to check our list at the end of this post.

Running LLMs on the Web: Why It Matters

Hosting and running LLMs on the web isn’t just a tech fad; it’s a game-changer. Instead of relying on your local device’s limited resources, web-based LLMs allow you to tap into powerful servers optimized for speed and scalability.

This approach brings high-performance AI capabilities to users without requiring hefty downloads or expensive hardware.

It’s an inclusive model that makes AI accessible to everyone—from solo entrepreneurs to global corporations.

Use Cases for Running LLMs Online

The practical applications of web-hosted LLMs are as diverse as their capabilities. Here are just a few examples:

- Customer Support Automation: Replace static FAQ pages with dynamic chatbots that understand context and provide instant, accurate responses.

- Content Generation: Bloggers, marketers, and educators can draft articles, summaries, and presentations faster than ever.

- Code Assistance: Developers can integrate online LLMs into their IDEs for debugging, suggestions, or even full-fledged code snippets.

- Language Translation and Learning: Break language barriers with instant translations or personalized language tutoring.

- Data Analysis and Reporting: Transform raw data into digestible insights, summaries, or presentations in seconds.

Benefits of Running LLMs Online

Running LLMs online offers a host of compelling advantages, making them accessible, efficient, and highly adaptable. Here are the key benefits:

1. Scalability

Online platforms effortlessly handle varying workloads, from assisting a handful of users to managing the demands of a high-traffic e-commerce site. Whether you’re running a chatbot for customer support or a virtual assistant for enterprise solutions, scaling up or down is seamless.

2. Accessibility

Forget expensive GPUs and massive local storage. With web-based LLMs, all you need is a browser. This ensures that even resource-limited users can tap into cutting-edge AI capabilities without breaking the bank.

3. Cost-Efficiency

Shared online infrastructure lowers hosting expenses, making high-performance AI tools affordable for businesses of all sizes, including startups and small enterprises.

4. Seamless Integration

With APIs and web services, integrating LLMs into existing workflows is a breeze. Whether you need advanced customer support, automated content creation, or data analysis, online LLMs fit effortlessly into your systems.

5. Real-Time Updates

Centralized hosting ensures you’re always running the latest version, complete with new features, optimizations, and the highest security standards. No manual updates or maintenance required!

6. Enhanced Collaboration

Running LLMs online fosters teamwork like never before. Teams can simultaneously access the same tools, work on shared projects, and leverage AI to streamline communication, brainstorm ideas, and produce cohesive results.

This is particularly valuable for remote teams that need unified access to AI-driven solutions.

Open-source Web-ready LLMs Solutions

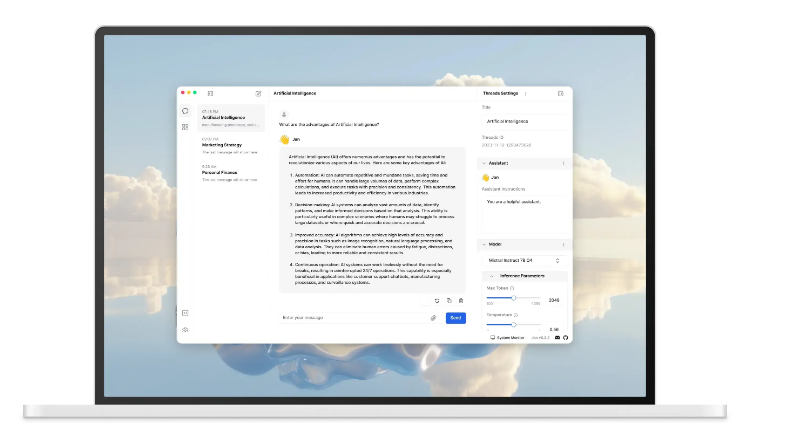

1- AnythingLLM: Your All-in-One Solution for LLM Magic

AnythingLLM is a super-friendly and open-source platform designed to make working with Large Language Models (LLMs) a breeze. Whether you're looking to set up chatbots, process documents, or integrate LLMs into your existing workflows, AnythingLLM has got you covered.

This project simplifies everything—just feed it data, and it’ll automatically index and process it for you. You can interact with your custom AI assistant via a sleek interface or API, making it perfect for both non-tech users and developers.

Want to bring your own LLM (BYOLL)? No problem! It’s designed to work with popular LLMs like OpenAI's GPT models or your preferred alternatives.

Why wait? Dive in, explore the features, and start building your AI-powered applications today!

Features

- Custom AI Agents: Build agents to browse the web, run code, and more.

- Multi-modal Support: Works with both closed and open-source LLMs.

- Multi-User & Permissions: Supports multiple users with role management (Docker only).

- Embeddable Chat Widget: Add a chat widget to your website (Docker only).

- Document Support: Handles PDFs, TXT, DOCX, and more.

- User-Friendly Chat UI: Drag-n-drop functionality with clear citations.

- Cloud Ready: Fully deployable in the cloud.

- LLM Compatibility: Supports OpenAI, Azure, AWS Bedrock, Google Gemini Pro, Hugging Face, and many more.

- Embedder Models: Includes AnythingLLM Native Embedder, OpenAI, Azure, and others.

- Speech Models: Built-in audio transcription, TTS, and STT options.

- Vector Databases: Compatible with LanceDB, Pinecone, Chroma, and more.

- Developer API: Full API support for custom integrations.

- Efficiency: Optimized for cost and time savings with large documents.

2- LibreChat

LibreChat is an open-source AI platform designed to provide the power of ChatGPT without compromising on privacy or control. Built for flexibility and freedom, LibreChat empowers you to deploy AI capabilities on your terms—whether locally or in the cloud.

What makes LibreChat stand out? It’s not just an alternative; it’s an improvement. You get all the features you expect from a ChatGPT-like system, but with full transparency, customization, and the ability to integrate with both open-source and proprietary LLMs. Plus, it's entirely open source, ensuring your data stays yours.

LibreChat isn’t just another AI chat platform; it’s a step toward democratizing AI.

Whether you’re a developer, business, or AI enthusiast, LibreChat gives you the tools to harness the power of language models on your terms. Install it today and take control of your AI experience!

Personally, I have been using it to develop some AI-based products in the past few weeks and it is amazing.

3- LobeChat

AI belongs to everyone, and LobeChat makes sure of it. It is not just another chatbot; it's a movement. This open-source platform reimagines what conversational AI can do, giving users full control over their data, workflows, and integrations.

Forget the limits of commercial platforms. With LobeChat, you get a powerful, customizable, and community-driven solution that grows with you. Why settle for a black box when you can have the full blueprint?

Why LobeChat Stands Out

- Total Control: Host it on your own infrastructure—local or cloud—and stay in charge of your data.

- Multi-Model Support: Seamlessly integrates with leading LLMs like OpenAI, Anthropic, Hugging Face, and community-built models.

- Extensible Features: Leverage APIs to create, innovate, and scale your AI-driven projects.

- Multi-User Ready: Perfect for teams with built-in user management and permissions.

- Sleek Interface: Designed with simplicity and usability in mind—no clunky menus or distractions.

- 100% Open Source: No hidden fees, no locked-down features—just AI freedom.

4- Louis Open WebUI (Formerly Ollama WebUI)

Louis is an open-source AI-powered code assistant designed to simplify programming tasks and boost productivity. Supporting multiple programming languages, Louis helps with code generation, debugging, and refactoring right in your editor.

With seamless integration, an intuitive interface, and the power of AI, it’s the perfect tool for developers who want smarter, faster coding without relying on proprietary solutions.

5- Nextjs Ollama LLM UI

Next.js Ollama LLM UI offers a clean, user-friendly interface for interacting with large language models.

Built with Next.js, it’s perfect for creating a modern, customizable frontend to enhance your AI-powered applications. Whether for personal or professional use, it makes working with LLMs effortless and visually appealing!

Why Next.js Ollama LLM UI Stands Out?

- Beautiful & intuitive UI: Inspired by ChatGPT, so the user experience feels familiar.

- Fully local: Stores chats in localstorage for convenience. No need to run a database.

- Fully responsive: Use your phone to chat, with the same ease as on desktop.

- Easy setup: No tedious and annoying setup required. Just clone the repo and you're good to go!

- Code syntax highlighting: Messages that include code are highlighted for easy reading.

- Copy codeblocks easily: Easily copy the highlighted code with one click.

- Download/Pull & Delete models: Easily download and delete models directly from the interface.

- Switch between models: Switch between models fast with a click.

- Chat history: Chats are saved and easily accessed.

- Light & Dark mode: Switch between light & dark mode.

6- WebLLM: High-Performance LLMs Right in Your Browser

WebLLM is a cutting-edge, in-browser LLM inference engine that brings language model capabilities directly to your web browser—no servers required. Powered by WebGPU, it delivers hardware-accelerated performance entirely within your browser, ensuring speed, privacy, and seamless operation.

Fully compatible with the OpenAI API, WebLLM lets you run open-source models locally while enjoying features like streaming, JSON-mode, and function-calling (coming soon). It’s a game-changer for building AI assistants that prioritize privacy and GPU-powered efficiency.

With WebLLM, you unlock endless possibilities to create powerful AI solutions—all from the comfort of your browser.

Key Features for WebLLM

- In-Browser Inference: Execute language model tasks entirely within the browser, ensuring privacy and responsiveness.

- OpenAI API Compatibility: Utilize OpenAI-compatible APIs for functionalities like streaming, JSON-mode, and function calling.

- Extensive Model Support: Supports a variety of models, including Llama 3, Phi 3, Gemma, Mistral, and Qwen (通义千问).

- Custom Model Integration: Deploy custom models in MLC format to tailor the engine to specific needs.

- Streaming & Real-Time Interactions: Facilitates real-time output generation, enhancing interactive applications.

- Web Worker & Service Worker Support: Offload computations to separate threads to optimize UI performance.

- Chrome Extension Support: Extend browser functionality through custom extensions built with WebLLM.

7- Any-LLM

Any-LLM is a React (MERN) ChatGPT / GPT-4 template for using any OpenAI language model, e.g. GPT-3, GPT-4, Davinci, DALL-E, and more. Enjoy the benefits of GPT-4, upload images with your chat, and save your chats in a database for later.

Features

- 🖥️ Intuitive Interface: A user-friendly interface that simplifies the chat experience.

- 💻 Code Syntax Highlighting: Code readability with syntax highlighting feature.

- 🤖 Multiple Model Support: Seamlessly switch between different chat models.

- 💬 Chat History: Remembers the conversation and keeps track of the topic you are discussing.

- 📜 Chat Store: Chats are saved in the database and can be accessed later.

- 🎨🤖 Generate Images: Image generation capabilities using DALL-E.

- ⬆️ Attach Images: Upload images for code and text generation.

8- Text Generation WebUI

Text Generation WebUI is a Gradio-based, self-hosted web interface designed for seamless interaction with Large Language Models (LLMs). It empowers anyone to generate text-based responses effortlessly while offering compatibility with a wide range of LLMs.

Packed with useful features, it’s the perfect solution for AI enthusiasts and developers looking for an easy-to-use, customizable platform.

It is ideal for creating text, writing plans, and policies.

Features

- Flexible Chat Modes: Choose from instruct, chat-instruct, or chat, each with tailored prompt templates.

- OpenAI-Compatible API: Includes Chat and Completions endpoints for seamless integration.

- Dynamic Prompt Formatting: Leverages Jinja2 templates for automatic prompt structuring.

- Extension Support: Comes with built-in and community-contributed extensions for added functionality.

- Advanced Generation Controls: Offers multiple sampling parameters and customizable options.

- Multi-Backend Support: Works with Transformers, llama.cpp, ExLlamaV2, TensorRT-LLM, AutoGPTQ, AutoAWQ, HQQ, and AQLM (manual installation required for some).

- Simple Fine-Tuning: Built-in LoRA fine-tuning tool for custom model training.

- Conversation Management: "Past chats" menu for quick switching and notebook-style free-form text generation.

- Model Flexibility: Switch between models directly in the UI without restarting.

- Self-Contained Setup: Dependencies are installed in a separate directory to avoid system conflicts.
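To make the "OpenAI-Compatible API" feature above concrete, here is a minimal Python sketch of what a chat request to such an endpoint could look like. The base URL and port are assumptions, not values taken from this article, so check your own server's settings; the helper names `build_chat_request` and `extract_reply` are hypothetical. The sketch only builds the request, so it runs without a server.

```python
import json
import urllib.request

# Assumption: the server listens locally on this address; adjust to your setup.
BASE_URL = "http://127.0.0.1:5000/v1"


def build_chat_request(base_url, messages, model=None, max_tokens=200):
    """Build (but do not send) a POST request for an OpenAI-style chat endpoint."""
    payload = {"messages": messages, "max_tokens": max_tokens}
    if model is not None:
        # Some self-hosted servers ignore this field and use the loaded model.
        payload["model"] = model
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-style chat completion response."""
    return response_body["choices"][0]["message"]["content"]


request = build_chat_request(
    BASE_URL, [{"role": "user", "content": "Write a one-line privacy policy."}]
)
print(request.full_url)

# To actually send it (requires a running server with its API enabled):
# with urllib.request.urlopen(request) as resp:
#     print(extract_reply(json.load(resp)))
```

Because the request shape follows the OpenAI chat schema, the same sketch should work against other OpenAI-compatible servers by changing only `BASE_URL`.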

9- LoLLMs (Lord of Large Language Multimodal Systems)

LoLLMS WebUI is a self-hosted, user-friendly interface designed to bring AI text generation to everyone.

It supports a wide range of LLM backends, including llama.cpp and Transformers, while offering flexible chat modes, OpenAI-compatible APIs, and advanced customization options.

With easy model switching, built-in fine-tuning, and extension support, it’s the perfect solution for developers and AI enthusiasts who want a powerful, versatile, and accessible platform to explore the capabilities of language models.

Features

- Choose your preferred binding, model, and personality for your tasks

- Enhance your emails, essays, code debugging, thought organization, and more

- Explore a wide range of functionalities, such as searching, data organization, image generation, and music generation

- Easy-to-use UI with light and dark mode options

- Integration with GitHub repository for easy access

- Support for different personalities with predefined welcome messages

- Thumb up/down rating for generated answers

- Copy, edit, and remove messages

- Local database storage for your discussions

- Search, export, and delete multiple discussions

- Support for image/video generation based on stable diffusion

- Support for music generation based on musicgen

- Support for multi generation peer to peer network through Lollms Nodes and Petals.

- Support for Docker, conda, and manual virtual environment setups

- Support for LM Studio as a backend

- Support for Ollama as a backend

- Support for vllm as a backend

- Support for prompt Routing to various models depending on the complexity of the task
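The last feature, routing prompts to different models depending on task complexity, can be illustrated with a small Python sketch. This is not LoLLMs' actual routing logic; the model names, keyword list, and scoring rule below are all hypothetical and only show the general idea of sending easy prompts to a cheaper model.

```python
# Hypothetical model names; replace with models you have installed.
SMALL_MODEL = "small-local-model"
LARGE_MODEL = "large-local-model"

# Assumed signals that a prompt needs more reasoning capacity.
HARD_KEYWORDS = ("prove", "debug", "refactor", "step by step", "analyze")


def estimate_complexity(prompt):
    """Crude score: one point per 50 words plus two per 'hard' keyword found."""
    text = prompt.lower()
    length_score = len(text.split()) // 50
    keyword_score = 2 * sum(1 for kw in HARD_KEYWORDS if kw in text)
    return length_score + keyword_score


def route(prompt, threshold=2):
    """Pick the large model when the score reaches the threshold."""
    if estimate_complexity(prompt) >= threshold:
        return LARGE_MODEL
    return SMALL_MODEL


print(route("What is the capital of France?"))
print(route("Debug this function and explain the fix step by step."))
```

A real router would likely use a classifier or the model's own judgment instead of keywords, but the control flow stays the same: score the prompt, then choose a backend.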

10- llm-webui

Yet another Gradio-based LLM interface that you can self-host and use for free. It comes with a built-in fine-tuning engine and RAG (retrieval-augmented generation) that supports document files, and it works with online and offline models and datasets.

Key Features for LLM-WebUI

- Fine-Tuning with LoRA/QLoRA: Fine-tune models to your specific needs, enabling precise and customized AI performance for niche applications.

Benefit: Enhance model accuracy and adapt it to specialized tasks effortlessly.

- Retrieval-Augmented Generation (RAG): Combine external data sources with LLMs to generate more accurate and contextually relevant responses.

Benefit: Boosts the AI's ability to provide factually correct and context-rich outputs.

- Document Support (TXT, PDF, DOCX): Upload and process a variety of document formats directly in the UI.

Benefit: Seamlessly extract and use information from different file types for AI interactions.

- Retrieved Chunk Display: View the data chunks retrieved during RAG for better understanding and transparency.

Benefit: Helps validate and refine AI responses based on sourced data.

- Support for Fine-Tuned Models: Use pre-trained models tailored to specific domains or tasks.

Benefit: Accelerates deployment for specialized use cases without starting from scratch.

- Training Tracking and Visualization: Monitor and visualize model training progress through an intuitive interface.

Benefit: Streamlines debugging and optimization during fine-tuning or training.

- Prompt Template Configuration via UI: Easily design and configure prompt templates directly through the interface.

Benefit: Simplifies crafting effective inputs to guide AI behavior.

- Online and Offline Model Support: Use models hosted locally or accessed through online services.

Benefit: Ensures flexibility for privacy-focused tasks or high-performance cloud integrations.

- Online and Offline Dataset Support: Work with datasets stored locally or retrieved from online sources.

Benefit: Provides versatility for training, testing, or enhancing AI capabilities based on user requirements.

These features make llm-webui a robust and adaptable platform for AI-powered workflows!
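For readers new to RAG, the retrieve-then-prompt flow described in the feature list can be sketched in a few lines of Python. This is a simplified illustration, not how llm-webui implements it: it ranks chunks by plain word overlap instead of embedding vectors, and the sample documents and function names are made up for the example.

```python
import re


def split_into_chunks(text, max_words=40):
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, chunks, top_k=2):
    """Rank chunks by how many question words they share; return the best top_k."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(question, retrieved):
    """Place the retrieved chunks ahead of the question, numbered for citation."""
    context = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(retrieved))
    return f"Answer using only the context below.\n{context}\n\nQuestion: {question}"


chunks = [
    "A refund is accepted within 30 days of purchase.",
    "Shipping takes five business days within the country.",
    "Support is available by email on weekdays.",
]
question = "How many days do I have to request a refund?"
top = retrieve(question, chunks, top_k=1)
print(top)  # the retrieved chunk, comparable to a "retrieved chunk display"
print(build_prompt(question, top))
```

Printing the retrieved chunks before sending the prompt is the same transparency idea as a retrieved chunk display: you can see which source text the model was given.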

11- LLMatic

Node-LLMatic is a minimalist, open-source server for hosting Large Language Models (LLMs) with ease. Designed for Node.js, it offers a simple, efficient way to run AI-powered applications locally or in the cloud.

12- OpenLLM

OpenLLM makes it effortless for developers to run open-source language models or custom models as OpenAI-compatible APIs—all with just one command. It features a sleek built-in chat UI, cutting-edge inference backends, and a simplified workflow for enterprise-grade cloud deployments using Docker, Kubernetes, and BentoCloud.

Supported Models:

OpenLLM supports a wide range of popular and powerful models, including:

- Llama 2

- Falcon

- Mistral

- StableLM

- Dolly V2

- ChatGLM

- Flan-T5

- StarCoder

- SantaCoder

- GPT-NeoX

- Vicuna

- Baichuan

Whether you’re building a custom chatbot, deploying AI at scale, or experimenting with the latest open-source LLMs, OpenLLM provides the tools to do it quickly and efficiently.
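Since OpenLLM exposes models as OpenAI-compatible APIs, a client can consume streamed replies the same way it would from OpenAI. The Python sketch below shows how such a stream could be reassembled; it assumes the common OpenAI streaming format (`data:` lines carrying JSON chunks, ending with `data: [DONE]`) and uses hand-written sample lines rather than a live server. The function name `collect_stream` is hypothetical.

```python
import json


def collect_stream(lines):
    """Join the text deltas from OpenAI-style streaming lines into one string."""
    parts = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and anything that is not data
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(data)
        choices = chunk.get("choices") or []
        if not choices:
            continue  # some chunks carry metadata only
        delta = choices[0].get("delta", {})
        parts.append(delta.get("content") or "")
    return "".join(parts)


# Sample lines in the shape an OpenAI-compatible server streams back.
sample = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "Hello"}}]}',
    'data: {"choices": [{"delta": {"content": ", world"}}]}',
    "data: [DONE]",
]
print(collect_stream(sample))  # prints: Hello, world
```

In a real client, `lines` would come from iterating over the HTTP response body of a request sent with `"stream": true`.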

13- WebLLM Assistant (Browser-based)

WebLLM Assistant brings the power of an AI agent directly to your browser! Powered by WebGPU and WebLLM, this app runs completely inside your browser and ensures 100% data privacy while providing seamless AI assistance as you browse the internet.

14- Web-LLM Assistant

Web LLM Assistant is a feature-packed, self-hosted web interface that combines the power of LlamaCpp and Ollama.

It enables seamless interaction with language models directly in your browser, supports flexible model integration, and prioritizes user privacy. Perfect for AI enthusiasts and developers looking for a customizable and private LLM experience!

Features

- Local LLM usage via llama_cpp or ollama.

- Web scraping of search results for full information for the LLM to utilise

- Web searching using DuckDuckGo for privacy-focused searching for pages for scraping

- Self-improving search mechanism that refines queries based on initial results

- Rich console output with colorful and animated indicators for better user experience

- Multi-attempt searching with intelligent evaluation of search results

- Comprehensive answer synthesis using LLM knowledge, web search results, and scraped information from the webpages the LLM selects

15- OpenPlayground

OpenPlayground is an open-source platform for testing, comparing, and fine-tuning various AI models in a user-friendly environment.

Features

- Use any model from OpenAI, Anthropic, Cohere, Forefront, HuggingFace, Aleph Alpha, Replicate, Banana and llama.cpp.

- Full playground UI, including history, parameter tuning, keyboard shortcuts, and logprobs.

- Compare models side-by-side with the same prompt, individually tune model parameters, and retry with different parameters.

- Automatically detects local models in your HuggingFace cache, and lets you install new ones.

- Works OK on your phone.

- Probably won't kill everyone.

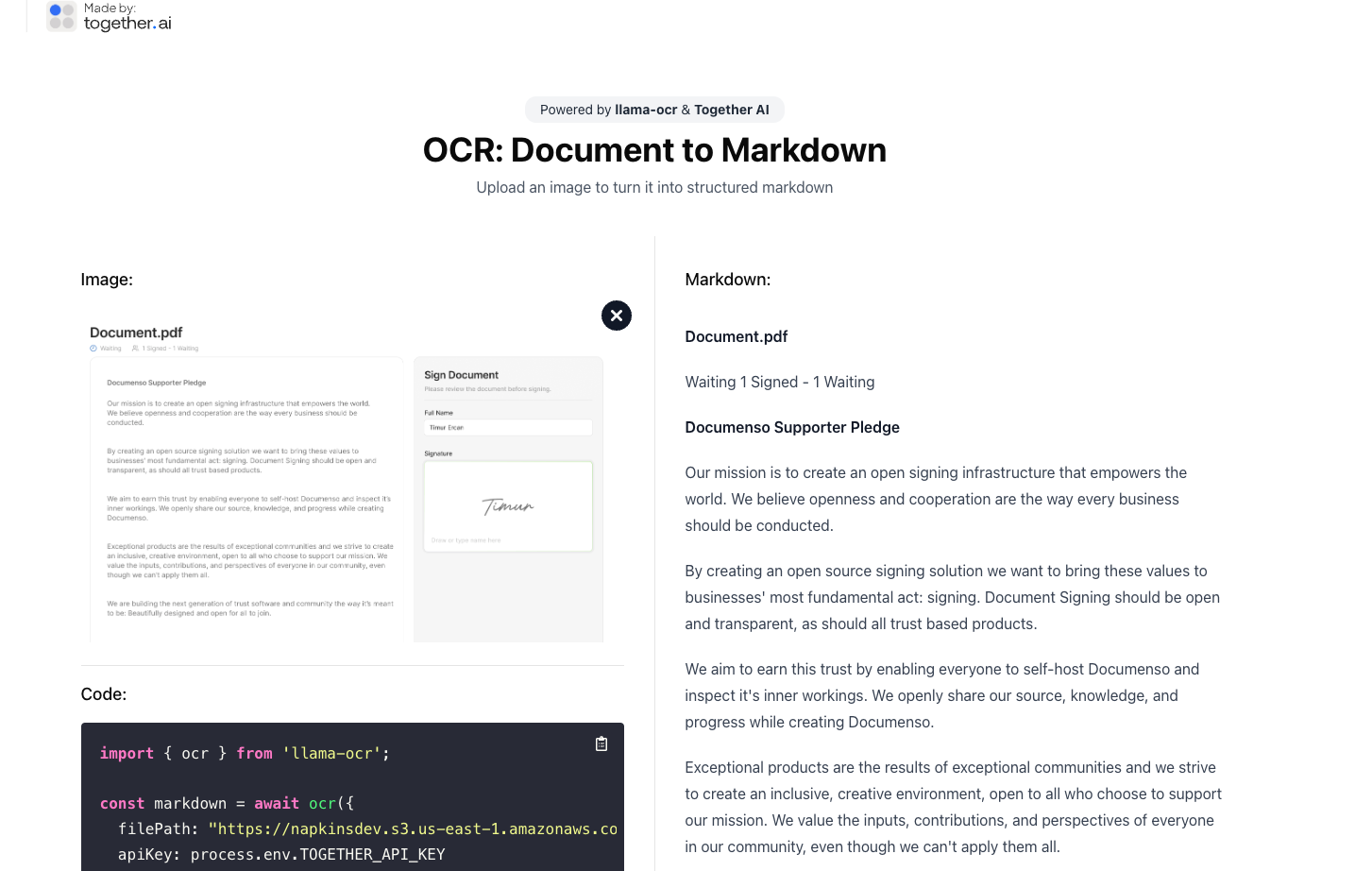

16- LLM RAG - Streamlit RAG Language Model App 🤖

LLM-RAG integrates Retrieval-Augmented Generation (RAG) with Large Language Models, enabling AI to provide precise, context-rich responses using external data sources.

A perfect choice for creating smarter assistants, it supports document indexing, retrieval, and seamless LLM integration. A must-have for advanced AI workflows!

17- Lumos

Lumos is an open-source tool designed to optimize your AI interactions. It simplifies managing, testing, and sharing prompts across different Large Language Models, helping developers and teams streamline workflows efficiently.

It is perfect for boosting productivity in AI projects!

Final Thought

Running LLMs on the web is not just a tech upgrade—it’s a step toward a smarter, more inclusive future. Whether you’re a small business, a developer, or just curious about AI, the online approach brings unmatched flexibility and convenience. Explore the potential today and join us in shaping tomorrow's tech landscape.

More AI, RAG, LLMs, Machine Learning Resources?

We've got you covered here!